-

Notifications

You must be signed in to change notification settings - Fork 588

HDDS-4562. Old bucket needs to be accessible after the cluster was upgraded to the Quota version. #1677

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

adoroszlai

left a comment

adoroszlai

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks @captainzmc for fixing this. The old bucket is now writeable, reproduced the problem and verified the fix using upgrade compose environment.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hi @captainzmc

Few questions:

- Can we make use of proto default value to -1 for quotaInBytes value in proto.

- So on old buckets, we cannot set quota, as we don't have any info of bytesUsed/namespace count, or if it can be set how this will be handled?

- And how upgrades are handled for quota feature overall, like for older volumes and buckets under it?

|

Not related to this PR, can you also provide info on how the clear space quota works and it's usage. Because I see when bucket clear space quota comes, we reset to -1, but what will happen to volume quota (Will it reclaim bucket quota? or any information on clear space quota usage and how it works also can you share some info. |

|

@captainzmc see #1691 for acceptance test with two lines that are currently disabled to verify this fix. If you could review that PR, then uncommenting them in this PR would improve test coverage. |

Thanks for @bharatviswa504’s advices.

|

Yes, I have submitted the use of the latest quota in Doc. Can be seen here: |

Thanks for @adoroszlai 's suggestion, I will deal with the acceptance test here. |

7833495 to

265bf1d

Compare

When volume quota is not set and bucket quota is set, it is just reset quota of the bucket to -1. when clear bucket quota operation is performed Is my understanding correct here? Example: Now clear quota on V1/B2 we set quota of V1/B2 to -1, and now a new bucket can be still created in this volume? New Bucket created with quota 50MB. (V1/B3) So, in this volume there are 3 buckets, only 2 are considered in to count, as clearQuota is run(on V1/B2), and we are not considering this bucket in quota calculations, so this bucket will not be counted under quota, but for volume we have crossed the quota of that volume. (As user has run clear quota on V1/B2) So, here clear quota means resetting the quota to -1? Or what is the real purpose of this clearQuota usage on clusters, in what scenarios this will be useful on the cluster?

But I don't see any guards in the cluster to not allow quota operations for older buckets/volumes. And also do you think, we need to document this in our docs? |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Why do we need to do this during set Operation?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This check simply ensures that when we use RpcClient set Quota, the value of quota must be legal (not less than -1). But the getQuotaValue conversion step inside is redundant, I‘ll delete this conversion.

Might be use int64, but protobuf document said it is inefficient for negative numbers, not sure it is good idea. Just thought of bringing this up here?

I think it will also help if we plan to support quota on older buckets. The default will be -2, all older buckets will have -2, new buckets created after this feature will have -1. So, if some one sets quota on older buckets in this way, we can figure it out, just an idea saying here. |

I'll change the type to sint64, which is both negative by default and more efficient than int64. |

Thanks to @bharatviswa504‘s advice, you found a very important point.

I’ll refine the docs to make it clear that older volumes/buckets are not recommended to use quota. |

a38bd49 to

576e3d3

Compare

576e3d3 to

bc0ec8b

Compare

|

When the acceptance test upgrade, namespace will also have the problem that old volumes cannot create buckets. This also fixed in this PR. R: @amaliujia @linyiqun |

|

Updated PR and fixed review issues. |

linyiqun

left a comment

linyiqun

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@captainzmc , current PR change looks good to me. But I catch some places we can improve in follow-up JIRA.

|

|

||

| d. Volume quota is not currently supported separately, and volume quota takes effect only if bucket quota is set. Because ozone only check the usedBytes of the bucket when we write the key. | ||

|

|

||

| e. If the cluster is upgraded from old version less than 1.1.0, use of quota on older volumes and buckets is not recommended. Since the old key is not counted to the bucket's usedBytes, the quota setting is inaccurate at this point. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We can make this check in OM side and throw exception once user try to set quota on older version volumes/buckets.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Currently, we have not agreed on whether bucket can be enabled for the old quota. At present, we only put forward the suggestion in the document very briefly. If the requirement is clear, we can issue Jira and solve it.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Make sense to me.

| So far, we know that Ozone allows users to create volumes, buckets, and keys. A Volume usually contains several buckets, and each Bucket also contains a certain number of keys. Obviously, it should allow the user to define quotas (for example, how many buckets can be created under a Volume or how much space can be used by a Bucket), which is a common requirement for storage systems. | ||

|

|

||

| ## Currently supported | ||

| 1. Storage Space level quota |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The namespace quota introduction can also be added. We could file a new JIRA for tacking this.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I can add namespace quota to this documentation: https://issues.apache.org/jira/browse/HDDS-4594. Before adding to documentation, I will first finish namespace support on bucket: https://issues.apache.org/jira/browse/HDDS-4277

So, now if the volume quota is not set, the bucket Clearspace quota is just disabling quota on the buckets. And also just setting quota at volume level will not be counted, until bucket level quotas are set. For my understanding so volume quota is set, and bucket quota is set, clearing volume quota is just set to -1, so that now quota is tracked at bucket level. And now when bucket quota is cleared, when volume quota is set, we disallow the operation.

Now the example mentioned scenarios will not happen. Thanks for opening this Jira and tracking this issue. |

If we want to disallow the quota support for older buckets, one idea to do at code level. |

bharatviswa504

left a comment

bharatviswa504

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Overall LGTM.

Few comments, and one question regarding handling old buckets/volumes.

|

|

||

| a. By default, the quota for volume and bucket is not enabled. | ||

|

|

||

| b. When volume quota is enabled, the total size of bucket quota cannot exceed volume. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Minor:

When volume quota is enabled, the total quota of the buckets, cannot exceed the volume quota.

|

|

||

| d. Volume quota is not currently supported separately, and volume quota takes effect only if bucket quota is set. Because ozone only check the usedBytes of the bucket when we write the key. | ||

|

|

||

| e. If the cluster is upgraded from old version less than 1.1.0, use of quota on older volumes and buckets is not recommended. Since the old key is not counted to the bucket's usedBytes, the quota setting is inaccurate at this point. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Can we also add documentation about the clear space quota and the behavior?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes. To prevent conflicts, I have updated the usage and behavior of clear space quota in this PR.

|

Can we use If a case is really set "0" quota to a bucket thus lock the bucket from creating, then users can choose to use a negative value. |

|

O, actually from the code seems that setting default value as -1 is solved in proto. If so please ignore my previous comment (which assumed that proto cannot set -1 as default value for quota) |

User using old Ozone version may still has the desire to use the new Quota feature. It's better not shut the door completely. |

@bharatviswa504 Yes. And the problem of clear quota will be fixed in HDDS-4588. |

Hi @ChenSammi, I think it's dangerous recalculating usedBytes in upgrade . If the amount of data is too large, it will greatly increase the time, and if the processing is not good, it will cause the upgrade to fail. |

@ChenSammi Above idea is to detect older buckets/volumes in the Ozone cluster, from discussion as it is said not recommended, said we can fail the operation But, if we want to support, when they setQuota, we can know this is an old bucket, we can say a warning message to the user, older keys are not considered. I am fine with whatever way we go, if we clearly document the behavior it will not be confusing to end-users. |

Even this will be a costly operation, during that operation we should acquire bucket read lock and do the calculation, so all writes will be stalled in the system. (As during this operation, we should not allow new writes to get accurate bytesused). |

b5c8b92 to

7dce9f7

Compare

|

Updated PR, set default to -2. No matter whether or not we support old volume/bucket setting quota, we need to distinguish which volumes and buckets are old. So Here I take @bharatviswa504's advice. |

7dce9f7 to

c2704f7

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Why we change above condition check? Above check is to confirmed that the quota is enabled, not a validation check. If we want to make sure quota value is a positive value, I prefer to additionally add omVolumeArgs.getQuotaInNamespace() > 0 check.

if (omVolumeArgs.getQuotaInNamespace() != OzoneConsts.QUOTA_RESET && omVolumeArgs.getQuotaInNamespace() > 0) {

}There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@linyiqun Here with omVolumeArgs.getQuotaInNamespace () > 0 is enough? Because when it > 0 is certainly is not equal to -1.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@captainzmc , here my point is that we should firstly check if the quota was enabled by using one variable flag(here I understand is OzoneConsts.QUOTA_RESET). Then we check the quota value if it's valid. Is there a switch config for the quota now? If the quota is not enabled, we don't need to do the quota check anymore.

For the current value OzoneConsts.QUOTA_RESET is -1, omVolumeArgs.getQuotaInNamespace () > 0 makes the sense. But once OzoneConsts.QUOTA_RESET to a positive value, this check won't work. So omVolumeArgs.getQuotaInNamespace() != OzoneConsts.QUOTA_RESET would still be a better check.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for @linyiqun's feedback.

Current there are two case -1 or -2 (old volume/bucket) not check quota, actually we have guarantee on the client, user cann’t set negative quota, Therefore, except for clear quota and old quota, there is no negative case.

In addition to using omVolumeArgs.getQuotaInNamespace ()! = OzoneConsts.QUOTA_RESET also need add omvolumeargs.getQuotainNamespace ()! = 2. The judgment condition will increase too much and the code will not be clear enough, we can use omVolumeArgs. getQuotaInNamespace () > 0, this will make the code much cleaner.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Okay, go ahead for this.

d7ef4e8 to

828f2e3

Compare

828f2e3 to

554c9a8

Compare

| optional uint64 usedBytes = 14; | ||

| optional uint64 quotaInBytes = 15; | ||

| optional uint64 quotaInNamespace = 16; | ||

| optional int64 quotaInBytes = 15 [default = -2]; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

#1677 (comment)

Any reason for moving back to int64?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Interesting, thanks for info.

bharatviswa504

left a comment

bharatviswa504

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

+1 LGTM.

| optional uint64 usedBytes = 14; | ||

| optional uint64 quotaInBytes = 15; | ||

| optional uint64 quotaInNamespace = 16; | ||

| optional int64 quotaInBytes = 15 [default = -2]; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Interesting, thanks for info.

|

If no more comments, I will commit this by tomorrow. |

|

Thank you @captainzmc for the contribution and everyone for the review. if any one has more comments, we can open a new Jira to fix them. |

…graded to the Quota version. (apache#1677) Cherry picked from master to fix acceptance test failure in upgrade test. Merging again from this point would have introduced 52 new conflicts.

(#1822) * HDDS-4587. Merge remote-tracking branch 'upstream/master' into HDDS-3698. * HDDS-4587. Addressing CI failure. * HDDS-4562. Old bucket needs to be accessible after the cluster was upgraded to the Quota version. (#1677) Cherry picked from master to fix acceptance test failure in upgrade test. Merging again from this point would have introduced 52 new conflicts. * HDDS-4770. Upgrade Ratis Thirdparty to 0.6.0 (#1868) Cherry picked from master because 0.6.0-SNAPSHOT is no longer in the repos Co-authored-by: micah zhao <[email protected]> Co-authored-by: Doroszlai, Attila <[email protected]>

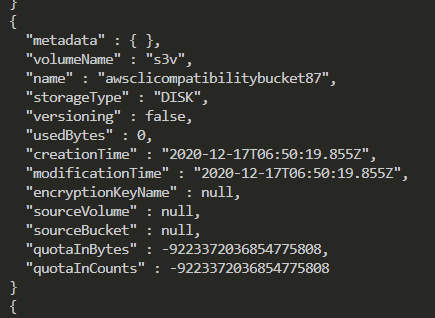

What changes were proposed in this pull request?

long quotaInBytes are new fields in the bucketArgs, this field will go to 0 by default in the old bucket when the old cluster is upgraded. At this point, the data writes are rejected.

This is similar to creating a bucket without specifying quotaInBytes, where quotaInBytes is set to -1 by default via getQuotaValue. We can use 0 as a special term.

What is the link to the Apache JIRA

https://issues.apache.org/jira/browse/HDDS-4562

How was this patch tested?

ut added