-

Notifications

You must be signed in to change notification settings - Fork 3k

Python: Resolve write/read schemas #5116

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

python/src/iceberg/avro/resolver.py

Outdated

| # For now we only support the binary compatible ones | ||

| IntegerType: {LongType}, | ||

| StringType: {BinaryType}, | ||

| BinaryType: {StringType}, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't think this is currently allowed by the spec, but I don't see a reason why it shouldn't be. Maybe we should add it to the spec? What do you think?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

For background, one of the restrictions that we place on type promotion is that promotion is only allowed if hashing the value produces the same result. That's why hashInt(v) is implemented as hashLong(castToLong(v)). Otherwise, when a table column is promoted from int to long, any metadata values for bucket partitions would be suddenly incorrect.

It should be possible to promote from binary to string (or the opposite) because the hash value is the same.

I think we can also relax this constraint a bit, so if there is no bucket transform on a column, you can perform a type promotion that would not be allowed otherwise. For example, int to string promotion could only be allowed if there was no partition spec with a bucket function on the int column.

There are also odd cases with timestamps. If a long value is a timestamp in microseconds, then it could be promoted to timestamp or timestamptz because the hash function would match (no need to modify the value). But if the long was a timestamp in milliseconds, we could only promote to timestamp or timestamptz if there was no bucket transform applied to the value in a partition spec.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

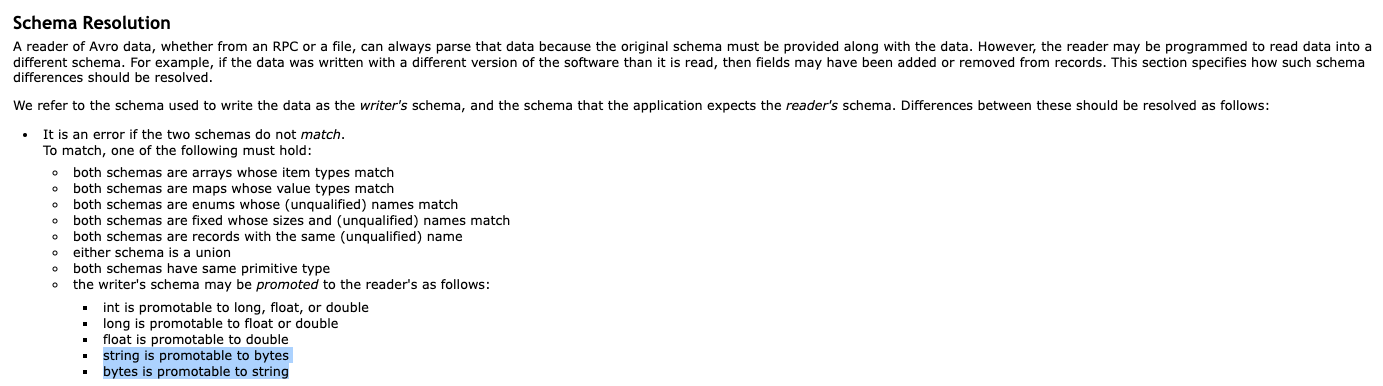

I got this from the Avro schema evolution:

And thanks for the elaborate background information. And I agree that we should add it to the spec indeed. For the hashing, for Python it seems to check out:

➜ python git:(fd-resolve-write-read-schemas) ✗ python3

Python 3.9.13 (main, May 24 2022, 21:13:51)

[Clang 13.1.6 (clang-1316.0.21.2)] on darwin

Type "help", "copyright", "credits" or "license" for more information.

>>> hash("vo")

292714697147949111

>>> hash("vo".encode("utf8"))

292714697147949111For Java, this does not seem to be the case:

➜ python git:(fd-resolve-write-read-schemas) ✗ scala

Welcome to Scala 2.13.8 (OpenJDK 64-Bit Server VM, Java 18.0.1.1).

Type in expressions for evaluation. Or try :help.

scala> new java.lang.String("vo").hashCode

val res1: Int = 3769

scala> new java.lang.String("vo").getBytes()

val res2: Array[Byte] = Array(118, 111)

scala> new java.lang.String("vo").getBytes().hashCode()

val res3: Int = 1479286669

We should also make sure that the hashes are the same across languages. I think this is okay for now because we implement this using the MurmurHash library.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hashing in this case is documented in the Iceberg spec: https://iceberg.apache.org/spec/#appendix-b-32-bit-hash-requirements

That's the one I'm talking about, and we have verified that it is the same between Java and Python.

I also want to note that the type promotions that we care about are the promotions that are allowed in Iceberg, not Avro. While we're using Avro files, Avro is more permissive in these cases than Iceberg allows.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I've created a PR here to add string/binary conversion to the Spec: #5151

I also want to note that the type promotions that we care about are the promotions that are allowed in Iceberg, not Avro. While we're using Avro files, Avro is more permissive in these cases than Iceberg allows.

Good point, I was focussing on the Avro ones here (since it is in the Avro package), but we can also make this pluggable by providing different promotion dictionaries for Avro and Iceberg.

python/src/iceberg/avro/resolver.py

Outdated

| # These are all allowed according to the Avro spec | ||

| # IntegerType: {LongType, FloatType, DoubleType}, | ||

| # LongType: {FloatType, DoubleType}, | ||

| # FloatType: {DoubleType}, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is allowed by the Iceberg spec, as is promotion from DecimalType(P, S) to DecimalType(P2, S) where P2 > P.

Promoting float to double should be possible fairly easily. All you have to do is check whether it's allowed and then use the source type's reader. Since Python uses a double to represent both, it will automatically produce the correct result.

Same thing for DecimalType. As long as you're reading the correct fixed size you should be fine.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I left the float to double one out for now because of the binary incompatibility as mentioned a few lines above. It adds additional complexity because we still need to read the float (4 bytes) and convert it into a double. If we would just plug in the double reader, then we would consume 8 bytes which will lead to problems.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, that's correct. We would need to still use the reader that corresponds to the write schema. It should be possible to do that in the PrimitiveType function.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ah, you already mentioned that =) I've updated the code by converting the dict into a promote singledispatch which gives us a bit more flexibility. For the float/double case, it will just return the file type reader. For the decimal, it will check the precision, and for converting the int to a float, it will do the conversion. It comes with tests. Let me know what you think!

| # KIND, either express or implied. See the License for the | ||

| # specific language governing permissions and limitations | ||

| # under the License. | ||

| import pytest |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'd recommend taking a look at the test cases in Java, which are pretty thorough and can find a lot of weird cases:

| public abstract class TestReadProjection { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I've been looking into this, and am happy to replicate the tests on the Python side. However, it depends on the writeAndRead method that requires a write path. Maybe an idea to postpone this until we have the write path (which I see already glooming in the not-so-distant future).

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We may be able to use fastavro for it, but we'd need to make sure there are field IDs to do projection right. (We could add name mapping for that.) So yeah, I'm happy adding this when we have a write path. I think the implementation is correct.

We should probably add a write path soon, like you said.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I was thinking of using FastAvro, but then we need to do everything in Avro schemas, which is a bit of a hassle. Otherwise we can just use the typed iceberg schemas 🚀

…solve-write-read-schemas

…solve-write-read-schemas

900a529 to

7801199

Compare

7801199 to

6602daa

Compare

|

|

||

| class ValidationError(Exception): | ||

| ... | ||

| """Raises when there is an issue with the schema""" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is used in other places as well, but the comment is fine for now.

|

Merged! Nice work, @Fokko! |

This PR allows you to supply a read schema when reading an Avro file. It will construct a read tree that only reads the actual fields that it needs, and skips over the ones that aren't part of the read schema.

Also combined

read_longandread_intinto one. They are still two separate readers, but just one method on the decoder. This is because they are binary compatible, and there is no long in Python.