
Fix ClassCastException when flink read map and array data from parquet format data file. #3081



@openinx @chenjunjiedada, could you help review this PR? Thanks.

Makes sense to me!

LGTM!

Diff under review: the explicit imports `import org.apache.flink.table.data.StringData;` and `import org.apache.flink.table.data.TimestampData;` are replaced by the wildcard import `import org.apache.flink.table.data.*;`.


In Iceberg, we usually don't use `*` imports; it's clearer to import the specific classes one by one.

openinx left a comment


Thanks for reporting this bug, @zhong-yj! The root cause is that Apache Flink couldn't serialize and deserialize custom `MapData` and `ArrayData` implementations, so technically it's an Apache Flink bug, not an Iceberg bug. It's reasonable for us to implement our own `ReusableArrayData` that reuses array data to save memory, and we shouldn't copy every element out of the reusable array data just to work around the serialization issue.
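To make the root cause concrete, here is a hypothetical, heavily simplified Java model. None of the types below are the real Flink or Iceberg classes, and the cast-based copy only mimics the general shape of the failure, not Flink's actual serializer code. A copy path that recognizes only known implementations throws a `ClassCastException` on a reader-owned reusable array, while a copy path that goes through the interface works for any implementation. That is the reasoning behind fixing the serializer instead of copying data out of `ReusableArrayData`.

```java
import java.util.Arrays;

/**
 * Hypothetical, simplified model of the serialization failure discussed above.
 * These are NOT Flink or Iceberg classes; they only mimic the shape of the problem.
 */
public class CastFailureSketch {

    /** Stand-in for Flink's ArrayData interface, reduced to two methods. */
    interface ArrayLike {
        int size();
        Object get(int pos);
    }

    /** Stand-in for a generic implementation the serializer knows about. */
    static final class GenericArray implements ArrayLike {
        final Object[] values;
        GenericArray(Object[] values) { this.values = values; }
        public int size() { return values.length; }
        public Object get(int pos) { return values[pos]; }
    }

    /** Stand-in for a binary implementation that can copy itself. */
    static final class BinaryArray implements ArrayLike {
        final Object[] values;
        BinaryArray(Object[] values) { this.values = values; }
        public int size() { return values.length; }
        public Object get(int pos) { return values[pos]; }
        BinaryArray copy() { return new BinaryArray(values.clone()); }
    }

    /** Stand-in for a reader-owned array whose buffer is reused (and may be larger than size). */
    static final class ReusableArray implements ArrayLike {
        Object[] buffer = new Object[4];
        int size;
        public int size() { return size; }
        public Object get(int pos) { return buffer[pos]; }
    }

    /** Copy path that only recognizes known implementations: anything else hits the cast. */
    static ArrayLike copyKnownTypesOnly(ArrayLike from) {
        if (from instanceof GenericArray) {
            return new GenericArray(((GenericArray) from).values.clone());
        }
        return ((BinaryArray) from).copy(); // throws ClassCastException for ReusableArray
    }

    /** Copy path that relies only on the interface, so any implementation works. */
    static ArrayLike copyViaInterface(ArrayLike from) {
        Object[] out = new Object[from.size()];
        for (int i = 0; i < out.length; i++) {
            out[i] = from.get(i); // copies only the live elements, not the whole buffer
        }
        return new GenericArray(out);
    }

    public static void main(String[] args) {
        ReusableArray reused = new ReusableArray();
        reused.buffer[0] = "a";
        reused.buffer[1] = "b";
        reused.size = 2;

        try {
            copyKnownTypesOnly(reused);
        } catch (ClassCastException e) {
            System.out.println("known-types-only copy failed: ClassCastException");
        }

        ArrayLike copied = copyViaInterface(reused);
        System.out.println("interface-based copy: " + Arrays.toString(((GenericArray) copied).values));
    }
}
```

Running `main` prints `known-types-only copy failed: ClassCastException` followed by `interface-based copy: [a, b]`.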

Actually, Apache Flink 1.13 has a fix for this issue: https://issues.apache.org/jira/browse/FLINK-21247. I think you can try upgrading Flink to 1.13 and checking whether this bug still reproduces.

Although Flink 1.13 has a fix, this still exposes an important issue in our Apache Iceberg Flink module: we don't have unit tests for nested data in SQL that would catch this. I think it's worth opening a PR to address this testing gap (which may require merging #2629 first).


This bug has been addressed now that we've successfully upgraded to Flink 1.13.2 in #3116.

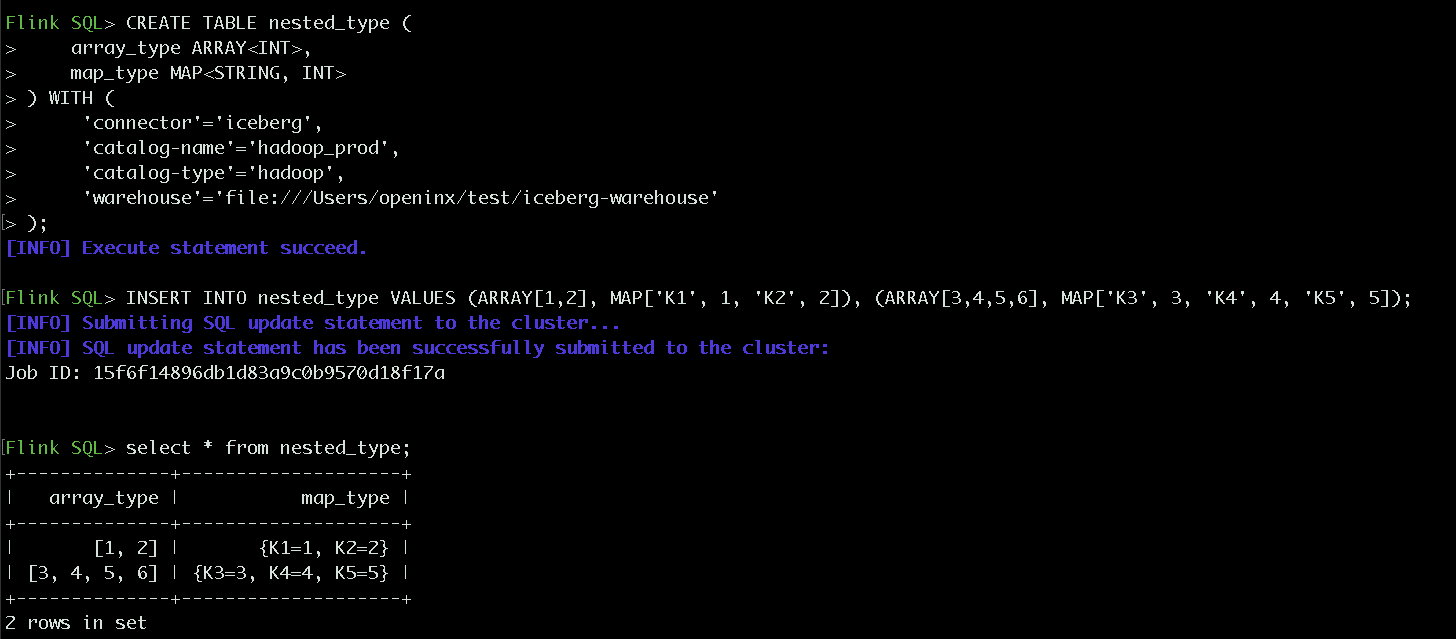

This PR fixes a `ClassCastException` that occurs when Flink reads map and array data from Parquet data files. It adds `buildGenericMapData()` to `FlinkParquetReaders$ReusableMapData` and `buildGenericArrayData()` to `FlinkParquetReaders$ReusableArrayData`, so the corresponding map and array readers return `GenericMapData` and `GenericArrayData` instances, which Flink's `TypeSerializer` can recognize.
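A minimal sketch of the approach this PR describes, under stated assumptions: `ReusableArraySketch` and `ReusableMapSketch` are hypothetical stand-ins for `FlinkParquetReaders$ReusableArrayData` and `FlinkParquetReaders$ReusableMapData`, and plain `Object[]` and `java.util.Map` results stand in for Flink's `GenericArrayData` and `GenericMapData`. The reader reuses one buffer across rows, and the `build...` methods copy only the live elements into an independent object.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.Map;

/**
 * Hypothetical sketch: reusable per-row buffers that hand out independent generic copies.
 * Object[] and java.util.Map stand in for Flink's GenericArrayData and GenericMapData.
 */
public class BuildGenericSketch {

    /** Reusable array buffer: grows when full and is reset, not reallocated, for each row. */
    static final class ReusableArraySketch {
        private Object[] values = new Object[2];
        private int size;

        void reset() { size = 0; }

        void add(Object value) {
            if (size == values.length) {
                values = Arrays.copyOf(values, values.length * 2);
            }
            values[size++] = value;
        }

        /** Copies only the live elements, so the result no longer aliases the reused buffer. */
        Object[] buildGenericArrayData() {
            return Arrays.copyOf(values, size);
        }
    }

    /** Reusable map buffer: parallel key/value buffers reused across rows. */
    static final class ReusableMapSketch {
        private final ReusableArraySketch keys = new ReusableArraySketch();
        private final ReusableArraySketch values = new ReusableArraySketch();

        void reset() { keys.reset(); values.reset(); }

        void put(Object key, Object value) { keys.add(key); values.add(value); }

        /** Builds an independent map; LinkedHashMap only keeps the printed order predictable. */
        Map<Object, Object> buildGenericMapData() {
            Object[] k = keys.buildGenericArrayData();
            Object[] v = values.buildGenericArrayData();
            Map<Object, Object> out = new LinkedHashMap<>();
            for (int i = 0; i < k.length; i++) {
                out.put(k[i], v[i]);
            }
            return out;
        }
    }

    public static void main(String[] args) {
        ReusableArraySketch array = new ReusableArraySketch();
        array.add(1);
        array.add(2);
        array.add(3);
        Object[] firstRow = array.buildGenericArrayData();

        array.reset(); // the next row reuses the same buffer
        array.add(9);
        Object[] secondRow = array.buildGenericArrayData();

        System.out.println(Arrays.toString(firstRow));  // [1, 2, 3]
        System.out.println(Arrays.toString(secondRow)); // [9]

        ReusableMapSketch map = new ReusableMapSketch();
        map.put("k1", "v1");
        map.put("k2", "v2");
        System.out.println(map.buildGenericMapData()); // {k1=v1, k2=v2}
    }
}
```

The per-row copy in the `build...` methods is the allocation cost raised in the review; the thread ultimately resolved the issue by upgrading to Flink 1.13.2 (#3116) instead.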

Related to #3080.