[HUDI-4561] Improve incremental query using the fileSlice adjacent to read.end-commit #6324


@danny0405 can you help review it, thx.


If the end commit is active and is used as the filtering instant for scan, shouldn't |

yes, it works, but it can't handle the condition when end commit is archived, if we use if the end commit is active, if |

In general, let's not make things complex here. The only right way to do multi-versioning is the timeline instant: one instant has its counterpart fs view, and we should not dig into file slices of different versions for one snapshot query, even though there is a performance gain. If the start and end commits are both archived, very probably they are cleaned too, we have 2 choices here

If the start commit is archived but the end commit is active, there is also the possibility that active instants with a smaller timestamp than the end commit have been cleaned. We should check that first before using the end commit as the fs view version filtering condition, which makes things more complex too. So I would -1 this if it is only an improvement and not a bug fix.

…lashJd/hudi into incremental_query_since_clean_instance

@danny0405 looking forward to your reply.

yihua

left a comment


@danny0405 it seems that Hudi 1.0 already solves the problem with completion time and new file slicing logic. Let me know if this is something specific to Hudi on Flink.


yeah, we have switched to completion time semantics since 1.0 for Flink in here: hudi/hudi-common/src/main/java/org/apache/hudi/common/table/timeline/CompletionTimeQueryView.java Line 259 in 276133b


// 1. there are files in metadata be deleted;

// 2. read from earliest

// 3. the start commit is archived

// 4. the end commit is archived

Under any of these conditions, the query turns into a fullTableScan.

In that case, the endInstant parameter in getInputSplits() is the latest instant. This causes the scan to pick the latest fileSlice (which may grow larger as time goes by), open it, and then filter the records using instantRange.
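
To make the cost of this path concrete, here is a minimal sketch of filtering by an instant range after the records have already been read. `InstantRangeFilter` is a hypothetical helper, not Hudi's `InstantRange` class, and the open-start/closed-end boundary is an assumption for illustration. The point is that every record in the slice is visited even when few survive the filter.

```java
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical helper for illustration only; not part of Hudi. */
final class InstantRangeFilter {
    private InstantRangeFilter() {}

    /**
     * Keeps commit times in (startExclusive, endInclusive].
     * A null start means "read from earliest". Instant timestamps are
     * assumed to be strings that sort lexicographically by time.
     */
    static List<String> filter(List<String> commitTimes,
                               String startExclusive,
                               String endInclusive) {
        return commitTimes.stream()
            .filter(t -> startExclusive == null || t.compareTo(startExclusive) > 0)
            .filter(t -> t.compareTo(endInclusive) <= 0)
            .collect(Collectors.toList());
    }
}
```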

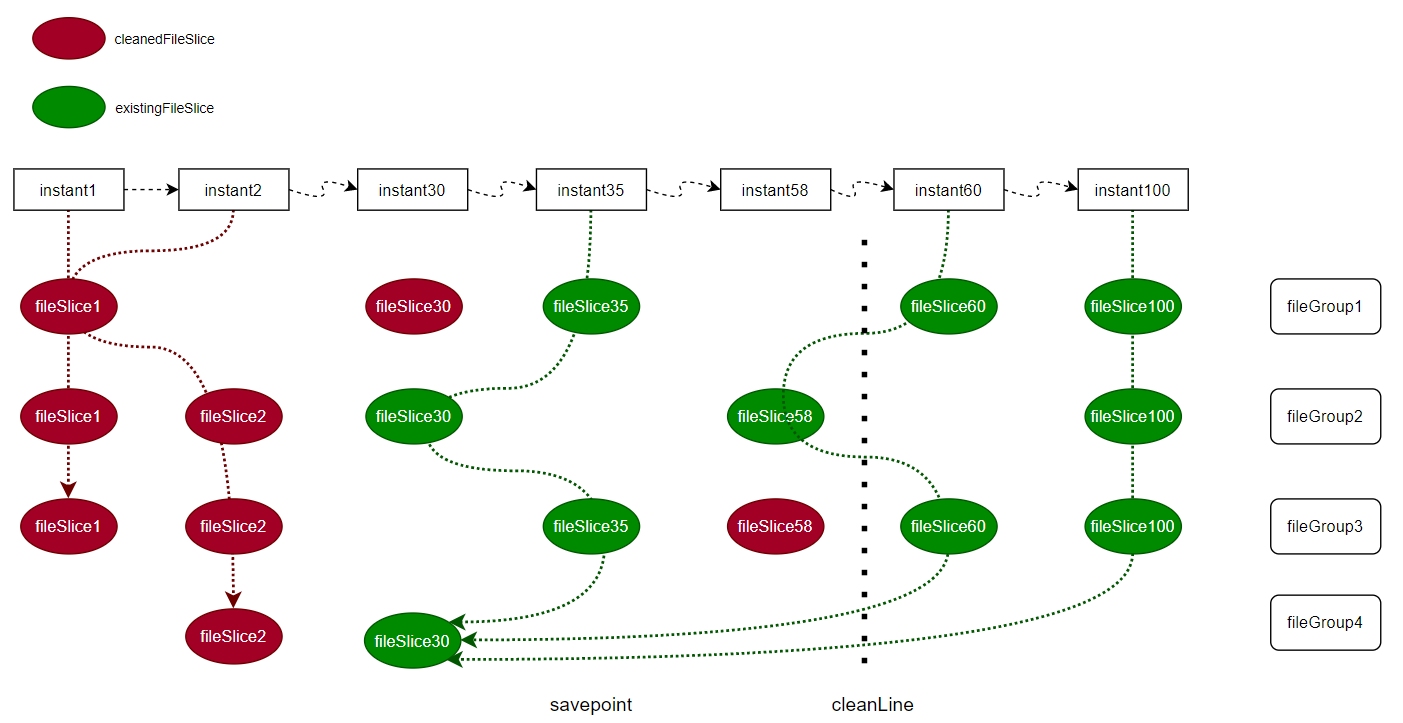

Consider a query scenario where read.start-commit is archived and read.end-commit is in the active timeline. This is a fullTableScan, but we can set the endInstant parameter to read.end-commit instead of the latest instant, so that less data is read. Moreover, if there is an upsert between read.end-commit and the latest instant and we use the latest instant as endInstant, we lose the data inserted between read.start-commit and read.end-commit (the data was upserted, so the original records are missing from the latest fileSlice).
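
A minimal sketch of that selection rule follows. `EndInstantChooser` and its parameters are hypothetical names for illustration, not Hudi API, and it assumes the active instants are given as timestamp strings sorted in ascending order.

```java
import java.util.List;
import java.util.Optional;

/** Hypothetical helper for illustration only; not part of Hudi. */
final class EndInstantChooser {
    private EndInstantChooser() {}

    /**
     * Picks the instant used to look up file slices for a full table scan.
     * If read.end-commit is still on the active timeline, use it; otherwise
     * fall back to the latest active instant (the behavior described above).
     *
     * @param activeInstants active timeline, assumed sorted ascending and non-empty
     * @param readEndCommit  the configured read.end-commit, if any
     */
    static String choose(List<String> activeInstants, Optional<String> readEndCommit) {
        if (activeInstants.isEmpty()) {
            throw new IllegalArgumentException("active timeline must not be empty");
        }
        String latest = activeInstants.get(activeInstants.size() - 1);
        return readEndCommit.filter(activeInstants::contains).orElse(latest);
    }
}
```

Note that this sketch does not check whether instants earlier than the end commit have been cleaned, which is the concern raised in the review comments.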

Consider another query scenario where read.start-commit is archived and read.end-commit is also archived. This is a fullTableScan too. If read.end-commit is from long ago and has been cleaned, but there is a savepoint after it, we can use this savepoint to run the incremental query against the table, without caring about the data inserted or upserted after the savepoint.

The core idea is to search the fileSlice adjacent to read.end-commit.
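
The savepoint lookup could be sketched as below. `SavepointFallback` is a hypothetical name, not Hudi API. The sketch assumes savepoint instants are timestamp strings that sort lexicographically, and that a savepoint taken exactly at read.end-commit is also acceptable.

```java
import java.util.Collection;
import java.util.Optional;
import java.util.TreeSet;

/** Hypothetical helper for illustration only; not part of Hudi. */
final class SavepointFallback {
    private SavepointFallback() {}

    /**
     * Returns the earliest savepoint at or after read.end-commit, i.e. the
     * retained version closest to the requested end, or empty if none exists.
     */
    static Optional<String> firstSavepointAtOrAfter(Collection<String> savepoints,
                                                    String readEndCommit) {
        // TreeSet.ceiling returns the least element >= the argument, or null.
        return Optional.ofNullable(new TreeSet<>(savepoints).ceiling(readEndCommit));
    }
}
```

When the result is empty, the caller would have to fall back to the latest instant as before.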

ps. This PR is based on #6096