-

Notifications

You must be signed in to change notification settings - Fork 2.5k

[HUDI-2648] Retry FileSystem action instead of failed directly. #3887

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

@hudi-bot azure run |

|

hi @nsivabalan could you please help to review this PR at your convenience? Thanks a lot if you could give me a hand! |

| .setBasePath(config.getBasePath()) | ||

| .setLoadActiveTimelineOnLoad(true) | ||

| .setConsistencyGuardConfig(config.getConsistencyGuardConfig()) | ||

| .setFileSystemGuardConfig(config.getFileSystemGuardConfig()) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

hi @zhangyue19921010 ,i think also require add about setFileSystemGuardConfig in HoodieFlinkTable calss

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sure, thing. Will get it done.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

cc @danny0405 :) Hope you are interested in this patch.

|

Hi @vinothchandar and @bvaradar. Friendly ping. I notice that you are very familiar with hudi filesystem wrapper. So could you please pay a little attention for this patch if it's possible?According to our Hudi on S3 experience, it's a common issue to meet the S3 threshold, and may need this retry wrapper to solve it. |

|

looks like a good thing to have. have marked it as release blocker. will try to review it sooner. |

nsivabalan

left a comment

nsivabalan

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Left some minor comments.

| public class FileSystemGuardConfig extends HoodieConfig { | ||

|

|

||

| public static final ConfigProperty<String> FILESYSTEM_RETRY_ENABLE = ConfigProperty | ||

| .key("hoodie.filesystem.action.retry.enabled") |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

"enable" would suffice. we don't need a "d" in the end, to be in line with other configs

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sure, changed.

| .withDocumentation("Maximum amount of time (in ms), to wait for next retry."); | ||

|

|

||

| public static final ConfigProperty<Integer> MAX_RETRY_NUMBERS = ConfigProperty | ||

| .key("hoodie.filesystem.action.retry.max_numbers") |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

can you help me understand, why do we need both max retry number and max internal ms ? I thought either one is good enough.

So, either 100*4 = 4 retries w/ 100 ms delay.

or 2000/100 = 20 retries w/ 100 ms delay.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We use (long) Math.pow(2, retryCount) * initialIntervalTime + random.nextInt(100); to calculate sleep time before each retry. And we may need MAX_RETRY_INTERVAL_MS to control the maximum duration of a single sleep in case sleep too longMath.min(getWaitTimeExp(retries), maxIntervalTime).

Also use MAX_RETRY_NUMBERS to control max retry numbers to limit total retry time.

| int retries = 0; | ||

| boolean success = false; | ||

| RuntimeException exception = null; | ||

| T t = null; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

can we please name the variables nicely.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sure thing, changed.

| } | ||

| retries++; | ||

| } | ||

| } while (retries <= num); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

we can probably remove L88 and do retries++ here

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

emmm, we only do ++ when caught exception, so maybe can't move it out of catch() {} block.

vinothchandar

left a comment

vinothchandar

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Would n't the aws s3 client already do this? the retries? Can you please explain do we need this at the Hudi layer>?

|

Hi @vinothchandar Thanks for your attention. The necessary to build a retry layer on hudi is to let hudi can deal with different kinds of filesystem not only S3. Anyway, really appreciate for @nsivabalan and @vinothchandar 's attention. And sorry to bother you guys. |

|

cos(Cloud Object Storage) need this patch help to retry https://cloud.tencent.com/product/cos |

Thanks for @mincwang 's info, It looks like https://intl.cloud.tencent.com/?lang=en&pg= (or some other dfs ) needs hoodie to take care of retry logic. So would we please going on this patch and get it done. What's your opinion? @nsivabalan and @vinothchandar Looking forward to your reply :> |

|

sure, makes sense if there are other cloud stores that needs this retry. Can you please address the feedback given already. |

| @ConfigClassProperty(name = "FileSystem Guard Configurations", | ||

| groupName = ConfigGroups.Names.WRITE_CLIENT, | ||

| description = "The filesystem guard related config options, to help deal with runtime exception like s3 list/get/put/delete performance issues.") | ||

| public class FileSystemGuardConfig extends HoodieConfig { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

do you think naming this "FileSystemRetryConfig" would be more appropriate?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sure, "FileSystemRetryConfig" is more appropriate. Changed.

Sure, I will address the comments ASAP. Thanks a lot for your attention. |

|

@zhangyue19921010 Thanks for patiently working through this, with us. I am wondering if we can guard this behavior for each storage scheme. |

vinothchandar

left a comment

vinothchandar

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Left some high level restructuring. Let me know what you think! We can take it from there.

| return HoodieTableMetaClient.builder().setConf(hadoopConf).setBasePath(config.getBasePath()) | ||

| .setLoadActiveTimelineOnLoad(loadActiveTimelineOnLoad).setConsistencyGuardConfig(config.getConsistencyGuardConfig()) | ||

| .setLayoutVersion(Option.of(new TimelineLayoutVersion(config.getTimelineLayoutVersion()))).build(); | ||

| return HoodieTableMetaClient.builder() |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

could we please avoid non-essential style fixes in the PR. Makes it harder to review.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Changed :)

| HoodieTableMetaClient.builder().setConf(context.getHadoopConf().get()).setBasePath(config.getBasePath()) | ||

| .setLoadActiveTimelineOnLoad(true).setConsistencyGuardConfig(config.getConsistencyGuardConfig()) | ||

| .setLayoutVersion(Option.of(new TimelineLayoutVersion(config.getTimelineLayoutVersion()))).build(); | ||

| HoodieTableMetaClient.builder() |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

same.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

changed :)

| public class FileSystemRetryConfig extends HoodieConfig { | ||

|

|

||

| public static final ConfigProperty<String> FILESYSTEM_RETRY_ENABLE = ConfigProperty | ||

| .key("hoodie.filesystem.action.retry.enable") |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

can we drop action from the property name, given action has a different meaning inside Hudi? May be filesystem.operation?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Okay, Changed.

| public static HoodieTableMetaClient reload(HoodieTableMetaClient oldMetaClient) { | ||

| return HoodieTableMetaClient.builder().setConf(oldMetaClient.hadoopConf.get()).setBasePath(oldMetaClient.basePath).setLoadActiveTimelineOnLoad(oldMetaClient.loadActiveTimelineOnLoad) | ||

| .setConsistencyGuardConfig(oldMetaClient.consistencyGuardConfig).setLayoutVersion(Option.of(oldMetaClient.timelineLayoutVersion)).setPayloadClassName(null).build(); | ||

| return HoodieTableMetaClient.builder() |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

can you please comment on these reformatted blocks to highlight what has actually changed

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Changed.

| if (fs == null) { | ||

| FileSystem fileSystem = FSUtils.getFs(metaPath, hadoopConf.newCopy()); | ||

| FileSystem fileSystem; | ||

| if (fileSystemRetryConfig.isFileSystemActionRetryEnable()) { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

could we make this a property of the StorageScheme?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Emmm..., it's easy to make this as a property of the StorageScheme something like FILE("file", append: false, retry: true)

What I am more worried about is that this may mean that the retry function is turned on by default. And it may be hard for users to disable this retry if they don't need it.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

yeah, I am not sure if we can make it a property of StorageScheme. we should let users enable on a need basis.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Guess, what vinoth refers to is, we should add a property named supportsRetry or something to each StorageScheme. So, only for those storage schemes where retries are supported, we can check the config (fileSystemRetryConfig.isFileSystemActionRetryEnable()) and then invoke our retry Helper.

@vinothchandar : can you please clarify on your above suggestion.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

In that case, I think we can just have the config alone. and let the user decide.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ack and changed as your suggestion. Thanks a lot.

|

|

||

| public class RetryHelper<T> { | ||

| private static final Logger LOG = LogManager.getLogger(RetryHelper.class); | ||

| private HoodieWrapperFileSystem.CheckedFunction<T> func; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Could we keep this generic? if we cannot avoid referencing any wrapper file system stuff here, then we need to move this back with the fs package?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sure, Changed.

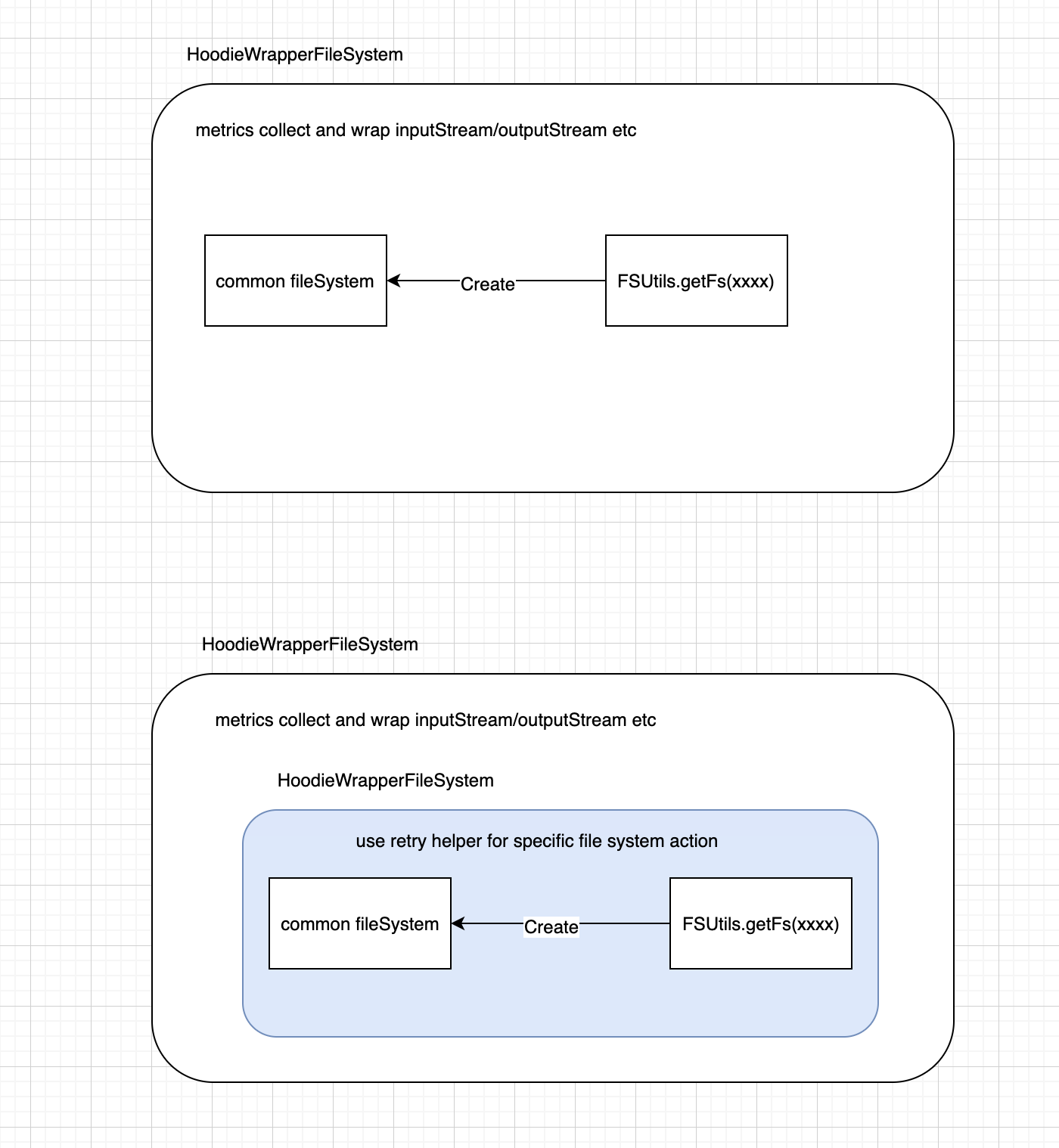

Hi @vinothchandar Thanks a lot for your attention. Let me explain the framework of this retry wrapper. As this picture shows, we design a new wrapper named hoodieRetryWrapper to wrap common file system which will retry specific common filesystem operation. In summary, hoodieRetryWrapper can wrap and guard all kinds of storage scheme as long as let hoodieRetryWrapper hold this storage scheme client. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM. Lets wait to hear from vinoth on his suggestion.

| if (fs == null) { | ||

| FileSystem fileSystem = FSUtils.getFs(metaPath, hadoopConf.newCopy()); | ||

| FileSystem fileSystem; | ||

| if (fileSystemRetryConfig.isFileSystemActionRetryEnable()) { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Guess, what vinoth refers to is, we should add a property named supportsRetry or something to each StorageScheme. So, only for those storage schemes where retries are supported, we can check the config (fileSystemRetryConfig.isFileSystemActionRetryEnable()) and then invoke our retry Helper.

@vinothchandar : can you please clarify on your above suggestion.

|

@zhangyue19921010 : lets not wait for Vinoth. he has been busy recently. Lets go ahead for now. |

Sure thing. Will do it ASAP :) |

|

thanks! |

One thing we should be very careful with is in what exception/error we should do a retry here ? If it is a normal user triggered exception, should we still retry here ? |

|

Hi @nsivabalan and @danny0405 Thanks a lot for you review. Also and a new config named

updated: only check PTAL :) Thanks a lot. |

nsivabalan

left a comment

nsivabalan

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM. few minor comments. I will go ahead and land the patch. Please take it as a follow up patch.

thanks for the contribution!

| .withDocumentation("Maximum amount of time (in ms), to wait for next retry."); | ||

|

|

||

| public static final ConfigProperty<Integer> MAX_RETRY_NUMBERS = ConfigProperty | ||

| .key("hoodie.filesystem.operation.retry.max_numbers") |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

may be we can name this as "hoodie.filesystem.operation.retry.max.count"

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

max_times seems better ?

| import java.util.Random; | ||

| import java.util.stream.Collectors; | ||

|

|

||

| public class RetryHelper<T> { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

java docs

| private static final Logger LOG = LogManager.getLogger(RetryHelper.class); | ||

| private CheckedFunction<T> func; | ||

| private int num; | ||

| private long maxIntervalTime; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

can some of these be final ?

|

|

||

| // Test the scenario that fs keeps retrying until it fails. | ||

| @Test | ||

| public void testProcessFilesWithExceptions() throws Exception { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Can we add one more test to cover this scenario

- to assert that call succeeds after 2(basically before hitting max retries) retries.

Thanks a lot for your review and merge. Sure I will do it ASAP. |

…he#3887) Co-authored-by: yuezhang <yuezhang@freewheel.tv>

https://issues.apache.org/jira/browse/HUDI-2648

What is the purpose of the pull request

Hoodie will do lots of list/get/put/delete etc actions on filesystem.

Sometimes will meet the fileSystem performance issue or short service suspension, for example

Using hoodie on S3, and will throw lots of exception like this

It's because of meet the throttle of S3 list/get/put/delete.

https://aws.amazon.com/premiumsupport/knowledge-center/s3-request-limit-avoid-throttling/

This exception will cause app crashed, which can be solved by retry wrapper.

Brief change log

(for example:)

Verify this pull request

(Please pick either of the following options)

This pull request is a trivial rework / code cleanup without any test coverage.

(or)

This pull request is already covered by existing tests, such as (please describe tests).

(or)

This change added tests and can be verified as follows:

(example:)

Committer checklist

Has a corresponding JIRA in PR title & commit

Commit message is descriptive of the change

CI is green

Necessary doc changes done or have another open PR

For large changes, please consider breaking it into sub-tasks under an umbrella JIRA.