
[HUDI-2559] Millisecond granularity for instant timestamps #3824


Still working to test this. We are going to have issues parsing previous commits that do not have milliseconds on them, so I'm coming up with a strategy for that.

hudi-common/src/main/java/org/apache/hudi/common/table/timeline/HoodieActiveTimeline.java


@vinothchandar Thanks for the heads up. My last commit abstracts the serde operations into methods on the timeline so that we can properly handle the varying instant formats. I will poke around the compaction code to see if I can spot the special case.

Here are the places that need fixes

@nsivabalan @vinothchandar That should be fine, as we now use the methods on the active timeline to parse the instant, which will handle both second and ms granularity here.
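For readers following along, here is a minimal sketch of what parsing both granularities could look like. It is not the code from this PR: the class name `InstantTimeParser`, its method names, and the `"000"` padding value are all assumptions made for illustration. The formatter is assembled with `DateTimeFormatterBuilder` because a plain `yyyyMMddHHmmssSSS` pattern is known not to parse on Java 8.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeFormatterBuilder;
import java.time.temporal.ChronoField;

// Hypothetical helper, not the actual Hudi implementation.
class InstantTimeParser {
    static final int SECS_LENGTH = 14;   // yyyyMMddHHmmss
    static final int MILLIS_LENGTH = 17; // yyyyMMddHHmmssSSS

    // Assumption: the millis appended to second-granularity instants.
    // The value is illustrative only.
    static final String DEFAULT_MILLIS = "000";

    static final DateTimeFormatter MILLIS_FORMAT = new DateTimeFormatterBuilder()
        .appendPattern("yyyyMMddHHmmss")
        .appendValue(ChronoField.MILLI_OF_SECOND, 3)
        .toFormatter();

    // Accepts either granularity and always returns a full timestamp.
    static LocalDateTime parse(String instant) {
        String normalized;
        if (instant.length() == SECS_LENGTH) {
            normalized = instant + DEFAULT_MILLIS;
        } else if (instant.length() == MILLIS_LENGTH) {
            normalized = instant;
        } else {
            throw new IllegalArgumentException("Unexpected instant length: " + instant);
        }
        return LocalDateTime.parse(normalized, MILLIS_FORMAT);
    }

    // Always writes the millisecond-granularity form.
    static String format(LocalDateTime time) {
        return MILLIS_FORMAT.format(time);
    }

    public static void main(String[] args) {
        System.out.println(parse("20211018162030"));    // old, second granularity
        System.out.println(parse("20211018162030123")); // new, millisecond granularity
    }
}
```

Routing every caller through one pair of parse/format methods keeps the length check in a single place, which is the point made in the comment above.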

Added a test to validate that the date math done by compaction still works.
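As a rough illustration of the kind of date math in question, the sketch below computes elapsed seconds between two instants of different granularity and shifts an instant forward in time. The class `CompactionDateMath`, its methods, and the `"000"` padding are hypothetical and are not taken from the compaction code or from the test added in this PR.

```java
import java.time.Duration;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeFormatterBuilder;
import java.time.temporal.ChronoField;

// Hypothetical sketch of time-based arithmetic over mixed-granularity instants.
class CompactionDateMath {
    static final DateTimeFormatter MILLIS_FORMAT = new DateTimeFormatterBuilder()
        .appendPattern("yyyyMMddHHmmss")
        .appendValue(ChronoField.MILLI_OF_SECOND, 3)
        .toFormatter();

    // Assumption: 14-character instants are padded with "000" before parsing.
    static LocalDateTime parse(String instant) {
        String normalized = instant.length() == 14 ? instant + "000" : instant;
        return LocalDateTime.parse(normalized, MILLIS_FORMAT);
    }

    // Whole seconds elapsed between two instants, whatever their granularity.
    static long secondsBetween(String earlier, String later) {
        return Duration.between(parse(earlier), parse(later)).getSeconds();
    }

    // Shifts an instant forward and returns it in millisecond granularity.
    static String plusSeconds(String instant, long seconds) {
        return MILLIS_FORMAT.format(parse(instant).plusSeconds(seconds));
    }

    public static void main(String[] args) {
        // Last compaction was written with the old format, the new commit with the new one.
        System.out.println(secondsBetween("20211018162030", "20211018162130500"));
        System.out.println(plusSeconds("20211018162030", 90));
    }
}
```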

Also checked HoodieHeartBeatClient to see if we have any interplay there. Looks like this is what we are doing, and it looks like we may not need any changes. Just wanted to bring it to others' notice. Every commit creates a file under the heartbeat folder; the file name is the same as the instant time.

One potential issue I see is if you have an old instant without millis. I am trying to find examples of that happening in the codebase, but if you know of something then I can focus on that.

All that to say that old commit times will continue to evaluate against new instant timestamps just fine, @nsivabalan.
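A small sketch of why mixed formats can still order correctly, under the assumption that instant times are compared as plain strings (the reasoning in the comment above did not survive on this page, so this is a reconstruction, not a quote). The class `MixedTimelineOrdering` is hypothetical. A 14-character instant is a prefix of every 17-character instant in the same second, so it sorts first; otherwise the leading 14 characters decide.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical sketch: ordering instants of mixed granularity as strings.
class MixedTimelineOrdering {
    // Assumption: instants are ordered by lexicographic string comparison.
    static boolean isBefore(String a, String b) {
        return a.compareTo(b) < 0;
    }

    // Returns a sorted copy and leaves the input untouched.
    static List<String> sorted(List<String> instants) {
        List<String> copy = new ArrayList<>(instants);
        Collections.sort(copy);
        return copy;
    }

    public static void main(String[] args) {
        List<String> timeline = Arrays.asList(
            "20211018162030123", // new format
            "20211018162030",    // old format, same second
            "00000000000000",    // bootstrap-style instant from this thread
            "20211018162029999"  // new format, previous second
        );
        System.out.println(sorted(timeline));
    }
}
```

One consequence of plain string ordering is that a second-granularity instant always sorts before any millisecond-granularity instant created within that same second.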

@nsivabalan These tests are failing even on the master branch for me. Are you able to reproduce this locally? I'm unsure how my changes could be affecting these tests, but I'll continue to look into it.

Yeah, master is broken ATM. I have put up a patch to fix that. Once CI succeeds, I will merge the fix in.

@hudi-bot azure run


…e the default number of milliseconds added to second granularity instants

… override the default number of milliseconds added to second granularity instants" This reverts commit 52b7ea1.

@hudi-bot azure run

this is the main part and also all these solo time, bootstrap instant time and the special instants we have in code already, have higher granularity. |

vinothchandar

left a comment


Would love to have a functional test here with both second and millisecond granularity mixed into the timeline. I'll check a few other things and this should be good to go.

    val instantLength = queryInstant.length
    if (instantLength == 19 || instantLength == 23) { // for yyyy-MM-dd HH:mm:ss[:SSS]
      HoodieActiveTimeline.getInstantForDateString(queryInstant)
    } else if (instantLength == 14 || instantLength == 17) { // for yyyyMMddHHmmss[SSS]


nit: should we use the 17/ instant length, from the constant we have up above?

@vinothchandar: would writing a UT in TestHoodieActiveTimeline suffice, just to compare old and new timestamp formats? Or are you looking for tests at the write client layer?

I am looking for an FT to ensure an existing table can transition correctly to the new format. Yeah, maybe writing this at the timeline level and ensuring all API guarantees are met is a good addition. Regardless, we should perform some upgrade testing out of band.

I was trying to understand how this plays with the bootstrap instant times here: hudi/hudi-common/src/main/java/org/apache/hudi/common/table/timeline/HoodieTimeline.java, line 89 in e028580.

00000000000000 is still YYYYMMDDHHMMSS, so we are good.

vinothchandar

left a comment


LGTM. Thanks @davehagman for this! @nsivabalan can we get more tests in, test it out using yamls as well, and ensure things like time-based compaction work (maybe another UT)? Then we can land (rebase and CI).


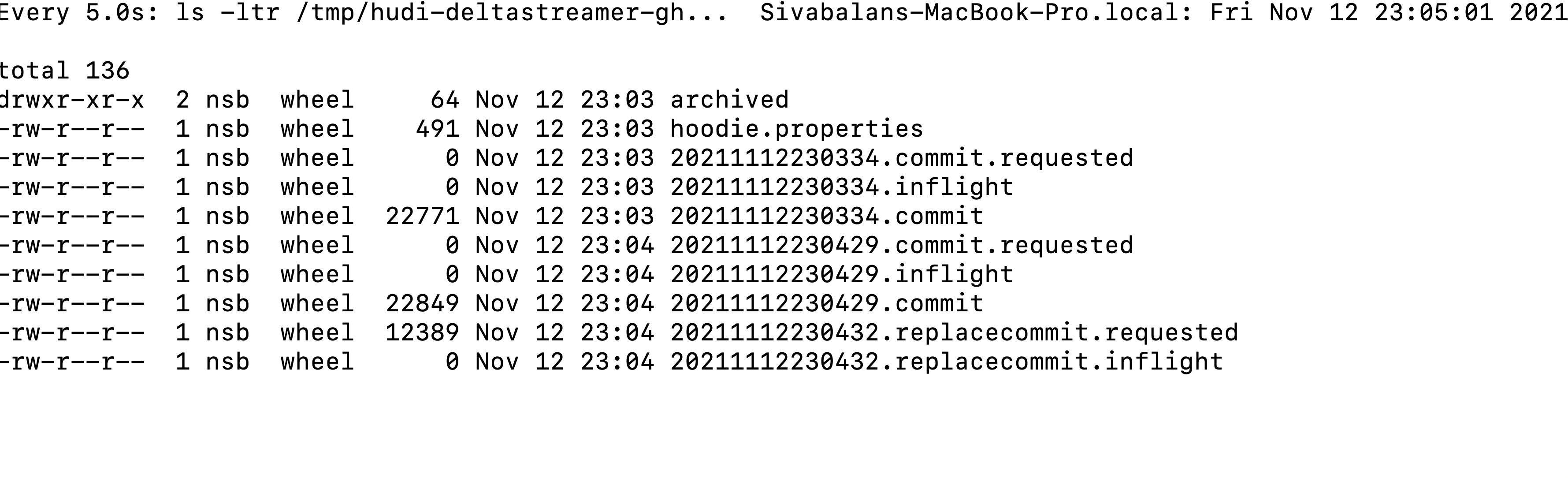

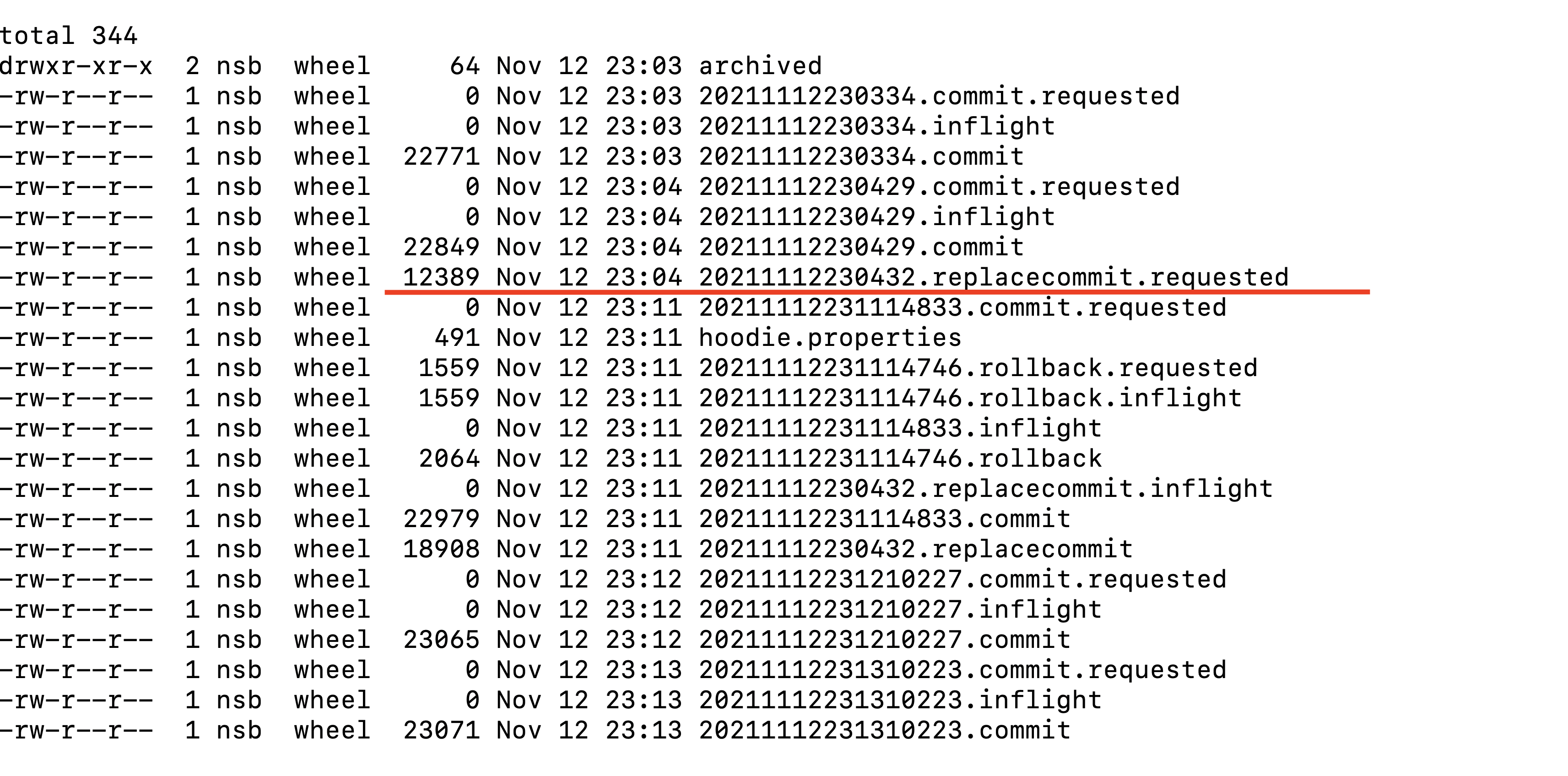

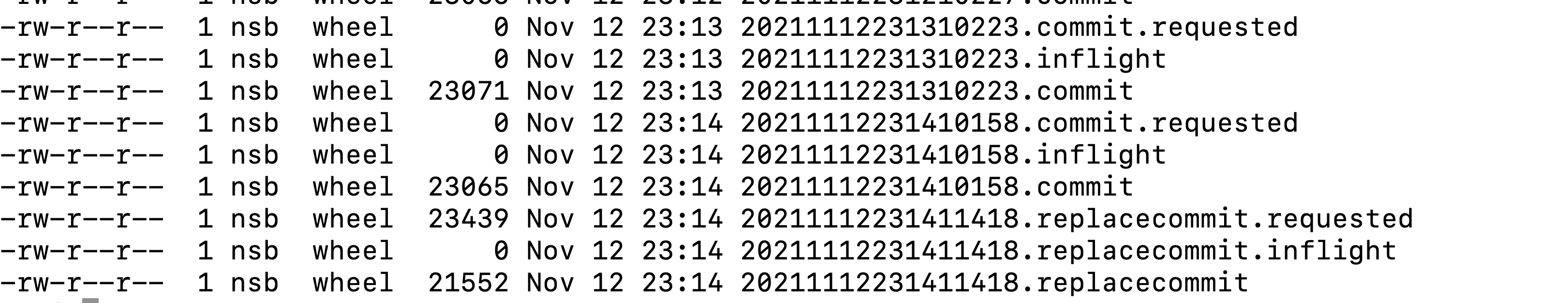

Summary of the tests I did with this patch. Looks good.

Attaching screenshots from my local testing.

Closing in favor of #4024.

What is the purpose of the pull request

In order to support multiple writers against a single table we need to eliminate (or at least greatly reduce) the chance that multiple writers running in parallel will create instants using the same instant ID (it is currently based on the current time down to second granularity).

The current strategy has a shockingly high rate of instant time collisions even with just two writers. In order to make collisions far less likely this PR adds millisecond granularity to the instant timestamp. It is worth noting that this does not guarantee a collision-free solution so we also include steps to reconcile state in case it does happen.
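To make the collision scenario concrete, here is a minimal sketch with two writers that start 400 ms apart within the same second. The class `InstantTimeGranularity` and its method names are hypothetical and only illustrate the two formats; they are not the generator used by this PR.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeFormatterBuilder;
import java.time.temporal.ChronoField;

// Hypothetical sketch comparing second and millisecond granularity instant IDs.
class InstantTimeGranularity {
    static final DateTimeFormatter SECS_FORMAT =
        DateTimeFormatter.ofPattern("yyyyMMddHHmmss");

    static final DateTimeFormatter MILLIS_FORMAT = new DateTimeFormatterBuilder()
        .appendPattern("yyyyMMddHHmmss")
        .appendValue(ChronoField.MILLI_OF_SECOND, 3)
        .toFormatter();

    static String secondsInstant(LocalDateTime time) {
        return SECS_FORMAT.format(time);
    }

    static String millisInstant(LocalDateTime time) {
        return MILLIS_FORMAT.format(time);
    }

    public static void main(String[] args) {
        LocalDateTime writerA = LocalDateTime.of(2021, 10, 18, 16, 20, 30, 100_000_000);
        LocalDateTime writerB = writerA.plusNanos(400_000_000L); // 400 ms later

        // Same ID at second granularity: the two writers collide.
        System.out.println(secondsInstant(writerA).equals(secondsInstant(writerB)));
        // Different IDs at millisecond granularity: no collision in this case.
        System.out.println(millisInstant(writerA).equals(millisInstant(writerB)));
    }
}
```

Two writers that start within the same millisecond would still produce the same ID, which is why the description notes that a reconciliation step is still needed.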

Brief change log


Verify this pull request

Committer checklist

Has a corresponding JIRA in PR title & commit

Commit message is descriptive of the change

CI is green

Necessary doc changes done or have another open PR