This demo shows Spring Cloud Data Flow working with the Kafka binder: three microservices communicate over a message broker to form a single data pipeline.

Generate Stock Instruments -> Update prices for the Stock Instruments -> Confirm the updated prices of the Stock Instruments

- Microservice type: Data source
  - Creates a random stock instrument object, carrying the instrument's name, at a rate of one instrument per second.
  - Produces this stock instrument as a message to a topic on the message broker.

- Microservice type: Data processor
  - Listens to a topic on the message broker to receive instrument names.
  - Updates the prices of the stock instruments.
  - Produces the updated stock instruments as a message to another topic on the message broker.

- Microservice type: Data sink
  - Listens to a topic on the message broker to receive stock instruments with updated prices.
  - Writes the price update confirmation to the console (stdout).
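The three roles above map naturally onto the `Supplier`, `Function`, and `Consumer` interfaces that Spring Cloud Stream's functional model builds on. The following is a minimal plain-Java sketch of that idea, not the project's actual code: the `StockInstrument` record, the instrument names, the price range, and the confirmation text are all hypothetical, and the stages are chained by direct method calls here instead of Kafka topics.

```java
import java.util.List;
import java.util.Locale;
import java.util.Random;
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Supplier;

// Hypothetical message payload; the real project's class and fields may differ.
record StockInstrument(String name, double price) {}

class PipelineSketch {
    // Hypothetical instrument names, used only for illustration.
    static final List<String> NAMES = List.of("AAA", "BBB", "CCC");

    // Data source: creates a random instrument that has a name but no price yet.
    static Supplier<StockInstrument> source(Random random) {
        return () -> new StockInstrument(NAMES.get(random.nextInt(NAMES.size())), 0.0);
    }

    // Data processor: returns a copy of the instrument with a new (random) price.
    static Function<StockInstrument, StockInstrument> processor(Random random) {
        return in -> new StockInstrument(in.name(), 1.0 + random.nextInt(10_000) / 100.0);
    }

    // Formats the confirmation line that the sink prints.
    static String confirmation(StockInstrument in) {
        return String.format(Locale.ROOT, "Price updated: %s -> %.2f", in.name(), in.price());
    }

    // Data sink: writes the price update confirmation to stdout.
    static Consumer<StockInstrument> sink() {
        return in -> System.out.println(confirmation(in));
    }

    public static void main(String[] args) {
        Random random = new Random();
        // In the real pipeline each stage is a separate application and the
        // hand-off between stages goes through Kafka topics, not method calls.
        for (int i = 0; i < 3; i++) {
            sink().accept(processor(random).apply(source(random).get()));
        }
    }
}
```

When a `Supplier` like this is exposed as a Spring Cloud Stream bean, the framework polls it once per second by default, which would match the stated rate; whether the project relies on that default is an assumption.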

The microservices are configured and orchestrated using the Spring Cloud Data Flow framework. Here's an overview of the process:

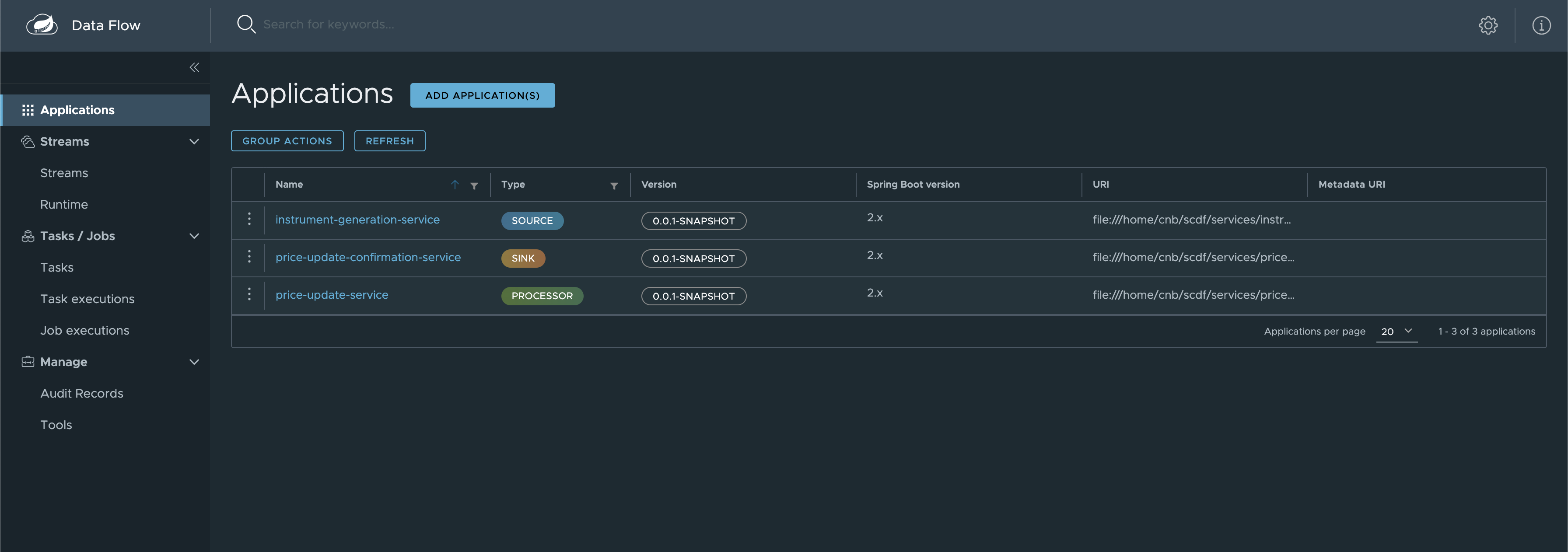

Microservices are registered as applications with the Spring Cloud Data Flow server. The path to the executable JAR files is specified during registration.

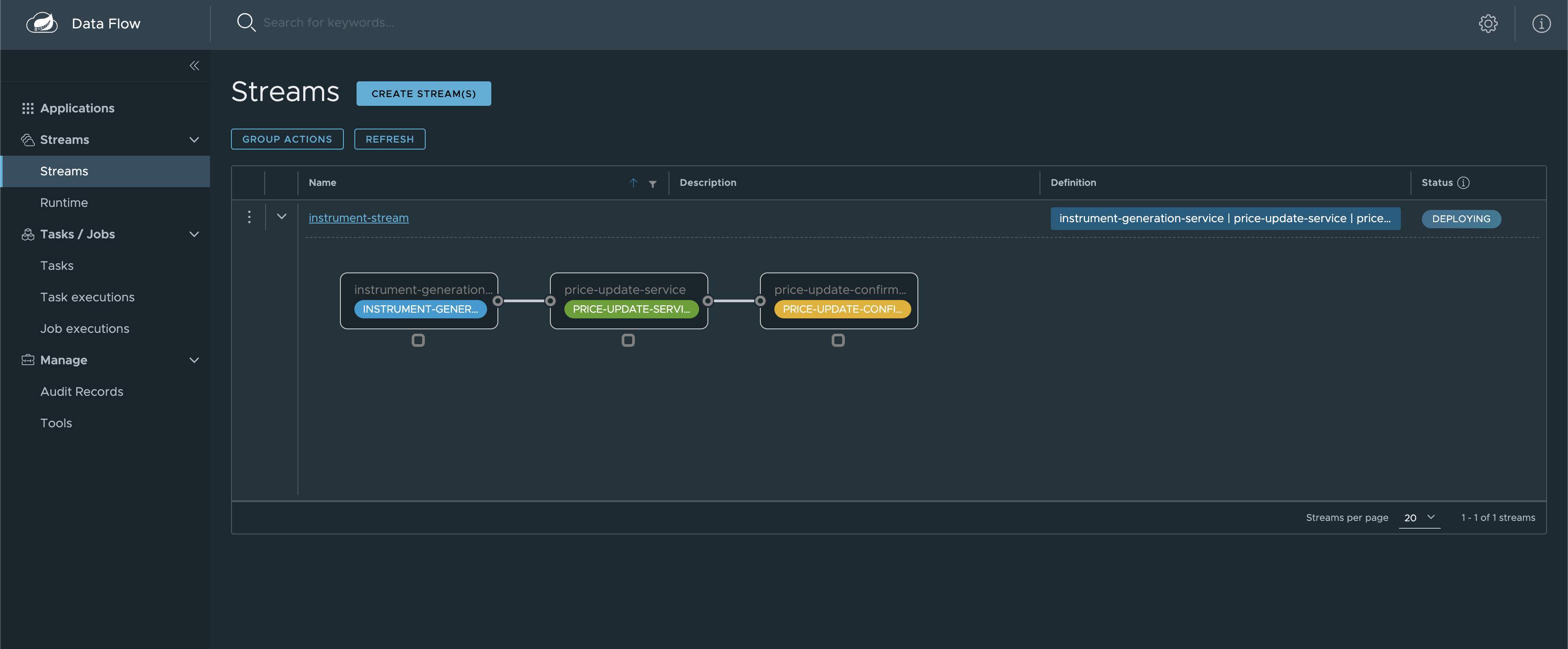

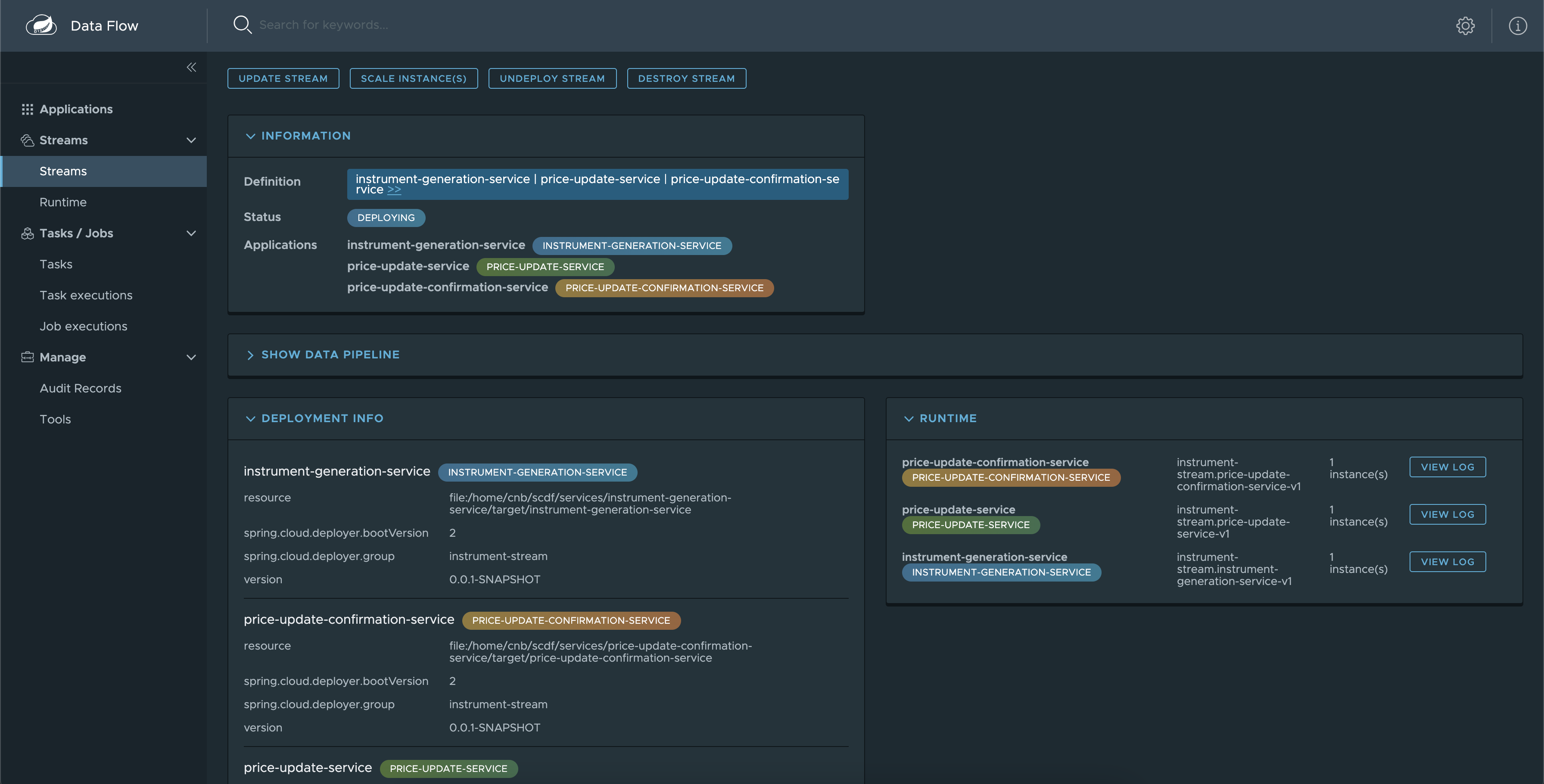

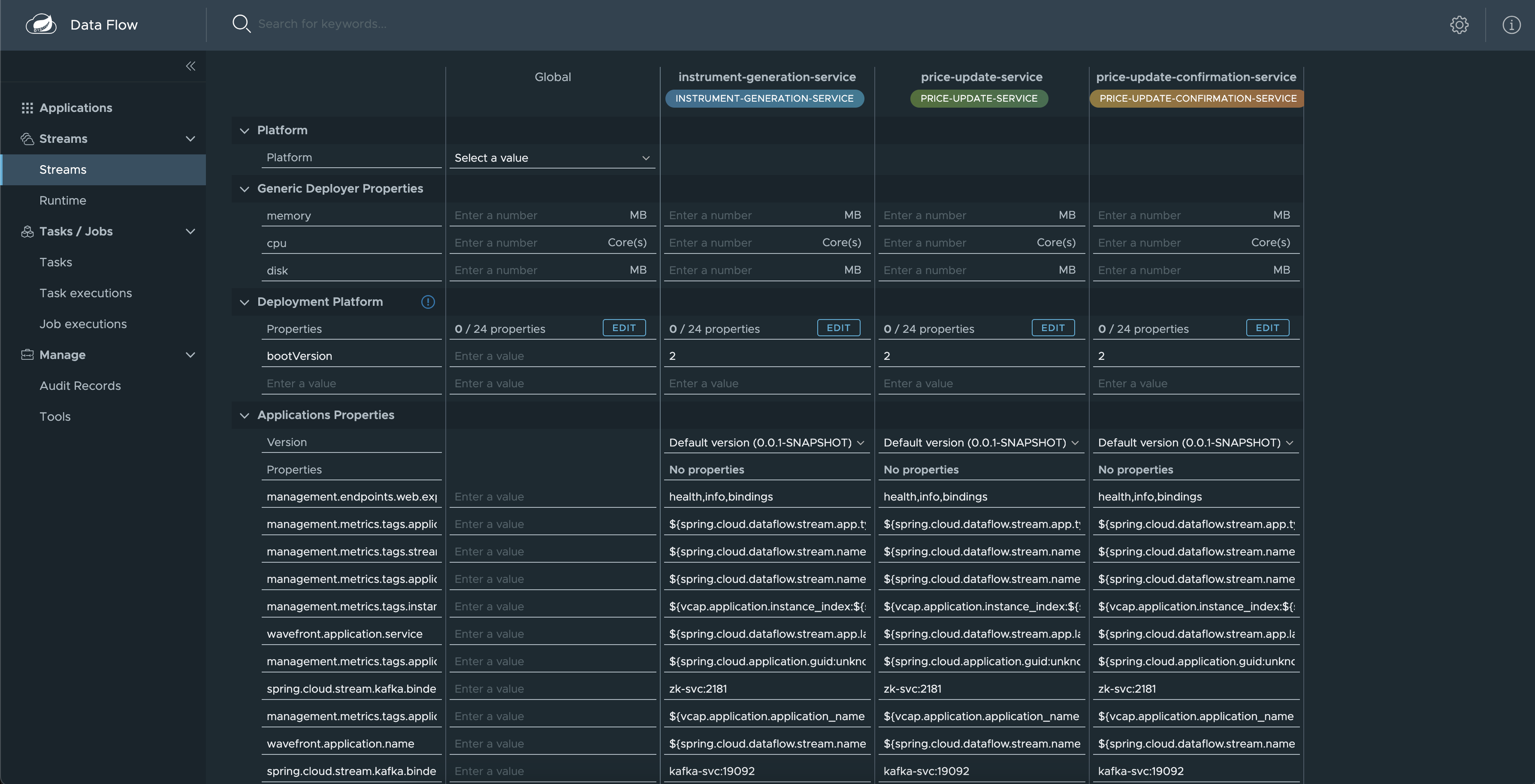
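Registration can also be scripted against the Data Flow server's REST API instead of the dashboard. The sketch below only builds the request for `POST /apps/{type}/{name}` with a form-encoded `uri` parameter and does not send it. The app name `instrument-generation` and the JAR path are hypothetical placeholders, and the server address is the one listed in the endpoints table of this README.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;

class AppRegistrationSketch {
    // Server address as listed in this README's endpoints table.
    static final String SERVER = "http://localhost:9393";

    // Form body carrying the location of the executable JAR.
    static String formBody(String jarUri) {
        return "uri=" + URLEncoder.encode(jarUri, StandardCharsets.UTF_8);
    }

    // Builds (but does not send) the registration request for one application.
    // type is one of "source", "processor", or "sink".
    static HttpRequest registerRequest(String type, String name, String jarUri) {
        return HttpRequest.newBuilder(URI.create(SERVER + "/apps/" + type + "/" + name))
                .header("Content-Type", "application/x-www-form-urlencoded")
                .POST(HttpRequest.BodyPublishers.ofString(formBody(jarUri)))
                .build();
    }

    public static void main(String[] args) {
        // Hypothetical app name and JAR path; substitute the project's own values.
        HttpRequest request = registerRequest(
                "source", "instrument-generation", "file:///apps/instrument-generation.jar");
        System.out.println(request.method() + " " + request.uri());
        System.out.println(formBody("file:///apps/instrument-generation.jar"));
    }
}
```

To actually register the app, the built request could be passed to `HttpClient.newHttpClient().send(...)` once the server is running.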

Streams (workflows/pipelines) are created from the registered applications. The configuration for using Kafka as the message broker is specified once, at stream creation time, instead of in each application. As a result, every application in the stream uses the same Kafka configuration.

Once a stream is created, it is deployed to initiate data processing.
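To make the stream-level configuration concrete, here is a small sketch that assembles a stream definition in the Data Flow DSL (application names joined by `|`) together with one shared Kafka binder property. The app names and the broker address `kafka:9092` are hypothetical. The `app.*.` wildcard prefix, which targets every application in the stream, and the way the project actually passes these settings are assumptions; only `spring.cloud.stream.kafka.binder.brokers` is the standard Kafka binder property name.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class StreamDefinitionSketch {
    // Joins registered app names with the pipe symbol used by the stream DSL.
    static String definition(List<String> apps) {
        return String.join(" | ", apps);
    }

    // Shared binder settings, declared once for all apps in the stream.
    // The "app.*." wildcard prefix is an assumption about how the stream is configured.
    static Map<String, String> sharedKafkaProperties(String brokers) {
        Map<String, String> props = new LinkedHashMap<>();
        props.put("app.*.spring.cloud.stream.kafka.binder.brokers", brokers);
        return props;
    }

    public static void main(String[] args) {
        // Hypothetical app names and broker address.
        System.out.println(definition(
                List.of("instrument-generation", "price-update", "price-update-confirmation")));
        sharedKafkaProperties("kafka:9092")
                .forEach((key, value) -> System.out.println(key + "=" + value));
    }
}
```

Keeping the broker address in one place means a change of Kafka host touches the stream configuration only, not the three application JARs.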

The docker-compose setup defines all the base services required to run the entire workflow.

```mermaid
flowchart LR
    igs[Instrument Generation Service]
    pus[Price Update Service]
    pucs[Price Update Confirmation Service]

    subgraph Kafka
        t1[Topic1]
        t2[Topic2]
    end

    igs --> t1
    t1 --> pus
    pus --> t2
    t2 --> pucs
```

Run `make build`, then `make start-services`.

With all services up, access:

| Description | Link |
|---|---|
| Kafka UI | http://localhost:8080 |
| Grafana UI | http://localhost:3000 |
| Spring Cloud Data Flow Dashboard | http://localhost:9393/dashboard |
| Spring Cloud Data Flow Endpoints | http://localhost:9393/ |