
[Data Source]Add default option fetchsize of MLSQLJDBC #12

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[Data Source]Add default option fetchsize of MLSQLJDBC #12

Conversation

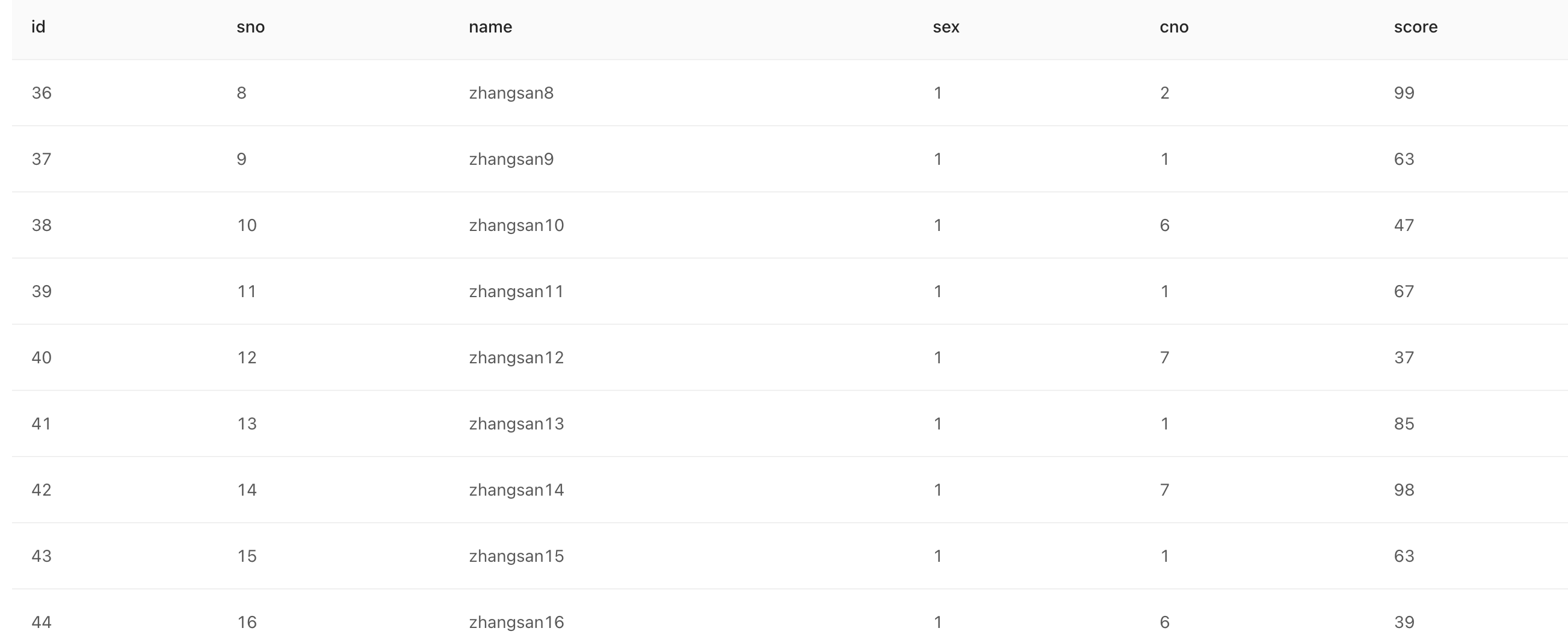

Recurrence

I inserted more than 512M of data into the database and set the following JVM parameters: Through the

trait JDBCSource {

  def fillJDBCOptions(reader: DataFrameReader, config: DataSourceConfig, formatName: String, dbSplitter: String): (Option[String], Option[String]) = {


Note that this only happens with the MySQL JDBC driver. With your refactor, the change will affect all JDBC data sources.


Yes, I overlooked the other data sources. I have changed it.

url = options.get("url")
})
}
url = url.map(x => if (x.contains("useCursorFetch")) x else s"$x&useCursorFetch=true")


These URL parameters are supported only by the MySQL JDBC driver. We should do it like this.
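One way to read this comment, as a minimal sketch rather than the code that was merged: append `useCursorFetch=true` only when the URL targets MySQL. The object `MySQLFetchUrl` and the method `withCursorFetch` are hypothetical names used for illustration. The sketch also assumes that a URL without an existing query string needs `?` rather than `&` as the separator, a case the `s"$x&useCursorFetch=true"` line in the diff does not handle.

```scala
object MySQLFetchUrl {
  // Hypothetical helper, not part of this PR: adds useCursorFetch=true
  // only for MySQL URLs that do not already mention the flag.
  def withCursorFetch(url: String): String = {
    val isMySQL = url.toLowerCase.startsWith("jdbc:mysql:")
    if (!isMySQL || url.contains("useCursorFetch")) {
      url // other drivers, or an explicit user setting, are left untouched
    } else {
      // Use '?' for the first query parameter and '&' for later ones.
      val sep = if (url.contains("?")) "&" else "?"
      s"$url${sep}useCursorFetch=true"
    }
  }
}
```

With this shape, a PostgreSQL or Oracle URL passes through unchanged, so the behaviour stays scoped to the MySQL driver.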

Please update mlsql-docs accordingly.

Fix fetchsize-related configuration so that it applies only to MySQL

import org.apache.spark.sql.DataFrameReader
import streaming.dsl.{ConnectMeta, DBMappingKey}

trait JDBCSource {


I think we can do better with the trait name and the function names in this trait.

In the following PR, Spark allows the JDBC parameter fetch_size to be set to a negative number, which enables streaming (row-by-row) fetch against a MySQL database.

apache/spark#26244

Building on this, we provide a default fetch_size that uses row-by-row fetch, which avoids loading too much data at once and causing an OOM.
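A minimal sketch of how such a default could be applied, under stated assumptions: the options are held in a plain `Map[String, String]`, the option key is Spark's `fetchsize`, and the default is `Int.MinValue`, the value MySQL Connector/J treats as a request to stream rows one at a time. The actual default chosen by this PR is not shown in the conversation, and `DefaultFetchSize` and `withDefault` are hypothetical names.

```scala
object DefaultFetchSize {
  // Assumption: Int.MinValue asks MySQL Connector/J to stream row by row.
  // The default actually used by this PR may differ.
  val StreamingFetchSize: String = Int.MinValue.toString

  // Hypothetical helper: fills in fetchsize only for MySQL URLs and only
  // when the user has not set it. Keys are matched case-sensitively here
  // for simplicity.
  def withDefault(options: Map[String, String]): Map[String, String] = {
    val isMySQL = options.get("url").exists(_.toLowerCase.startsWith("jdbc:mysql:"))
    if (isMySQL && !options.contains("fetchsize")) {
      options + ("fetchsize" -> StreamingFetchSize)
    } else {
      options
    }
  }
}
```

Leaving a user-supplied `fetchsize` untouched keeps the default overridable, and the MySQL check keeps other JDBC data sources unaffected, in line with the review feedback.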