Transfer Learning shootout for PyTorch's model zoo (torchvision).

- Load any pretrained model with custom final layer (num_classes) from PyTorch's model zoo in one line

model_pretrained, diff = load_model_merged('inception_v3', num_classes)- Retrain minimal (as inferred on load) or a custom amount of layers on multiple GPUs. Optionally with Cyclical Learning Rate (Smith 2017).

final_param_names = [d[0] for d in diff]

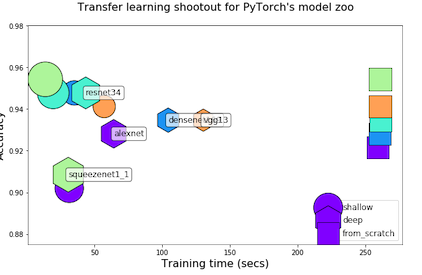

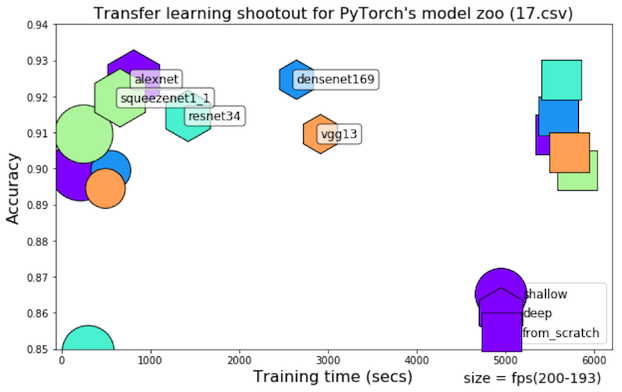

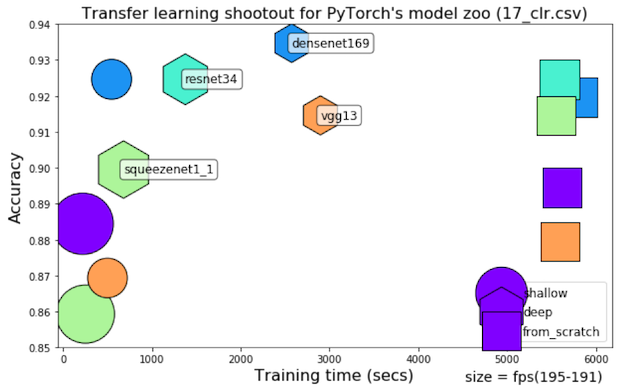

stats = train_eval(model_pretrained, trainloader, testloader, final_params_names)- Chart

training_time,evaluation_time(fps), top-1accuracyfor varying levels of retraining depth (shallow, deep and from scratch)
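The Cyclical Learning Rate option refers to Smith (2017). As a rough illustration of the idea, and not necessarily how this repository implements it, the simplest (triangular) policy from that paper can be sketched in plain Python. The function name `triangular_lr` and its parameters `base_lr`, `max_lr` and `step_size` are hypothetical and chosen for this example only.

```python
import math


def triangular_lr(iteration, base_lr, max_lr, step_size):
    """Triangular cyclical learning rate, sketched after Smith (2017).

    The rate climbs linearly from base_lr to max_lr over step_size
    iterations, then falls back to base_lr over the next step_size.
    All names here are illustrative assumptions, not this repo's API.
    """
    # Index of the current cycle (1-based); one cycle is 2 * step_size iterations.
    cycle = math.floor(1 + iteration / (2 * step_size))
    # Normalized distance from the peak of the current cycle, in [0, 1].
    x = abs(iteration / step_size - 2 * cycle + 1)
    return base_lr + (max_lr - base_lr) * max(0.0, 1.0 - x)


if __name__ == "__main__":
    # Print one full cycle with a half-cycle length of 4 iterations.
    for it in range(9):
        print(it, triangular_lr(it, base_lr=0.001, max_lr=0.006, step_size=4))
```

In a training loop, a value like this would typically be written into each of the optimizer's parameter groups before every batch, so the rate changes per iteration rather than per epoch.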

| |
|---|
| Transfer learning on example dataset Bee vs Ants with 2xV100 GPUs |

Dataset with `num_classes = 23`, slightly unbalanced, with high variance in rotation and motion-blur artifacts, trained on 1xGTX1080Ti:

| |
|---|
| Constant LR with momentum |

| |
|---|
| Cyclical Learning Rate |