The Deep Web Extractor system uses statistical machine learning models to crawl and discover data in the Deep Web (i.e., the massive, high-quality portion of the World Wide Web) in order to build knowledge-based databases.
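As a minimal sketch of the kind of statistical model such a system might use, the following Python code trains a multinomial Naive Bayes classifier that labels a web form as searchable or non-searchable from the words in its labels and field names. The choice of Naive Bayes, the class name `NaiveBayesFormClassifier`, and the toy training data are all hypothetical illustrations, not the DWX system's actual model.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lower-case alphabetic tokens from a form's labels and field names."""
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayesFormClassifier:
    """Hypothetical multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts: dict[str, Counter] = {}  # label -> token counts
        self.doc_counts: Counter = Counter()       # label -> number of forms
        self.vocab: set[str] = set()

    def fit(self, docs: list[str], labels: list[str]) -> None:
        for doc, label in zip(docs, labels):
            tokens = tokenize(doc)
            self.doc_counts[label] += 1
            self.word_counts.setdefault(label, Counter()).update(tokens)
            self.vocab.update(tokens)

    def predict(self, doc: str) -> str:
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = "", -math.inf
        for label, counts in self.word_counts.items():
            # log prior + sum of smoothed log likelihoods
            score = math.log(self.doc_counts[label] / total_docs)
            denom = sum(counts.values()) + len(self.vocab)
            for tok in tokenize(doc):
                score += math.log((counts[tok] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical toy training set: text extracted from forms, labelled by hand.
TRAIN_DOCS = [
    "search keyword price min max location submit",
    "find property city bedrooms price range search",
    "username password login remember me",
    "email subscribe newsletter sign up",
]
TRAIN_LABELS = ["searchable", "searchable", "non_searchable", "non_searchable"]

clf = NaiveBayesFormClassifier()
clf.fit(TRAIN_DOCS, TRAIN_LABELS)
print(clf.predict("search by price and city"))  # searchable
print(clf.predict("login with your password"))  # non_searchable
```

A real crawler would need far more training data and richer features (field types, number of inputs, submit-button text), but the same train-then-classify loop applies.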

The system's main objectives are:

- To discover and extract high-quality Deep Web content for web searchers.

- To develop automated means of identifying searchable web form interfaces and directing queries to them to dig out information.

- To build domain-specific data repositories (e.g., real estate, newspapers, health) for purposeful analysis and for building knowledge-based databases.

- To handle complex queries that traditional search engines do not support, such as queries containing range values.

- To help law enforcement agencies detect fraudulent web users.
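The form-querying and range-query objectives can be illustrated together. Assuming a discovered form exposes a `<select>` menu whose options are fixed price brackets, a range query such as "price between 150,000 and 400,000" can be answered by submitting one query per overlapping bracket and then filtering the returned records. The `SearchForm` structure, the field names, the bracket values, and the `example.com` URL are hypothetical; this is a sketch of the idea, not the DWX implementation.

```python
from dataclasses import dataclass
from urllib.parse import urlencode


@dataclass
class SearchForm:
    """Hypothetical description of a search form found by the crawler."""
    action: str                           # URL the form submits to (GET)
    range_field: str                      # name of the <select> holding value brackets
    brackets: dict[str, tuple[int, int]]  # option value -> inclusive (low, high)


def overlapping_brackets(form: SearchForm, low: int, high: int) -> list[str]:
    """Option values whose bracket intersects the query range [low, high]."""
    return [opt for opt, (b_low, b_high) in form.brackets.items()
            if b_low <= high and low <= b_high]


def build_queries(form: SearchForm, text_values: dict[str, str],
                  low: int, high: int) -> list[str]:
    """One GET URL per overlapping bracket, combined with the free-text inputs."""
    urls = []
    for opt in overlapping_brackets(form, low, high):
        params = dict(text_values)
        params[form.range_field] = opt
        urls.append(f"{form.action}?{urlencode(params)}")
    return urls


def filter_records(records: list[dict], key: str, low: int, high: int) -> list[dict]:
    """Drop returned records that fall outside the requested range."""
    return [r for r in records if low <= r[key] <= high]


# Hypothetical real-estate form and query.
form = SearchForm(
    action="https://example.com/search",
    range_field="price",
    brackets={"p1": (0, 99_999), "p2": (100_000, 199_999),
              "p3": (200_000, 499_999), "p4": (500_000, 10**9)},
)
for url in build_queries(form, {"city": "Springfield"}, 150_000, 400_000):
    print(url)
# https://example.com/search?city=Springfield&price=p2
# https://example.com/search?city=Springfield&price=p3
```

Because brackets `p2` and `p3` together cover 100,000 to 499,999, some returned records lie outside the requested range; `filter_records` removes them after the pages are extracted.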

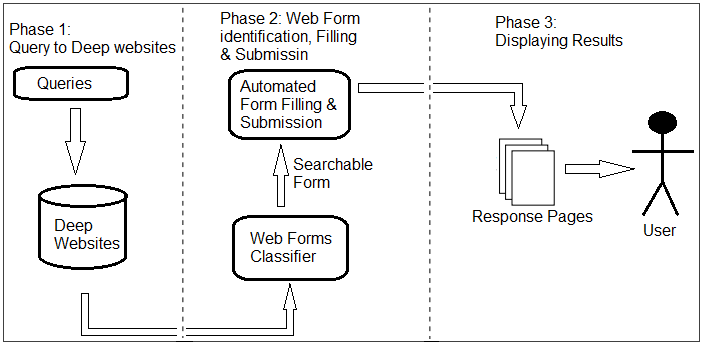

The proposed architecture of the Deep Web Extractor (DWX) system is shown in the figure above.