This repository contains multiple character-level language models (charLLM). Each language model is designed to generate text at the character level, providing a granular level of control and flexibility.

- Character-Level MLP LLM (First MLP LLM)

- GPT-2 (in progress)

The Character-Level MLP language model is based on the approach described in the paper "A Neural Probabilistic Language Model" by Bengio et al. (2003). It uses a multilayer perceptron (MLP) to generate text at the character level.
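To make the architecture concrete, here is a minimal pure-Python sketch of the forward pass from Bengio et al., applied to characters. Each of the `block_size` context characters is looked up in an embedding table, the embeddings are concatenated, passed through a tanh hidden layer, and projected to one logit per vocabulary character, followed by a softmax. It assumes no direct input-to-output connections (these are optional in the paper) and uses untrained random weights. The class `TinyNPLM` and its methods are hypothetical illustrations, not charLLM's internals (the package itself depends on PyTorch).

```python
import math
import random


class TinyNPLM:
    """Hypothetical, minimal character-level NPLM forward pass (no training)."""

    def __init__(self, vocab_size, block_size=3, embedding_dimension=50,
                 hidden_layer=100, seed=0):
        rng = random.Random(seed)

        def matrix(rows, cols, scale):
            return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]

        self.block_size = block_size
        # C: one embedding vector per character in the vocabulary
        self.C = matrix(vocab_size, embedding_dimension, 1.0)
        # W1, b1: concatenated context embeddings -> hidden layer
        fan_in = block_size * embedding_dimension
        self.W1 = matrix(fan_in, hidden_layer, 1.0 / math.sqrt(fan_in))
        self.b1 = [0.0] * hidden_layer
        # W2, b2: hidden layer -> one logit per vocabulary character
        self.W2 = matrix(hidden_layer, vocab_size, 1.0 / math.sqrt(hidden_layer))
        self.b2 = [0.0] * vocab_size

    def num_parameters(self):
        """Count every weight and bias in C, W1, b1, W2 and b2."""
        def size(m):
            return len(m) * len(m[0])
        return (size(self.C) + size(self.W1) + len(self.b1)
                + size(self.W2) + len(self.b2))

    def forward(self, context):
        """Return next-character probabilities for a list of block_size indices."""
        assert len(context) == self.block_size
        # Look up and concatenate the embeddings of the context characters
        x = [value for ix in context for value in self.C[ix]]
        # Hidden layer: h = tanh(x W1 + b1)
        h = [math.tanh(sum(x[i] * self.W1[i][j] for i in range(len(x))) + self.b1[j])
             for j in range(len(self.b1))]
        # Output layer: logits = h W2 + b2
        logits = [sum(h[i] * self.W2[i][k] for i in range(len(h))) + self.b2[k]
                  for k in range(len(self.b2))]
        # Numerically stable softmax over the vocabulary
        top = max(logits)
        exps = [math.exp(z - top) for z in logits]
        total = sum(exps)
        return [e / total for e in exps]
```

For example, with an illustrative 27-character vocabulary and the demo settings used later in this README (`block_size` 3, `embedding_dimension` 50, `hidden_layer` 100), `TinyNPLM(27).num_parameters()` gives 27·50 + 150·100 + 100 + 100·27 + 27 = 19,177 parameters, most of which sit in the embedding-to-hidden weights.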

This repository has been tested with Python 3.8+ and PyTorch 2.0.0+.
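The stated minimum versions can be checked from Python using only the standard library (`sys` and `importlib.metadata`). This is a small sketch; the helpers `parse_version` and `check_environment` are hypothetical and not part of charLLM.

```python
import sys
from importlib import metadata  # in the standard library since Python 3.8


def parse_version(text):
    """Turn a version string such as '2.0.1+cu118' into the tuple (2, 0, 1).

    Only the leading numeric, dot-separated components are kept; local
    suffixes like '+cu118' and pre-release tags like 'rc1' are ignored.
    """
    parts = []
    for piece in text.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def check_environment(min_python=(3, 8), min_torch=(2, 0, 0)):
    """Report whether the interpreter and the installed PyTorch meet the minimums."""
    report = {"python_ok": sys.version_info[:2] >= min_python[:2]}
    try:
        report["torch_ok"] = parse_version(metadata.version("torch")) >= min_torch
    except metadata.PackageNotFoundError:
        report["torch_ok"] = False  # PyTorch is not installed yet
    return report


if __name__ == "__main__":
    print(check_environment())
```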

First, create a virtual environment with the version of Python you're going to use and activate it.

Then, you will need to install PyTorch.

Once PyTorch has been installed, CharLLMs can be installed using pip as follows:

```bash
pip install charLLM
```

Alternatively, CharLLMs can be obtained from source by cloning the repository:

```bash
git clone https://github.com/RAravindDS/Neural-Probabilistic-Language-Model.git
```

To use the Character-Level MLP language model, follow these steps:

- Install the package dependencies.
- Import the `CharMLP` class from the `charLLM` module.
- Create an instance of the `CharMLP` class.
- Train the model on a suitable dataset.
- Generate text using the trained model.
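Before training, the text has to be turned into fixed-size examples. A common way to do this for an NPLM-style model (an assumption here, not a description of charLLM's code) is to slide a window of `block_size` characters over the text, use the following character as the target, and hold out the remaining examples for validation according to `train_size`. The helpers `build_vocab`, `make_examples`, and `split` are hypothetical.

```python
def build_vocab(text):
    """Map each distinct character to an integer index (sorted for determinism)."""
    chars = sorted(set(text))
    stoi = {ch: i for i, ch in enumerate(chars)}
    itos = {i: ch for ch, i in stoi.items()}
    return stoi, itos


def make_examples(text, stoi, block_size=3):
    """Slide a window of block_size characters; the next character is the target."""
    X, Y = [], []
    for i in range(len(text) - block_size):
        X.append([stoi[ch] for ch in text[i:i + block_size]])
        Y.append(stoi[text[i + block_size]])
    return X, Y


def split(X, Y, train_size=0.8):
    """Contiguous split (no shuffling): the first train_size fraction is for training."""
    n_train = int(len(X) * train_size)
    return (X[:n_train], Y[:n_train]), (X[n_train:], Y[n_train:])


if __name__ == "__main__":
    text = "hello world"
    stoi, itos = build_vocab(text)
    X, Y = make_examples(text, stoi, block_size=3)
    (Xtr, Ytr), (Xval, Yval) = split(X, Y, train_size=0.8)
    print(len(X), len(Xtr), len(Xval))  # 8 6 2
```

With `block_size` 3, the 11-character string yields 8 examples; the first is the context "hel" with target "l", and `train_size` 0.8 keeps 6 of them for training.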

Demo for NPLM (A Neural Probabilistic Language Model)

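```python
>>> # Import the class
>>> from charLLM import NPLM  # Neural Probabilistic Language Model
>>> text_path = "path-to-text-file.txt"
>>> model_parameters = {
...     "block_size": 3,             # number of context characters per example
...     "train_size": 0.8,           # fraction of the data used for training
...     "epochs": 10000,
...     "batch_size": 32,            # examples per minibatch
...     "hidden_layer": 100,         # number of hidden units
...     "embedding_dimension": 50,   # size of each character embedding
...     "learning_rate": 0.1,
... }
>>> obj = NPLM(text_path, model_parameters)  # Initialize the class
>>> obj.train_model()
```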

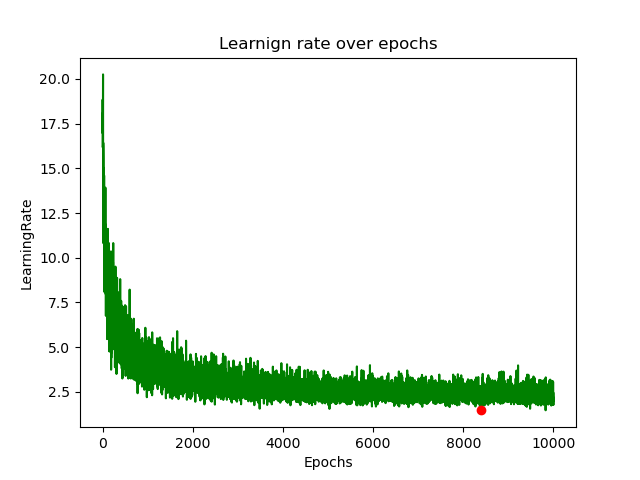

`train_model()` outputs the validation loss (`val_loss`) and the graph shown above.
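The README does not spell out how `val_loss` is computed. Assuming it is the usual cross-entropy, i.e. the average negative log-probability the model assigns to the correct next character over the validation examples, the calculation looks like this. `mean_nll` is a hypothetical helper, and `probs` stands for the model's softmax outputs.

```python
import math


def mean_nll(probs, targets):
    """Average negative log-likelihood of the target characters.

    probs[i] is a probability distribution over the vocabulary for example i,
    and targets[i] is the index of the character that actually came next.
    """
    losses = [-math.log(p[t]) for p, t in zip(probs, targets)]
    return sum(losses) / len(losses)


# A model that guesses uniformly over 27 characters scores ln(27), about 3.296,
# which is a useful baseline when reading a reported validation loss.
uniform = [[1 / 27] * 27 for _ in range(4)]
print(round(mean_nll(uniform, [0, 5, 13, 26]), 3))  # 3.296
```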

To sample from the trained model:

```python
>>> obj.sampling(words_needed=10)  # samples 10 tokens
```
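Sampling from a model like this is typically autoregressive: the model predicts a distribution over the next character from the last `block_size` characters, one character is drawn from it and appended, and the window slides forward. The sketch below assumes each sampled token is one character and uses a toy `next_char_probs` function in place of a trained model; both `next_char_probs` and `sample` are hypothetical and not charLLM's implementation.

```python
import random


def sample(next_char_probs, itos, context, n_chars, seed=None):
    """Generate n_chars characters, one at a time.

    next_char_probs(context) must return a probability for every index in itos;
    context is a list of block_size character indices that slides forward.
    """
    rng = random.Random(seed)
    context = list(context)
    out = []
    for _ in range(n_chars):
        probs = next_char_probs(context)
        # Draw the next index in proportion to its predicted probability
        ix = rng.choices(range(len(probs)), weights=probs, k=1)[0]
        out.append(itos[ix])
        # Drop the oldest character and append the new one
        context = context[1:] + [ix]
    return "".join(out)


if __name__ == "__main__":
    itos = {0: "a", 1: "b", 2: "c"}

    # Toy stand-in for a trained model: always predicts the character after
    # the last one in the context, cycling a -> b -> c -> a.
    def next_char_probs(context):
        probs = [0.0, 0.0, 0.0]
        probs[(context[-1] + 1) % 3] = 1.0
        return probs

    print(sample(next_char_probs, itos, context=[0, 0, 0], n_chars=10, seed=0))  # bcabcabcab
```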

Feel free to explore the repository and experiment with the different language models provided.

Contributions to this repository are welcome. If you have implemented a novel character-level language model or would like to improve the existing models, please consider contributing to the project. Thank you!

This repository is licensed under the MIT License.