nullable vs type arrays (JSON Schema compatibility) #1389

Comments

|

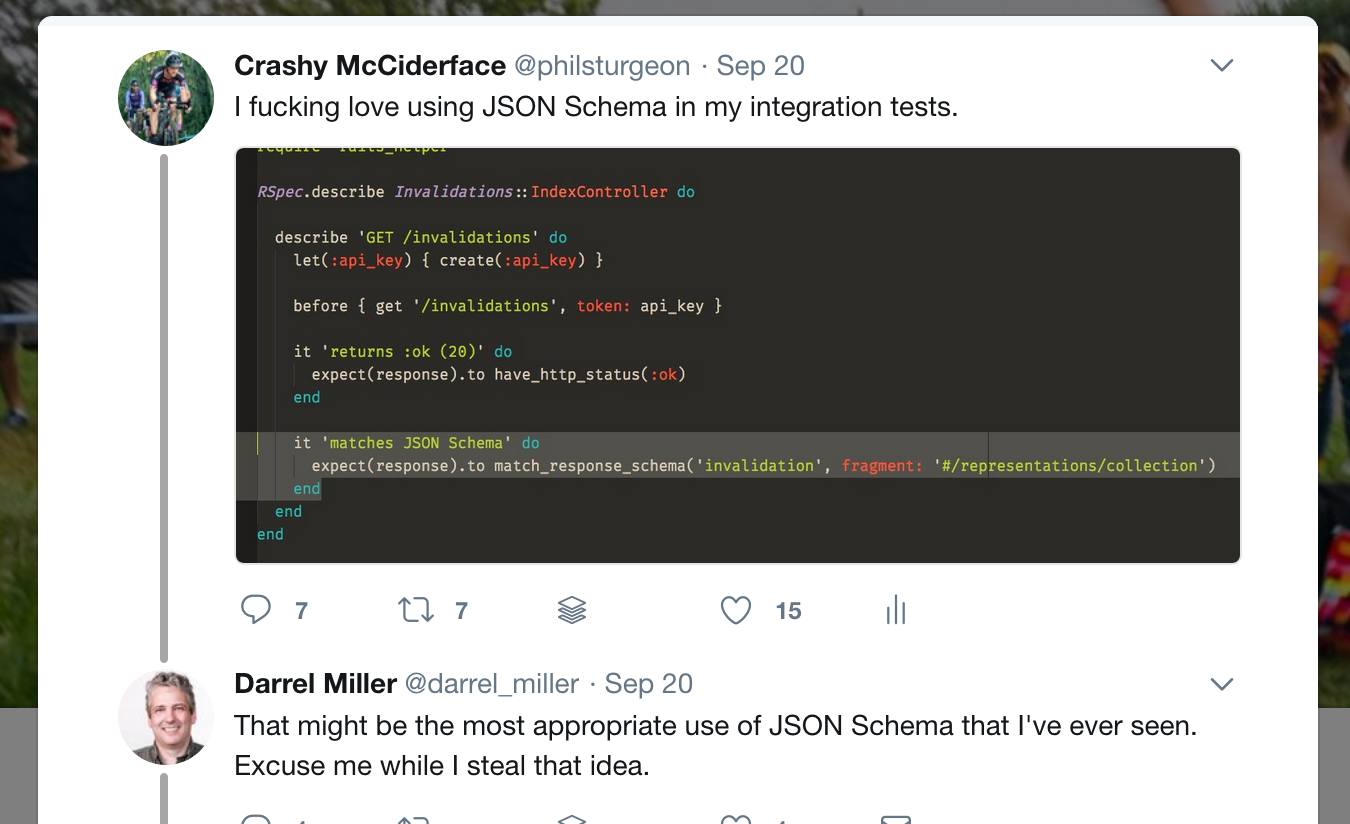

I know this has been discussed greatly in the past, and as a brief recap: I love OpenAPI, have been pushing it hard at work, and I also love contract testing. JSON Schema is fantastic at contract testing, doing this sort of thing: @darrelmiller likes the idea, but this is really tricky as the very act of using JSON Schema is causing me to write invalid OAI files. I regularly use It's so common for OAI users to use So that same thread @darrelmiller asked this question:

Honestly it would be fantastic if there was some sort of solution that led to JSON Schema and OAI handling types identically (supporting a recommendation for "Parameter Bags" to avoid giving out middle fingers as not all languages support union types, etc), but I will be happy to just avoid that whole can of worms. I change my stance on this and suggest we go with @handrews' proposal:

That would cover 99% of what people are trying to do. We'd still be left with an item on the "JSON Schema vs OAI discrepancies" list, but it wouldn't cut quite so bad, and it would make thousands of invalid OAI files valid... 👍 |

I think the key thing is that the remaining item would be a "feature of JSON Schema that is not supported", while the current situation is "feature of JSON Schema that is functionally supported but requires a conflicting syntax". I'm not that bothered by a system saying "hey, we don't quite want to use all of JSON Schema as defined for validation". In fact, I want to spend effort in draft-08 making it easier to do that in general, so that projects like OpenAPI don't have to awkwardly document the deltas and either write their own tooling or try to wrap up existing tooling some way to forbid bits and pieces. With the two-element array option, and some effort in draft-08 around being able to declare support for things like vocabulary subsets, we could get OAI and JSON Schema fully compatible with each other, without requiring OAI to support everything JSON Schema dreams up for validation. After all, validation and code generation are really not the same things, and we should not pretend that they are. |
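To make the "conflicting syntax" point concrete, here is a rough Python sketch of translating between OAS 3.0 `nullable: true` and the two-element `type` array discussed in this thread. The function names `nullable_to_type_array` and `type_array_to_nullable` are made up for illustration, schemas are assumed to be plain dicts, and only the top-level schema object is handled (no recursion into `properties`, `items`, and so on).

```python
import copy


def nullable_to_type_array(schema: dict) -> dict:
    """Rewrite `type: X` + `nullable: true` as `type: [X, "null"]`.

    Schemas without a string `type`, or without `nullable: true`,
    are returned unchanged (as a copy).
    """
    result = copy.deepcopy(schema)
    declared = result.get("type")
    if (
        result.get("nullable") is True
        and isinstance(declared, str)
        and declared != "null"
    ):
        result["type"] = [declared, "null"]
        del result["nullable"]
    return result


def type_array_to_nullable(schema: dict) -> dict:
    """Rewrite `type: [X, "null"]` (either order) as `type: X` + `nullable: true`."""
    result = copy.deepcopy(schema)
    declared = result.get("type")
    if isinstance(declared, list) and len(declared) == 2 and "null" in declared:
        others = [t for t in declared if t != "null"]
        if len(others) == 1:
            result["type"] = others[0]
            result["nullable"] = True
    return result
```

Under these assumptions the two forms round-trip, which is the sense in which the array form and `nullable` are functionally the same and differ only in syntax.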

|

Another reason for supporting @handrews' suggestion is that despite commentary/opinion otherwise on whether the v2.0 spec formally permits this or not, the real world continues to intrude. Companies like SAP (see http://api.sap.com/) produce exactly that construct in their v2.0 output. They expect tool providers to consume it because:

|

|

I can confirm that the first three bullet points in @RobJDean185's comment led to our use of two- and three-element array values for `type`. The fourth bullet point actually didn't play a role 😄 Once we support publishing OpenAPI 3.0.0 descriptions we'll of course strictly use single string values for `type`. In Swagger 2.0 descriptions we also use

(e.g. for data types DECFLOAT34, or NUMBER(...) with precision greater than 17) This might be an addition to @handrews' proposal: also allow (a permutation of) this special three-element array for numeric data types. |

|

We use the following script to convert nullable |

|

This would be a very helpful step towards aligning OpenAPI and JSON Schema. Can we also confirm that If we say that null values are valid by default, it's another step towards aligning OAS and JSON Schema, and it should make the transition to the new 2-element array syntax that much easier. With today's syntax, you can still disallow null values by specifying So the translation would be:

Correct? |

|

... Just revisited the spec and I see that

This has implications for This is a subtle point that is probably going to trip up some API designers. See #1368 for an example. Maybe there's a way we can clarify this in the spec. |

|

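@tedepstein if you have a keyword that can possibly fail validation by being absent, that is very, very bad. The empty schema `{}` must always pass. Is this really the current behavior? Because if so that is a conceptually huge (if hopefully very rare in practice) divergence from JSON Schema.

`nullable` is a fatally flawed keyword and the single biggest headache we have for interoperability. Breaking the empty schema is so fundamental... I assume the only reason this works is that people either have never implemented it accurately or just very rarely use `null`.

If there is any wiggle room in the wording of the spec, the only way out of this that I can think of is to define the default behavior in terms of `type`. Namely:

* If `type` is present with any value, `nullable` defaults to `false`
* If `type` is absent, `nullable` defaults to `true`

I wonder if any implementations effectively do that anyway. |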
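As a small illustration of the idea of defining the `nullable` default in terms of `type`, here is a hedged Python sketch. The helper name `effective_nullable` is hypothetical, schemas are assumed to be plain dicts, and this only models the default rule floated in this comment, not anything the spec currently states.

```python
def effective_nullable(schema: dict) -> bool:
    """Resolve `nullable` using a type-dependent default.

    - An explicit `nullable` always wins.
    - Otherwise, `nullable` defaults to False when `type` is present
      and to True when `type` is absent.
    """
    if "nullable" in schema:
        return bool(schema["nullable"])
    return "type" not in schema


# The empty schema keeps accepting null under this rule,
# while a typed schema still rejects it unless it opts in.
empty_schema_allows_null = effective_nullable({})
typed_schema_allows_null = effective_nullable({"type": "string"})
opted_in_allows_null = effective_nullable({"type": "string", "nullable": True})
```

The point of the sketch is only that the empty schema `{}` would continue to pass every instance, including `null`, if the default were resolved this way.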

Totally.

I tend to think that this problem (and others) haven't surfaced very often because people rarely do message schema validation in actual API implementations and client libraries. The mainstream code generators don't implement it. I don't know if there are open source libraries that implement OAS-compliant schema validation; and if there are, they may not be widely used. Which is kind of unfortunate, really. A missed opportunity.

That's really what I was hoping for. Having a keyword with different default values, depending on another keyword, is definitely odd. But in this case, it would be much better than the alternative. Strictly speaking, this would be a breaking change. But maybe the TSC will have an opinion about this, or some insight into the original intent.

If anyone can point to any OpenAPI-compliant schema validators in the wild, that would help. |

|

http://OpenAPI.tools/ plenty on there.

--

Phil Sturgeon

@philsturgeon

On Apr 29, 2019, at 07:09, Ted Epstein wrote:

@tedepstein if you have a keyword that can possibly fail validation by being absent, that is very, very bad. The empty schema {} must always pass. Is this really the current behavior? Because if so that is a conceptually huge (if hopefully very rare in practice) divergence from JSON Schema.

nullable is a fatally flawed keyword and the single biggest headache we have for interoperability. Breaking the empty schema is so fundamental...

Totally. nullable throws a monkey wrench into the whole JSON Schema paradigm. It's the only keyword that expands the allowed range of values. As such it's neither an assertion, nor an annotation, nor an applicator. It's something else entirely.

I assume the only reason this works is that people either have never implemented it accurately or just very rarely use null.

I tend to think that this problem (and others) haven't surfaced very often because people rarely do schema validation. The mainstream code generators don't implement it. I don't know if there are open source libraries that implement OAS-compliant schema validation; and if there are, they may not be widely used.

If there is any wiggle room in the wording of the spec, the only way out of this that I can think of is to define the default behavior in terms of type. Namely:

If type is present with any value, nullable defaults to false

If type is absent, nullable defaults to true

That's really what I was hoping for. Having a keyword with different default values, depending on another keyword, is definitely odd. But in this case, it would be much better than the alternative.

Strictly speaking, it would be a breaking change. But maybe the TSC will have an opinion about this, or some insight into the original intent.

I wonder if any implementations effectively do that anyway.

If anyone can point to any OpenAPI-compliant schema validators in the wild, that would help.


|

|

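Thanks, @philsturgeon. @handrews, I think that answers our question. Assuming at least some of those message validators follow the spec exactly, changing `nullable` to a different default would be a breaking change. But allowing the 2-element array as you've suggested, and possibly deprecating `nullable`, would not be. |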

|

I thought the idea was to add alternativeSchemas in 3.1 to avoid needing to worry about fixing any of this, then 4.0 kicks out Schema and makes alternativeSchema the new Schema.

Instead of trying to ad hoc fix single discrepancies between OpenAPI Schema Objects and the already out of date versions of JSON Schema it was roughly based on, the idea as I understood it was to get rid of OpenAPI Schema Objects entirely and let JSON Schema, XML Schema, Protobuf, etc take over the domain model.

--

Phil Sturgeon

@philsturgeon

On Apr 29, 2019, at 12:18, Ted Epstein wrote:

Thanks, @philsturgeon .

@handrews , I think that answers our question. Assuming at least some of those message validators follow the spec exactly, changing nullable to a different default would be a breaking change.

But allowing the 2-element array as you've suggested, and possibly deprecating nullable, would not be.


|

|

@philsturgeon there's also #1766 |

|

Why can't we just add a |

|

@whitlockjc are you aware of how normal JSON Schema handles this? |

|

I mean, that's an option, but why the excessive resistance to compatibility? |


|

Here is the proposal to clarify |

* Addressing #1389, and clearing the way for PRs #2046 and #1977. This adds a formal proposal to clarify the semantics of nullable, providing the necessary background and links to related resources.
* Corrected table formatting.
* Minor tweaks and corrections.
* Correct Change Log heading.
* Cleaned up notes about translation to JSON Schema and `*Of` inheritance semantics.
* Fix Change Log heading in the proposal template.
* Snappy answers to stupid questions.
* Change single-quote 'null' to double-quote "null". Thanks, @handrews, for the review.
* Clarified the proposed definition of nullable. Somehow in our collaborative editing, we neglected to state that `nullable` adds `"null"` to the set of allowed types. We just said that it "expands" the `type` value, but didn't state the obvious (to us) manner of said expansion. Correcting that oversight in this commit.
* Corrected the alternative, heavy-handed interpretation of nullable.
* Added more explicit detail about the primary use case.
* Added a more complete explanation of the problems created by disallowing nulls by default.
* Added issue #2057 - interactions between nullable and default value.

|

This was fixed in #1977 so we can close this. |

I understand that fully supporting `type` arrays is a challenge for a variety of reasons. I would like to suggest a compromise that would be functionally identical to `nullable` but compatible with JSON Schema:

* `type` MUST be a string or a two-element array with unique items
* If it is a two-element array, one of the items MUST be `"null"`

The only way in which this is inherently more difficult to implement than `nullable` is that parsing `type` becomes slightly harder. The functionality, validation outcomes, code generation, etc. should be identical.
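To give a sense of how small the extra parsing burden is, here is a minimal Python sketch of a check for the restricted `type` form described in this issue. The function name `is_allowed_type` is hypothetical, and the reading that one of the two array items must be `"null"` is my interpretation of the rule rather than spec text.

```python
def is_allowed_type(value: object) -> bool:
    """Check the restricted form of `type`.

    Allowed: a single string, or a list of exactly two distinct
    strings where one of them is "null".
    """
    if isinstance(value, str):
        return True
    if isinstance(value, list) and len(value) == 2:
        first, second = value
        if not (isinstance(first, str) and isinstance(second, str)):
            return False
        return first != second and "null" in (first, second)
    return False
```

A tool that already understands `nullable` could run a check like this and then treat `["string", "null"]` exactly as it treats `type: string` with `nullable: true`; general unions such as `["string", "integer"]` would still be rejected.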