Added an option to use zero-copy marshaller for the gRPC read operation #77

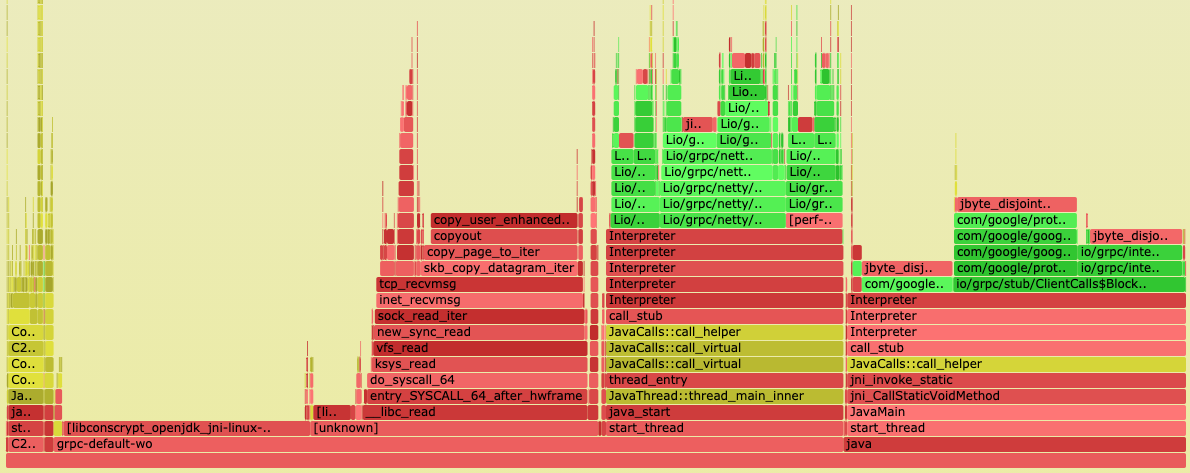

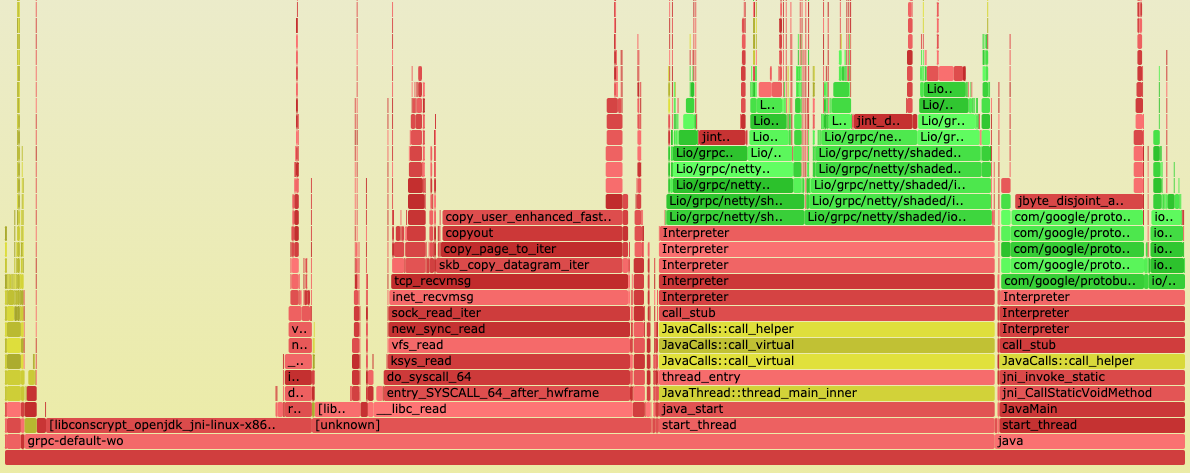

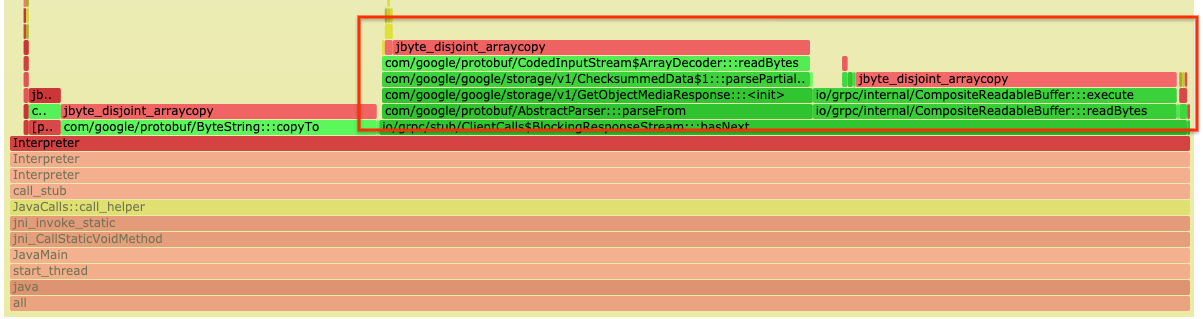

The gRPC client benchmark now has an option to use a zero-copy marshaller. It builds on a recent protobuf optimization, available since protobuf-java 1.36.0, and a new gRPC feature (grpc/grpc-java#8102), which will be available from grpc-java 1.39.0. This is expected to eliminate two memory copies in the protobuf message deserialization step.
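To illustrate what a zero-copy read saves, here is a minimal sketch using only `java.nio`. It is not the grpc-java or protobuf-java API and not the code in this PR: the class `ZeroCopySketch`, its methods, and the length-prefixed frame layout are all hypothetical, chosen only to contrast a copying read with one that aliases the receive buffer.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hypothetical illustration only; the frame layout (4-byte big-endian length
// followed by the payload) is an assumption, not the gRPC wire format.
class ZeroCopySketch {

    /** Copying path: the payload is copied out of the receive buffer into a new array. */
    public static byte[] readCopy(ByteBuffer frame) {
        ByteBuffer view = frame.duplicate();   // independent position, shared memory
        int len = view.getInt();
        byte[] payload = new byte[len];
        view.get(payload);                     // this is the memory copy
        return payload;
    }

    /** Zero-copy path: returns a read-only view that shares memory with the receive buffer. */
    public static ByteBuffer readZeroCopy(ByteBuffer frame) {
        ByteBuffer view = frame.duplicate();
        int len = view.getInt();
        view.limit(view.position() + len);
        return view.slice().asReadOnlyBuffer(); // no bytes are copied
    }

    /** Builds a frame holding the UTF-8 bytes of the given string. */
    public static ByteBuffer frameOf(String s) {
        byte[] bytes = s.getBytes(StandardCharsets.UTF_8);
        ByteBuffer frame = ByteBuffer.allocate(4 + bytes.length);
        frame.putInt(bytes.length).put(bytes);
        frame.flip();
        return frame;
    }

    public static void main(String[] args) {
        ByteBuffer frame = frameOf("hello");
        byte[] copied = readCopy(frame);
        ByteBuffer aliased = readZeroCopy(frame);

        // Overwrite the first payload byte in the receive buffer.
        frame.put(4, (byte) 'J');

        // The copy is unaffected; the aliased view observes the change.
        System.out.println(new String(copied, StandardCharsets.UTF_8));
        System.out.println(StandardCharsets.UTF_8.decode(aliased.duplicate()));
    }
}
```

The trade-off is lifetime: because the aliased view shares memory with the receive buffer, that buffer must not be reused or released while the view is still being read.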