Controller Warning Slack Notifier #421



eventbroadcaster/notifiersink.go

Outdated

    }

    if err = s.parseEventToNotifier(event, dis); err != nil {
        fmt.Println(err)


Is this expected? It prints the error to standard output with no formatting, whereas I would expect it to be handled by a logger here.


Definitely not, it slipped by me, thank you for noticing

I would still like to log this error, though not there: probably upstream, in the NotifierSink interface

I'll do another proposal

edit: never mind this is the NotifierSink interface, I'll add a logger there


Final word:

logger has been added almost everywhere, to send errors from NotifierSink and warnings from the SlackNotifier

        return
    }

    func (s *NotifierSink) Create(event *corev1.Event) (*corev1.Event, error) {


What happens when this function returns an error? Does it retry or stop here? We'll likely hit this case if the username stored in the disruption status is invalid (for instance, when a disruption is created by something other than a human, like a workflow).


Nothing happens: the error is generated in the Slack notifier and propagates back to the NotifierSink.Create function, which simply absorbs it (the error from parseEventToNotifier is ignored, because returning it would generate an "Event Rejected" error log)


Final implemented behavior:

- if username isn't an email address, nothing happens

- if username is an email address, but no slack user is found, a warning is logged

- if username is an email address and a slack user is found, notification is sent
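The three cases listed above can be sketched as a small decision function. This is an illustration under stated assumptions, not the merged implementation: `decide`, `lookupFunc` and the `Outcome` values are hypothetical names, and the standard library's `net/mail.ParseAddress` is used as one possible way to check whether the username is an email address (the thread does not say which check the PR uses).

```go
package main

import (
	"fmt"
	"net/mail"
)

// Outcome names the three branches described in the review thread.
type Outcome string

const (
	Skipped  Outcome = "skipped"  // username is not an email address
	Warned   Outcome = "warned"   // email address, but no Slack user found
	Notified Outcome = "notified" // email address and Slack user found
)

// lookupFunc is a hypothetical hook resolving an email to a Slack user ID.
type lookupFunc func(email string) (userID string, found bool)

// decide returns which branch applies to the given username.
func decide(username string, lookup lookupFunc) Outcome {
	addr, err := mail.ParseAddress(username)
	if err != nil {
		// Not an email address (for example a workflow identity): do nothing.
		return Skipped
	}
	if _, found := lookup(addr.Address); !found {
		// A real notifier would log a warning here.
		return Warned
	}
	// A real notifier would send the Slack DM here.
	return Notified
}

func main() {
	// In-memory directory standing in for a Slack user lookup.
	directory := map[string]string{"alice@example.com": "U123"}
	lookup := func(email string) (string, bool) {
		id, ok := directory[email]
		return id, ok
	}
	for _, u := range []string{"workflow-runner", "bob@example.com", "alice@example.com"} {
		fmt.Println(u, "->", decide(u, lookup))
	}
}
```

Note that `mail.ParseAddress` also accepts forms such as `Alice <alice@example.com>`, so a stricter check may be preferable if usernames are expected to be bare addresses.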

Co-authored-by: Joris Bonnefoy <joris.bonnefoy@datadoghq.com>

Devatoria

left a comment



LGTM, the last remaining thing would be to add a few lines about this feature in the features doc maybe? https://github.com/DataDog/chaos-controller/blob/main/docs/features.md

This feature is very useful, thanks for that! I was wondering if you are planning to add support for HTTP sinks. I implemented something similar in kube-monkey a while back, which works with any HTTP collector, including Slack, since the notification can be treated as a simple REST call.

Devatoria

left a comment



2 small nits in doc otherwise LGTM

Co-authored-by: Joris Bonnefoy <joris.bonnefoy@datadoghq.com>

Thank you for the positive feedback!

What does this PR do?

Please briefly describe your changes as well as the motivation behind them:

This PR creates a Notifier interface to send Kubernetes events to the Slack API in a useful format. It lets us leverage the Kubernetes event creation mechanisms (anti-spam, lifecycle integration, tooling, etc.) as a way to notify users about unexpected behaviors in their disruption, directly as a Slack DM.

Currently, users have little to no information when a disruption goes wrong in a controlled way (stuck in removal, no target found, etc.). With this PR, any warning event from the controller (which we generate ourselves and have total control over) will be sent to the user as a Slack DM, along with information about the disruption (where to find it, number of targets, etc.), so they quickly know that they have to investigate and where to start.

Code Quality Checklist

Testing

- unit tests or end-to-end tests.

- unit tests or end-to-end tests.

- NOOP: warning to assert the warning was sent to the console.

- plain1b.us1.staging.dog at the release of this PR so you can try it there. There will be a dedicated branch in chaos-k8s-ressources to observe the differences in deployment files.

Diagrams

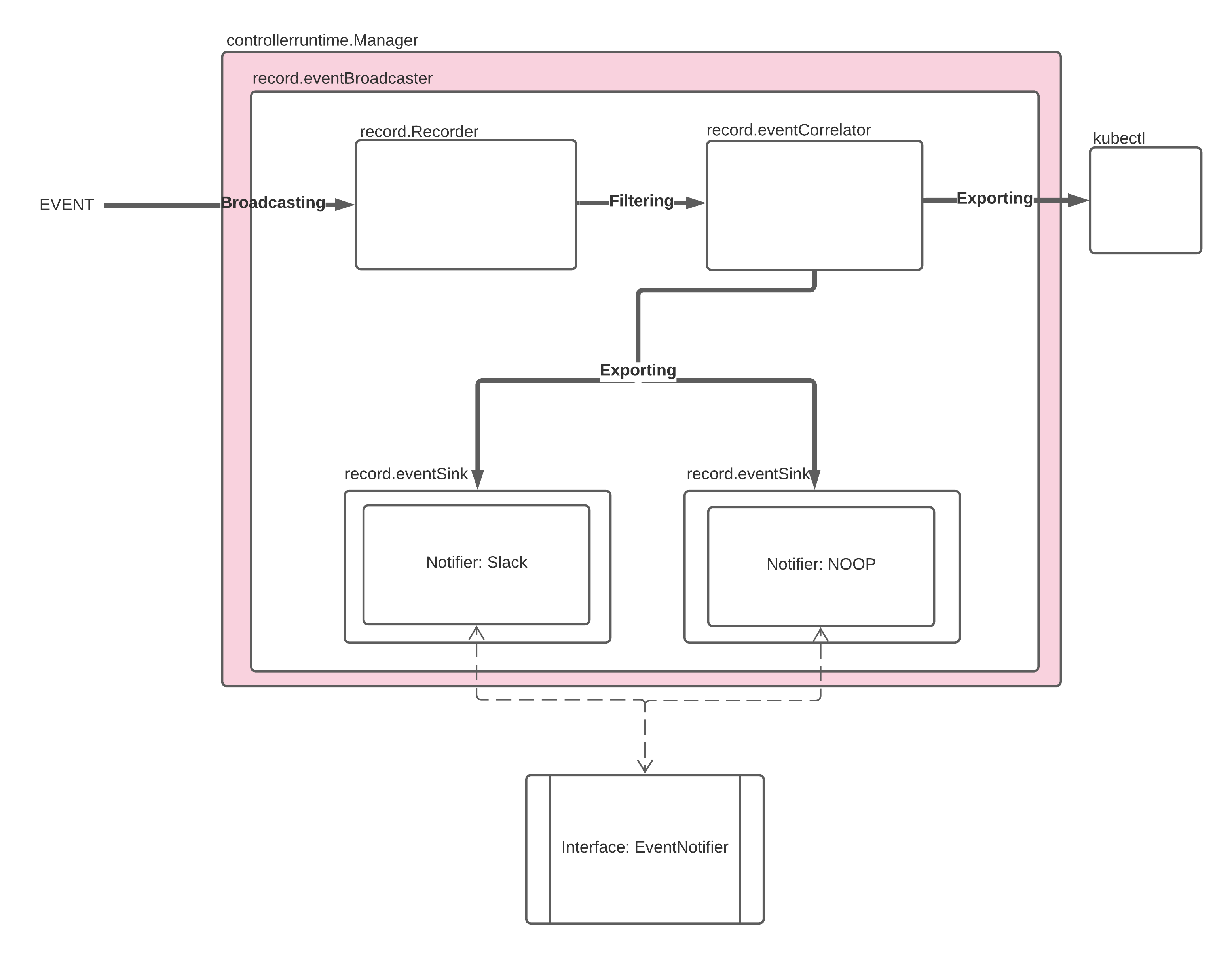

Notifier Class/Event Lifecycle Diagram:

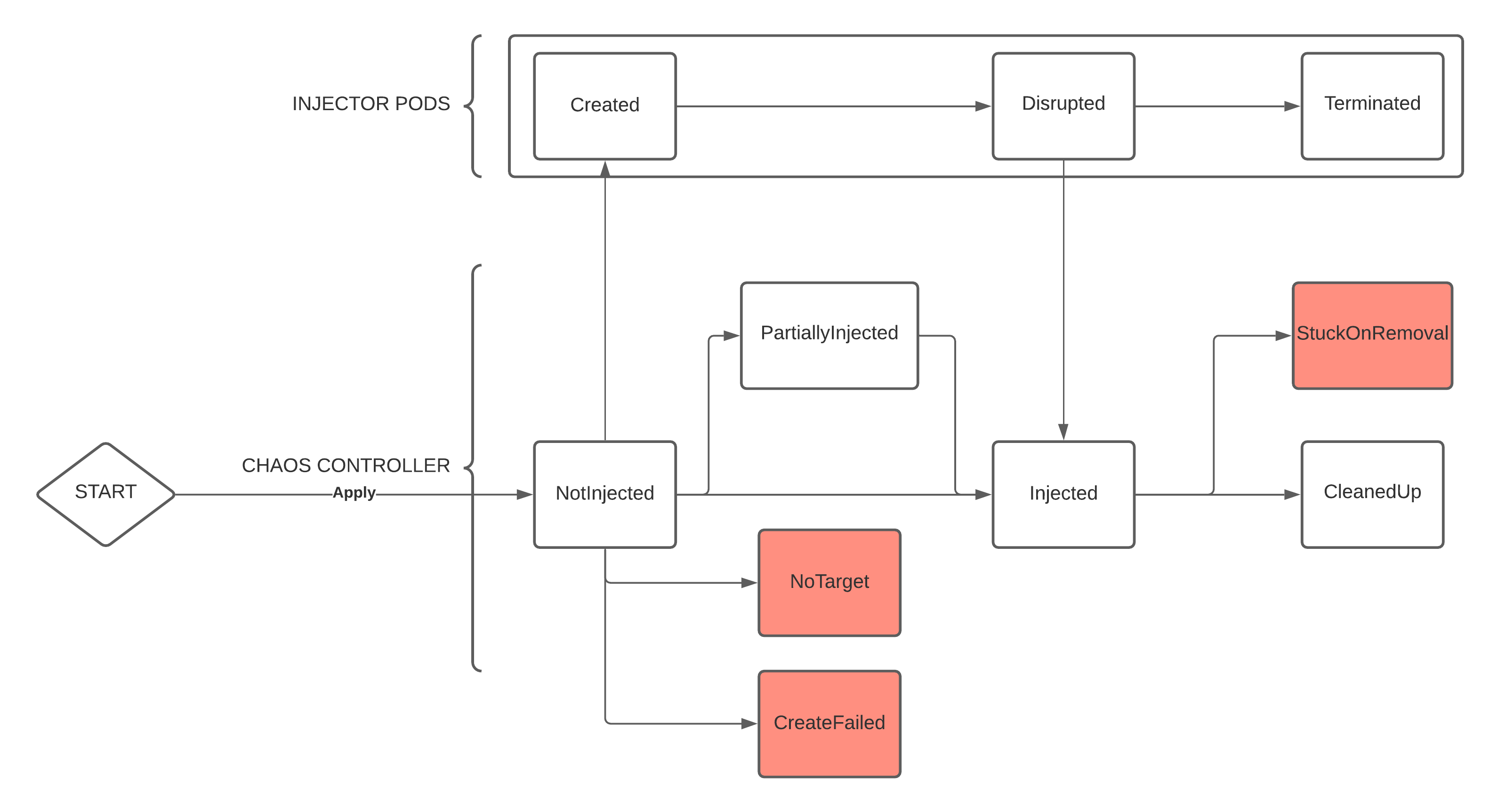

Controller Events Lifecycle (not exhaustive):