
Yes, the embeddings are sparse because of the ReLU at the end of the model. The sparsity doesn't make them worse, although I did remove that ReLU in the development branch I'm working on. Don't worry about it.
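A final ReLU maps every negative component to exactly zero, so any dimension that the last linear layer pushes below zero shows up as a zero in the plot. Below is a minimal NumPy sketch of that effect. It assumes a 256-dimensional embedding, random pre-activations with a negative mean, and an L2 normalization after the ReLU. All of these are hypothetical stand-ins, not the project's actual weights or code.

```python
import numpy as np

def relu(x: np.ndarray) -> np.ndarray:
    # Zero out every negative component and keep positive ones unchanged
    return np.maximum(x, 0.0)

def sparsity(v: np.ndarray, tol: float = 0.0) -> float:
    # Fraction of components whose magnitude is at most `tol`
    return float(np.mean(np.abs(v) <= tol))

rng = np.random.default_rng(0)
# Hypothetical pre-activation output of a final linear layer (256 dims assumed)
pre = rng.normal(loc=-0.5, scale=1.0, size=256)
embed = relu(pre)
# Hypothetical L2 normalization step: it rescales, but zeros stay zero
embed = embed / np.linalg.norm(embed)

print(f"zeros before ReLU: {sparsity(pre):.2f}")
print(f"zeros after ReLU:  {sparsity(embed):.2f}")
```

Running the same `sparsity` helper on a real embedding gives a single number that can be compared across models, which is easier to judge than eyeballing the plots.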

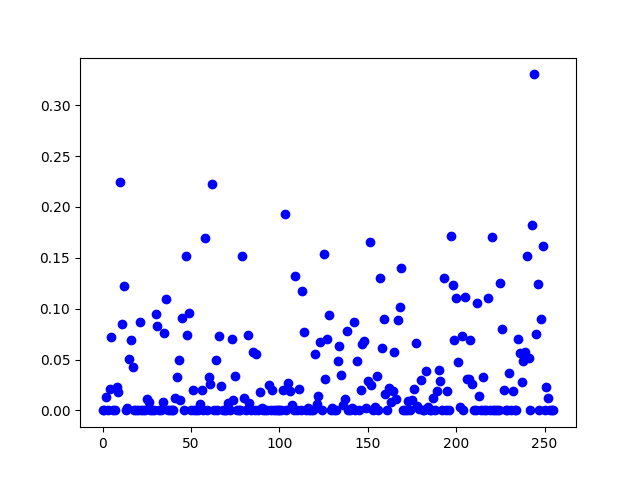

I plot the embedding vector and it's mostly zeros. Is this expected?

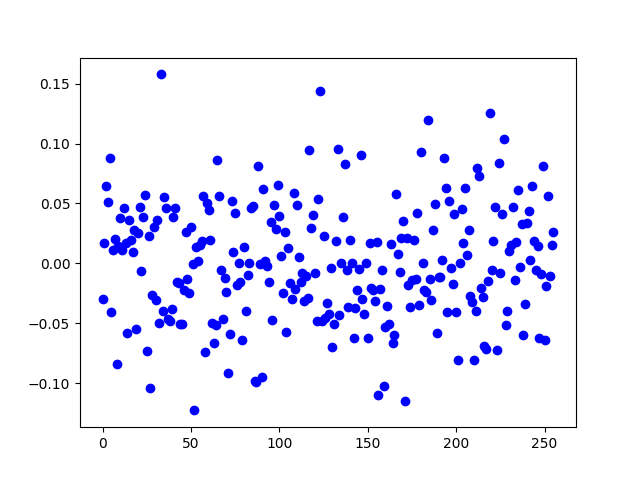

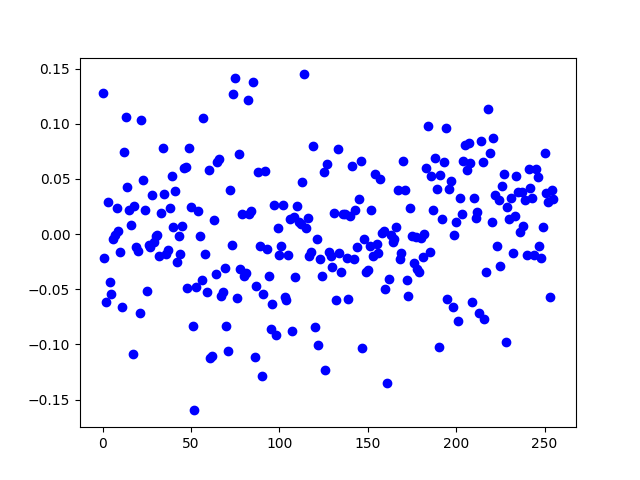

I want to use the embedding in another project. I also plotted AutoVC's example embeddings, and those seem to be distributed significantly better.

Embedding from this project

Embeddings from AutoVC

And here's the test code:

Am I doing something wrong? Thanks.
