diff --git a/autogen/agentchat/conversable_agent.py b/autogen/agentchat/conversable_agent.py

index 79a3c0d0156f..674c8b9248d7 100644

--- a/autogen/agentchat/conversable_agent.py

+++ b/autogen/agentchat/conversable_agent.py

@@ -1723,7 +1723,7 @@ def check_termination_and_human_reply(

sender_name = "the sender" if sender is None else sender.name

if self.human_input_mode == "ALWAYS":

reply = self.get_human_input(

- f"Provide feedback to {sender_name}. Press enter to skip and use auto-reply, or type 'exit' to end the conversation: "

+ f"Replying as {self.name}. Provide feedback to {sender_name}. Press enter to skip and use auto-reply, or type 'exit' to end the conversation: "

)

no_human_input_msg = "NO HUMAN INPUT RECEIVED." if not reply else ""

# if the human input is empty, and the message is a termination message, then we will terminate the conversation

@@ -1836,7 +1836,7 @@ async def a_check_termination_and_human_reply(

sender_name = "the sender" if sender is None else sender.name

if self.human_input_mode == "ALWAYS":

reply = await self.a_get_human_input(

- f"Provide feedback to {sender_name}. Press enter to skip and use auto-reply, or type 'exit' to end the conversation: "

+ f"Replying as {self.name}. Provide feedback to {sender_name}. Press enter to skip and use auto-reply, or type 'exit' to end the conversation: "

)

no_human_input_msg = "NO HUMAN INPUT RECEIVED." if not reply else ""

# if the human input is empty, and the message is a termination message, then we will terminate the conversation
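The two hunks above change only the prompt text shown when `human_input_mode="ALWAYS"`. The sketch below rebuilds that string from plain names so the new "Replying as ..." prefix can be checked in isolation. `human_input_prompt` is a hypothetical helper, not AutoGen code, and it takes a sender name string where the real method reads `sender.name` from an agent object.

```python
from typing import Optional


def human_input_prompt(agent_name: str, sender_name: Optional[str]) -> str:
    """Rebuild the ALWAYS-mode prompt text from the patched f-string (hypothetical helper)."""
    # Same fallback as the patched code: no sender means "the sender".
    sender = "the sender" if sender_name is None else sender_name
    return (
        f"Replying as {agent_name}. Provide feedback to {sender}. "
        "Press enter to skip and use auto-reply, or type 'exit' to end the conversation: "
    )


print(human_input_prompt("user_proxy", "assistant"))
```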

diff --git a/autogen/oai/openai_utils.py b/autogen/oai/openai_utils.py

index 5c02f692d7e6..df70e01ff7df 100644

--- a/autogen/oai/openai_utils.py

+++ b/autogen/oai/openai_utils.py

@@ -33,6 +33,9 @@

# gpt-4

"gpt-4": (0.03, 0.06),

"gpt-4-32k": (0.06, 0.12),

+ # gpt-4o-mini

+ "gpt-4o-mini": (0.000150, 0.000600),

+ "gpt-4o-mini-2024-07-18": (0.000150, 0.000600),

# gpt-3.5 turbo

"gpt-3.5-turbo": (0.0005, 0.0015), # default is 0125

"gpt-3.5-turbo-0125": (0.0005, 0.0015), # 16k

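The new `gpt-4o-mini` rows appear to follow the same convention as the existing ones: a tuple of (input, output) prices in USD per 1,000 tokens, which works out to $0.15 and $0.60 per million tokens. The sketch below assumes that convention; `PRICE_PER_1K` and `estimate_cost` are hypothetical names, not the module's API.

```python
# Hypothetical local copy of the two new rows; each tuple is assumed to be
# (input price, output price) in USD per 1,000 tokens.
PRICE_PER_1K = {
    "gpt-4o-mini": (0.000150, 0.000600),
    "gpt-4o-mini-2024-07-18": (0.000150, 0.000600),
}


def estimate_cost(model: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Estimate the USD cost of one call from per-1K-token prices."""
    input_price, output_price = PRICE_PER_1K[model]
    return (prompt_tokens * input_price + completion_tokens * output_price) / 1000


print(f"{estimate_cost('gpt-4o-mini', 1000, 500):.6f}")  # 0.000450
```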
diff --git a/autogen/token_count_utils.py b/autogen/token_count_utils.py

index 365285e09551..220007a2bd12 100644

--- a/autogen/token_count_utils.py

+++ b/autogen/token_count_utils.py

@@ -36,6 +36,8 @@ def get_max_token_limit(model: str = "gpt-3.5-turbo-0613") -> int:

"gpt-4-vision-preview": 128000,

"gpt-4o": 128000,

"gpt-4o-2024-05-13": 128000,

+ "gpt-4o-mini": 128000,

+ "gpt-4o-mini-2024-07-18": 128000,

}

return max_token_limit[model]
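The hunk maps both `gpt-4o-mini` names to a 128,000-token limit. Assuming that limit is the total context window shared by the prompt and the completion, a caller can check whether a request fits before sending it. `MAX_TOKEN_LIMIT` and `fits_context` below are hypothetical illustrations, not functions in `token_count_utils.py`.

```python
from typing import Dict

# Hypothetical excerpt of the limits table from the hunk above.
MAX_TOKEN_LIMIT: Dict[str, int] = {
    "gpt-4o": 128000,
    "gpt-4o-mini": 128000,
    "gpt-4o-mini-2024-07-18": 128000,
}


def fits_context(model: str, prompt_tokens: int, max_completion_tokens: int) -> bool:
    """Return True if the prompt plus the reserved completion budget fits the window."""
    # Like the indexed lookup in get_max_token_limit, an unknown model raises KeyError.
    limit = MAX_TOKEN_LIMIT[model]
    return prompt_tokens + max_completion_tokens <= limit


print(fits_context("gpt-4o-mini", 120000, 8000))  # True
print(fits_context("gpt-4o-mini", 120000, 8001))  # False
```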

diff --git a/autogen/version.py b/autogen/version.py

index 93824aa1f87c..c4feccf559b4 100644

--- a/autogen/version.py

+++ b/autogen/version.py

@@ -1 +1 @@

-__version__ = "0.2.32"

+__version__ = "0.2.33"

diff --git a/notebook/agentchat_RetrieveChat_mongodb.ipynb b/notebook/agentchat_RetrieveChat_mongodb.ipynb

index 18494e28401d..0f24cf16579f 100644

--- a/notebook/agentchat_RetrieveChat_mongodb.ipynb

+++ b/notebook/agentchat_RetrieveChat_mongodb.ipynb

@@ -29,7 +29,7 @@

":::\n",

"````\n",

"\n",

- "Ensure you have a MongoDB Atlas instance with Cluster Tier >= M30."

+ "Ensure you have a MongoDB Atlas instance with Cluster Tier >= M10. Read more about cluster support in the [Atlas Search index documentation](https://www.mongodb.com/docs/atlas/atlas-search/manage-indexes/#create-and-manage-fts-indexes)."

]

},

{

diff --git a/notebook/agentchat_microsoft_fabric.ipynb b/notebook/agentchat_microsoft_fabric.ipynb

index e4c2a7119cf9..97cab73b4eaa 100644

--- a/notebook/agentchat_microsoft_fabric.ipynb

+++ b/notebook/agentchat_microsoft_fabric.ipynb

@@ -113,32 +113,36 @@

}

],

"source": [

- "import types\n",

+ "from synapse.ml.mlflow import get_mlflow_env_config\n",

"\n",

- "import httpx\n",

- "from synapse.ml.fabric.credentials import get_openai_httpx_sync_client\n",

"\n",

- "import autogen\n",

+ "def get_config_list():\n",

+ " mlflow_env_configs = get_mlflow_env_config()\n",

+ " access_token = mlflow_env_configs.driver_aad_token\n",

+ " prebuilt_AI_base_url = mlflow_env_configs.workload_endpoint + \"cognitive/openai/\"\n",

"\n",

- "http_client = get_openai_httpx_sync_client()\n",

- "http_client.__deepcopy__ = types.MethodType(\n",

- " lambda self, memo: self, http_client\n",

- ") # https://microsoft.github.io/autogen/docs/topics/llm_configuration#adding-http-client-in-llm_config-for-proxy\n",

+ " config_list = [\n",

+ " {\n",

+ " \"model\": \"gpt-4o\",\n",

+ " \"api_key\": access_token,\n",

+ " \"base_url\": prebuilt_AI_base_url,\n",

+ " \"api_type\": \"azure\",\n",

+ " \"api_version\": \"2024-02-01\",\n",

+ " },\n",

+ " ]\n",

"\n",

- "config_list = [\n",

- " {\n",

- " \"model\": \"gpt-4o\",\n",

- " \"http_client\": http_client,\n",

- " \"api_type\": \"azure\",\n",

- " \"api_version\": \"2024-02-01\",\n",

- " },\n",

- "]\n",

+ " # Set temperature, timeout and other LLM configurations\n",

+ " llm_config = {\n",

+ " \"config_list\": config_list,\n",

+ " \"temperature\": 0,\n",

+ " \"timeout\": 600,\n",

+ " }\n",

+ " return config_list, llm_config\n",

+ "\n",

+ "config_list, llm_config = get_config_list()\n",

"\n",

- "# Set temperature, timeout and other LLM configurations\n",

- "llm_config = {\n",

- " \"config_list\": config_list,\n",

- " \"temperature\": 0,\n",

- "}"

+ "assert len(config_list) > 0\n",

+ "print(\"models to use: \", [config[\"model\"] for config in config_list])"

]

},

{

@@ -300,6 +304,8 @@

}

],

"source": [

+ "import autogen\n",

+ "\n",

"# create an AssistantAgent instance named \"assistant\"\n",

"assistant = autogen.AssistantAgent(\n",

" name=\"assistant\",\n",

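The rewritten cell derives an Azure-style `base_url` from the Fabric workload endpoint and passes the AAD token as `api_key`. To make that wiring testable outside Fabric, the hypothetical `build_llm_config` below takes the token and endpoint as arguments instead of calling `get_mlflow_env_config()`. The placeholder values are illustrative only, and like the notebook it assumes the endpoint ends with a slash.

```python
from typing import Any, Dict, List, Tuple


def build_llm_config(
    access_token: str, workload_endpoint: str
) -> Tuple[List[Dict[str, Any]], Dict[str, Any]]:
    """Mirror get_config_list() but take the token and endpoint as arguments."""
    # Assumes workload_endpoint ends with "/", as the notebook's concatenation does.
    base_url = workload_endpoint + "cognitive/openai/"
    config_list = [
        {
            "model": "gpt-4o",
            "api_key": access_token,
            "base_url": base_url,
            "api_type": "azure",
            "api_version": "2024-02-01",
        }
    ]
    llm_config = {"config_list": config_list, "temperature": 0, "timeout": 600}
    return config_list, llm_config


# Placeholder values; inside Fabric these would come from get_mlflow_env_config().
config_list, llm_config = build_llm_config("placeholder-token", "https://example.invalid/")
print([config["model"] for config in config_list])  # ['gpt-4o']
```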
diff --git a/test/agentchat/contrib/test_gpt_assistant.py b/test/agentchat/contrib/test_gpt_assistant.py

index 6fc69097fc0d..7132cb72053b 100755

--- a/test/agentchat/contrib/test_gpt_assistant.py

+++ b/test/agentchat/contrib/test_gpt_assistant.py

@@ -28,6 +28,7 @@

filter_dict={

"api_type": ["openai"],

"model": [

+ "gpt-4o-mini",

"gpt-4o",

"gpt-4-turbo",

"gpt-4-turbo-preview",

diff --git a/test/agentchat/test_conversable_agent.py b/test/agentchat/test_conversable_agent.py

index 3d59980d7a1d..c0d37a7bd7a1 100755

--- a/test/agentchat/test_conversable_agent.py

+++ b/test/agentchat/test_conversable_agent.py

@@ -25,7 +25,13 @@

here = os.path.abspath(os.path.dirname(__file__))

-gpt4_config_list = [{"model": "gpt-4"}, {"model": "gpt-4-turbo"}, {"model": "gpt-4-32k"}, {"model": "gpt-4o"}]

+gpt4_config_list = [

+ {"model": "gpt-4"},

+ {"model": "gpt-4-turbo"},

+ {"model": "gpt-4-32k"},

+ {"model": "gpt-4o"},

+ {"model": "gpt-4o-mini"},

+]

@pytest.fixture

diff --git a/test/agentchat/test_function_call.py b/test/agentchat/test_function_call.py

index d3e174949b4b..0f1d4f909426 100755

--- a/test/agentchat/test_function_call.py

+++ b/test/agentchat/test_function_call.py

@@ -213,7 +213,7 @@ def test_update_function():

config_list_gpt4 = autogen.config_list_from_json(

OAI_CONFIG_LIST,

filter_dict={

- "tags": ["gpt-4", "gpt-4-32k", "gpt-4o"],

+ "tags": ["gpt-4", "gpt-4-32k", "gpt-4o", "gpt-4o-mini"],

},

file_location=KEY_LOC,

)
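The test now also selects configs tagged `gpt-4o-mini`. As a minimal sketch, assuming a config is kept when any entry in its `tags` list appears in the filter list (an approximation of how the `tags` filter behaves, not AutoGen's implementation), the selection looks like this; `filter_by_tags` is a hypothetical helper.

```python
from typing import Any, Dict, List


def filter_by_tags(config_list: List[Dict[str, Any]], wanted_tags: List[str]) -> List[Dict[str, Any]]:
    """Keep configs whose "tags" list shares at least one entry with wanted_tags."""
    wanted = set(wanted_tags)
    return [config for config in config_list if wanted & set(config.get("tags", []))]


configs = [
    {"model": "gpt-4o-mini", "tags": ["gpt-4o-mini"]},
    {"model": "gpt-35-turbo", "tags": ["gpt-3.5-turbo"]},
]
selected = filter_by_tags(configs, ["gpt-4", "gpt-4-32k", "gpt-4o", "gpt-4o-mini"])
print([config["model"] for config in selected])  # ['gpt-4o-mini']
```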

diff --git a/test/oai/test_client.py b/test/oai/test_client.py

index ea6f7ba7c5f7..443ec995de48 100755

--- a/test/oai/test_client.py

+++ b/test/oai/test_client.py

@@ -143,7 +143,9 @@ def test_customized_cost():

config.update({"price": [1000, 1000]})

client = OpenAIWrapper(config_list=config_list, cache_seed=None)

response = client.create(prompt="1+3=")

- assert response.cost >= 4 and response.cost < 10, "Due to customized pricing, cost should be > 4 and < 10"

+ assert (

+ response.cost >= 4

+ ), f"Due to customized pricing, cost should be >= 4. Message: {response.choices[0].message.content}"
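Why the upper bound was dropped: assuming the custom `price` is `[prompt, completion]` in USD per 1,000 tokens, `[1000, 1000]` charges exactly $1 per token, so `response.cost` equals the total token count. The prompt is fixed, but the model's reply to `1+3=` can vary in length, so a fixed upper bound such as `< 10` is fragile. The hypothetical helper and token counts below only illustrate the arithmetic.

```python
from typing import Sequence


def cost_with_custom_price(
    price_per_1k: Sequence[float], prompt_tokens: int, completion_tokens: int
) -> float:
    """Cost under an assumed [prompt, completion] per-1K-token custom price."""
    prompt_price, completion_price = price_per_1k
    return (prompt_tokens * prompt_price + completion_tokens * completion_price) / 1000


# Hypothetical counts: a 3-token prompt with replies of different lengths.
for completion_tokens in (1, 5, 20):
    print(completion_tokens, cost_with_custom_price([1000, 1000], 3, completion_tokens))
```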

@pytest.mark.skipif(skip, reason="openai>=1 not installed")

diff --git a/test/oai/test_utils.py b/test/oai/test_utils.py

index 96956d07d90d..fd81d3f9f548 100755

--- a/test/oai/test_utils.py

+++ b/test/oai/test_utils.py

@@ -55,8 +55,8 @@

{

"model": "gpt-35-turbo-v0301",

"tags": ["gpt-3.5-turbo", "gpt35_turbo"],

- "api_key": "111113fc7e8a46419bfac511bb301111",

- "base_url": "https://1111.openai.azure.com",

+ "api_key": "Your Azure OAI API Key",

+ "base_url": "https://deployment_name.openai.azure.com",

"api_type": "azure",

"api_version": "2024-02-01"

},

diff --git a/website/blog/2024-07-25-AgentOps/index.mdx b/website/blog/2024-07-25-AgentOps/index.mdx

new file mode 100644

index 000000000000..5c71279f2e03

--- /dev/null

+++ b/website/blog/2024-07-25-AgentOps/index.mdx

@@ -0,0 +1,70 @@

+---

+title: AgentOps, the Best Tool for AutoGen Agent Observability

+authors:

+ - areibman

+ - bboynton97

+tags: [LLM,Agent,Observability,AutoGen,AgentOps]

+---

+

+# AgentOps, the Best Tool for AutoGen Agent Observability


+## TL;DR

+* AutoGen® offers detailed multi-agent observability with AgentOps.

+* AgentOps offers the best experience for developers building with AutoGen in just two lines of code.

+* Enterprises can now trust AutoGen in production with detailed monitoring and logging from AgentOps.

+

+AutoGen is excited to announce an integration with AgentOps, the industry leader in agent observability and compliance. Back in February, [Bloomberg declared 2024 the year of AI Agents](https://www.bloomberg.com/news/newsletters/2024-02-15/tech-companies-bet-the-world-is-ready-for-ai-agents). And it's true! We've seen AI evolve from simplistic chatbots into agents that autonomously make decisions and complete tasks on a user's behalf.

+

+However, as with most new technologies, companies and engineering teams can be slow to develop processes and best practices. One part of the agent workflow we're betting on is observability. Letting your agents run wild might work for a hobby project, but if you're building enterprise-grade agents for production, it's crucial to understand where your agents are succeeding and failing. Observability isn't just an option; it's a requirement.

+

+As agents grow more powerful and complex, you should increasingly view them as tools that augment your team's capabilities. Agents will take on more prominent roles and responsibilities, take action, and provide immense value. However, this means you must monitor your agents the same way a good manager maintains visibility over their team. AgentOps offers developers observability for debugging and detecting failures, with all of your agents' key metrics in one easy-to-read dashboard. Monitoring is more than just a “nice to have”; it's a critical component for any team looking to build and scale AI agents.

+

+## What is Agent Observability?

+

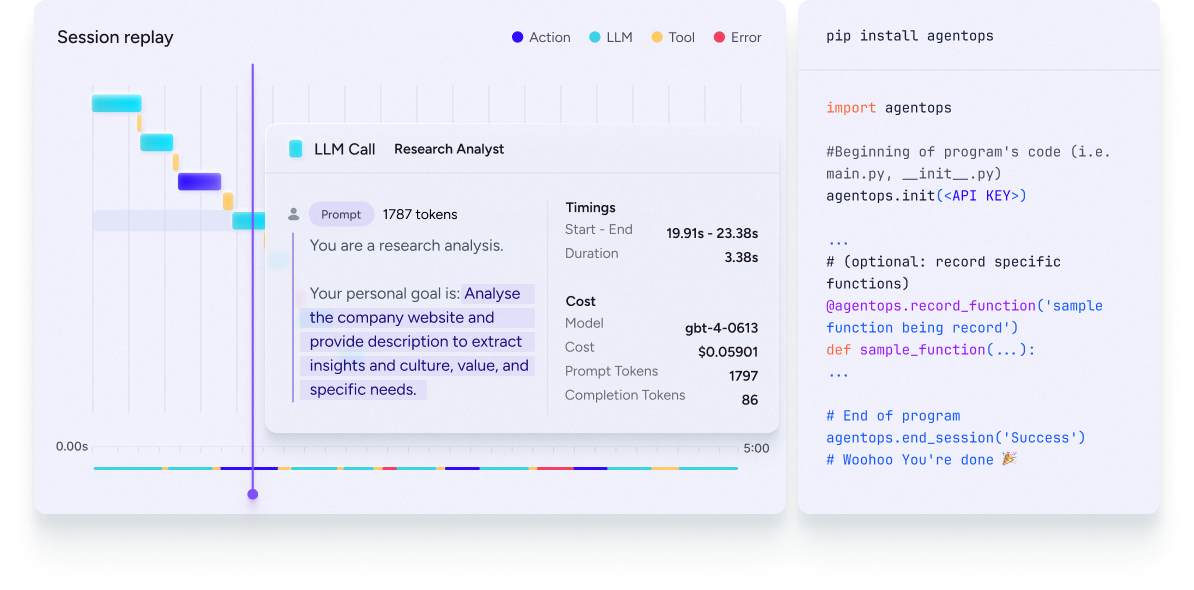

+Agent observability, in its most basic form, allows you to monitor, troubleshoot, and explain the actions of your agent during its operation. The ability to observe every detail of your agent's activity, down to the timestamp, lets you trace its actions precisely, identify areas for improvement, and understand the reasons behind any failures, which is key to effective debugging. Beyond improving diagnostic precision, this level of observability is integral to your system's reliability: it lets you identify and address issues before they spiral out of control. Observability isn't just about keeping things running smoothly and maximizing uptime; it's about strengthening your agent-based solutions.

+


+## Why AgentOps?

+

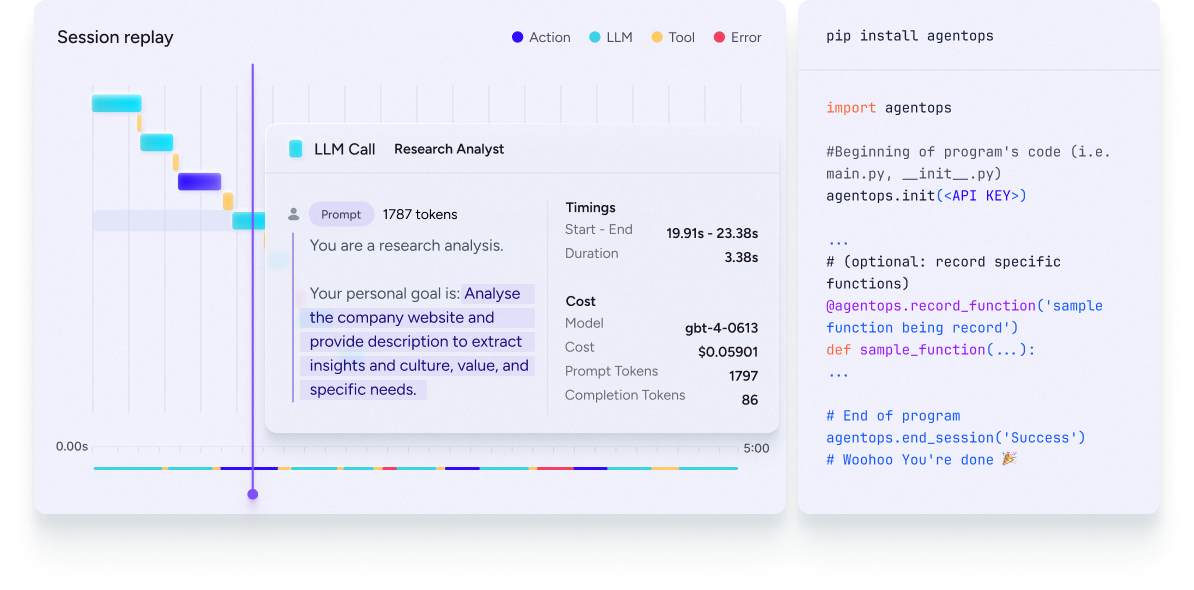

+AutoGen has simplified the process of building agents, yet we recognized the need for an easy-to-use, native tool for observability. We've previously discussed AgentOps, and now we're excited to partner with AgentOps as our official agent observability tool. Integrating AgentOps with AutoGen simplifies your workflow and boosts your agents' performance through clear observability, ensuring they operate optimally. For more details, check out our [AgentOps documentation](https://microsoft.github.io/autogen/docs/notebooks/agentchat_agentops/).

+


+Enterprises and enthusiasts trust AutoGen as the leader in building agents. Through our partnership with AgentOps, developers can now natively debug agents for efficiency and ensure compliance, with a comprehensive audit trail of all of their agents' activities. AgentOps lets you monitor LLM calls, costs, latency, agent failures, multi-agent interactions, tool usage, session-wide statistics, and more, all from one dashboard.

+

+By combining the agent-building capabilities of AutoGen with the observability tools of AgentOps, we're providing our users with a comprehensive solution that enhances agent performance and reliability. This collaboration means enterprises can confidently deploy AI agents in production environments, knowing they have the best tools to monitor, debug, and optimize their agents.

+

+The best part is that it only takes two lines of code. All you need to do is set `AGENTOPS_API_KEY` in your environment (get an API key at https://app.agentops.ai/account) and call `agentops.init()`:

+```python

+import os

+import agentops

+

+agentops.init(os.environ["AGENTOPS_API_KEY"])

+```

+

+## AgentOps's Features

+

+AgentOps includes all the functionality you need to ensure your agents are suitable for real-world, scalable solutions.

+


+* **Analytics Dashboard:** The AgentOps Analytics Dashboard allows you to configure and assign agents and automatically track the actions each agent takes, even when several run simultaneously. When used with AutoGen, AgentOps is automatically configured for multi-agent compatibility, allowing users to easily track multiple agents across runs. Instead of scrolling through terminal output, you get a superior user experience with an intuitive interface.

+* **Tracking LLM Costs:** Cost tracking is built into AgentOps and provides a rolling total, so developers can see and track their run costs and better predict future costs.

+* **Recursive Thought Detection:** One of the most frustrating aspects of agents is when they get trapped and perform the same task repeatedly for hours on end. AgentOps can identify when agents fall into infinite loops, ensuring efficiency and preventing wasteful computation.

+

+AutoGen users also have access to the following features in AgentOps:

+

+* **Replay Analytics:** Watch step-by-step agent execution graphs.

+* **Custom Reporting:** Create custom analytics on agent performance.

+* **Public Model Testing:** Test your agents against benchmarks and leaderboards.

+* **Custom Tests:** Run your agents against domain-specific tests.

+* **Compliance and Security:** Create audit logs and detect potential threats, such as profanity and leaks of Personally Identifiable Information.

+* **Prompt Injection Detection:** Identify potential code injection and secret leaks.

+

+## Conclusion

+

+By integrating AgentOps into AutoGen, we've given our users everything they need to build production-grade agents, improve them, and track their performance to ensure the agents do exactly what is needed. Without it, you're operating blindly, unable to tell where your agents are succeeding or failing. AgentOps provides the observability tools needed to monitor, debug, and optimize your agents for enterprise-level performance. It offers everything developers need to scale their AI solutions, from cost tracking to recursive thought detection.

+

+Did you find this note helpful? Would you like to share your thoughts, use cases, and findings? Please join our observability channel in the [AutoGen Discord](https://discord.gg/hXJknP54EH).

diff --git a/website/blog/authors.yml b/website/blog/authors.yml

index 0e023514465d..f9e7495c5f30 100644

--- a/website/blog/authors.yml

+++ b/website/blog/authors.yml

@@ -140,3 +140,15 @@ marklysze:

title: AI Freelancer

url: https://github.com/marklysze

image_url: https://github.com/marklysze.png

+

+areibman:

+ name: Alex Reibman

+ title: Co-founder/CEO at AgentOps

+ url: https://github.com/areibman

+ image_url: https://github.com/areibman.png

+

+bboynton97:

+ name: Braelyn Boynton

+ title: AI Engineer at AgentOps

+ url: https://github.com/bboynton97

+ image_url: https://github.com/bboynton97.png

diff --git a/website/docs/Use-Cases/agent_chat.md b/website/docs/Use-Cases/agent_chat.md

index c55b0d29d5d6..59156c0eb046 100644

--- a/website/docs/Use-Cases/agent_chat.md

+++ b/website/docs/Use-Cases/agent_chat.md

@@ -18,7 +18,7 @@ designed to solve tasks through inter-agent conversations. Specifically, the age

The figure below shows the built-in agents in AutoGen.

-We have designed a generic [`ConversableAgent`](../reference/agentchat/conversable_agent#conversableagent-objects)

+We have designed a generic [`ConversableAgent`](../reference/agentchat/conversable_agent.md#conversableagent-objects)

class for Agents that are capable of conversing with each other through the exchange of messages to jointly finish a task. An agent can communicate with other agents and perform actions. Different agents can differ in what actions they perform after receiving messages. Two representative subclasses are [`AssistantAgent`](../reference/agentchat/assistant_agent.md#assistantagent-objects) and [`UserProxyAgent`](../reference/agentchat/user_proxy_agent.md#userproxyagent-objects)

- The [`AssistantAgent`](../reference/agentchat/assistant_agent.md#assistantagent-objects) is designed to act as an AI assistant, using LLMs by default but not requiring human input or code execution. It could write Python code (in a Python coding block) for a user to execute when a message (typically a description of a task that needs to be solved) is received. Under the hood, the Python code is written by LLM (e.g., GPT-4). It can also receive the execution results and suggest corrections or bug fixes. Its behavior can be altered by passing a new system message. The LLM [inference](#enhanced-inference) configuration can be configured via [`llm_config`].

diff --git a/website/docs/tutorial/conversation-patterns.ipynb b/website/docs/tutorial/conversation-patterns.ipynb

index 7ea8f0bfa517..56004e3b3b81 100644

--- a/website/docs/tutorial/conversation-patterns.ipynb

+++ b/website/docs/tutorial/conversation-patterns.ipynb

@@ -1559,7 +1559,7 @@

"metadata": {},

"source": [

"The implementation of the nested chats handler makes use of the\n",

- "[`register_reply`](../reference/agentchat/conversable_agent/#register_reply)\n",

+ "[`register_reply`](/docs/reference/agentchat/conversable_agent/#register_reply)\n",

"method, which allows you to make extensive customization to\n",

" `ConversableAgent`. The GroupChatManager uses the same mechanism to implement the group chat.\n",

"\n",

@@ -1579,7 +1579,7 @@

"\n",

"In this chapter, we covered two-agent chat, sequential chat, group chat,\n",

"and nested chat patterns. You can compose these patterns like LEGO blocks to \n",

- "create complex workflows. You can also use [`register_reply`](../reference/agentchat/conversable_agent/#register_reply) to create new patterns.\n",

+ "create complex workflows. You can also use [`register_reply`](/docs/reference/agentchat/conversable_agent/#register_reply) to create new patterns.\n",

"\n",

"This is the last chapter on basic AutoGen concepts. \n",
"In the next chapter, we will give you some tips on what to do next."

+

+* **Analytics Dashboard:** The AgentOps Analytics Dashboard allows you to configure and assign agents and automatically track what actions each agent is taking simultaneously. When used with AutoGen, AgentOps is automatically configured for multi-agent compatibility, allowing users to track multiple agents across runs easily. Instead of a terminal-level screen, AgentOps provides a superior user experience with its intuitive interface.

+* **Tracking LLM Costs:** Cost tracking is natively set up within AgentOps and provides a rolling total. This allows developers to see and track their run costs and accurately predict future costs.

+* **Recursive Thought Detection:** One of the most frustrating aspects of agents is when they get trapped and perform the same task repeatedly for hours on end. AgentOps can identify when agents fall into infinite loops, ensuring efficiency and preventing wasteful computation.

+

+AutoGen users also have access to the following features in AgentOps:

+

+* **Replay Analytics:** Watch step-by-step agent execution graphs.

+* **Custom Reporting:** Create custom analytics on agent performance.

+* **Public Model Testing:** Test your agents against benchmarks and leaderboards.

+* **Custom Tests:** Run your agents against domain-specific tests.

+* **Compliance and Security:** Create audit logs and detect potential threats, such as profanity and leaks of Personally Identifiable Information.

+* **Prompt Injection Detection:** Identify potential code injection and secret leaks.

+

+## Conclusion

+

+By integrating AgentOps into AutoGen, we've given our users everything they need to make production-grade agents, improve them, and track their performance to ensure they're doing exactly what you need them to do. Without it, you're operating blindly, unable to tell where your agents are succeeding or failing. AgentOps provides the required observability tools needed to monitor, debug, and optimize your agents for enterprise-level performance. It offers everything developers need to scale their AI solutions, from cost tracking to recursive thought detection.

+

+Did you find this note helpful? Would you like to share your thoughts, use cases, and findings? Please join our observability channel in the [AutoGen Discord](https://discord.gg/hXJknP54EH).

diff --git a/website/blog/authors.yml b/website/blog/authors.yml

index 0e023514465d..f9e7495c5f30 100644

--- a/website/blog/authors.yml

+++ b/website/blog/authors.yml

@@ -140,3 +140,15 @@ marklysze:

title: AI Freelancer

url: https://github.com/marklysze

image_url: https://github.com/marklysze.png

+

+areibman:

+ name: Alex Reibman

+ title: Co-founder/CEO at AgentOps

+ url: https://github.com/areibman

+ image_url: https://github.com/areibman.png

+

+bboynton97:

+ name: Braelyn Boynton

+ title: AI Engineer at AgentOps

+ url: https://github.com/bboynton97

+ image_url: https://github.com/bboynton97.png

diff --git a/website/docs/Use-Cases/agent_chat.md b/website/docs/Use-Cases/agent_chat.md

index c55b0d29d5d6..59156c0eb046 100644

--- a/website/docs/Use-Cases/agent_chat.md

+++ b/website/docs/Use-Cases/agent_chat.md

@@ -18,7 +18,7 @@ designed to solve tasks through inter-agent conversations. Specifically, the age

The figure below shows the built-in agents in AutoGen.

-We have designed a generic [`ConversableAgent`](../reference/agentchat/conversable_agent#conversableagent-objects)

+We have designed a generic [`ConversableAgent`](../reference/agentchat/conversable_agent.md#conversableagent-objects)

class for Agents that are capable of conversing with each other through the exchange of messages to jointly finish a task. An agent can communicate with other agents and perform actions. Different agents can differ in what actions they perform after receiving messages. Two representative subclasses are [`AssistantAgent`](../reference/agentchat/assistant_agent.md#assistantagent-objects) and [`UserProxyAgent`](../reference/agentchat/user_proxy_agent.md#userproxyagent-objects)

- The [`AssistantAgent`](../reference/agentchat/assistant_agent.md#assistantagent-objects) is designed to act as an AI assistant, using LLMs by default but not requiring human input or code execution. It could write Python code (in a Python coding block) for a user to execute when a message (typically a description of a task that needs to be solved) is received. Under the hood, the Python code is written by LLM (e.g., GPT-4). It can also receive the execution results and suggest corrections or bug fixes. Its behavior can be altered by passing a new system message. The LLM [inference](#enhanced-inference) configuration can be configured via [`llm_config`].

diff --git a/website/docs/tutorial/conversation-patterns.ipynb b/website/docs/tutorial/conversation-patterns.ipynb

index 7ea8f0bfa517..56004e3b3b81 100644

--- a/website/docs/tutorial/conversation-patterns.ipynb

+++ b/website/docs/tutorial/conversation-patterns.ipynb

@@ -1559,7 +1559,7 @@

"metadata": {},

"source": [

"The implementation of the nested chats handler makes use of the\n",

- "[`register_reply`](../reference/agentchat/conversable_agent/#register_reply)\n",

+ "[`register_reply`](/docs/reference/agentchat/conversable_agent/#register_reply)\n",

"method, which allows you to make extensive customization to\n",

" `ConversableAgent`. The GroupChatManager uses the same mechanism to implement the group chat.\n",

"\n",

@@ -1579,7 +1579,7 @@

"\n",

"In this chapter, we covered two-agent chat, sequential chat, group chat,\n",

"and nested chat patterns. You can compose these patterns like LEGO blocks to \n",

- "create complex workflows. You can also use [`register_reply`](../reference/agentchat/conversable_agent/#register_reply) to create new patterns.\n",

+ "create complex workflows. You can also use [`register_reply`](/docs/reference/agentchat/conversable_agent/#register_reply) to create new patterns.\n",

"\n",

"This is the last chapter on basic AutoGen concepts. \n",

"In the next chatper, we will give you some tips on what to do next."

+

+## TL;DR

+* AutoGen® offers detailed multi-agent observability with AgentOps.

+* AgentOps offers the best experience for developers building with AutoGen in just two lines of code.

+* Enterprises can now trust AutoGen in production with detailed monitoring and logging from AgentOps.

+


+## TL;DR

+* AutoGen® offers detailed multi-agent observability with AgentOps.

+* AgentOps offers the best experience for developers building with AutoGen, and setting it up takes just two lines of code.

+* Enterprises can now trust AutoGen in production with detailed monitoring and logging from AgentOps.

+

+AutoGen is excited to announce an integration with AgentOps, the industry leader in agent observability and compliance. Back in February, [Bloomberg declared 2024 the year of AI Agents](https://www.bloomberg.com/news/newsletters/2024-02-15/tech-companies-bet-the-world-is-ready-for-ai-agents). And it's true! We've seen AI evolve from simplistic chatbots into agents that autonomously make decisions and complete tasks on a user's behalf.

+

+However, as with most new technologies, companies and engineering teams can be slow to develop processes and best practices. One part of the agent workflow we're betting on is observability. Letting your agents run wild might work for a hobby project, but if you're building enterprise-grade agents for production, it's crucial to understand where your agents are succeeding and failing. Observability isn't optional; it's a requirement.

+

+As agents grow more powerful and complex, you should increasingly view them as tools that augment your team's capabilities. Agents will take on more prominent roles and responsibilities, take actions, and provide immense value. That also means you must monitor your agents the same way a good manager maintains visibility over their team. AgentOps gives developers observability for debugging and detecting failures, with all of your agents' key metrics in one easy-to-read dashboard. Monitoring is more than just a “nice to have”; it's a critical component for any team looking to build and scale AI agents.

+

+## What is Agent Observability?

+

+Agent observability, in its most basic form, allows you to monitor, troubleshoot, and explain your agent's actions while it runs. Observing every detail of your agent's activity, down to the timestamp, lets you trace its actions precisely, identify areas for improvement, and understand why a failure happened, which is a key part of effective debugging. Beyond diagnostic precision, this level of observability is integral to your system's reliability: it lets you identify and address issues before they spiral out of control. Observability isn't just about keeping things running smoothly and maximizing uptime; it's about strengthening your agent-based solutions.

+
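To make "observe every detail, down to the timestamp" concrete, here is a minimal sketch of a timestamped action log. It is not the AgentOps API: `Event`, `Trace`, and the action names are hypothetical stand-ins for the kind of data AgentOps records for you automatically.

```python
import time
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    timestamp: float  # seconds, as returned by the clock
    agent: str  # name of the agent that acted
    action: str  # e.g. "llm_call", "tool_call", "reply"
    ok: bool = True  # False marks a failed action


class Trace:
    """Append-only, timestamped log of agent actions (hypothetical helper)."""

    def __init__(self, clock=time.time):
        self._clock = clock  # injectable so tests can use fake timestamps
        self.events = []

    def record(self, agent, action, ok=True):
        event = Event(self._clock(), agent, action, ok)
        self.events.append(event)
        return event

    def first_failure(self):
        """Earliest failed event, or None if every action succeeded."""
        return next((e for e in self.events if not e.ok), None)

    def actions_per_agent(self):
        """How many actions each agent took, for a per-agent summary."""
        return Counter(e.agent for e in self.events)


# Usage: record a short two-agent exchange and find where it broke.
trace = Trace()
trace.record("assistant", "llm_call")
trace.record("user_proxy", "tool_call", ok=False)
trace.record("assistant", "reply")
print(trace.first_failure())
print(trace.actions_per_agent())
```

Because events are kept in order with timestamps, the first failure is simply the earliest event marked as failed, which is usually where debugging should start.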


+## Why AgentOps?

+

+AutoGen has simplified the process of building agents, but we recognized the need for an easy-to-use, native observability tool. We've previously discussed AgentOps, and now we're excited to partner with AgentOps as our official agent observability tool. Integrating AgentOps with AutoGen simplifies your workflow and gives you clear visibility into how your agents behave, so you can find and fix whatever keeps them from performing well. For more details, check out our [AgentOps documentation](https://microsoft.github.io/autogen/docs/notebooks/agentchat_agentops/).

+

+Enterprises and enthusiasts trust AutoGen as the leader in building agents. With our AgentOps partnership, developers can now natively debug agents for efficiency and ensure compliance, with a comprehensive audit trail of all their agents' activities. AgentOps lets you monitor LLM calls, costs, latency, agent failures, multi-agent interactions, tool usage, session-wide statistics, and more, all from one dashboard.

+
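For intuition about how a rolling cost total works, here is a minimal sketch. The per-1K-token (input, output) prices are copied from AutoGen's `OAI_PRICE1K` table in `autogen/oai/openai_utils.py` as of this change; `call_cost` and `CostTracker` are hypothetical helpers, not AgentOps functions, and the projection naively assumes future calls cost the running average.

```python
from dataclasses import dataclass

# Per-1K-token (input, output) prices in USD, copied from AutoGen's
# OAI_PRICE1K table in autogen/oai/openai_utils.py.
PRICE_PER_1K = {
    "gpt-4": (0.03, 0.06),
    "gpt-4o-mini": (0.000150, 0.000600),
    "gpt-3.5-turbo": (0.0005, 0.0015),
}


def call_cost(model: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Cost of a single LLM call in USD."""
    input_price, output_price = PRICE_PER_1K[model]
    return (prompt_tokens * input_price + completion_tokens * output_price) / 1000


@dataclass
class CostTracker:
    """Keeps a rolling total of LLM spend across a run (hypothetical helper)."""

    total: float = 0.0
    calls: int = 0

    def record(self, model: str, prompt_tokens: int, completion_tokens: int) -> float:
        """Add one call's cost and return the new running total."""
        self.total += call_cost(model, prompt_tokens, completion_tokens)
        self.calls += 1
        return self.total

    def projected(self, future_calls: int) -> float:
        """Naive estimate: assume each future call costs the running average."""
        if self.calls == 0:
            return 0.0
        return self.total / self.calls * future_calls


tracker = CostTracker()
tracker.record("gpt-4", prompt_tokens=1000, completion_tokens=500)
tracker.record("gpt-4o-mini", prompt_tokens=2000, completion_tokens=1000)
print(f"${tracker.total:.4f} over {tracker.calls} calls")
```

Input and output tokens are priced separately, which is why the same number of tokens can cost very different amounts depending on how much the model writes back.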

+By combining AutoGen's agent-building capabilities with AgentOps' observability tools, we're providing our users with a comprehensive solution that enhances agent performance and reliability. This collaboration means enterprises can confidently deploy AI agents in production, knowing they have the best tools to monitor, debug, and optimize them.

+

+The best part is that it only takes two lines of code. Set `AGENTOPS_API_KEY` in your environment (you can get an API key at https://app.agentops.ai/account) and call `agentops.init()`:

+```python
+import os
+import agentops
+
+# Reads the key you exported; raises KeyError if AGENTOPS_API_KEY is unset
+agentops.init(api_key=os.environ["AGENTOPS_API_KEY"])
+```

+
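If you'd rather keep local runs working when no key is configured, one option is to guard the call. This is a sketch under our own assumptions: `init_agentops_if_configured` is a hypothetical helper, and skipping monitoring when the key is missing is a convention you might choose, not AgentOps behavior. The only AgentOps call it makes is `agentops.init(api_key=...)`.

```python
import os


def init_agentops_if_configured() -> bool:
    """Start AgentOps only if AGENTOPS_API_KEY is set; return True if started.

    Hypothetical helper: skipping monitoring without a key is our own
    convention for local runs, not something AgentOps requires.
    """
    api_key = os.environ.get("AGENTOPS_API_KEY", "").strip()
    if not api_key:
        return False
    import agentops  # imported lazily so the script runs without the package

    agentops.init(api_key=api_key)
    return True
```

In production you would usually want the opposite behavior, failing loudly when the key is missing, so that agents never run unmonitored by accident.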

+## AgentOps's Features

+

+AgentOps includes all the functionality you need to ensure your agents are suitable for real-world, scalable solutions.

+

+* **Analytics Dashboard:** The AgentOps Analytics Dashboard lets you configure and assign agents, and it automatically tracks the actions each agent takes, even when several agents run at once. When used with AutoGen, AgentOps is automatically configured for multi-agent compatibility, so you can easily track multiple agents across runs. Instead of scrolling through terminal output, you get an intuitive visual interface.

+* **Tracking LLM Costs:** Cost tracking is built into AgentOps and provides a rolling total, so developers can see what each run costs and estimate future costs more accurately.

+* **Recursive Thought Detection:** One of the most frustrating agent failures is getting trapped performing the same task repeatedly for hours on end. AgentOps can identify when agents fall into infinite loops, keeping them efficient and preventing wasted computation.

+
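How AgentOps detects loops isn't described here, but a simple version of the idea is easy to sketch: watch a sliding window of recent messages and flag a loop when the same message keeps coming back. `RepetitionDetector` and its `window`/`threshold` defaults are hypothetical, and exact-match counting will miss loops where an agent rephrases itself.

```python
from collections import deque


class RepetitionDetector:
    """Flags a likely loop when one message repeats too often (hypothetical).

    Keeps the last `window` messages and reports a loop once any message
    appears at least `threshold` times within that window.
    """

    def __init__(self, window: int = 6, threshold: int = 3):
        self.recent = deque(maxlen=window)  # oldest messages fall off automatically
        self.threshold = threshold

    def observe(self, message: str) -> bool:
        """Record a message; return True if it now looks like a loop."""
        normalized = " ".join(message.lower().split())  # ignore case and spacing
        self.recent.append(normalized)
        return self.recent.count(normalized) >= self.threshold


detector = RepetitionDetector(window=4, threshold=3)
for msg in ["Run the tests", "Fix the bug", "run  the tests", "Run the tests"]:
    if detector.observe(msg):
        print(f"Possible loop detected at: {msg!r}")
```

The bounded window keeps memory constant for long sessions and means an agent that legitimately revisits a step much later is not flagged.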

+AutoGen users also have access to the following features in AgentOps:

+

+* **Replay Analytics:** Watch step-by-step agent execution graphs.

+* **Custom Reporting:** Create custom analytics on agent performance.

+* **Public Model Testing:** Test your agents against benchmarks and leaderboards.

+* **Custom Tests:** Run your agents against domain-specific tests.

+* **Compliance and Security:** Create audit logs and detect potential threats, such as profanity and leaks of personally identifiable information (PII).

+* **Prompt Injection Detection:** Identify potential code injection and secret leaks.

+
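As a rough illustration of PII and secret-leak detection, the sketch below scans a message with a few regular expressions and redacts the matches. The patterns and the `scan`/`redact` helpers are hypothetical and deliberately simplistic; they are not how AgentOps implements these checks, and real detectors need much broader coverage.

```python
import re

# Deliberately simplistic, illustrative patterns (hypothetical).
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "api_key": re.compile(r"\bsk-[A-Za-z0-9]{20,}\b"),  # OpenAI-style secret key shape
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def scan(text: str) -> list:
    """Return (label, matched_text) pairs for every pattern hit in `text`."""
    hits = []
    for label, pattern in PATTERNS.items():
        hits.extend((label, m.group(0)) for m in pattern.finditer(text))
    return hits


def redact(text: str) -> str:
    """Replace each hit with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label.upper()}]", text)
    return text


message = "Reach me at jane.doe@example.com; my key is sk-abcdefghijklmnopqrstuvwx"
print(scan(message))
print(redact(message))
```

Scanning before a message leaves your system (and logging the hit for the audit trail) is what turns detection into prevention.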

+## Conclusion

+

+By integrating AgentOps into AutoGen, we've given you everything you need to build production-grade agents, improve them, and track their performance to ensure they're doing exactly what you need them to do. Without observability, you're operating blind, unable to tell where your agents are succeeding or failing. AgentOps provides the tools you need to monitor, debug, and optimize your agents for enterprise-level performance. It offers everything developers need to scale their AI solutions, from cost tracking to recursive thought detection.

+

+Did you find this note helpful? Would you like to share your thoughts, use cases, and findings? Please join our observability channel in the [AutoGen Discord](https://discord.gg/hXJknP54EH).

diff --git a/website/blog/authors.yml b/website/blog/authors.yml

index 0e023514465d..f9e7495c5f30 100644

--- a/website/blog/authors.yml

+++ b/website/blog/authors.yml

@@ -140,3 +140,15 @@ marklysze:

title: AI Freelancer

url: https://github.com/marklysze

image_url: https://github.com/marklysze.png

+

+areibman:

+ name: Alex Reibman

+ title: Co-founder/CEO at AgentOps

+ url: https://github.com/areibman

+ image_url: https://github.com/areibman.png

+

+bboynton97:

+ name: Braelyn Boynton

+ title: AI Engineer at AgentOps

+ url: https://github.com/bboynton97

+ image_url: https://github.com/bboynton97.png

diff --git a/website/docs/Use-Cases/agent_chat.md b/website/docs/Use-Cases/agent_chat.md

index c55b0d29d5d6..59156c0eb046 100644

--- a/website/docs/Use-Cases/agent_chat.md

+++ b/website/docs/Use-Cases/agent_chat.md

@@ -18,7 +18,7 @@ designed to solve tasks through inter-agent conversations. Specifically, the age

The figure below shows the built-in agents in AutoGen.

-We have designed a generic [`ConversableAgent`](../reference/agentchat/conversable_agent#conversableagent-objects)

+We have designed a generic [`ConversableAgent`](../reference/agentchat/conversable_agent.md#conversableagent-objects)

class for Agents that are capable of conversing with each other through the exchange of messages to jointly finish a task. An agent can communicate with other agents and perform actions. Different agents can differ in what actions they perform after receiving messages. Two representative subclasses are [`AssistantAgent`](../reference/agentchat/assistant_agent.md#assistantagent-objects) and [`UserProxyAgent`](../reference/agentchat/user_proxy_agent.md#userproxyagent-objects)

- The [`AssistantAgent`](../reference/agentchat/assistant_agent.md#assistantagent-objects) is designed to act as an AI assistant, using LLMs by default but not requiring human input or code execution. It could write Python code (in a Python coding block) for a user to execute when a message (typically a description of a task that needs to be solved) is received. Under the hood, the Python code is written by LLM (e.g., GPT-4). It can also receive the execution results and suggest corrections or bug fixes. Its behavior can be altered by passing a new system message. The LLM [inference](#enhanced-inference) configuration can be configured via [`llm_config`].

diff --git a/website/docs/tutorial/conversation-patterns.ipynb b/website/docs/tutorial/conversation-patterns.ipynb

index 7ea8f0bfa517..56004e3b3b81 100644

--- a/website/docs/tutorial/conversation-patterns.ipynb

+++ b/website/docs/tutorial/conversation-patterns.ipynb

@@ -1559,7 +1559,7 @@

"metadata": {},

"source": [

"The implementation of the nested chats handler makes use of the\n",

- "[`register_reply`](../reference/agentchat/conversable_agent/#register_reply)\n",

+ "[`register_reply`](/docs/reference/agentchat/conversable_agent/#register_reply)\n",

"method, which allows you to make extensive customization to\n",

" `ConversableAgent`. The GroupChatManager uses the same mechanism to implement the group chat.\n",

"\n",

@@ -1579,7 +1579,7 @@

"\n",

"In this chapter, we covered two-agent chat, sequential chat, group chat,\n",

"and nested chat patterns. You can compose these patterns like LEGO blocks to \n",

- "create complex workflows. You can also use [`register_reply`](../reference/agentchat/conversable_agent/#register_reply) to create new patterns.\n",

+ "create complex workflows. You can also use [`register_reply`](/docs/reference/agentchat/conversable_agent/#register_reply) to create new patterns.\n",

"\n",

"This is the last chapter on basic AutoGen concepts. \n",

"In the next chatper, we will give you some tips on what to do next."
