Replies: 18 comments 61 replies

-

|

That's not a bug, that is exactly what is expected.

You need to design you code to work another way, or use SSE or websockets. |

Beta Was this translation helpful? Give feedback.

-

I meant that, after 30 seconds, the ESP stalls indefinitely, even if the client (chrome or

I did, nothing new is printed at the point of the stall.

Yeah! That's exactly what I'm doing.

I am testing with Thank you for trying on your end! |

Beta Was this translation helpful? Give feedback.

-

|

interesting. |

Beta Was this translation helpful? Give feedback.

-

|

So... I was able to fix this. Or more like enforce some safety, anyone dare to try? do not consider this as a final solution, but at least it allows me to pass this slow test with default values |

Beta Was this translation helpful? Give feedback.

-

|

@vortigont @Levak : fyi i have updated the sample to be able to control the delay + total payload length:

I am finally able to reproduce. |

Beta Was this translation helpful? Give feedback.

-

|

I guess I have a solution. Separate the I will need to make a fork to commit the changes a bit later tonight. For now, here is a preview: static void _async_service_task(void *pvParameters){

lwip_event_packet_t * packet = NULL;

for (;;) {

- if(_get_async_event(&packet)){

+ if(_get_async_event(&packet) || _get_async_poll(&packet)){

#if CONFIG_ASYNC_TCP_USE_WDTQueueHandle_t _async_queue;

+QueueHandle_t _poll_queue;

+static inline bool _init_poll_queue(){

+ if(!_poll_queue){

+ _poll_queue = xQueueCreate(CONFIG_ASYNC_TCP_POLL_QUEUE_SIZE, sizeof(lwip_event_packet_t *));

+ if(!_poll_queue){

+ return false;

+ }

+ }

+ return true;

+}

+static inline bool _send_async_poll(lwip_event_packet_t ** e){

+ return _async_queue && xQueueSend(_poll_queue, e, portMAX_DELAY) == pdPASS;

+}

+static inline bool _get_async_poll(lwip_event_packet_t ** e){

+ return _async_queue && xQueueReceive(_poll_queue, e, portMAX_DELAY) == pdPASS;

+} } else if(e->event == LWIP_TCP_CLEAR){

- _remove_events_with_arg(e->arg);

+ _remove_events_with_arg(_async_queue, e->arg);

+ _remove_events_with_arg(_poll_queue, e->arg); static int8_t _tcp_poll(void * arg, struct tcp_pcb * pcb) {

+ // inhibit polling when event queue is getting filled up, let it handle _onack's

+ if (uxQueueMessagesWaiting(_poll_queue) > CONFIG_ASYNC_TCP_POLL_QUEUE_SIZE - 1)

+ return ERR_OK;

//ets_printf("+P: 0x%08x\n", pcb);

lwip_event_packet_t * e = (lwip_event_packet_t *)malloc(sizeof(lwip_event_packet_t));

e->event = LWIP_TCP_POLL;

e->arg = arg;

e->poll.pcb = pcb;

- if (!_send_async_event(&e)) {

+ if (!_send_async_poll(&e)) {

free((void*)(e));

}

return ERR_OK;

} |

Beta Was this translation helpful? Give feedback.

-

|

@Levak :

I definitely think you should use websocket or sse for that because your SD reading would be done outside of the async_tcp callbacks and you would just need to send events / ws messages after having read a batch or files, folder or whatever. Also, if you have a TWDT in your app (like i use to) you can apply a TWDT to only some specific tasks., not the SD reading task. That's what I do in my apps with my TaskManager lib (https://github.com/mathieucarbou/MycilaTaskManager). I am splitting my app parts in tasks (once or recurrent) associate them to managers, which can be started async or not, linked to a TWDT or not. Typically for long running tasks like MQTT publishing I do not activate the TWDT. |

Beta Was this translation helpful? Give feedback.

-

|

Wow, you had quite productive discussion here, guys.

yeah, that was just a PoC as I told

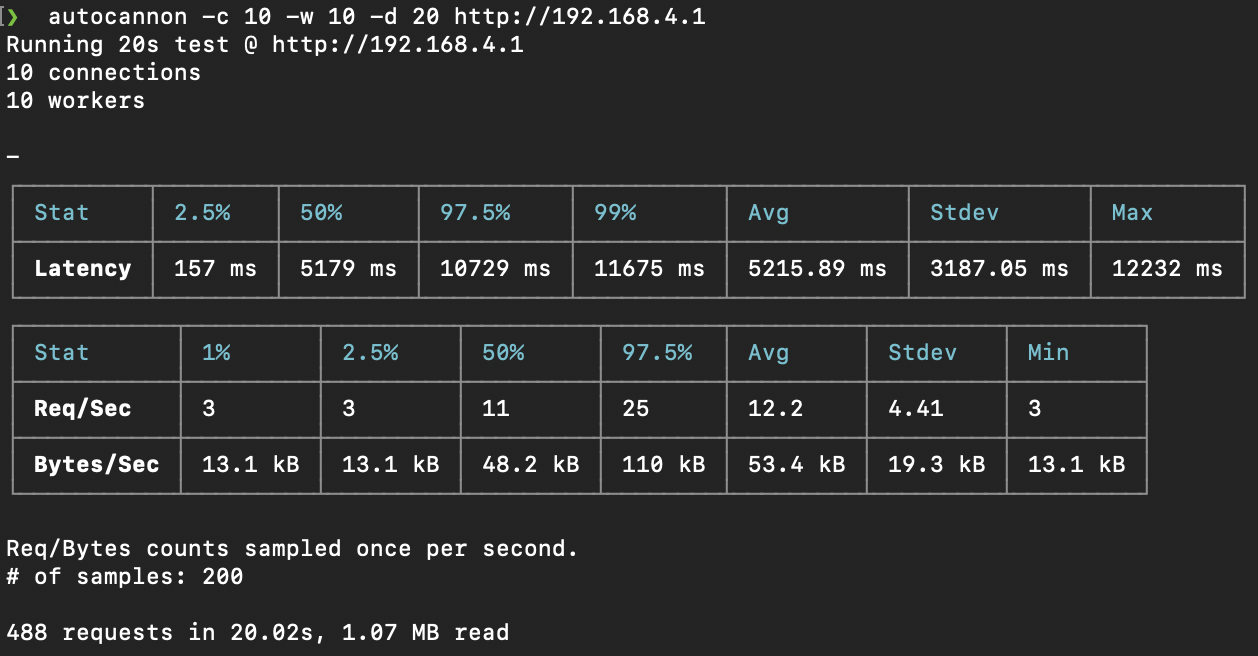

that could be a solution but not that simple, having two queues brings you an execution ordering problem that might pop out randomly. Some of the messages should be processed in order for a specific connection or a special care should be taken for cases like closing/timeouting etc.. Another side effect is that high polling rate could give you a very low latency but quite slow connections. Which is what Mathieu hit with his benchmark as I see later on. A very simple, but not perfect, solution is to use probability-based throttling as I mentioned. I've updated my [branch](https:// Very simple and cheap in terms of memory/resources but it works OK for multiple slow connections too, 'cause it allows poll events from other connections to enter the queue. It distributes unfair and make new connections to start really slow but it would catch up eventually. In general it needs a more deep refactoring here. I'm not that good at this level but some old networking skills working with traffic shapers rings some bells in my head :) Will try to optimize it a bit more (with a help of testing) :) Here what it gives me with Mathieu's cannon (I had to comment out rate-limit midware, btw) |

Beta Was this translation helpful? Give feedback.

-

|

Kudos for all of us participating in this discussion! |

Beta Was this translation helpful? Give feedback.

-

|

looks not bad as for me. Could be considered as minimal acceptable solution, other more deeper changes (if required) we could suggest/estimate separately? |

Beta Was this translation helpful? Give feedback.

-

|

Released! |

Beta Was this translation helpful? Give feedback.

-

|

Nice done, team!

Not at all, actually it's not a proper fix for chunked responses as I think, but more of a things that must be there for other cases, like massive SSE's or overloaded websockets which relies on polls a lot. For chunked responses it should be other approach and I'm already have some ideas where to look into. Will keep you in touch in this thread. And another thing - AsycnTCPSock's implementation is definitely worth a closer look in the light of modern IDF's API. I like its being more abstracted. Let's keep it in mind for upcoming no-8266 option. |

Beta Was this translation helpful? Give feedback.

-

|

I've found out how deep this rabbit hole is :) One thing is in-flight buffer credits - it intended to throttle refill (user callback) calls for long lived connections for a constantly incoming poll events if there is already enough chunks being transmitted. Another is buffer refill moderation - it tries to reduce refill (user callback) calls from being called too often when socket buff space is small compared to in-flight data size. This effectively drops ack events and reduces queue size while making sure to have a buffer at leat 50% loaded. And from the AsyncTCP side it tries to coalesce consecutive poll events into one, it evicts the queue from excessive polls and allows other connections to get more chance to get task's time. I've done some basic testing with downloading static file from LittleFS and running that ugly

Origin: crash by watchdog I do not have SD card setup currently to test, so would appreciate some feedback from @Levak |

Beta Was this translation helpful? Give feedback.

-

|

another testcase:

Origin: crash by watchdog |

Beta Was this translation helpful? Give feedback.

-

|

thanks for that issues links, @Levak. I never know that this problem exists in fatfs code actially. Maybe because I have never used it yet? :)

sorry to say but that is not the right way to do this also :( By doing this you would suffocate all other webserver operations also including websockets if you would switch to this approach. Something like:

That's not simple piece of code but "this is the way" :) |

Beta Was this translation helpful? Give feedback.

-

|

thanks for the tests @mathieucarbou

yes, that is exactly what

all my changes so far to asynctcp was to try minimize the impact of long callbacks to the queue itself were pure math, so very cheap, costs no memory and does not do any intermediate mallocs. But in the end all does come to the same thing - if a single event takes the task for too long - all other events (i.e. connections) are blocked. Even if the q could reorder it somehow it will anyway stall eventually on slowest ones. The only way here is to create a pool of worker threads and somehow manage it. Won't be that easy and cheap and also won't work for single core boards at all. Have some other idea in mind though... |

Beta Was this translation helpful? Give feedback.

-

|

I've tested downloading of files from SDCARD. Work perfectly. Two large files in parallel with multiple small reqs is also fine. Large files are not affecting small downloads at all. |

Beta Was this translation helpful? Give feedback.

-

|

that's a nice catch with

|

Beta Was this translation helpful? Give feedback.

-

Hi there!

Description

When the ESPAsyncWebServer sends a very long chunked response (over 30 seconds), the ESP32 crashes on a watchdog timeout in AsyncTCP. I can see in Chrome DevTools that the chunked answer is being sent correctly, one chunk at a time, but when the 30 seconds mark hits, the ESP32 resets.

In my code, I am trying to traverse a list of files from an SD card. After roughly 300 files (700 in this particular test) sent, aka 30 seconds, the problem appears.

Pagination can be implemented, but we are trying to stay retro-compatible with an official WebUI that lacks such feature. This is sadly a regression compared to a basic WebServer app, where the timeout can be as long as one wants, as long as the client doesn't disconnect.

Link: https://github.com/Levak/sdwifi/blob/async/sdwifi.ino#L714

Board: esp32-pico-d4 (Fysetc SD WIFI PRO)

Stack trace

E (809882) task_wdt: Task watchdog got triggered. The following tasks/users did not reset the watchdog in time:

E (809882) task_wdt: - async_tcp (CPU 0/1)

E (809882) task_wdt: Tasks currently running:

E (809882) task_wdt: CPU 0: IDLE0

E (809882) task_wdt: CPU 1: loopTask

Additional notes

Old discussion about this code

Beta Was this translation helpful? Give feedback.

All reactions