
Kube cluster shuts down after a few minutes, minikube VM still running #2326

Comments

|

One way to work around this issue seems to be to stop the VM in Hyper-V Manager and disable Dynamic Memory. It is a bit hacky, but it appears to work. The problem is if you use … Could a temporary fix in this area be to disable Dynamic Memory when minikube provisions the VM in Hyper-V? |
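The Hyper-V Manager steps in this workaround could probably be scripted. Below is a minimal sketch, not something minikube does itself: it assumes the VM has the default name `minikube`, that the Hyper-V PowerShell module (`Stop-VM`, `Set-VMMemory`) is available, and that the script runs from an elevated prompt. The helper names `dynamic_memory_commands` and `disable_dynamic_memory` are hypothetical.

```python
import subprocess


def dynamic_memory_commands(vm_name="minikube"):
    """Return the PowerShell commands that stop the VM and turn off Dynamic Memory.

    Assumption: the VM is named "minikube" unless another name is passed in.
    """
    return [
        # Dynamic Memory can only be toggled while the VM is off.
        f"Stop-VM -Name '{vm_name}'",
        f"Set-VMMemory -VMName '{vm_name}' -DynamicMemoryEnabled $false",
    ]


def disable_dynamic_memory(vm_name="minikube", run=subprocess.run):
    """Run each command through powershell.exe; `run` is injectable for dry runs."""
    for command in dynamic_memory_commands(vm_name):
        run(["powershell", "-NoProfile", "-Command", command], check=True)
```

Calling `disable_dynamic_memory()` from an administrator session and then running `minikube start` again should bring the cluster back up on the reconfigured VM, assuming the workaround behaves as described above.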

|

Completing this request should fix the bug: #1766 |

|

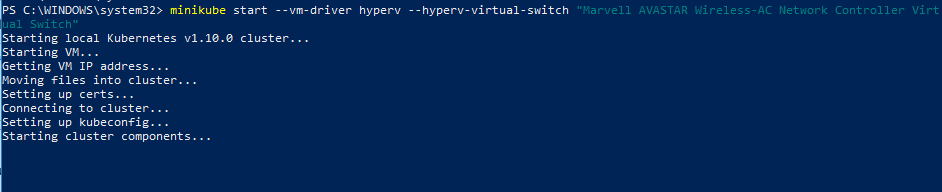

Hi @JockDaRock, thank you for your post on Medium. I followed the steps from your post, and my console output has been stuck at this for some time ... As a result of this hang, I deleted the minikube VM from Hyper-V and tried to run minikube again. Any thoughts on this? Thanks, |

|

Hey @IoTFier, thank you for your response and for giving it a try. Sadly, I have not heard anything back from the Kubernetes team on this issue (going on almost 5 months). Anyway, I am not familiar with your particular problem, but I can try to reproduce it this week and see what happens. My first impression is to try a complete reinstall of minikube. |

|

@IoTFier just as a heads up, I have seen errors like that before. I'm sure there is a way to fix it, either through the VM (i.e. fixing services), by reconfiguring parts of the minikube environment (I haven't read through all of the setup code yet), or by reconfiguring kubectl.

Remember, minikube's job is to make it easy to set up a single-node Kubernetes cluster for testing; that doesn't mean you can't set one up by hand on Windows. If minikube start fails, you can perform all of the steps it would have performed by hand... but then why use minikube? However, it is pretty easy to just clean up a bad install and then try again.

To clean up a bad install, stop the minikube VM in Hyper-V. (You may have to stop the service if it is really hung, which has happened to me, but I feel that may be more my system than a general problem.) Once the VM is stopped, delete it using Hyper-V Manager. Then open your Windows home directory in Windows Explorer (C:\Users\{your username}). In there you will see a .minikube folder. Assuming you have just set things up and it has failed (since you were just issuing the minikube start command), delete the .minikube folder. This will let the minikube start command restart the setup and configuration.

If you plan on changing any of the defaults that name the minikube VM or change where things are stored, you should also delete the .kube folder in your home directory. It contains the configuration for kubectl (and other kubeXXX apps) and will not point to the correct information. Of course, you could correct a lot of that (I am assuming) by issuing …

I will also admit that I'm sure you can remove just a portion of that folder so that it reinstalls while keeping the cached ISO download, but since the ISO is only 160 MB or so it isn't a big deal.

BTW... I'm using the latest minikube 0.28.1 and I haven't experienced this bug with dynamic memory. |
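The folder cleanup described above (after the VM has been stopped and deleted in Hyper-V) can be sketched as a small script. This is only an illustration under stated assumptions: the state lives in `.minikube` and `.kube` directly under the user's home directory, and the function name `clean_minikube_state` and its parameters are hypothetical. It deletes folders permanently, so check the paths before running it.

```python
import shutil
from pathlib import Path


def clean_minikube_state(home=None, remove_kube_config=False):
    """Delete leftover minikube state so `minikube start` can set up from scratch.

    home: directory that holds .minikube (defaults to the current user's home).
    remove_kube_config: also delete .kube, which holds the kubectl configuration.
    Returns the list of folders that were actually removed.
    """
    home = Path(home) if home is not None else Path.home()
    targets = [home / ".minikube"]
    if remove_kube_config:
        targets.append(home / ".kube")

    removed = []
    for target in targets:
        if target.is_dir():  # skip folders that do not exist
            shutil.rmtree(target)
            removed.append(target)
    return removed
```

For example, `clean_minikube_state(remove_kube_config=True)` would remove both folders for the current user. Leaving `remove_kube_config` at its default keeps the existing kubectl configuration, which matches the case where none of the defaults are being changed.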

|

Closing open localkube issues, as localkube was long deprecated and removed from the last two minikube releases. I hope you were able to find another solution that worked out for you - if not, please open a new PR. |

Is this a BUG REPORT or FEATURE REQUEST? (choose one):

BUG REPORT

Please provide the following details:

Environment:

Windows 10 / Surface Pro 4, 16 GB RAM, i7 processor

Minikube version (use minikube version): v0.24.1

OS (e.g. from /etc/os-release): Windows 10

VM Driver (e.g. cat ~/.minikube/machines/minikube/config.json | grep DriverName): hyper-v

ISO version (e.g. cat ~/.minikube/machines/minikube/config.json | grep -i ISO or minikube ssh cat /etc/VERSION): v0.23.6

Install tools: chocolatey

Others:

The above can be generated in one go with the following commands (can be copied and pasted directly into your terminal):

What happened:

I created a virtual switch in Hyper-V Manager on Windows to use with minikube. To do this, I opened Hyper-V Manager, clicked Virtual Switch Manager in the pane on the right, added a new virtual switch called "Primary Virtual Switch", and selected External as the switch type.

I then executed the following command in an administrator CLI:

minikube start --vm-driver hyperv --hyperv-virtual-switch "Primary Virtual Switch"

I waited for my cluster to start...

After the cluster started running, I deployed an app for testing.

Everything starts up just fine and is working as expected... until it's NOT working.

A few minutes later I am unable to access any part of the application I ran. The cluster shuts down: the status of minikube shows that the VM is running and the cluster is down.

What you expected to happen:

I expect the kube cluster to remain running...

How to reproduce it (as minimally and precisely as possible):

See the description of what happened above.

The logs below were all I was able to get.

Output of minikube logs (if applicable):

Anything else do we need to know:

Not sure, I think that is about everything...
