-

Notifications

You must be signed in to change notification settings - Fork 309

Add a DistilBertMaskedLM task model #724

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Add a DistilBertMaskedLM task model #724

Conversation

mattdangerw

left a comment

mattdangerw

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thank you! Left some initial comments, also looks like there are some test failures that need investigation.

| This preprocessing layer will prepare inputs for a masked language modeling | ||

| task. It is primarily intended for use with the | ||

| `keras_nlp.models.DistilBertMaskedLM` task model. Preprocessing will occur in |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

fix line length

| masked. | ||

| mask_selection_length: The maximum number of masked tokens supported | ||

| by the layer. | ||

| mask_token_rate: float, defaults to 0.8. `mask_token_rate` must be |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is prexising I know, but let's remove these defaults for this arg and below (we don't do this for other args in this list)

| multiple steps. | ||

| - Tokenize any number of input segments using the `tokenizer`. | ||

| - Pack the inputs together with the appropriate `"<s>"`, `"</s>"` and |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

These are not the special tokens used by DistilBert, this will need updating.

| # Alternatively, you can create a preprocessor from your own vocabulary. | ||

| # The usage is exactly the same as above. | ||

| vocab = {"<s>": 0, "<pad>": 1, "</s>": 2, "<mask>": 3} |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

this is not the right format for the vocabulary for this model, will need updating.

| Disclaimer: Pre-trained models are provided on an "as is" basis, without | ||

| warranties or conditions of any kind. The underlying model is provided by a | ||

| third party and subject to a separate license, available | ||

| [here](https://github.com/facebookresearch/fairseq). |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

update this to the right disclaimer for the model, distilbert is huggingface

thanks @mattdangerw for review, I make the changes soon |

|

|

@ADITYADAS1999 thanks! Look like there are still some test failure to fix in the preprocessor layer you are adding from your screen shot! You can also see the failures in the automatic testing running on this PR. |

yes , I working on this.... |

This reverts commit 555ba33.

|

hey @mattdangerw all the tests are checked , can you review for necessary changes. |

|

@mattdangerw I just left some comments, as peer review. I hope peer review is allowed at KerasNLP ! I would request you to take a look as well Before @ADITYADAS1999 addresses them. |

hey @shivance is there a issue in PR ? can you explain little bit. |

|

@shivance you are welcome to leave comments if you spot things, but I don't actually see anything from you here. Did you mean to post something to this PR? |

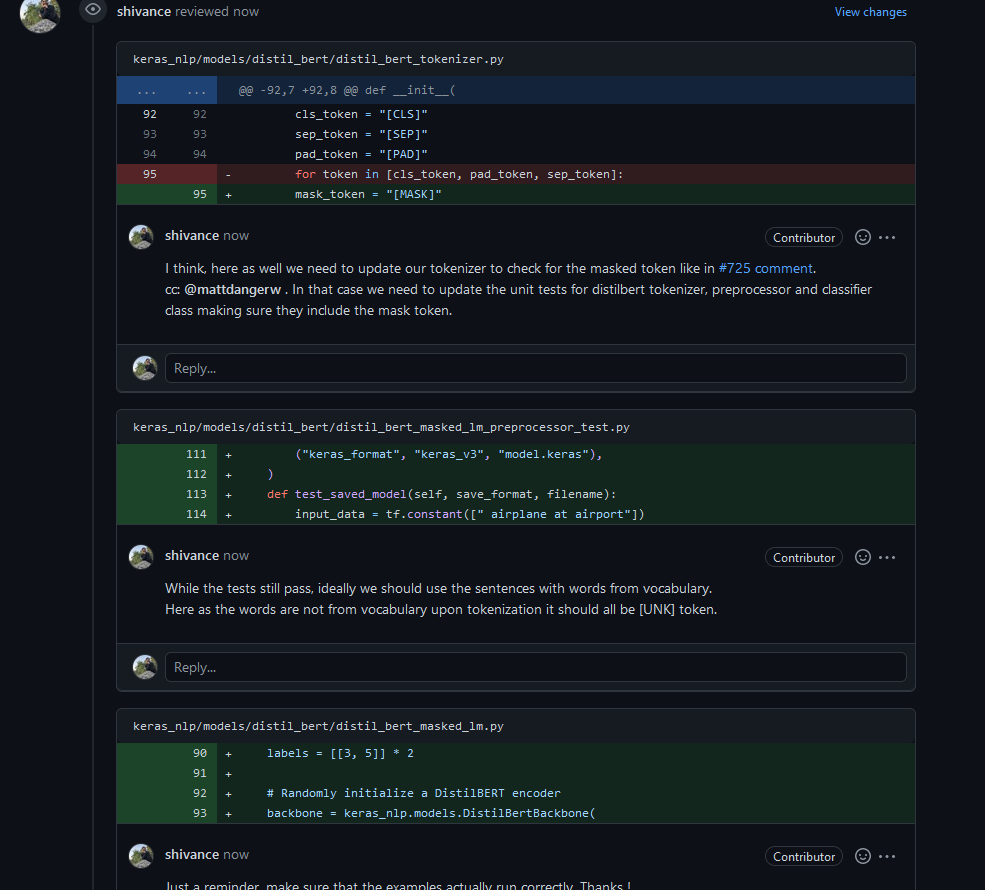

| ("keras_format", "keras_v3", "model.keras"), | ||

| ) | ||

| def test_saved_model(self, save_format, filename): | ||

| input_data = tf.constant([" airplane at airport"]) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

While the tests still pass, ideally we should use the sentences with words from vocabulary.

Here as the words are not from vocabulary upon tokenization it should all be [UNK] token.

| labels = [[3, 5]] * 2 | ||

| # Randomly initialize a DistilBERT encoder | ||

| backbone = keras_nlp.models.DistilBertBackbone( |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Just a reminder, make sure that the examples actually run correctly. Thanks !

|

@ADITYADAS1999 @mattdangerw Could you check now, if my comments are visible? |

yes @shivance it's visible for me. @mattdangerw is it visible for you ? |

|

Yes all visible now! With github I often "Start a review" but forgot to finish, if you don't finish the review your comments will not post. |

Yes Yes @mattdangerw , That's what happened 😅. Reviewed a PR for the first time. Also could you review comments? If they are valid. |

mattdangerw

left a comment

mattdangerw

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thank you! This looks good!

There is one comment re the saved model test from @shivance that is worth doing.

I'm running a quick training job to make sure things look ok, can merge when that is done.

|

Thanks pulling this in now! Here's the trial run -> https://gist.github.com/mattdangerw/b16c257973762a0b4ab9a34f6a932cc1 |

|

Thank you @mattdangerw 🎉 |

|

Hey @mattdangerw @jbischof I want to participate gsoc this year, can you guide or advice in project idea, proposals docs in kerasNLP ? 👨💻 |

DistilBertMaskedLMPreprocessorpreprocessor layer and tests.DistilBertMaskedLMtask model and tests.