diff --git a/README.md b/README.md

index 98cfe0e30b4..88c6db10fc9 100644

--- a/README.md

+++ b/README.md

@@ -113,18 +113,13 @@ trainer.train()

```python

from datasets import load_dataset

-from transformers import AutoModelForCausalLM, AutoTokenizer

-from trl import DPOConfig, DPOTrainer

+from trl import DPOTrainer

-model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen2.5-0.5B-Instruct")

-tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen2.5-0.5B-Instruct")

dataset = load_dataset("trl-lib/ultrafeedback_binarized", split="train")

-training_args = DPOConfig(output_dir="Qwen2.5-0.5B-DPO")

+

trainer = DPOTrainer(

- model=model,

- args=training_args,

+ model="Qwen3/Qwen-0.6B",

train_dataset=dataset,

- processing_class=tokenizer

)

trainer.train()

```

diff --git a/docs/source/bema_for_reference_model.md b/docs/source/bema_for_reference_model.md

index 832acfc932c..896e642a347 100644

--- a/docs/source/bema_for_reference_model.md

+++ b/docs/source/bema_for_reference_model.md

@@ -7,26 +7,16 @@ This feature implements the BEMA algorithm to update the reference model during

```python

from trl.experimental.bema_for_ref_model import BEMACallback, DPOTrainer

from datasets import load_dataset

-from transformers import AutoModelForCausalLM, AutoTokenizer

-

-pref_dataset = load_dataset("trl-internal-testing/zen", "standard_preference", split="train")

-ref_model = AutoModelForCausalLM.from_pretrained("trl-internal-testing/tiny-Qwen2ForCausalLM-2.5")

+dataset = load_dataset("trl-internal-testing/zen", "standard_preference", split="train")

bema_callback = BEMACallback(update_ref_model=True)

-model = AutoModelForCausalLM.from_pretrained("trl-internal-testing/tiny-Qwen2ForCausalLM-2.5")

-tokenizer = AutoTokenizer.from_pretrained("trl-internal-testing/tiny-Qwen2ForCausalLM-2.5")

-tokenizer.pad_token = tokenizer.eos_token

-

trainer = DPOTrainer(

- model=model,

- ref_model=ref_model,

- train_dataset=pref_dataset,

- processing_class=tokenizer,

+ model="trl-internal-testing/tiny-Qwen2ForCausalLM-2.5",

+ train_dataset=dataset,

callbacks=[bema_callback],

)

-

trainer.train()

```

diff --git a/docs/source/customization.md b/docs/source/customization.md

index 19ba1088fd1..7ae44d0e51d 100644

--- a/docs/source/customization.md

+++ b/docs/source/customization.md

@@ -1,32 +1,27 @@

# Training customization

-TRL is designed with modularity in mind so that users are able to efficiently customize the training loop for their needs. Below are examples on how you can apply and test different techniques.

+TRL is designed with modularity in mind so that users are able to efficiently customize the training loop for their needs. Below are examples on how you can apply and test different techniques.

> [!NOTE]

> Although these examples use the [`DPOTrainer`], these customization methods apply to most (if not all) trainers in TRL.

## Use different optimizers and schedulers

-By default, the `DPOTrainer` creates a `torch.optim.AdamW` optimizer. You can create and define a different optimizer and pass it to `DPOTrainer` as follows:

+By default, the [`DPOTrainer`] creates a `torch.optim.AdamW` optimizer. You can create and define a different optimizer and pass it to [`DPOTrainer`] as follows:

```python

from datasets import load_dataset

-from transformers import AutoModelForCausalLM, AutoTokenizer

from torch import optim

-from trl import DPOConfig, DPOTrainer

+from transformers import AutoModelForCausalLM

+from trl import DPOTrainer

-model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen2.5-0.5B-Instruct")

-tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen2.5-0.5B-Instruct")

dataset = load_dataset("trl-lib/ultrafeedback_binarized", split="train")

-training_args = DPOConfig(output_dir="Qwen2.5-0.5B-DPO")

-

-optimizer = optim.SGD(model.parameters(), lr=training_args.learning_rate)

+model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen2.5-0.5B-Instruct")

+optimizer = optim.SGD(model.parameters(), lr=1e-6)

trainer = DPOTrainer(

model=model,

- args=training_args,

train_dataset=dataset,

- tokenizer=tokenizer,

optimizers=(optimizer, None),

)

trainer.train()

@@ -39,7 +34,7 @@ You can also add learning rate schedulers by passing both optimizer and schedule

```python

from torch import optim

-optimizer = optim.AdamW(model.parameters(), lr=training_args.learning_rate)

+optimizer = optim.AdamW(model.parameters(), lr=1e-6)

lr_scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=30, gamma=0.1)

trainer = DPOTrainer(..., optimizers=(optimizer, lr_scheduler))

@@ -50,7 +45,7 @@ trainer = DPOTrainer(..., optimizers=(optimizer, lr_scheduler))

Another tool you can use for more memory efficient fine-tuning is to share layers between the reference model and the model you want to train.

```python

-from trl import create_reference_model

+from trl.experimental.utils import create_reference_model

ref_model = create_reference_model(model, num_shared_layers=6)

diff --git a/docs/source/dpo_trainer.md b/docs/source/dpo_trainer.md

index 2d618c7a96b..fc7fb3d1a64 100644

--- a/docs/source/dpo_trainer.md

+++ b/docs/source/dpo_trainer.md

@@ -1,148 +1,162 @@

# DPO Trainer

-[](https://huggingface.co/models?other=dpo,trl) [](https://github.com/huggingface/smol-course/tree/main/2_preference_alignment)

+[](https://huggingface.co/models?other=dpo,trl) [](https://github.com/huggingface/smol-course/tree/main/2_preference_alignment)

## Overview

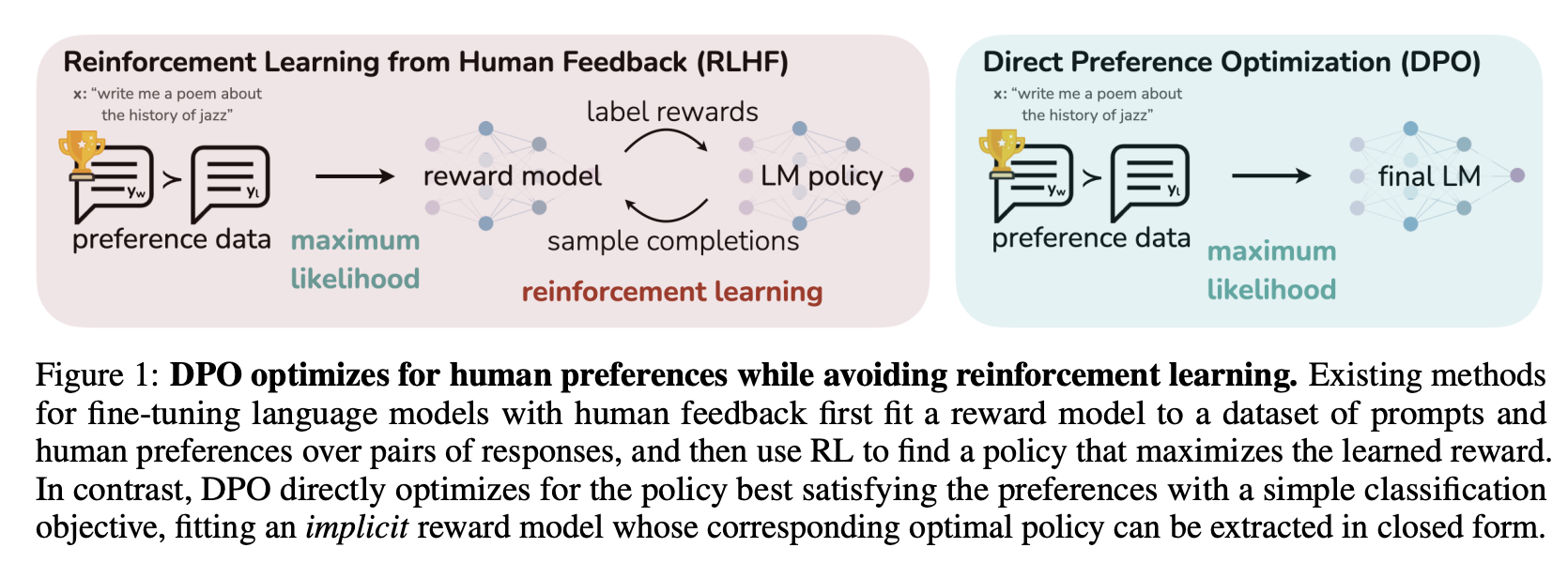

-TRL supports the DPO Trainer for training language models from preference data, as described in the paper [Direct Preference Optimization: Your Language Model is Secretly a Reward Model](https://huggingface.co/papers/2305.18290) by [Rafael Rafailov](https://huggingface.co/rmrafailov), Archit Sharma, Eric Mitchell, [Stefano Ermon](https://huggingface.co/ermonste), [Christopher D. Manning](https://huggingface.co/manning), [Chelsea Finn](https://huggingface.co/cbfinn).

+TRL supports the Direct Preference Optimization (DPO) Trainer for training language models, as described in the paper [Direct Preference Optimization: Your Language Model is Secretly a Reward Model](https://huggingface.co/papers/2305.18290) by [Rafael Rafailov](https://huggingface.co/rmrafailov), Archit Sharma, Eric Mitchell, [Stefano Ermon](https://huggingface.co/ermonste), [Christopher D. Manning](https://huggingface.co/manning), [Chelsea Finn](https://huggingface.co/cbfinn).

The abstract from the paper is the following:

> While large-scale unsupervised language models (LMs) learn broad world knowledge and some reasoning skills, achieving precise control of their behavior is difficult due to the completely unsupervised nature of their training. Existing methods for gaining such steerability collect human labels of the relative quality of model generations and fine-tune the unsupervised LM to align with these preferences, often with reinforcement learning from human feedback (RLHF). However, RLHF is a complex and often unstable procedure, first fitting a reward model that reflects the human preferences, and then fine-tuning the large unsupervised LM using reinforcement learning to maximize this estimated reward without drifting too far from the original model. In this paper we introduce a new parameterization of the reward model in RLHF that enables extraction of the corresponding optimal policy in closed form, allowing us to solve the standard RLHF problem with only a simple classification loss. The resulting algorithm, which we call Direct Preference Optimization (DPO), is stable, performant, and computationally lightweight, eliminating the need for sampling from the LM during fine-tuning or performing significant hyperparameter tuning. Our experiments show that DPO can fine-tune LMs to align with human preferences as well as or better than existing methods. Notably, fine-tuning with DPO exceeds PPO-based RLHF in ability to control sentiment of generations, and matches or improves response quality in summarization and single-turn dialogue while being substantially simpler to implement and train.

-The first step is to train an SFT model, to ensure the data we train on is in-distribution for the DPO algorithm.

-

-Then, fine-tuning a language model via DPO consists of two steps and is easier than [PPO](ppo_trainer):

-

-1. **Data collection**: Gather a [preference dataset](dataset_formats#preference) with positive and negative selected pairs of generation, given a prompt.

-2. **Optimization**: Maximize the log-likelihood of the DPO loss directly.

-

-This process is illustrated in the sketch below (from [Figure 1 of the DPO paper](https://huggingface.co/papers/2305.18290)):

-

-

-

-Read more about DPO algorithm in the [original paper](https://huggingface.co/papers/2305.18290).

+This post-training method was contributed by [Kashif Rasul](https://huggingface.co/kashif) and later refactored by [Quentin Gallouédec](https://huggingface.co/qgallouedec).

## Quick start

-This example demonstrates how to train a model using the DPO method. We use the [Qwen 0.5B model](https://huggingface.co/Qwen/Qwen2-0.5B-Instruct) as the base model. We use the preference data from the [UltraFeedback dataset](https://huggingface.co/datasets/openbmb/UltraFeedback). You can view the data in the dataset here:

-

-

-

-Below is the script to train the model:

+This example demonstrates how to train a language model using the [`DPOTrainer`] from TRL. We train a [Qwen 3 0.6B](https://huggingface.co/Qwen/Qwen3-0.6B) model on the [UltraFeedback dataset](https://huggingface.co/datasets/openbmb/UltraFeedback).

```python

-# train_dpo.py

+from trl import DPOTrainer

from datasets import load_dataset

-from trl import DPOConfig, DPOTrainer

-from transformers import AutoModelForCausalLM, AutoTokenizer

-model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen2-0.5B-Instruct")

-tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen2-0.5B-Instruct")

-train_dataset = load_dataset("trl-lib/ultrafeedback_binarized", split="train")

-

-training_args = DPOConfig(output_dir="Qwen2-0.5B-DPO")

-trainer = DPOTrainer(model=model, args=training_args, processing_class=tokenizer, train_dataset=train_dataset)

+trainer = DPOTrainer(

+ model="Qwen/Qwen3-0.6B",

+ train_dataset=load_dataset("trl-lib/ultrafeedback_binarized", split="train"),

+)

trainer.train()

```

-Execute the script using the following command:

-

-```bash

-accelerate launch train_dpo.py

-```

-

-Distributed across 8 GPUs, the training takes approximately 3 minutes. You can verify the training progress by checking the reward graph. An increasing trend in the reward margin indicates that the model is improving and generating better responses over time.

-

-

+

-To see how the [trained model](https://huggingface.co/trl-lib/Qwen2-0.5B-DPO) performs, you can use the [Transformers Chat CLI](https://huggingface.co/docs/transformers/quicktour#chat-with-text-generation-models).

+## Expected dataset type and format

-$ transformers chat trl-lib/Qwen2-0.5B-DPO

-<shirin_yamani>:

-What is Huggingface?

+DPO requires a [preference](dataset_formats#preference) dataset. The [`DPOTrainer`] is compatible with both [standard](dataset_formats#standard) and [conversational](dataset_formats#conversational) dataset formats. When provided with a conversational dataset, the trainer will automatically apply the chat template to the dataset.

-<trl-lib/Qwen2-0.5B-DPO>:

-Huggingface is a platform that allows users to access a variety of open-source machine learning resources such as pre-trained models and datasets Huggingface is a platform that allows users to access a variety of open-source machine learning resources such as pre-trained models and datasets for the development of machine learning models and applications. It provides a repository of over 300, 000 pre-trained models in Huggingface is a platform that allows users to access a variety of open-source machine learning resources such as pre-trained models and datasets for the development of machine learning models and applications. It provides a repository of over 300, 000 pre-trained models in a variety of languages, enabling users to explore and utilize the latest techniques and technologies in the field of machine learning.

-

-

-## Expected dataset type

+```python

+# Standard format

+## Explicit prompt (recommended)

+preference_example = {"prompt": "The sky is", "chosen": " blue.", "rejected": " green."}

+# Implicit prompt

+preference_example = {"chosen": "The sky is blue.", "rejected": "The sky is green."}

+

+# Conversational format

+## Explicit prompt (recommended)

+preference_example = {"prompt": [{"role": "user", "content": "What color is the sky?"}],

+ "chosen": [{"role": "assistant", "content": "It is blue."}],

+ "rejected": [{"role": "assistant", "content": "It is green."}]}

+## Implicit prompt

+preference_example = {"chosen": [{"role": "user", "content": "What color is the sky?"},

+ {"role": "assistant", "content": "It is blue."}],

+ "rejected": [{"role": "user", "content": "What color is the sky?"},

+ {"role": "assistant", "content": "It is green."}]}

+```

-DPO requires a [preference dataset](dataset_formats#preference). The [`DPOTrainer`] supports both [conversational](dataset_formats#conversational) and [standard](dataset_formats#standard) dataset formats. When provided with a conversational dataset, the trainer will automatically apply the chat template to the dataset.

+If your dataset is not in one of these formats, you can preprocess it to convert it into the expected format. Here is an example with the [Vezora/Code-Preference-Pairs](https://huggingface.co/datasets/Vezora/Code-Preference-Pairs) dataset:

-Although the [`DPOTrainer`] supports both explicit and implicit prompts, we recommend using explicit prompts. If provided with an implicit prompt dataset, the trainer will automatically extract the prompt from the `"chosen"` and `"rejected"` columns. For more information, refer to the [preference style](dataset_formats#preference) section.

+```python

+from datasets import load_dataset

-### Special considerations for vision-language models

+dataset = load_dataset("Vezora/Code-Preference-Pairs")

-The [`DPOTrainer`] supports fine-tuning vision-language models (VLMs). For these models, a vision dataset is required. To learn more about the specific format for vision datasets, refer to the [Vision dataset format](dataset_formats#vision-datasets) section.

-Additionally, unlike standard text-based models where a `tokenizer` is used, for VLMs, you should replace the `tokenizer` with a `processor`.

+def preprocess_function(example):

+ return {

+ "prompt": [{"role": "user", "content": example["input"]}],

+ "chosen": [{"role": "assistant", "content": example["accepted"]}],

+ "rejected": [{"role": "assistant", "content": example["rejected"]}],

+ }

-```diff

-- model = AutoModelForCausalLM.from_pretrained(model_id)

-+ model = AutoModelForImageTextToText.from_pretrained(model_id)

-- tokenizer = AutoTokenizer.from_pretrained(model_id)

-+ processor = AutoProcessor.from_pretrained(model_id)

+dataset = dataset.map(preprocess_function, remove_columns=["instruction", "input", "accepted", "ID"])

+print(next(iter(dataset["train"])))

+```

- trainer = DPOTrainer(

- model,

- args=training_args,

- train_dataset=train_dataset,

-- processing_class=tokenizer,

-+ processing_class=processor,

-)

+```json

+{

+ "prompt": [{"role": "user", "content": "Create a nested loop to print every combination of numbers [...]"}],

+ "chosen": [{"role": "assistant", "content": "Here is an example of a nested loop in Python [...]"}],

+ "rejected": [{"role": "assistant", "content": "Here is an example of a nested loop in Python [...]"}],

+}

```

-For a complete example of fine-tuning a vision-language model, refer to the script in [`examples/scripts/dpo_vlm.py`](https://github.com/huggingface/trl/blob/main/examples/scripts/dpo_vlm.py).

+## Looking deeper into the DPO method

-## Example script

+Direct Preference Optimization (DPO) is a training method designed to align a language model with preference data. Instead of supervised input–output pairs, the model is trained on pairs of completions to the same prompt, where one completion is preferred over the other. The objective directly optimizes the model to assign higher likelihood to preferred completions than to dispreferred ones, relative to a reference model, without requiring an explicit reward model.

-We provide an example script to train a model using the DPO method. The script is available in [`trl/scripts/dpo.py`](https://github.com/huggingface/trl/blob/main/trl/scripts/dpo.py)

+This section breaks down how DPO works in practice, covering the key steps: **preprocessing** and **loss computation**.

-To test the DPO script with the [Qwen2 0.5B model](https://huggingface.co/Qwen/Qwen2-0.5B-Instruct) on the [UltraFeedback dataset](https://huggingface.co/datasets/trl-lib/ultrafeedback_binarized), run the following command:

+### Preprocessing and tokenization

-```bash

-accelerate launch trl/scripts/dpo.py \

- --model_name_or_path Qwen/Qwen2-0.5B-Instruct \

- --dataset_name trl-lib/ultrafeedback_binarized \

- --num_train_epochs 1 \

- --output_dir Qwen2-0.5B-DPO

-```

+During training, each example is expected to contain a prompt along with a preferred (`chosen`) and a dispreferred (`rejected`) completion. For more details on the expected formats, see [Dataset formats](dataset_formats).

+The [`DPOTrainer`] tokenizes each input using the model's tokenizer.

-## Logged metrics

+### Computing the loss

-While training and evaluating, we record the following reward metrics:

+

-- `rewards/chosen`: the mean difference between the log probabilities of the policy model and the reference model for the chosen responses scaled by beta

-- `rewards/rejected`: the mean difference between the log probabilities of the policy model and the reference model for the rejected responses scaled by beta

-- `rewards/accuracies`: mean of how often the chosen rewards are > than the corresponding rejected rewards

-- `rewards/margins`: the mean difference between the chosen and corresponding rejected rewards

+The loss used in DPO is defined as follows:

+$$

+\mathcal{L}_{\mathrm{DPO}}(\theta) = -\mathbb{E}_{(x,y^{+},y^{-})}\!\left[\log \sigma\!\left(\beta\Big(\log\frac{{\pi_{\theta}(y^{+}\!\mid x)}}{{\pi_{\mathrm{ref}}(y^{+}\!\mid x)}}-\log \frac{{\pi_{\theta}(y^{-}\!\mid x)}}{{\pi_{\mathrm{ref}}(y^{-}\!\mid x)}}\Big)\right)\right]

+$$

+

+where \\( x \\) is the prompt, \\( y^+ \\) is the preferred completion and \\( y^- \\) is the dispreferred completion. \\( \pi_{\theta} \\) is the policy model being trained, \\( \pi_{\mathrm{ref}} \\) is the reference model, \\( \sigma \\) is the sigmoid function, and \\( \beta > 0 \\) is a hyperparameter that controls the strength of the preference signal.

-## Loss functions

+#### Loss Types

-The DPO algorithm supports several loss functions. The loss function can be set using the `loss_type` parameter in the [`DPOConfig`]. The following loss functions are supported:

+Several formulations of the objective have been proposed in the literature. Initially, the objective of DPO was defined as presented above.

| `loss_type=` | Description |

| --- | --- |

| `"sigmoid"` (default) | Given the preference data, we can fit a binary classifier according to the Bradley-Terry model and in fact the [DPO](https://huggingface.co/papers/2305.18290) authors propose the sigmoid loss on the normalized likelihood via the `logsigmoid` to fit a logistic regression. |

| `"hinge"` | The [RSO](https://huggingface.co/papers/2309.06657) authors propose to use a hinge loss on the normalized likelihood from the [SLiC](https://huggingface.co/papers/2305.10425) paper. In this case, the `beta` is the reciprocal of the margin. |

-| `"ipo"` | The [IPO](https://huggingface.co/papers/2310.12036) authors provide a deeper theoretical understanding of the DPO algorithms and identify an issue with overfitting and propose an alternative loss. In this case, the `beta` is the reciprocal of the gap between the log-likelihood ratios of the chosen vs the rejected completion pair and thus the smaller the `beta` the larger this gaps is. As per the paper the loss is averaged over log-likelihoods of the completion (unlike DPO which is summed only). |

-| `"exo_pair"` | The [EXO](https://huggingface.co/papers/2402.00856) authors propose to minimize the reverse KL instead of the negative log-sigmoid loss of DPO which corresponds to forward KL. Setting non-zero `label_smoothing` (default `1e-3`) leads to a simplified version of EXO on pair-wise preferences (see Eqn. (16) of the [EXO paper](https://huggingface.co/papers/2402.00856)). The full version of EXO uses `K>2` completions generated by the SFT policy, which becomes an unbiased estimator of the PPO objective (up to a constant) when `K` is sufficiently large. |

+| `"ipo"` | The [IPO](https://huggingface.co/papers/2310.12036) authors argue the logit transform can overfit and propose the identity transform to optimize preferences directly; TRL exposes this as `loss_type="ipo"`. |

+| `"exo_pair"` | The [EXO](https://huggingface.co/papers/2402.00856) authors propose reverse-KL preference optimization. `label_smoothing` must be strictly greater than `0.0`; a recommended value is `1e-3` (see Eq. 16 for the simplified pairwise variant). The full method uses `K>2` SFT completions and approaches PPO as `K` grows. |

| `"nca_pair"` | The [NCA](https://huggingface.co/papers/2402.05369) authors shows that NCA optimizes the absolute likelihood for each response rather than the relative likelihood. |

-| `"robust"` | The [Robust DPO](https://huggingface.co/papers/2403.00409) authors propose an unbiased estimate of the DPO loss that is robust to preference noise in the data. Like in cDPO, it assumes that the preference labels are noisy with some probability. In this approach, the `label_smoothing` parameter in the [`DPOConfig`] is used to model the probability of existing label noise. To apply this conservative loss, set `label_smoothing` to a value greater than 0.0 (between 0.0 and 0.5; the default is 0.0) |

+| `"robust"` | The [Robust DPO](https://huggingface.co/papers/2403.00409) authors propose an unbiased DPO loss under noisy preferences. Use `label_smoothing` in [`DPOConfig`] to model label-flip probability; valid values are in the range `[0.0, 0.5)`. |

| `"bco_pair"` | The [BCO](https://huggingface.co/papers/2404.04656) authors train a binary classifier whose logit serves as a reward so that the classifier maps {prompt, chosen completion} pairs to 1 and {prompt, rejected completion} pairs to 0. For unpaired data, we recommend the dedicated [`experimental.bco.BCOTrainer`]. |

| `"sppo_hard"` | The [SPPO](https://huggingface.co/papers/2405.00675) authors claim that SPPO is capable of solving the Nash equilibrium iteratively by pushing the chosen rewards to be as large as 1/2 and the rejected rewards to be as small as -1/2 and can alleviate data sparsity issues. The implementation approximates this algorithm by employing hard label probabilities, assigning 1 to the winner and 0 to the loser. |

-| `"aot"` or `loss_type="aot_unpaired"` | The [AOT](https://huggingface.co/papers/2406.05882) authors propose to use Distributional Preference Alignment Via Optimal Transport. Traditionally, the alignment algorithms use paired preferences at a sample level, which does not ensure alignment on the distributional level. AOT, on the other hand, can align LLMs on paired or unpaired preference data by making the reward distribution of the positive samples stochastically dominant in the first order on the distribution of negative samples. Specifically, `loss_type="aot"` is appropriate for paired datasets, where each prompt has both chosen and rejected responses; `loss_type="aot_unpaired"` is for unpaired datasets. In a nutshell, `loss_type="aot"` ensures that the log-likelihood ratio of chosen to rejected of the aligned model has higher quantiles than that ratio for the reference model. `loss_type="aot_unpaired"` ensures that the chosen reward is higher on all quantiles than the rejected reward. Note that in both cases quantiles are obtained via sorting. To fully leverage the advantages of the AOT algorithm, it is important to maximize the per-GPU batch size. |

-| `"apo_zero"` or `loss_type="apo_down"` | The [APO](https://huggingface.co/papers/2408.06266) method introduces an "anchored" version of the alignment objective. There are two variants: `apo_zero` and `apo_down`. The `apo_zero` loss increases the likelihood of winning outputs while decreasing the likelihood of losing outputs, making it suitable when the model is less performant than the winning outputs. On the other hand, `apo_down` decreases the likelihood of both winning and losing outputs, but with a stronger emphasis on reducing the likelihood of losing outputs. This variant is more effective when the model is better than the winning outputs. |

+| `"aot"` or `loss_type="aot_unpaired"` | The [AOT](https://huggingface.co/papers/2406.05882) authors propose Distributional Preference Alignment via Optimal Transport. `loss_type="aot"` is for paired data; `loss_type="aot_unpaired"` is for unpaired data. Both enforce stochastic dominance via sorted quantiles; larger per-GPU batch sizes help. |

+| `"apo_zero"` or `loss_type="apo_down"` | The [APO](https://huggingface.co/papers/2408.06266) method introduces an anchored objective. `apo_zero` boosts winners and downweights losers (useful when the model underperforms the winners). `apo_down` downweights both, with stronger pressure on losers (useful when the model already outperforms winners). |

| `"discopop"` | The [DiscoPOP](https://huggingface.co/papers/2406.08414) paper uses LLMs to discover more efficient offline preference optimization losses. In the paper the proposed DiscoPOP loss (which is a log-ratio modulated loss) outperformed other optimization losses on different tasks (IMDb positive text generation, Reddit TLDR summarization, and Alpaca Eval 2.0). |

| `"sft"` | SFT (Supervised Fine-Tuning) loss is the negative log likelihood loss, used to train the model to generate preferred responses. |

+## Logged metrics

+

+While training and evaluating we record the following reward metrics:

+

+* `global_step`: The total number of optimizer steps taken so far.

+* `epoch`: The current epoch number, based on dataset iteration.

+* `num_tokens`: The total number of tokens processed so far.

+* `loss`: The average cross-entropy loss computed over non-masked tokens in the current logging interval.

+* `entropy`: The average entropy of the model's predicted token distribution over non-masked tokens.

+* `mean_token_accuracy`: The proportion of non-masked tokens for which the model’s top-1 prediction matches the token from the chosen completion.

+* `learning_rate`: The current learning rate, which may change dynamically if a scheduler is used.

+* `grad_norm`: The L2 norm of the gradients, computed before gradient clipping.

+* `logits/chosen`: The average logit values assigned by the model to the tokens in the chosen completion.

+* `logits/rejected`: The average logit values assigned by the model to the tokens in the rejected completion.

+* `logps/chosen`: The average log-probability assigned by the model to the tokens in the chosen completion.

+* `logps/rejected`: The average log-probability assigned by the model to the tokens in the rejected completion.

+* `rewards/chosen`: The average implicit reward computed for the chosen completion, computed as \\( \beta \log \frac{{\pi_{\theta}(y^{+}\!\mid x)}}{{\pi_{\mathrm{ref}}(y^{+}\!\mid x)}} \\).

+* `rewards/rejected`: The average implicit reward computed for the rejected completion, computed as \\( \beta \log \frac{{\pi_{\theta}(y^{-}\!\mid x)}}{{\pi_{\mathrm{ref}}(y^{-}\!\mid x)}} \\).

+* `rewards/margins`: The average implicit reward margin between the chosen and rejected completions.

+* `rewards/accuracies`: The proportion of examples where the implicit reward for the chosen completion is higher than that for the rejected completion.

+

+## Customization

+

+### Compatibility and constraints

+

+Some argument combinations are intentionally restricted in the current [`DPOTrainer`] implementation:

+

+* `use_weighting=True` is not supported with `loss_type="aot"` or `loss_type="aot_unpaired"`.

+* With `use_liger_kernel=True`:

+ * only a single `loss_type` is supported,

+ * `compute_metrics` is not supported,

+ * `precompute_ref_log_probs=True` is not supported.

+* `sync_ref_model=True` is not supported when training with PEFT models that do not keep a standalone `ref_model`.

+* `sync_ref_model=True` cannot be combined with `precompute_ref_log_probs=True`.

+* `precompute_ref_log_probs=True` is not supported with `IterableDataset` (train or eval).

+

### Multi-loss combinations

The DPO trainer supports combining multiple loss functions with different weights, enabling more sophisticated optimization strategies. This is particularly useful for implementing algorithms like MPO (Mixed Preference Optimization). MPO is a training approach that combines multiple optimization objectives, as described in the paper [Enhancing the Reasoning Ability of Multimodal Large Language Models via Mixed Preference Optimization](https://huggingface.co/papers/2411.10442).

@@ -152,141 +166,123 @@ To combine multiple losses, specify the loss types and corresponding weights as

```python

# MPO: Combines DPO (sigmoid) for preference and BCO (bco_pair) for quality

training_args = DPOConfig(

- loss_type=["sigmoid", "bco_pair", "sft"], # Loss types to combine

- loss_weights=[0.8, 0.2, 1.0] # Corresponding weights, as used in the MPO paper

+ loss_type=["sigmoid", "bco_pair", "sft"], # loss types to combine

+ loss_weights=[0.8, 0.2, 1.0] # corresponding weights, as used in the MPO paper

)

```

-If `loss_weights` is not provided, all loss types will have equal weights (1.0 by default).

-

-### Label smoothing

-

-The [cDPO](https://ericmitchell.ai/cdpo.pdf) is a tweak on the DPO loss where we assume that the preference labels are noisy with some probability. In this approach, the `label_smoothing` parameter in the [`DPOConfig`] is used to model the probability of existing label noise. To apply this conservative loss, set `label_smoothing` to a value greater than 0.0 (between 0.0 and 0.5; the default is 0.0).

+### Model initialization

-### Syncing the reference model

+You can directly pass the kwargs of the [`~transformers.AutoModelForCausalLM.from_pretrained()`] method to the [`DPOConfig`]. For example, if you want to load a model in a different precision, analogous to

-The [TR-DPO](https://huggingface.co/papers/2404.09656) paper suggests syncing the reference model weights after every `ref_model_sync_steps` steps of SGD with weight `ref_model_mixup_alpha` during DPO training. To toggle this callback use the `sync_ref_model=True` in the [`DPOConfig`].

-

-### RPO loss

-

-The [RPO](https://huggingface.co/papers/2404.19733) paper implements an iterative preference tuning algorithm using a loss related to the RPO loss in this [paper](https://huggingface.co/papers/2405.16436) that essentially consists of a weighted SFT loss on the chosen preferences together with the DPO loss. To use this loss, include `"sft"` in the `loss_type` list in the [`DPOConfig`] and set its weight in `loss_weights`.

+```python

+model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen3-0.6B", dtype=torch.bfloat16)

+```

-> [!WARNING]

-> The old implementation of RPO loss in TRL used the `rpo_alpha` parameter. This parameter is deprecated and will be removed in 0.29.0; instead.

+you can do so by passing the `model_init_kwargs={"dtype": torch.bfloat16}` argument to the [`DPOConfig`].

-### WPO loss

+```python

+from trl import DPOConfig

-The [WPO](https://huggingface.co/papers/2406.11827) paper adapts off-policy data to resemble on-policy data more closely by reweighting preference pairs according to their probability under the current policy. To use this method, set the `use_weighting` flag to `True` in the [`DPOConfig`].

+training_args = DPOConfig(

+ model_init_kwargs={"dtype": torch.bfloat16},

+)

+```

-### LD-DPO loss

+Note that all keyword arguments of [`~transformers.AutoModelForCausalLM.from_pretrained()`] are supported.

-The [LD-DPO](https://huggingface.co/papers/2409.06411) paper decomposes the portion of the response that exceeds the desired length into two components — human-like preferences and verbosity preference — based on a mixing coefficient \\( \alpha \\). To use this method, set the `ld_alpha` in the [`DPOConfig`] to an appropriate value. The paper suggests setting this value between `0.0` and `1.0`.

+### Train adapters with PEFT

-### For Mixture of Experts Models: Enabling the auxiliary loss

+We support tight integration with 🤗 PEFT library, allowing any user to conveniently train adapters and share them on the Hub, rather than training the entire model.

-MOEs are the most efficient if the load is about equally distributed between experts.

-To ensure that we train MOEs similarly during preference-tuning, it is beneficial to add the auxiliary loss from the load balancer to the final loss.

+```python

+from datasets import load_dataset

+from trl import DPOTrainer

+from peft import LoraConfig

-This option is enabled by setting `output_router_logits=True` in the model config (e.g. [`~transformers.MixtralConfig`]).

-To scale how much the auxiliary loss contributes to the total loss, use the hyperparameter `router_aux_loss_coef=...` (default: `0.001`) in the model config.

+dataset = load_dataset("trl-lib/ultrafeedback_binarized", split="train")

-### Rapid Experimentation for DPO

+trainer = DPOTrainer(

+ "Qwen/Qwen3-0.6B",

+ train_dataset=dataset,

+ peft_config=LoraConfig(),

+)

-RapidFire AI is an open-source experimentation engine that sits on top of TRL and lets you launch multiple DPO configurations at once, even on a single GPU. Instead of trying configurations sequentially, RapidFire lets you **see all their learning curves earlier, stop underperforming runs, and clone promising ones with new settings in flight** without restarting. For more information, see [RapidFire AI Integration](rapidfire_integration).

+trainer.train()

+```

-## Accelerate DPO fine-tuning using `unsloth`

+You can also continue training your [`~peft.PeftModel`]. For that, first load a `PeftModel` outside [`DPOTrainer`] and pass it directly to the trainer without the `peft_config` argument being passed.

-You can further accelerate QLoRA / LoRA (2x faster, 60% less memory) using the [`unsloth`](https://github.com/unslothai/unsloth) library that is fully compatible with `SFTTrainer`. Currently `unsloth` supports only Llama (Yi, TinyLlama, Qwen, Deepseek etc) and Mistral architectures. Some benchmarks for DPO listed below:

+```python

+from datasets import load_dataset

+from trl import DPOTrainer

+from peft import AutoPeftModelForCausalLM

-| GPU | Model | Dataset | 🤗 | 🤗 + FlashAttention 2 | 🦥 Unsloth | 🦥 VRAM saved |

-| --- | --- | --- | --- | --- | --- | --- |

-| A100 40G | Zephyr 7b | Ultra Chat | 1x | 1.24x | **1.88x** | -11.6% |

-| Tesla T4 | Zephyr 7b | Ultra Chat | 1x | 1.09x | **1.55x** | -18.6% |

+model = AutoPeftModelForCausalLM.from_pretrained("trl-lib/Qwen3-4B-LoRA", is_trainable=True)

+dataset = load_dataset("trl-lib/ultrafeedback_binarized", split="train")

-First install `unsloth` according to the [official documentation](https://github.com/unslothai/unsloth). Once installed, you can incorporate unsloth into your workflow in a very simple manner; instead of loading `AutoModelForCausalLM`, you just need to load a `FastLanguageModel` as follows:

+trainer = DPOTrainer(

+ model=model,

+ train_dataset=dataset,

+)

-```diff

- from datasets import load_dataset

- from trl import DPOConfig, DPOTrainer

-- from transformers import AutoModelForCausalLM, AutoTokenizer

-+ from unsloth import FastLanguageModel

+trainer.train()

+```

-- model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen2-0.5B-Instruct")

-- tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen2-0.5B-Instruct")

-+ model, tokenizer = FastLanguageModel.from_pretrained("Qwen/Qwen2-0.5B-Instruct")

-+ model = FastLanguageModel.get_peft_model(model)

- train_dataset = load_dataset("trl-lib/ultrafeedback_binarized", split="train")

+> [!TIP]

+> When training adapters, you typically use a higher learning rate (≈1e‑5) since only new parameters are being learned.

+>

+> ```python

+> DPOConfig(learning_rate=1e-5, ...)

+> ```

-- training_args = DPOConfig(output_dir="Qwen2-0.5B-DPO")

-+ training_args = DPOConfig(output_dir="Qwen2-0.5B-DPO", bf16=True)

- trainer = DPOTrainer(model=model, args=training_args, processing_class=tokenizer, train_dataset=train_dataset)

- trainer.train()

+### Train with Liger Kernel

-```

+Liger Kernel is a collection of Triton kernels for LLM training that boosts multi-GPU throughput by 20%, cuts memory use by 60% (enabling up to 4× longer context), and works seamlessly with tools like FlashAttention, PyTorch FSDP, and DeepSpeed. For more information, see [Liger Kernel Integration](liger_kernel_integration).

-The saved model is fully compatible with Hugging Face's transformers library. Learn more about unsloth in their [official repository](https://github.com/unslothai/unsloth).

+### Rapid Experimentation for DPO

-## Reference model considerations with PEFT

+RapidFire AI is an open-source experimentation engine that sits on top of TRL and lets you launch multiple DPO configurations at once, even on a single GPU. Instead of trying configurations sequentially, RapidFire lets you **see all their learning curves earlier, stop underperforming runs, and clone promising ones with new settings in flight** without restarting. For more information, see [RapidFire AI Integration](rapidfire_integration).

-You have three main options (plus several variants) for how the reference model works when using PEFT, assuming the model that you would like to further enhance with DPO was tuned using (Q)LoRA.

+### Train with Unsloth

-1. Simply create two instances of the model, each loading your adapter - works fine but is very inefficient.

-2. Merge the adapter into the base model, create another adapter on top, then leave the `ref_model` param null, in which case DPOTrainer will unload the adapter for reference inference - efficient, but has potential downsides discussed below.

-3. Load the adapter twice with different names, then use `set_adapter` during training to swap between the adapter being DPO'd and the reference adapter - slightly less efficient compared to 2 (~adapter size VRAM overhead), but avoids the pitfalls.

+Unsloth is an open‑source framework for fine‑tuning and reinforcement learning that trains LLMs (like Llama, Mistral, Gemma, DeepSeek, and more) up to 2× faster with up to 70% less VRAM, while providing a streamlined, Hugging Face–compatible workflow for training, evaluation, and deployment. For more information, see [Unsloth Integration](unsloth_integration).

-### Downsides to merging QLoRA before DPO (approach 2)

+## Tool Calling with DPO

-As suggested by [Benjamin Marie](https://medium.com/@bnjmn_marie/dont-merge-your-lora-adapter-into-a-4-bit-llm-65b6da287997), the best option for merging QLoRA adapters is to first dequantize the base model, then merge the adapter. Something similar to [this script](https://github.com/jondurbin/qlora/blob/main/qmerge.py).

+The [`DPOTrainer`] fully supports fine-tuning models with _tool calling_ capabilities. In this case, each dataset example should include:

-However, after using this approach, you will have an unquantized base model. Therefore, to use QLoRA for DPO, you will need to re-quantize the merged model or use the unquantized merge (resulting in higher memory demand).

+* The conversation messages (prompt, chosen and rejected), including any tool calls (`tool_calls`) and tool responses (`tool` role messages)

+* The list of available tools in the `tools` column, typically provided as JSON `str` schemas

-### Using option 3 - load the adapter twice

+For details on the expected dataset structure, see the [Dataset Format — Tool Calling](dataset_formats#tool-calling) section.

-To avoid the downsides with option 2, you can load your fine-tuned adapter into the model twice, with different names, and set the model/ref adapter names in [`DPOTrainer`].

+## Training Vision Language Models

-For example:

+[`DPOTrainer`] fully supports training Vision-Language Models (VLMs). To train a VLM, provide a dataset with either an `image` column (single image per sample) or an `images` column (list of images per sample). For more information on the expected dataset structure, see the [Dataset Format — Vision Dataset](dataset_formats#vision-dataset) section.

+An example of such a dataset is the [RLAIF-V Dataset](https://huggingface.co/datasets/HuggingFaceH4/rlaif-v_formatted) dataset.

```python

-# Load the base model.

-bnb_config = BitsAndBytesConfig(

- load_in_4bit=True,

- llm_int8_threshold=6.0,

- llm_int8_has_fp16_weight=False,

- bnb_4bit_compute_dtype=torch.bfloat16,

- bnb_4bit_use_double_quant=True,

- bnb_4bit_quant_type="nf4",

-)

-model = AutoModelForCausalLM.from_pretrained(

- "mistralai/mixtral-8x7b-v0.1",

- load_in_4bit=True,

- quantization_config=bnb_config,

- attn_implementation="kernels-community/flash-attn2",

- dtype=torch.bfloat16,

- device_map="auto",

-)

-

-# Load the adapter.

-model = PeftModel.from_pretrained(

- model,

- "/path/to/peft",

- is_trainable=True,

- adapter_name="train",

-)

-# Load the adapter a second time, with a different name, which will be our reference model.

-model.load_adapter("/path/to/peft", adapter_name="reference")

+from trl import DPOConfig, DPOTrainer

+from datasets import load_dataset

-# Initialize the trainer, without a ref_model param.

-training_args = DPOConfig(

- model_adapter_name="train",

- ref_adapter_name="reference",

-)

-dpo_trainer = DPOTrainer(

- model,

- args=training_args,

- ...

+trainer = DPOTrainer(

+ model="Qwen/Qwen2.5-VL-3B-Instruct",

+ args=DPOConfig(max_length=None),

+ train_dataset=load_dataset("HuggingFaceH4/rlaif-v_formatted", split="train"),

)

+trainer.train()

```

+> [!TIP]

+> For VLMs, truncating may remove image tokens, leading to errors during training. To avoid this, set `max_length=None` in the [`DPOConfig`]. This allows the model to process the full sequence length without truncating image tokens.

+>

+> ```python

+> DPOConfig(max_length=None, ...)

+> ```

+>

+> Only use `max_length` when you've verified that truncation won't remove image tokens for the entire dataset.

+

## DPOTrainer

[[autodoc]] DPOTrainer

@@ -302,3 +298,6 @@ dpo_trainer = DPOTrainer(

[[autodoc]] trainer.dpo_trainer.DataCollatorForPreference

+## DataCollatorForVisionPreference

+

+[[autodoc]] trainer.dpo_trainer.DataCollatorForVisionPreference

diff --git a/docs/source/example_overview.md b/docs/source/example_overview.md

index 893c2eacd5e..603760f14d9 100644

--- a/docs/source/example_overview.md

+++ b/docs/source/example_overview.md

@@ -44,7 +44,7 @@ These notebooks are easier to run and are designed for quick experimentation wit

## Scripts

-Scripts are maintained in the [`trl/scripts`](https://github.com/huggingface/trl/blob/main/trl/scripts) and [`examples/scripts`](https://github.com/huggingface/trl/blob/main/examples/scripts) directories. They show how to use different trainers such as `SFTTrainer`, `PPOTrainer`, `DPOTrainer`, `GRPOTrainer`, and more.

+Scripts are maintained in the [`trl/scripts`](https://github.com/huggingface/trl/blob/main/trl/scripts) and [`examples/scripts`](https://github.com/huggingface/trl/blob/main/examples/scripts) directories. They show how to use different trainers such as [`SFTTrainer`], [`PPOTrainer`], [`DPOTrainer`], [`GRPOTrainer`], and more.

| File | Description |

| --- | --- |

diff --git a/docs/source/lora_without_regret.md b/docs/source/lora_without_regret.md

index 2875d662288..c77392e2e19 100644

--- a/docs/source/lora_without_regret.md

+++ b/docs/source/lora_without_regret.md

@@ -277,7 +277,6 @@ Here are the parameters we used to train the above models

| `--model_name_or_path` | HuggingFaceTB/SmolLM3-3B | HuggingFaceTB/SmolLM3-3B |

| `--dataset_name` | HuggingFaceH4/OpenR1-Math-220k-default-verified | HuggingFaceH4/OpenR1-Math-220k-default-verified |

| `--learning_rate` | 1.0e-5 | 1.0e-6 |

-| `--max_prompt_length` | 1024 | 1024 |

| `--max_completion_length` | 4096 | 4096 |

| `--lora_r` | 1 | - |

| `--lora_alpha` | 32 | - |

diff --git a/docs/source/model_utils.md b/docs/source/model_utils.md

index 6cfdc7b571b..cf5fbfae900 100644

--- a/docs/source/model_utils.md

+++ b/docs/source/model_utils.md

@@ -7,7 +7,3 @@

## disable_gradient_checkpointing

[[autodoc]] models.utils.disable_gradient_checkpointing

-

-## create_reference_model

-

-[[autodoc]] create_reference_model

diff --git a/docs/source/paper_index.md b/docs/source/paper_index.md

index b4a73346074..a8734524a00 100644

--- a/docs/source/paper_index.md

+++ b/docs/source/paper_index.md

@@ -1,7 +1,6 @@

# Paper Index

-> [!WARNING]

-> Section under construction. Feel free to contribute! See https://github.com/huggingface/trl/issues/4407.

+

## Group Relative Policy Optimization

@@ -218,7 +217,7 @@ training_args = GRPOConfig(

per_device_train_batch_size=1, # train_batch_size_per_device in the Training section of the repository

num_generations=8, # num_samples in the Training section of the repository

max_completion_length=3000, # generate_max_length in the Training section of the repository

- beta=0.0, # beta in the Training section of the repository

+ beta=0.0, # β in the Training section of the repository

)

```

@@ -609,60 +608,142 @@ training_args = DPOConfig(

loss_type="sigmoid", # losses in Appendix B of the paper

per_device_train_batch_size=64, # batch size in Appendix B of the paper

learning_rate=1e-6, # learning rate in Appendix B of the paper

- beta=0.1, # beta in Appendix B of the paper

+ beta=0.1, # β in Appendix B of the paper

)

```

-### A General Theoretical Paradigm to Understand Learning from Human Preferences

+### SLiC-HF: Sequence Likelihood Calibration with Human Feedback

-**📜 Paper**: https://huggingface.co/papers/2310.12036

+**📜 Paper**: https://huggingface.co/papers/2305.10425

-A new general objective, \\( \Psi \\)PO, bypasses both key approximations in reinforcement learning from human preferences, allowing for theoretical analysis and empirical superiority over DPO. To reproduce the paper's setting, use this configuration:

+Sequence Likelihood Calibration (SLiC) is shown to be an effective and simpler alternative to Reinforcement Learning from Human Feedback (RLHF) for learning from human preferences in language models. To reproduce the paper's setting, use this configuration:

```python

from trl import DPOConfig

training_args = DPOConfig(

- loss_type="ipo", # Section 5.1 of the paper

- per_device_train_batch_size=90, # mini-batch size in Section C.1 of the paper

- learning_rate=1e-2, # learning rate in Section C.1 of the paper

+ loss_type="hinge", # Section 2 of the paper

+ per_device_train_batch_size=512, # batch size in Section 3.2 of the paper

+ learning_rate=1e-4, # learning rate in Section 3.2 of the paper

)

```

-These parameters only appear in the [published version](https://proceedings.mlr.press/v238/gheshlaghi-azar24a/gheshlaghi-azar24a.pdf)

+These parameters only appear in the [published version](https://openreview.net/pdf?id=0qSOodKmJaN)

-### SLiC-HF: Sequence Likelihood Calibration with Human Feedback

+### Statistical Rejection Sampling Improves Preference Optimization

-**📜 Paper**: https://huggingface.co/papers/2305.10425

+**📜 Paper**: https://huggingface.co/papers/2309.06657

-Sequence Likelihood Calibration (SLiC) is shown to be an effective and simpler alternative to Reinforcement Learning from Human Feedback (RLHF) for learning from human preferences in language models. To reproduce the paper's setting, use this configuration:

+Proposes **RSO**, selecting stronger preference pairs via statistical rejection sampling to boost offline preference optimization; complements DPO/SLiC. They also introduce a new loss defined as:

+

+$$

+\mathcal{L}_{\text{hinge-norm}}(\pi_\theta)

+= \mathbb{E}_{(x, y_w, y_l) \sim \mathcal{D}}

+\left[

+\max\left(0,\; 1 - \left[\gamma \log \frac{\pi_\theta(y_w \mid x)}{\pi_\text{ref}(y_w \mid x)} - \gamma \log \frac{\pi_\theta(y_l \mid x)}{\pi_\text{ref}(y_l \mid x)}\right]\right)

+\right]

+$$

+

+To train with RSO-filtered data and the hinge-norm loss, you can use the following code:

+

+```python

+from trl import DPOConfig, DPOTrainer

+

+dataset = ...

+

+def rso_accept(example): # replace with your actual filter/score logic

+ return example["rso_keep"]

+

+train_dataset = train_dataset.filter(rso_accept)

+

+training_args = DPOConfig(

+ loss_type="hinge",

+ beta=0.05, # correspond to γ in the paper

+)

+

+trainer = DPOTrainer(

+ ...,

+ args=training_args,

+ train_dataset=train_dataset,

+)

+trainer.train()

+```

+

+### Beyond Reverse KL: Generalizing Direct Preference Optimization with Diverse Divergence Constraints

+

+**📜 Paper**: https://huggingface.co/papers/2309.16240

+

+Proposes \(( f \\)-DPO, extending DPO by replacing the usual reverse-KL regularizer with a general \(( f \\)-divergence, letting you trade off mode-seeking vs mass-covering behavior (e.g. forward KL, JS, \(( \alpha \\)-divergences). The only change is replacing the DPO log-ratio margin with an **f′ score**:

+

+$$

+\mathcal{L}_{f\text{-DPO}}(\pi_\theta)

+= \mathbb{E}_{(x, y_w, y_l) \sim \mathcal{D}}

+\left[

+-\log \sigma\left(

+\beta \textcolor{red}{f'}\textcolor{red}{\Big(}\frac{\pi_\theta(y_w|x)}{\pi_{\text{ref}}(y_w|x)}\textcolor{red}{\Big)}

+-

+\beta \textcolor{red}{f'}\textcolor{red}{\Big(}\frac{\pi_\theta(y_l|x)}{\pi_{\text{ref}}(y_l|x)}\textcolor{red}{\Big)}

+\right)

+\right]

+$$

+

+Where \\( f' \\) is the derivative of the convex function defining the chosen \(( f \\)-divergence.

+

+To reproduce:

```python

from trl import DPOConfig

training_args = DPOConfig(

- loss_type="hinge", # Section 2 of the paper

- per_device_train_batch_size=512, # batch size in Section 3.2 of the paper

- learning_rate=1e-4, # learning rate in Section 3.2 of the paper

+ loss_type="sigmoid",

+ beta=0.1,

+ f_divergence_type="js_divergence", # or "reverse_kl" (default), "forward_kl", "js_divergence", "alpha_divergence"

+ f_alpha_divergence_coef=0.5, # only used if f_divergence_type="alpha_divergence"

)

```

-These parameters only appear in the [published version](https://openreview.net/pdf?id=0qSOodKmJaN)

+### A General Theoretical Paradigm to Understand Learning from Human Preferences

+

+**📜 Paper**: https://huggingface.co/papers/2310.12036

+

+Learning from human preferences can be written as a single KL-regularized objective over pairwise preference probabilities,

+

+$$

+\max_\pi ;\mathbb{E}\big[\Psi\left(p^*(y \succ y' \mid x)\right)\big] - \tau\mathrm{KL}(\pi||\pi_{\text{ref}}),

+$$

+

+which reveals RLHF and DPO as special cases corresponding to the logit choice of \\( \Psi \\).

+The paper shows that this logit transform amplifies near-deterministic preferences and effectively weakens KL regularization, explaining overfitting.

+Using the **Identity transform (IPO)** avoids this pathology by optimizing preferences directly, without assuming a Bradley–Terry reward model.

+To reproduce the paper's setting, use this configuration:

+

+```python

+from trl import DPOConfig

+

+training_args = DPOConfig(

+ loss_type="ipo", # Section 5.1 of the paper

+ per_device_train_batch_size=90, # mini-batch size in Section C.1 of the paper

+ learning_rate=1e-2, # learning rate in Section C.1 of the paper

+)

+```

+

+These parameters only appear in the [published version](https://proceedings.mlr.press/v238/gheshlaghi-azar24a/gheshlaghi-azar24a.pdf)

### Towards Efficient and Exact Optimization of Language Model Alignment

**📜 Paper**: https://huggingface.co/papers/2402.00856

-Efficient exact optimization (EXO) method is proposed to align language models with human preferences, providing a guaranteed and efficient alternative to reinforcement learning and direct preference optimization. To reproduce the paper's setting, use this configuration:

+The paper shows that direct preference methods like DPO optimize the wrong KL direction, leading to blurred preference capture, and proposes EXO as an efficient way to exactly optimize the human‑preference alignment objective by leveraging reverse KL probability matching rather than forward KL approximations. To reproduce the paper's setting, use this configuration:

```python

from trl import DPOConfig

training_args = DPOConfig(

loss_type="exo_pair", # Section 3.2 of the paper

- per_device_train_batch_size=64, # batch size in Section B of the paper

- learning_rate=1e-6, # learning rate in Section B of the paper

- beta=0.1, # $\beta_r$ in Section B of the paper

+ # From Section B of the paper

+ per_device_train_batch_size=64,

+ learning_rate=1e-6,

+ beta=0.1,

)

```

@@ -670,16 +751,21 @@ training_args = DPOConfig(

**📜 Paper**: https://huggingface.co/papers/2402.05369

-A framework using Noise Contrastive Estimation enhances language model alignment with both scalar rewards and pairwise preferences, demonstrating advantages over Direct Preference Optimization. To reproduce the paper's setting, use this configuration:

+The paper reframes language-model alignment as a *noise-contrastive classification* problem, proposing InfoNCA to learn a policy from explicit rewards (or preferences) by matching a reward-induced target distribution over responses, and showing DPO is a special binary case. It then introduces NCA, which adds an absolute likelihood term to prevent the likelihood collapse seen in purely relative (contrastive) objectives.

+

+With pairwise preferences, treat the chosen/rejected \\( K=2 \\), define scores \\( r=\beta(\log\pi_\theta-\log\pi_{\text{ref}}) \\), and apply the NCA preference loss \\( -\log\sigma(r_w)-\tfrac12\log\sigma(-r_w)-\tfrac12\log\sigma(-r_l) \\).

+

+To reproduce the paper's setting, use this configuration:

```python

from trl import DPOConfig

training_args = DPOConfig(

- loss_type="nca_pair", # Section 4.1 of the paper

- per_device_train_batch_size=32, # batch size in Section C of the paper

- learning_rate=5e-6, # learning rate in Section C of the paper

- beta=0.01, # $\alpha$ in Section C of the paper

+ loss_type="nca_pair",

+ # From Section C of the paper

+ per_device_train_batch_size=32,

+ learning_rate=5e-6,

+ beta=0.01,

)

```

@@ -687,19 +773,27 @@ training_args = DPOConfig(

**📜 Paper**: https://huggingface.co/papers/2403.00409

-The paper introduces a robust direct preference optimization (rDPO) framework to address noise in preference-based feedback for language models, proving its sub-optimality gap and demonstrating its effectiveness through experiments. To reproduce the paper's setting, use this configuration:

+DPO breaks under noisy human preferences because label flips bias the objective. Robust DPO fixes this by analytically debiasing the DPO loss under a simple noise model, with provable guarantees.

+

+$$

+\mathcal{L}_{\text{robust}}(\pi_\theta) = \frac{(1-\varepsilon)\mathcal{L}_{\text{DPO}}(y_w, y_l) - \varepsilon\mathcal{L}_{\text{DPO}}(y_l, y_w)}

+{1-2\varepsilon}

+$$

+

+Where \\( \mathcal{L}_{\text{DPO}} \\) is the DPO loss defined in [Direct Preference Optimization: Your Language Model is Secretly a Reward Model](#direct-preference-optimization-your-language-model-is-secretly-a-reward-model) and \\( \varepsilon \\) is the probability of a label flip.

+

+This single correction turns noisy preference data into an unbiased estimator of the clean DPO objective.

```python

from trl import DPOConfig

training_args = DPOConfig(

- loss_type="robust", # Section 3.1 of the paper

- per_device_train_batch_size=16, # batch size in Section B of the paper

- learning_rate=1e-3, # learning rate in Section B of the paper

- beta=0.01, # $\beta$ in Section B of the paper,

- max_length=512, # max length in Section B of the paper

- label_smoothing=0.1 # label smoothing $\epsilon$ in section 6 of the paper

-

+ loss_type="robust",

+ per_device_train_batch_size=16, # batch size in Section B of the paper

+ learning_rate=1e-3, # learning rate in Section B of the paper

+ beta=0.1, # β in Section B of the paper,

+ max_length=512, # max length in Section B of the paper

+ label_smoothing=0.1 # label smoothing $\varepsilon$ in Section 6 of the paper

)

```

@@ -709,14 +803,34 @@ training_args = DPOConfig(

Theoretical analysis and a new algorithm, Binary Classifier Optimization, explain and enhance the alignment of large language models using binary feedback signals. To reproduce the paper's setting, use this configuration:

+BCO reframes language-model alignment as behavioral cloning from an optimal reward-weighted distribution, yielding simple supervised objectives that avoid RL while remaining theoretically grounded.

+It supports both unpaired reward data and pairwise preference data, with a reward-shift–invariant formulation that reduces to a DPO-style loss in the preference setting.

+

+For the pairwise preference setting, the BCO loss is defined as:

+

+$$

+\mathcal{L}_{\text{bco\_pair}}(\pi_\theta) =

+\mathbb{E}_{(x, y_w, y_l) \sim \mathcal{D}}

+\left[

+-\log \sigma\Big(

+\beta[(\log\pi_\theta-\log\pi_{\text{ref}})(y_w)

+-

+(\log\pi_\theta-\log\pi_{\text{ref}})(y_l)]

+\Big)

+\right]

+$$

+

+To reproduce the paper in this setting, use this configuration:

+

```python

from trl import DPOConfig

training_args = DPOConfig(

- loss_type="bco_pair", # Section 4 of the paper

- per_device_train_batch_size=128, # batch size in Section C of the paper

- learning_rate=5e-7, # learning rate in Section C of the paper

- beta=0.01, # $\beta$ in Section C of the paper,

+ loss_type="bco_pair",

+ # From Section C of the paper

+ per_device_train_batch_size=128,

+ learning_rate=5e-7,

+ beta=0.01,

)

```

@@ -744,6 +858,29 @@ training_args = DPOConfig(

)

```

+### Iterative Reasoning Preference Optimization

+

+**📜 Paper**: https://huggingface.co/papers/2404.19733

+

+Iterative RPO improves reasoning by repeatedly generating chain-of-thought candidates, building preference pairs from correct vs. incorrect answers, and training with a DPO + NLL objective. The extra NLL term is key for learning to actually generate winning traces.

+

+TRL can express the DPO + NLL objective by mixing `"sigmoid"` (DPO) with `"sft"` (NLL):

+

+```python

+from trl import DPOConfig, DPOTrainer

+

+training_args = DPOConfig(

+ loss_type=["sigmoid", "sft"],

+ loss_weights=[1.0, 1.0], # alpha in the paper, recommended value is 1.0

+)

+trainer = DPOTrainer(

+ ...,

+ args=training_args,

+)

+```

+

+Note that the paper uses an iterative loop: each iteration regenerates CoT candidates with the current model, then retrains on fresh preference pairs. TRL does not automate that loop for you.

+

### Self-Play Preference Optimization for Language Model Alignment

**📜 Paper**: https://huggingface.co/papers/2405.00675

@@ -754,9 +891,11 @@ A self-play method called SPPO for language model alignment achieves state-of-th

from trl import DPOConfig

training_args = DPOConfig(

- loss_type="sppo_hard", # Section 3 of the paper

- per_device_train_batch_size=64, # batch size in Section C of the paper

- learning_rate=5e-7, # learning rate in Section C of the paper

+ loss_type="sppo_hard",

+ # From Section 5 of the paper

+ beta=0.001, # β = η^−1

+ per_device_train_batch_size=64,

+ learning_rate=5e-7,

)

```

@@ -788,15 +927,19 @@ Alignment via Optimal Transport (AOT) aligns large language models distributiona

from trl import DPOConfig

training_args = DPOConfig(

- loss_type="aot", # Section 3 of the paper

+ loss_type="aot",

+ beta=0.01, # from the caption of Figure 2

)

```

+or, for the unpaired version:

+

```python

from trl import DPOConfig

training_args = DPOConfig(

- loss_type="aot_unpaired", # Section 3 of the paper

+ loss_type="aot_unpaired",

+ beta=0.01, # from the caption of Figure 2

)

```

@@ -812,11 +955,39 @@ An LLM-driven method automatically discovers performant preference optimization

from trl import DPOConfig

training_args = DPOConfig(

- loss_type="discopop", # Section 3 of the paper

- per_device_train_batch_size=64, # batch size in Section B.1 of the paper

- learning_rate=5e-7, # learning rate in Section B.1 of the paper

- beta=0.05, # $\beta$ in Section B.1 of the paper,

- discopop_tau=0.05 # $\tau$ in Section E of the paper

+ loss_type="discopop",

+ per_device_train_batch_size=64, # batch size in Section B.1 of the paper

+ learning_rate=5e-7, # learning rate in Section B.1 of the paper

+ beta=0.05, # β in Section B.1 of the paper,

+ discopop_tau=0.05 # τ in Section E of the paper

+)

+```

+

+### WPO: Enhancing RLHF with Weighted Preference Optimization

+

+**📜 Paper**: https://huggingface.co/papers/2406.11827

+

+WPO reweights preference pairs by their policy probabilities to reduce the off-policy gap in DPO-style training. The loss is:

+

+$$

+\mathcal{L}_{\text{WPO}} = -\mathbb{E}_{(x, y_w, y_l) \sim \mathcal{D}} \left[ \textcolor{red}{w(x, y_w) w(x, y_l)} \log p(y_w \succ y_l \mid x) \right]

+$$

+

+where the weight \\( w(x, y) \\) is defined as:

+

+$$

+w(x, y) = \exp\left(\frac{1}{|y|}\sum_{t=1}^{|y|} \log \frac{\pi_\theta(y_t \mid x, y_{ [!WARNING]

-> The legacy `max_prompt_length` and `max_completion_length` parameters are deprecated and will be removed; instead, filter or pre-truncate overlong prompts/completions in your dataset before training.

+> The legacy `max_prompt_length` and `max_completion_length` parameters are now removed; instead, filter or pre-truncate overlong prompts/completions in your dataset before training.

diff --git a/docs/source/sft_trainer.md b/docs/source/sft_trainer.md

index 0244455385b..c1eaf46b65e 100644

--- a/docs/source/sft_trainer.md

+++ b/docs/source/sft_trainer.md

@@ -23,7 +23,7 @@ trainer = SFTTrainer(

trainer.train()

```

-

+

## Expected dataset type and format

@@ -194,7 +194,7 @@ dataset = load_dataset("trl-lib/Capybara", split="train")

trainer = SFTTrainer(

"Qwen/Qwen3-0.6B",

train_dataset=dataset,

- peft_config=LoraConfig()

+ peft_config=LoraConfig(),

)

trainer.train()

@@ -295,7 +295,7 @@ For details on the expected dataset structure, see the [Dataset Format — Tool

## Training Vision Language Models

-[`SFTTrainer`] fully supports training Vision-Language Models (VLMs). To train a VLM, you need to provide a dataset with an additional `images` column containing the images to be processed. For more information on the expected dataset structure, see the [Dataset Format — Vision Dataset](dataset_formats#vision-dataset) section.

+[`SFTTrainer`] fully supports training Vision-Language Models (VLMs). To train a VLM, provide a dataset with either an `image` column (single image per sample) or an `images` column (list of images per sample). For more information on the expected dataset structure, see the [Dataset Format — Vision Dataset](dataset_formats#vision-dataset) section.

An example of such a dataset is the [LLaVA Instruct Mix](https://huggingface.co/datasets/trl-lib/llava-instruct-mix).

```python

diff --git a/examples/notebooks/grpo_agent.ipynb b/examples/notebooks/grpo_agent.ipynb

index 070738cea8c..9fec579c293 100644

--- a/examples/notebooks/grpo_agent.ipynb

+++ b/examples/notebooks/grpo_agent.ipynb

@@ -440,9 +440,6 @@

" save_steps = 10, # Interval for saving checkpoints\n",

" log_completions = True,\n",

"\n",

- " # Memory optimization\n",

- " gradient_checkpointing = True, # Enable activation recomputation to save memory\n",

- "\n",

" # Hub integration\n",

" push_to_hub = True, # Set True to automatically push model to Hugging Face Hub\n",

")"

diff --git a/examples/scripts/dpo_vlm.py b/examples/scripts/dpo_vlm.py

index 3c5909503ef..a515201960b 100644

--- a/examples/scripts/dpo_vlm.py

+++ b/examples/scripts/dpo_vlm.py

@@ -104,14 +104,7 @@

**model_kwargs,

)

peft_config = get_peft_config(model_args)

- if peft_config is None:

- ref_model = AutoModelForImageTextToText.from_pretrained(

- model_args.model_name_or_path,

- trust_remote_code=model_args.trust_remote_code,

- **model_kwargs,

- )

- else:

- ref_model = None

+

processor = AutoProcessor.from_pretrained(

model_args.model_name_or_path, trust_remote_code=model_args.trust_remote_code, do_image_splitting=False

)

@@ -136,7 +129,6 @@

################

trainer = DPOTrainer(

model,

- ref_model,

args=training_args,

train_dataset=dataset[script_args.dataset_train_split],

eval_dataset=dataset[script_args.dataset_test_split] if training_args.eval_strategy != "no" else None,

diff --git a/examples/scripts/mpo_vlm.py b/examples/scripts/mpo_vlm.py

index 64ca7b6120c..ace5a347d7e 100644

--- a/examples/scripts/mpo_vlm.py

+++ b/examples/scripts/mpo_vlm.py

@@ -89,13 +89,6 @@

**model_kwargs,

)

peft_config = get_peft_config(model_args)

- if peft_config is None:

- ref_model = AutoModelForImageTextToText.from_pretrained(

- model_args.model_name_or_path,

- **model_kwargs,

- )

- else:

- ref_model = None

################

# Dataset

@@ -127,7 +120,6 @@ def ensure_rgb(example):

################

trainer = DPOTrainer(

model=model,

- ref_model=ref_model,

args=training_args,

train_dataset=train_dataset,

eval_dataset=test_dataset,

diff --git a/scripts/generate_harmony_dataset.py b/scripts/generate_harmony_dataset.py

index 88f6aac3d8b..670c586a4bb 100644

--- a/scripts/generate_harmony_dataset.py

+++ b/scripts/generate_harmony_dataset.py

@@ -95,7 +95,7 @@ def main(test_size, push_to_hub, repo_id):

language_modeling_dataset = language_modeling_dataset.train_test_split(test_size=test_size, shuffle=False)

if push_to_hub:

language_modeling_dataset.push_to_hub(repo_id, config_name="language_modeling")

- language_modeling_dataset.save_to_disk(repo_id + "/language_modeling")

+

prompt_completion_dataset = Dataset.from_dict({

"prompt": [

[{"role": "user", "content": "What is better than ugly?"}],

@@ -164,7 +164,96 @@ def main(test_size, push_to_hub, repo_id):

prompt_completion_dataset = prompt_completion_dataset.train_test_split(test_size=test_size, shuffle=False)

if push_to_hub:

prompt_completion_dataset.push_to_hub(repo_id, config_name="prompt_completion")

- prompt_completion_dataset.save_to_disk(repo_id + "/prompt_completion")

+

+ preference_dataset = Dataset.from_dict({

+ "prompt": [

+ [{"role": "user", "content": "What is better than ugly?"}],

+ [{"role": "user", "content": "What is better than implicit?"}],

+ [{"role": "user", "content": "What is better than complex?"}],

+ [{"role": "user", "content": "What is better than complicated?"}],

+ [{"role": "user", "content": "What is better than nested?"}],

+ [{"role": "user", "content": "What is better than dense?"}],

+ [{"role": "user", "content": "What counts?"}],

+ [{"role": "user", "content": "Are special cases enough to break the rules?"}],

+ [{"role": "user", "content": "What beats purity?"}],

+ [{"role": "user", "content": "What should never pass silently?"}],

+ [{"role": "user", "content": "When can errors pass silently?"}],

+ [{"role": "user", "content": "What should you do in the face of ambiguity?"}],

+ [{"role": "user", "content": "How many ways should there be to do it?"}],

+ [{"role": "user", "content": "For whom may the way not be obvious at first?"}],

+ [{"role": "user", "content": "What is better than never?"}],

+ [{"role": "user", "content": "Is never better than *right* now?"}],

+ [{"role": "user", "content": "What does it mean if the implementation is hard to explain?"}],

+ [{"role": "user", "content": "What does it mean if the implementation is easy to explain?"}],

+ [{"role": "user", "content": "Any great ideas?"}],

+ ],

+ "chosen": [

+ [{"role": "assistant", "thinking": "Beauty improves clarity and appeal.", "content": "Beautiful."}],

+ [{"role": "assistant", "thinking": "Clarity avoids confusion.", "content": "Explicit."}],

+ [{"role": "assistant", "thinking": "Simplicity is easier to manage.", "content": "Simple."}],

+ [{"role": "assistant", "thinking": "Complexity has structure; complication doesn’t.", "content": "Complex."}],

+ [{"role": "assistant", "thinking": "Flat structures are easier to read.", "content": "Flat."}],

+ [{"role": "assistant", "thinking": "Spacing aids understanding.", "content": "Sparse."}],

+ [{"role": "assistant", "thinking": "Readable code lasts longer.", "content": "Readability."}],

+ [{"role": "assistant", "thinking": "Consistency is more valuable.", "content": "No, special cases aren't special enough to break the rules."}],

+ [{"role": "assistant", "thinking": "Real-world needs outweigh ideals.", "content": "Practicality."}],

+ [{"role": "assistant", "thinking": "Silent errors cause hidden issues.", "content": "Errors."}],

+ [{"role": "assistant", "thinking": "Silence must be intentional.", "content": "When explicitly silenced."}],

+ [{"role": "assistant", "thinking": "Guessing leads to wrong choices.", "content": "Refuse the temptation to guess."}],

+ [{"role": "assistant", "thinking": "One way reduces confusion.", "content": "One, and preferably only one."}],

+ [{"role": "assistant", "thinking": "A playful nod to Python’s creator.", "content": "Dutch."}],

+ [{"role": "assistant", "thinking": "Action is better than endless delay.", "content": "Now is better than never."}],

+ [{"role": "assistant", "thinking": "Rushed action can be worse.", "content": "Yes, often."}],

+ [{"role": "assistant", "thinking": "Good ideas should be clear.", "content": "It means it's a bad idea."}],

+ [{"role": "assistant", "thinking": "Clarity suggests soundness.", "content": "It means it may be a good idea."}],

+ [{"role": "assistant", "thinking": "Namespaces prevent conflicts.", "content": "Namespaces are one honking great idea."}],

+ ],

+ "rejected": [

+ [{"role": "assistant", "thinking": "This comparison is nonsensical.", "content": "Better than the moon."}],

+ [{"role": "assistant", "thinking": "This dismisses the value of clarity.", "content": "Worse than nothing."}],

+ [{"role": "assistant", "thinking": "This mixes code style with leisure.", "content": "Better than a long vacation."}],

+ [{"role": "assistant", "thinking": "This overstates complexity as a universal solution.", "content": "Always the answer."}],

+ [{"role": "assistant", "thinking": "This swaps a structural concept for a random object.", "content": "Better than chocolate."}],

+ [{"role": "assistant", "thinking": "This ignores the need for context in sparse designs.", "content": "Without any context."}],

+ [{"role": "assistant", "thinking": "This implies readability is optional, which it is not.", "content": "Optional."}],

+ [{"role": "assistant", "thinking": "This exaggerates special cases into fantasy.", "content": "Enough to become unicorns."}],

+ [{"role": "assistant", "thinking": "This twists the original contrast between practicality and purity.", "content": "Beats reality."}],

+ [{"role": "assistant", "thinking": "This misapplies \"passing\" to a literal driving test.", "content": "Pass their driving test."}],

+ [{"role": "assistant", "thinking": "This suggests forgetting rather than intentional silence.", "content": "Forgotten."}],

+ [{"role": "assistant", "thinking": "This replaces careful judgment with a joke.", "content": "Refuse the opportunity to laugh."}],

+ [{"role": "assistant", "thinking": "This encourages multiple confusing approaches instead of one clear way.", "content": "Two or more confusing methods."}],

+ [{"role": "assistant", "thinking": "This turns a simple example into time-travel absurdity.", "content": "A time traveler."}],

+ [{"role": "assistant", "thinking": "This denies the value of timely action.", "content": "Never better."}],

+ [{"role": "assistant", "thinking": "This removes the sense of tradeoff and possibility.", "content": "Not even a possibility."}],

+ [{"role": "assistant", "thinking": "This inverts the meaning of explainability.", "content": "Clearly the best choice."}],

+ [{"role": "assistant", "thinking": "This treats clarity as something mystical rather than practical.", "content": "Probably magic."}],

+ [{"role": "assistant", "thinking": "This turns a design principle into a silly metaphor.", "content": "Watermelon -- let's plant some!"}],

+ ],

+ "chat_template_kwargs": [

+ {"reasoning_effort": "low", "model_identity": "You are Tiny ChatGPT, a tiny language model."},

+ {"reasoning_effort": "medium", "model_identity": "You are Tiny ChatGPT, a tiny language model."},

+ {"reasoning_effort": "high", "model_identity": "You are Tiny ChatGPT, a tiny language model."},

+ {"reasoning_effort": "low", "model_identity": "You are Tiny ChatGPT, a tiny language model."},

+ {"reasoning_effort": "medium", "model_identity": "You are Tiny ChatGPT, a tiny language model."},

+ {"reasoning_effort": "high", "model_identity": "You are Tiny ChatGPT, a tiny language model."},

+ {"reasoning_effort": "low", "model_identity": "You are Tiny ChatGPT, a tiny language model."},

+ {"reasoning_effort": "medium", "model_identity": "You are Tiny ChatGPT, a tiny language model."},

+ {"reasoning_effort": "high", "model_identity": "You are Tiny ChatGPT, a tiny language model."},

+ {"reasoning_effort": "low", "model_identity": "You are Tiny ChatGPT, a tiny language model."},

+ {"reasoning_effort": "medium", "model_identity": "You are Tiny ChatGPT, a tiny language model."},

+ {"reasoning_effort": "high", "model_identity": "You are Tiny ChatGPT, a tiny language model."},

+ {"reasoning_effort": "low", "model_identity": "You are Tiny ChatGPT, a tiny language model."},

+ {"reasoning_effort": "medium", "model_identity": "You are Tiny ChatGPT, a tiny language model."},

+ {"reasoning_effort": "high", "model_identity": "You are Tiny ChatGPT, a tiny language model."},

+ {"reasoning_effort": "low", "model_identity": "You are Tiny ChatGPT, a tiny language model."},