diff --git a/.circleci/config.yml b/.circleci/config.yml

index 856211e280cb..1bfe5d29f7f0 100644

--- a/.circleci/config.yml

+++ b/.circleci/config.yml

@@ -587,6 +587,7 @@ jobs:

- run: pip install --upgrade pip

- run: pip install .[sklearn,torch,sentencepiece,testing,torch-speech]

- run: pip install -r examples/pytorch/_tests_requirements.txt

+ - run: pip install git+https://github.com/huggingface/accelerate

- save_cache:

key: v0.4-torch_examples-{{ checksum "setup.py" }}

paths:

diff --git a/README.md b/README.md

index 024e45220129..f37b11088022 100644

--- a/README.md

+++ b/README.md

@@ -98,7 +98,7 @@ In Audio:

## If you are looking for custom support from the Hugging Face team

-  +

+

## Quick tour

@@ -303,6 +303,7 @@ Min, Patrick Lewis, Ledell Wu, Sergey Edunov, Danqi Chen, and Wen-tau Yih.

1. **[REALM](https://huggingface.co/docs/transformers/model_doc/realm.html)** (from Google Research) released with the paper [REALM: Retrieval-Augmented Language Model Pre-Training](https://arxiv.org/abs/2002.08909) by Kelvin Guu, Kenton Lee, Zora Tung, Panupong Pasupat and Ming-Wei Chang.

1. **[Reformer](https://huggingface.co/docs/transformers/model_doc/reformer)** (from Google Research) released with the paper [Reformer: The Efficient Transformer](https://arxiv.org/abs/2001.04451) by Nikita Kitaev, Łukasz Kaiser, Anselm Levskaya.

1. **[RemBERT](https://huggingface.co/docs/transformers/model_doc/rembert)** (from Google Research) released with the paper [Rethinking embedding coupling in pre-trained language models](https://arxiv.org/abs/2010.12821) by Hyung Won Chung, Thibault Févry, Henry Tsai, M. Johnson, Sebastian Ruder.

+1. **[RegNet](https://huggingface.co/docs/transformers/main/model_doc/regnet)** (from META Platforms) released with the paper [Designing Network Design Space](https://arxiv.org/abs/2003.13678) by Ilija Radosavovic, Raj Prateek Kosaraju, Ross Girshick, Kaiming He, Piotr Dollár.

1. **[ResNet](https://huggingface.co/docs/transformers/main/model_doc/resnet)** (from Microsoft Research) released with the paper [Deep Residual Learning for Image Recognition](https://arxiv.org/abs/1512.03385) by Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun.

1. **[RoBERTa](https://huggingface.co/docs/transformers/model_doc/roberta)** (from Facebook), released together with the paper [RoBERTa: A Robustly Optimized BERT Pretraining Approach](https://arxiv.org/abs/1907.11692) by Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, Veselin Stoyanov.

1. **[RoFormer](https://huggingface.co/docs/transformers/model_doc/roformer)** (from ZhuiyiTechnology), released together with the paper [RoFormer: Enhanced Transformer with Rotary Position Embedding](https://arxiv.org/abs/2104.09864) by Jianlin Su and Yu Lu and Shengfeng Pan and Bo Wen and Yunfeng Liu.

@@ -317,6 +318,7 @@ Min, Patrick Lewis, Ledell Wu, Sergey Edunov, Danqi Chen, and Wen-tau Yih.

1. **[T5](https://huggingface.co/docs/transformers/model_doc/t5)** (from Google AI) released with the paper [Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer](https://arxiv.org/abs/1910.10683) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[T5v1.1](https://huggingface.co/docs/transformers/model_doc/t5v1.1)** (from Google AI) released in the repository [google-research/text-to-text-transfer-transformer](https://github.com/google-research/text-to-text-transfer-transformer/blob/main/released_checkpoints.md#t511) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[TAPAS](https://huggingface.co/docs/transformers/model_doc/tapas)** (from Google AI) released with the paper [TAPAS: Weakly Supervised Table Parsing via Pre-training](https://arxiv.org/abs/2004.02349) by Jonathan Herzig, Paweł Krzysztof Nowak, Thomas Müller, Francesco Piccinno and Julian Martin Eisenschlos.

+1. **[TAPEX](https://huggingface.co/docs/transformers/main/model_doc/tapex)** (from Microsoft Research) released with the paper [TAPEX: Table Pre-training via Learning a Neural SQL Executor](https://arxiv.org/abs/2107.07653) by Qian Liu, Bei Chen, Jiaqi Guo, Morteza Ziyadi, Zeqi Lin, Weizhu Chen, Jian-Guang Lou.

1. **[Transformer-XL](https://huggingface.co/docs/transformers/model_doc/transfo-xl)** (from Google/CMU) released with the paper [Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context](https://arxiv.org/abs/1901.02860) by Zihang Dai*, Zhilin Yang*, Yiming Yang, Jaime Carbonell, Quoc V. Le, Ruslan Salakhutdinov.

1. **[TrOCR](https://huggingface.co/docs/transformers/model_doc/trocr)** (from Microsoft), released together with the paper [TrOCR: Transformer-based Optical Character Recognition with Pre-trained Models](https://arxiv.org/abs/2109.10282) by Minghao Li, Tengchao Lv, Lei Cui, Yijuan Lu, Dinei Florencio, Cha Zhang, Zhoujun Li, Furu Wei.

1. **[UniSpeech](https://huggingface.co/docs/transformers/model_doc/unispeech)** (from Microsoft Research) released with the paper [UniSpeech: Unified Speech Representation Learning with Labeled and Unlabeled Data](https://arxiv.org/abs/2101.07597) by Chengyi Wang, Yu Wu, Yao Qian, Kenichi Kumatani, Shujie Liu, Furu Wei, Michael Zeng, Xuedong Huang.

diff --git a/README_ko.md b/README_ko.md

index 5d813b0cc76d..71aa11474401 100644

--- a/README_ko.md

+++ b/README_ko.md

@@ -281,6 +281,7 @@ Flax, PyTorch, TensorFlow 설치 페이지에서 이들을 conda로 설치하는

1. **[QDQBert](https://huggingface.co/docs/transformers/model_doc/qdqbert)** (from NVIDIA) released with the paper [Integer Quantization for Deep Learning Inference: Principles and Empirical Evaluation](https://arxiv.org/abs/2004.09602) by Hao Wu, Patrick Judd, Xiaojie Zhang, Mikhail Isaev and Paulius Micikevicius.

1. **[REALM](https://huggingface.co/docs/transformers/model_doc/realm.html)** (from Google Research) released with the paper [REALM: Retrieval-Augmented Language Model Pre-Training](https://arxiv.org/abs/2002.08909) by Kelvin Guu, Kenton Lee, Zora Tung, Panupong Pasupat and Ming-Wei Chang.

1. **[Reformer](https://huggingface.co/docs/transformers/model_doc/reformer)** (from Google Research) released with the paper [Reformer: The Efficient Transformer](https://arxiv.org/abs/2001.04451) by Nikita Kitaev, Łukasz Kaiser, Anselm Levskaya.

+1. **[RegNet](https://huggingface.co/docs/transformers/main/model_doc/regnet)** (from META Research) released with the paper [Designing Network Design Space](https://arxiv.org/abs/2003.13678) by Ilija Radosavovic, Raj Prateek Kosaraju, Ross Girshick, Kaiming He, Piotr Dollár.

1. **[RemBERT](https://huggingface.co/docs/transformers/model_doc/rembert)** (from Google Research) released with the paper [Rethinking embedding coupling in pre-trained language models](https://arxiv.org/pdf/2010.12821.pdf) by Hyung Won Chung, Thibault Févry, Henry Tsai, M. Johnson, Sebastian Ruder.

1. **[ResNet](https://huggingface.co/docs/transformers/main/model_doc/resnet)** (from Microsoft Research) released with the paper [Deep Residual Learning for Image Recognition](https://arxiv.org/abs/1512.03385) by Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun.

1. **[RoBERTa](https://huggingface.co/docs/transformers/model_doc/roberta)** (from Facebook), released together with the paper a [Robustly Optimized BERT Pretraining Approach](https://arxiv.org/abs/1907.11692) by Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, Veselin Stoyanov.

@@ -296,6 +297,7 @@ Flax, PyTorch, TensorFlow 설치 페이지에서 이들을 conda로 설치하는

1. **[T5](https://huggingface.co/docs/transformers/model_doc/t5)** (from Google AI) released with the paper [Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer](https://arxiv.org/abs/1910.10683) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[T5v1.1](https://huggingface.co/docs/transformers/model_doc/t5v1.1)** (from Google AI) released in the repository [google-research/text-to-text-transfer-transformer](https://github.com/google-research/text-to-text-transfer-transformer/blob/main/released_checkpoints.md#t511) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[TAPAS](https://huggingface.co/docs/transformers/model_doc/tapas)** (from Google AI) released with the paper [TAPAS: Weakly Supervised Table Parsing via Pre-training](https://arxiv.org/abs/2004.02349) by Jonathan Herzig, Paweł Krzysztof Nowak, Thomas Müller, Francesco Piccinno and Julian Martin Eisenschlos.

+1. **[TAPEX](https://huggingface.co/docs/transformers/main/model_doc/tapex)** (from Microsoft Research) released with the paper [TAPEX: Table Pre-training via Learning a Neural SQL Executor](https://arxiv.org/abs/2107.07653) by Qian Liu, Bei Chen, Jiaqi Guo, Morteza Ziyadi, Zeqi Lin, Weizhu Chen, Jian-Guang Lou.

1. **[Transformer-XL](https://huggingface.co/docs/transformers/model_doc/transfo-xl)** (from Google/CMU) released with the paper [Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context](https://arxiv.org/abs/1901.02860) by Zihang Dai*, Zhilin Yang*, Yiming Yang, Jaime Carbonell, Quoc V. Le, Ruslan Salakhutdinov.

1. **[TrOCR](https://huggingface.co/docs/transformers/model_doc/trocr)** (from Microsoft), released together with the paper [TrOCR: Transformer-based Optical Character Recognition with Pre-trained Models](https://arxiv.org/abs/2109.10282) by Minghao Li, Tengchao Lv, Lei Cui, Yijuan Lu, Dinei Florencio, Cha Zhang, Zhoujun Li, Furu Wei.

1. **[UniSpeech](https://huggingface.co/docs/transformers/model_doc/unispeech)** (from Microsoft Research) released with the paper [UniSpeech: Unified Speech Representation Learning with Labeled and Unlabeled Data](https://arxiv.org/abs/2101.07597) by Chengyi Wang, Yu Wu, Yao Qian, Kenichi Kumatani, Shujie Liu, Furu Wei, Michael Zeng, Xuedong Huang.

diff --git a/README_zh-hans.md b/README_zh-hans.md

index 5570335d49f9..efbe0e4547c7 100644

--- a/README_zh-hans.md

+++ b/README_zh-hans.md

@@ -305,6 +305,7 @@ conda install -c huggingface transformers

1. **[QDQBert](https://huggingface.co/docs/transformers/model_doc/qdqbert)** (来自 NVIDIA) 伴随论文 [Integer Quantization for Deep Learning Inference: Principles and Empirical Evaluation](https://arxiv.org/abs/2004.09602) 由 Hao Wu, Patrick Judd, Xiaojie Zhang, Mikhail Isaev and Paulius Micikevicius 发布。

1. **[REALM](https://huggingface.co/docs/transformers/model_doc/realm.html)** (来自 Google Research) 伴随论文 [REALM: Retrieval-Augmented Language Model Pre-Training](https://arxiv.org/abs/2002.08909) 由 Kelvin Guu, Kenton Lee, Zora Tung, Panupong Pasupat and Ming-Wei Chang 发布。

1. **[Reformer](https://huggingface.co/docs/transformers/model_doc/reformer)** (来自 Google Research) 伴随论文 [Reformer: The Efficient Transformer](https://arxiv.org/abs/2001.04451) 由 Nikita Kitaev, Łukasz Kaiser, Anselm Levskaya 发布。

+1. **[RegNet](https://huggingface.co/docs/transformers/main/model_doc/regnet)** (from META Research) released with the paper [Designing Network Design Space](https://arxiv.org/abs/2003.13678) by Ilija Radosavovic, Raj Prateek Kosaraju, Ross Girshick, Kaiming He, Piotr Dollár.

1. **[RemBERT](https://huggingface.co/docs/transformers/model_doc/rembert)** (来自 Google Research) 伴随论文 [Rethinking embedding coupling in pre-trained language models](https://arxiv.org/pdf/2010.12821.pdf) 由 Hyung Won Chung, Thibault Févry, Henry Tsai, M. Johnson, Sebastian Ruder 发布。

1. **[ResNet](https://huggingface.co/docs/transformers/main/model_doc/resnet)** (from Microsoft Research) released with the paper [Deep Residual Learning for Image Recognition](https://arxiv.org/abs/1512.03385) by Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun.

1. **[RoBERTa](https://huggingface.co/docs/transformers/model_doc/roberta)** (来自 Facebook), 伴随论文 [Robustly Optimized BERT Pretraining Approach](https://arxiv.org/abs/1907.11692) 由 Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, Veselin Stoyanov 发布。

@@ -320,6 +321,7 @@ conda install -c huggingface transformers

1. **[T5](https://huggingface.co/docs/transformers/model_doc/t5)** (来自 Google AI) 伴随论文 [Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer](https://arxiv.org/abs/1910.10683) 由 Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu 发布。

1. **[T5v1.1](https://huggingface.co/docs/transformers/model_doc/t5v1.1)** (来自 Google AI) 伴随论文 [google-research/text-to-text-transfer-transformer](https://github.com/google-research/text-to-text-transfer-transformer/blob/main/released_checkpoints.md#t511) 由 Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu 发布。

1. **[TAPAS](https://huggingface.co/docs/transformers/model_doc/tapas)** (来自 Google AI) 伴随论文 [TAPAS: Weakly Supervised Table Parsing via Pre-training](https://arxiv.org/abs/2004.02349) 由 Jonathan Herzig, Paweł Krzysztof Nowak, Thomas Müller, Francesco Piccinno and Julian Martin Eisenschlos 发布。

+1. **[TAPEX](https://huggingface.co/docs/transformers/main/model_doc/tapex)** (来自 Microsoft Research) 伴随论文 [TAPEX: Table Pre-training via Learning a Neural SQL Executor](https://arxiv.org/abs/2107.07653) 由 Qian Liu, Bei Chen, Jiaqi Guo, Morteza Ziyadi, Zeqi Lin, Weizhu Chen, Jian-Guang Lou 发布。

1. **[Transformer-XL](https://huggingface.co/docs/transformers/model_doc/transfo-xl)** (来自 Google/CMU) 伴随论文 [Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context](https://arxiv.org/abs/1901.02860) 由 Zihang Dai*, Zhilin Yang*, Yiming Yang, Jaime Carbonell, Quoc V. Le, Ruslan Salakhutdinov 发布。

1. **[TrOCR](https://huggingface.co/docs/transformers/model_doc/trocr)** (来自 Microsoft) 伴随论文 [TrOCR: Transformer-based Optical Character Recognition with Pre-trained Models](https://arxiv.org/abs/2109.10282) 由 Minghao Li, Tengchao Lv, Lei Cui, Yijuan Lu, Dinei Florencio, Cha Zhang, Zhoujun Li, Furu Wei 发布。

1. **[UniSpeech](https://huggingface.co/docs/transformers/model_doc/unispeech)** (来自 Microsoft Research) 伴随论文 [UniSpeech: Unified Speech Representation Learning with Labeled and Unlabeled Data](https://arxiv.org/abs/2101.07597) 由 Chengyi Wang, Yu Wu, Yao Qian, Kenichi Kumatani, Shujie Liu, Furu Wei, Michael Zeng, Xuedong Huang 发布。

diff --git a/README_zh-hant.md b/README_zh-hant.md

index 88ba012d5a89..c9396e45faa6 100644

--- a/README_zh-hant.md

+++ b/README_zh-hant.md

@@ -317,6 +317,7 @@ conda install -c huggingface transformers

1. **[QDQBert](https://huggingface.co/docs/transformers/model_doc/qdqbert)** (from NVIDIA) released with the paper [Integer Quantization for Deep Learning Inference: Principles and Empirical Evaluation](https://arxiv.org/abs/2004.09602) by Hao Wu, Patrick Judd, Xiaojie Zhang, Mikhail Isaev and Paulius Micikevicius.

1. **[REALM](https://huggingface.co/docs/transformers/model_doc/realm.html)** (from Google Research) released with the paper [REALM: Retrieval-Augmented Language Model Pre-Training](https://arxiv.org/abs/2002.08909) by Kelvin Guu, Kenton Lee, Zora Tung, Panupong Pasupat and Ming-Wei Chang.

1. **[Reformer](https://huggingface.co/docs/transformers/model_doc/reformer)** (from Google Research) released with the paper [Reformer: The Efficient Transformer](https://arxiv.org/abs/2001.04451) by Nikita Kitaev, Łukasz Kaiser, Anselm Levskaya.

+1. **[RegNet](https://huggingface.co/docs/transformers/main/model_doc/regnet)** (from META Research) released with the paper [Designing Network Design Space](https://arxiv.org/abs/2003.13678) by Ilija Radosavovic, Raj Prateek Kosaraju, Ross Girshick, Kaiming He, Piotr Dollár.

1. **[RemBERT](https://huggingface.co/docs/transformers/model_doc/rembert)** (from Google Research) released with the paper [Rethinking embedding coupling in pre-trained language models](https://arxiv.org/pdf/2010.12821.pdf) by Hyung Won Chung, Thibault Févry, Henry Tsai, M. Johnson, Sebastian Ruder.

1. **[ResNet](https://huggingface.co/docs/transformers/main/model_doc/resnet)** (from Microsoft Research) released with the paper [Deep Residual Learning for Image Recognition](https://arxiv.org/abs/1512.03385) by Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun.

1. **[RoBERTa](https://huggingface.co/docs/transformers/model_doc/roberta)** (from Facebook), released together with the paper a [Robustly Optimized BERT Pretraining Approach](https://arxiv.org/abs/1907.11692) by Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, Veselin Stoyanov.

@@ -332,6 +333,7 @@ conda install -c huggingface transformers

1. **[T5](https://huggingface.co/docs/transformers/model_doc/t5)** (from Google AI) released with the paper [Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer](https://arxiv.org/abs/1910.10683) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[T5v1.1](https://huggingface.co/docs/transformers/model_doc/t5v1.1)** (from Google AI) released with the paper [google-research/text-to-text-transfer-transformer](https://github.com/google-research/text-to-text-transfer-transformer/blob/main/released_checkpoints.md#t511) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[TAPAS](https://huggingface.co/docs/transformers/model_doc/tapas)** (from Google AI) released with the paper [TAPAS: Weakly Supervised Table Parsing via Pre-training](https://arxiv.org/abs/2004.02349) by Jonathan Herzig, Paweł Krzysztof Nowak, Thomas Müller, Francesco Piccinno and Julian Martin Eisenschlos.

+1. **[TAPEX](https://huggingface.co/docs/transformers/main/model_doc/tapex)** (from Microsoft Research) released with the paper [TAPEX: Table Pre-training via Learning a Neural SQL Executor](https://arxiv.org/abs/2107.07653) by Qian Liu, Bei Chen, Jiaqi Guo, Morteza Ziyadi, Zeqi Lin, Weizhu Chen, Jian-Guang Lou.

1. **[Transformer-XL](https://huggingface.co/docs/transformers/model_doc/transfo-xl)** (from Google/CMU) released with the paper [Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context](https://arxiv.org/abs/1901.02860) by Zihang Dai*, Zhilin Yang*, Yiming Yang, Jaime Carbonell, Quoc V. Le, Ruslan Salakhutdinov.

1. **[TrOCR](https://huggingface.co/docs/transformers/model_doc/trocr)** (from Microsoft) released with the paper [TrOCR: Transformer-based Optical Character Recognition with Pre-trained Models](https://arxiv.org/abs/2109.10282) by Minghao Li, Tengchao Lv, Lei Cui, Yijuan Lu, Dinei Florencio, Cha Zhang, Zhoujun Li, Furu Wei.

1. **[UniSpeech](https://huggingface.co/docs/transformers/model_doc/unispeech)** (from Microsoft Research) released with the paper [UniSpeech: Unified Speech Representation Learning with Labeled and Unlabeled Data](https://arxiv.org/abs/2101.07597) by Chengyi Wang, Yu Wu, Yao Qian, Kenichi Kumatani, Shujie Liu, Furu Wei, Michael Zeng, Xuedong Huang.

diff --git a/docs/source/en/_toctree.yml b/docs/source/en/_toctree.yml

index 69717477e1f2..c32004ff5214 100644

--- a/docs/source/en/_toctree.yml

+++ b/docs/source/en/_toctree.yml

@@ -296,6 +296,8 @@

title: Reformer

- local: model_doc/rembert

title: RemBERT

+ - local: model_doc/regnet

+ title: RegNet

- local: model_doc/resnet

title: ResNet

- local: model_doc/retribert

@@ -328,6 +330,8 @@

title: T5v1.1

- local: model_doc/tapas

title: TAPAS

+ - local: model_doc/tapex

+ title: TAPEX

- local: model_doc/transfo-xl

title: Transformer XL

- local: model_doc/trocr

diff --git a/docs/source/en/index.mdx b/docs/source/en/index.mdx

index 281add6e5ef4..2071e41e672f 100644

--- a/docs/source/en/index.mdx

+++ b/docs/source/en/index.mdx

@@ -124,6 +124,7 @@ The library currently contains JAX, PyTorch and TensorFlow implementations, pret

1. **[REALM](model_doc/realm.html)** (from Google Research) released with the paper [REALM: Retrieval-Augmented Language Model Pre-Training](https://arxiv.org/abs/2002.08909) by Kelvin Guu, Kenton Lee, Zora Tung, Panupong Pasupat and Ming-Wei Chang.

1. **[Reformer](model_doc/reformer)** (from Google Research) released with the paper [Reformer: The Efficient Transformer](https://arxiv.org/abs/2001.04451) by Nikita Kitaev, Łukasz Kaiser, Anselm Levskaya.

1. **[RemBERT](model_doc/rembert)** (from Google Research) released with the paper [Rethinking embedding coupling in pre-trained language models](https://arxiv.org/abs/2010.12821) by Hyung Won Chung, Thibault Févry, Henry Tsai, M. Johnson, Sebastian Ruder.

+1. **[RegNet](model_doc/regnet)** (from META Platforms) released with the paper [Designing Network Design Space](https://arxiv.org/abs/2003.13678) by Ilija Radosavovic, Raj Prateek Kosaraju, Ross Girshick, Kaiming He, Piotr Dollár.

1. **[ResNet](model_doc/resnet)** (from Microsoft Research) released with the paper [Deep Residual Learning for Image Recognition](https://arxiv.org/abs/1512.03385) by Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun.

1. **[RoBERTa](model_doc/roberta)** (from Facebook), released together with the paper [RoBERTa: A Robustly Optimized BERT Pretraining Approach](https://arxiv.org/abs/1907.11692) by Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, Veselin Stoyanov.

1. **[RoFormer](model_doc/roformer)** (from ZhuiyiTechnology), released together with the paper [RoFormer: Enhanced Transformer with Rotary Position Embedding](https://arxiv.org/abs/2104.09864) by Jianlin Su and Yu Lu and Shengfeng Pan and Bo Wen and Yunfeng Liu.

@@ -138,6 +139,7 @@ The library currently contains JAX, PyTorch and TensorFlow implementations, pret

1. **[T5](model_doc/t5)** (from Google AI) released with the paper [Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer](https://arxiv.org/abs/1910.10683) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[T5v1.1](model_doc/t5v1.1)** (from Google AI) released in the repository [google-research/text-to-text-transfer-transformer](https://github.com/google-research/text-to-text-transfer-transformer/blob/main/released_checkpoints.md#t511) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[TAPAS](model_doc/tapas)** (from Google AI) released with the paper [TAPAS: Weakly Supervised Table Parsing via Pre-training](https://arxiv.org/abs/2004.02349) by Jonathan Herzig, Paweł Krzysztof Nowak, Thomas Müller, Francesco Piccinno and Julian Martin Eisenschlos.

+1. **[TAPEX](model_doc/tapex)** (from Microsoft Research) released with the paper [TAPEX: Table Pre-training via Learning a Neural SQL Executor](https://arxiv.org/abs/2107.07653) by Qian Liu, Bei Chen, Jiaqi Guo, Morteza Ziyadi, Zeqi Lin, Weizhu Chen, Jian-Guang Lou.

1. **[Transformer-XL](model_doc/transfo-xl)** (from Google/CMU) released with the paper [Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context](https://arxiv.org/abs/1901.02860) by Zihang Dai*, Zhilin Yang*, Yiming Yang, Jaime Carbonell, Quoc V. Le, Ruslan Salakhutdinov.

1. **[TrOCR](model_doc/trocr)** (from Microsoft), released together with the paper [TrOCR: Transformer-based Optical Character Recognition with Pre-trained Models](https://arxiv.org/abs/2109.10282) by Minghao Li, Tengchao Lv, Lei Cui, Yijuan Lu, Dinei Florencio, Cha Zhang, Zhoujun Li, Furu Wei.

1. **[UniSpeech](model_doc/unispeech)** (from Microsoft Research) released with the paper [UniSpeech: Unified Speech Representation Learning with Labeled and Unlabeled Data](https://arxiv.org/abs/2101.07597) by Chengyi Wang, Yu Wu, Yao Qian, Kenichi Kumatani, Shujie Liu, Furu Wei, Michael Zeng, Xuedong Huang.

@@ -234,6 +236,7 @@ Flax), PyTorch, and/or TensorFlow.

| RAG | ✅ | ❌ | ✅ | ✅ | ❌ |

| Realm | ✅ | ✅ | ✅ | ❌ | ❌ |

| Reformer | ✅ | ✅ | ✅ | ❌ | ❌ |

+| RegNet | ❌ | ❌ | ✅ | ❌ | ❌ |

| RemBERT | ✅ | ✅ | ✅ | ✅ | ❌ |

| ResNet | ❌ | ❌ | ✅ | ❌ | ❌ |

| RetriBERT | ✅ | ✅ | ✅ | ❌ | ❌ |

@@ -250,6 +253,7 @@ Flax), PyTorch, and/or TensorFlow.

| Swin | ❌ | ❌ | ✅ | ❌ | ❌ |

| T5 | ✅ | ✅ | ✅ | ✅ | ✅ |

| TAPAS | ✅ | ❌ | ✅ | ✅ | ❌ |

+| TAPEX | ✅ | ✅ | ✅ | ✅ | ✅ |

| Transformer-XL | ✅ | ❌ | ✅ | ✅ | ❌ |

| TrOCR | ❌ | ❌ | ✅ | ❌ | ❌ |

| UniSpeech | ❌ | ❌ | ✅ | ❌ | ❌ |

diff --git a/docs/source/en/internal/generation_utils.mdx b/docs/source/en/internal/generation_utils.mdx

index c8b42d91848e..5a717edb98fb 100644

--- a/docs/source/en/internal/generation_utils.mdx

+++ b/docs/source/en/internal/generation_utils.mdx

@@ -178,6 +178,12 @@ generation.

[[autodoc]] TFRepetitionPenaltyLogitsProcessor

- __call__

+[[autodoc]] TFForcedBOSTokenLogitsProcessor

+ - __call__

+

+[[autodoc]] TFForcedEOSTokenLogitsProcessor

+ - __call__

+

[[autodoc]] FlaxLogitsProcessor

- __call__

diff --git a/docs/source/en/model_doc/regnet.mdx b/docs/source/en/model_doc/regnet.mdx

new file mode 100644

index 000000000000..666a9ee39675

--- /dev/null

+++ b/docs/source/en/model_doc/regnet.mdx

@@ -0,0 +1,48 @@

+

+

+# RegNet

+

+## Overview

+

+The RegNet model was proposed in [Designing Network Design Spaces](https://arxiv.org/abs/2003.13678) by Ilija Radosavovic, Raj Prateek Kosaraju, Ross Girshick, Kaiming He, Piotr Dollár.

+

+The authors design search spaces to perform Neural Architecture Search (NAS). They first start from a high dimensional search space and iteratively reduce the search space by empirically applying constraints based on the best-performing models sampled by the current search space.

+

+The abstract from the paper is the following:

+

+*In this work, we present a new network design paradigm. Our goal is to help advance the understanding of network design and discover design principles that generalize across settings. Instead of focusing on designing individual network instances, we design network design spaces that parametrize populations of networks. The overall process is analogous to classic manual design of networks, but elevated to the design space level. Using our methodology we explore the structure aspect of network design and arrive at a low-dimensional design space consisting of simple, regular networks that we call RegNet. The core insight of the RegNet parametrization is surprisingly simple: widths and depths of good networks can be explained by a quantized linear function. We analyze the RegNet design space and arrive at interesting findings that do not match the current practice of network design. The RegNet design space provides simple and fast networks that work well across a wide range of flop regimes. Under comparable training settings and flops, the RegNet models outperform the popular EfficientNet models while being up to 5x faster on GPUs.*

+

+Tips:

+

+- One can use [`AutoFeatureExtractor`] to prepare images for the model.

+- The huge 10B model from [Self-supervised Pretraining of Visual Features in the Wild](https://arxiv.org/abs/2103.01988), trained on one billion Instagram images, is available on the [hub](https://huggingface.co/facebook/regnet-y-10b-seer)

+

+This model was contributed by [Francesco](https://huggingface.co/Francesco).

+The original code can be found [here](https://github.com/facebookresearch/pycls).

+

+

+## RegNetConfig

+

+[[autodoc]] RegNetConfig

+

+

+## RegNetModel

+

+[[autodoc]] RegNetModel

+ - forward

+

+

+## RegNetForImageClassification

+

+[[autodoc]] RegNetForImageClassification

+ - forward

\ No newline at end of file

diff --git a/docs/source/en/model_doc/speech_to_text.mdx b/docs/source/en/model_doc/speech_to_text.mdx

index 2e86c497c057..0a3b00b1d5dd 100644

--- a/docs/source/en/model_doc/speech_to_text.mdx

+++ b/docs/source/en/model_doc/speech_to_text.mdx

@@ -47,25 +47,19 @@ be installed as follows: `apt install libsndfile1-dev`

>>> import torch

>>> from transformers import Speech2TextProcessor, Speech2TextForConditionalGeneration

>>> from datasets import load_dataset

->>> import soundfile as sf

>>> model = Speech2TextForConditionalGeneration.from_pretrained("facebook/s2t-small-librispeech-asr")

>>> processor = Speech2TextProcessor.from_pretrained("facebook/s2t-small-librispeech-asr")

->>> def map_to_array(batch):

-... speech, _ = sf.read(batch["file"])

-... batch["speech"] = speech

-... return batch

+>>> ds = load_dataset("hf-internal-testing/librispeech_asr_demo", "clean", split="validation")

-

->>> ds = load_dataset("hf-internal-testing/librispeech_asr_dummy", "clean", split="validation")

->>> ds = ds.map(map_to_array)

-

->>> inputs = processor(ds["speech"][0], sampling_rate=16_000, return_tensors="pt")

->>> generated_ids = model.generate(input_ids=inputs["input_features"], attention_mask=inputs["attention_mask"])

+>>> inputs = processor(ds[0]["audio"]["array"], sampling_rate=ds[0]["audio"]["sampling_rate"], return_tensors="pt")

+>>> generated_ids = model.generate(inputs["input_features"], attention_mask=inputs["attention_mask"])

>>> transcription = processor.batch_decode(generated_ids)

+>>> transcription

+['mister quilter is the apostle of the middle classes and we are glad to welcome his gospel']

```

- Multilingual speech translation

@@ -80,29 +74,22 @@ be installed as follows: `apt install libsndfile1-dev`

>>> import torch

>>> from transformers import Speech2TextProcessor, Speech2TextForConditionalGeneration

>>> from datasets import load_dataset

->>> import soundfile as sf

>>> model = Speech2TextForConditionalGeneration.from_pretrained("facebook/s2t-medium-mustc-multilingual-st")

>>> processor = Speech2TextProcessor.from_pretrained("facebook/s2t-medium-mustc-multilingual-st")

+>>> ds = load_dataset("hf-internal-testing/librispeech_asr_demo", "clean", split="validation")

->>> def map_to_array(batch):

-... speech, _ = sf.read(batch["file"])

-... batch["speech"] = speech

-... return batch

-

-

->>> ds = load_dataset("hf-internal-testing/librispeech_asr_dummy", "clean", split="validation")

->>> ds = ds.map(map_to_array)

-

->>> inputs = processor(ds["speech"][0], sampling_rate=16_000, return_tensors="pt")

+>>> inputs = processor(ds[0]["audio"]["array"], sampling_rate=ds[0]["audio"]["sampling_rate"], return_tensors="pt")

>>> generated_ids = model.generate(

-... input_ids=inputs["input_features"],

+... inputs["input_features"],

... attention_mask=inputs["attention_mask"],

... forced_bos_token_id=processor.tokenizer.lang_code_to_id["fr"],

... )

>>> translation = processor.batch_decode(generated_ids)

+>>> translation

+[" (Vidéo) Si M. Kilder est l'apossible des classes moyennes, et nous sommes heureux d'être accueillis dans son évangile."]

```

See the [model hub](https://huggingface.co/models?filter=speech_to_text) to look for Speech2Text checkpoints.

diff --git a/docs/source/en/model_doc/tapex.mdx b/docs/source/en/model_doc/tapex.mdx

new file mode 100644

index 000000000000..f6e65764e50d

--- /dev/null

+++ b/docs/source/en/model_doc/tapex.mdx

@@ -0,0 +1,130 @@

+

+

+# TAPEX

+

+## Overview

+

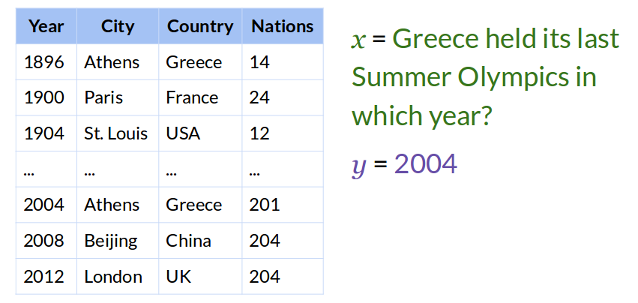

+The TAPEX model was proposed in [TAPEX: Table Pre-training via Learning a Neural SQL Executor](https://arxiv.org/abs/2107.07653) by Qian Liu,

+Bei Chen, Jiaqi Guo, Morteza Ziyadi, Zeqi Lin, Weizhu Chen, Jian-Guang Lou. TAPEX pre-trains a BART model to solve synthetic SQL queries, after

+which it can be fine-tuned to answer natural language questions related to tabular data, as well as performing table fact checking.

+

+TAPEX has been fine-tuned on several datasets:

+- [SQA](https://www.microsoft.com/en-us/download/details.aspx?id=54253) (Sequential Question Answering by Microsoft)

+- [WTQ](https://github.com/ppasupat/WikiTableQuestions) (Wiki Table Questions by Stanford University)

+- [WikiSQL](https://github.com/salesforce/WikiSQL) (by Salesforce)

+- [TabFact](https://tabfact.github.io/) (by USCB NLP Lab).

+

+The abstract from the paper is the following:

+

+*Recent progress in language model pre-training has achieved a great success via leveraging large-scale unstructured textual data. However, it is

+still a challenge to apply pre-training on structured tabular data due to the absence of large-scale high-quality tabular data. In this paper, we

+propose TAPEX to show that table pre-training can be achieved by learning a neural SQL executor over a synthetic corpus, which is obtained by automatically

+synthesizing executable SQL queries and their execution outputs. TAPEX addresses the data scarcity challenge via guiding the language model to mimic a SQL

+executor on the diverse, large-scale and high-quality synthetic corpus. We evaluate TAPEX on four benchmark datasets. Experimental results demonstrate that

+TAPEX outperforms previous table pre-training approaches by a large margin and achieves new state-of-the-art results on all of them. This includes improvements

+on the weakly-supervised WikiSQL denotation accuracy to 89.5% (+2.3%), the WikiTableQuestions denotation accuracy to 57.5% (+4.8%), the SQA denotation accuracy

+to 74.5% (+3.5%), and the TabFact accuracy to 84.2% (+3.2%). To our knowledge, this is the first work to exploit table pre-training via synthetic executable programs

+and to achieve new state-of-the-art results on various downstream tasks.*

+

+Tips:

+

+- TAPEX is a generative (seq2seq) model. One can directly plug in the weights of TAPEX into a BART model.

+- TAPEX has checkpoints on the hub that are either pre-trained only, or fine-tuned on WTQ, SQA, WikiSQL and TabFact.

+- Sentences + tables are presented to the model as `sentence + " " + linearized table`. The linearized table has the following format:

+ `col: col1 | col2 | col 3 row 1 : val1 | val2 | val3 row 2 : ...`.

+- TAPEX has its own tokenizer, that allows to prepare all data for the model easily. One can pass Pandas DataFrames and strings to the tokenizer,

+ and it will automatically create the `input_ids` and `attention_mask` (as shown in the usage examples below).

+

+## Usage: inference

+

+Below, we illustrate how to use TAPEX for table question answering. As one can see, one can directly plug in the weights of TAPEX into a BART model.

+We use the [Auto API](auto), which will automatically instantiate the appropriate tokenizer ([`TapexTokenizer`]) and model ([`BartForConditionalGeneration`]) for us,

+based on the configuration file of the checkpoint on the hub.

+

+```python

+>>> from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

+>>> import pandas as pd

+

+>>> tokenizer = AutoTokenizer.from_pretrained("microsoft/tapex-large-finetuned-wtq")

+>>> model = AutoModelForSeq2SeqLM.from_pretrained("microsoft/tapex-large-finetuned-wtq")

+

+>>> # prepare table + question

+>>> data = {"Actors": ["Brad Pitt", "Leonardo Di Caprio", "George Clooney"], "Number of movies": ["87", "53", "69"]}

+>>> table = pd.DataFrame.from_dict(data)

+>>> question = "how many movies does Leonardo Di Caprio have?"

+

+>>> encoding = tokenizer(table, question, return_tensors="pt")

+

+>>> # let the model generate an answer autoregressively

+>>> outputs = model.generate(**encoding)

+

+>>> # decode back to text

+>>> predicted_answer = tokenizer.batch_decode(outputs, skip_special_tokens=True)[0]

+>>> print(predicted_answer)

+53

+```

+

+Note that [`TapexTokenizer`] also supports batched inference. Hence, one can provide a batch of different tables/questions, or a batch of a single table

+and multiple questions, or a batch of a single query and multiple tables. Let's illustrate this:

+

+```python

+>>> # prepare table + question

+>>> data = {"Actors": ["Brad Pitt", "Leonardo Di Caprio", "George Clooney"], "Number of movies": ["87", "53", "69"]}

+>>> table = pd.DataFrame.from_dict(data)

+>>> questions = [

+... "how many movies does Leonardo Di Caprio have?",

+... "which actor has 69 movies?",

+... "what's the first name of the actor who has 87 movies?",

+... ]

+>>> encoding = tokenizer(table, questions, padding=True, return_tensors="pt")

+

+>>> # let the model generate an answer autoregressively

+>>> outputs = model.generate(**encoding)

+

+>>> # decode back to text

+>>> tokenizer.batch_decode(outputs, skip_special_tokens=True)

+[' 53', ' george clooney', ' brad pitt']

+```

+

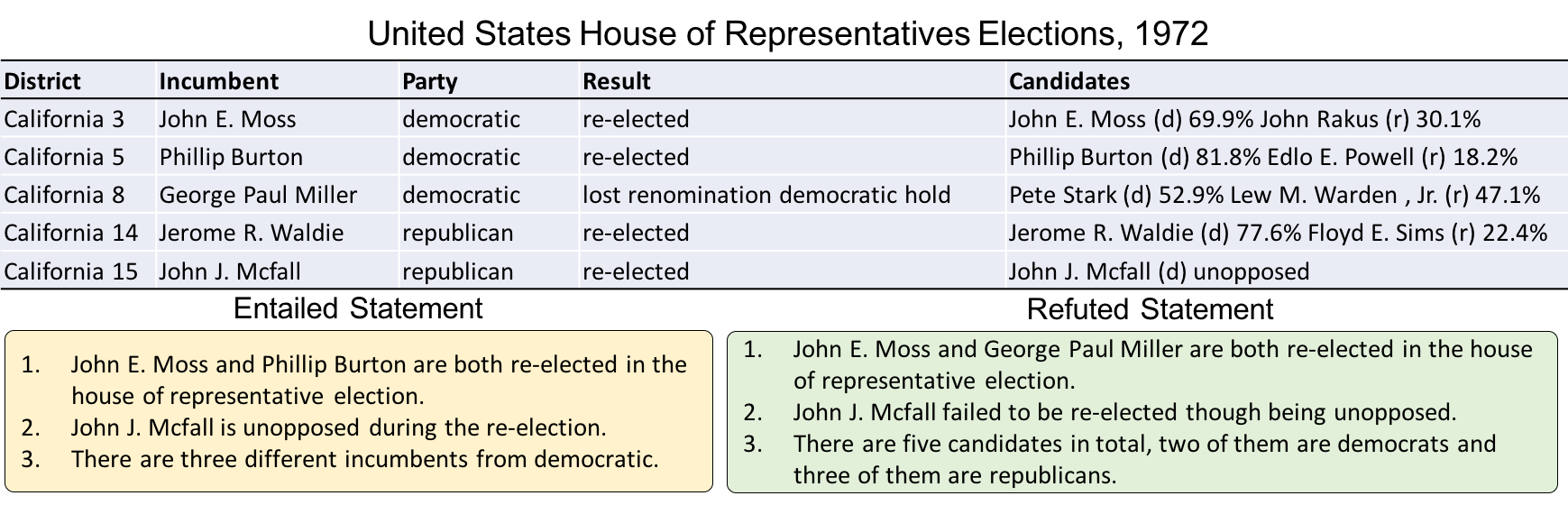

+In case one wants to do table verification (i.e. the task of determining whether a given sentence is supported or refuted by the contents

+of a table), one can instantiate a [`BartForSequenceClassification`] model. TAPEX has checkpoints on the hub fine-tuned on TabFact, an important

+benchmark for table fact checking (it achieves 84% accuracy). The code example below again leverages the [Auto API](auto).

+

+```python

+>>> from transformers import AutoTokenizer, AutoModelForSequenceClassification

+

+>>> tokenizer = AutoTokenizer.from_pretrained("microsoft/tapex-large-finetuned-tabfact")

+>>> model = AutoModelForSequenceClassification.from_pretrained("microsoft/tapex-large-finetuned-tabfact")

+

+>>> # prepare table + sentence

+>>> data = {"Actors": ["Brad Pitt", "Leonardo Di Caprio", "George Clooney"], "Number of movies": ["87", "53", "69"]}

+>>> table = pd.DataFrame.from_dict(data)

+>>> sentence = "George Clooney has 30 movies"

+

+>>> encoding = tokenizer(table, sentence, return_tensors="pt")

+

+>>> # forward pass

+>>> outputs = model(**encoding)

+

+>>> # print prediction

+>>> predicted_class_idx = outputs.logits[0].argmax(dim=0).item()

+>>> print(model.config.id2label[predicted_class_idx])

+Refused

+```

+

+

+## TapexTokenizer

+

+[[autodoc]] TapexTokenizer

+ - __call__

+ - save_vocabulary

\ No newline at end of file

diff --git a/docs/source/en/preprocessing.mdx b/docs/source/en/preprocessing.mdx

index 390acd72731d..947129b34aaf 100644

--- a/docs/source/en/preprocessing.mdx

+++ b/docs/source/en/preprocessing.mdx

@@ -199,22 +199,22 @@ Audio inputs are preprocessed differently than textual inputs, but the end goal

pip install datasets

```

-Load the keyword spotting task from the [SUPERB](https://huggingface.co/datasets/superb) benchmark (see the 🤗 [Datasets tutorial](https://huggingface.co/docs/datasets/load_hub.html) for more details on how to load a dataset):

+Load the [MInDS-14](https://huggingface.co/datasets/PolyAI/minds14) dataset (see the 🤗 [Datasets tutorial](https://huggingface.co/docs/datasets/load_hub.html) for more details on how to load a dataset):

```py

>>> from datasets import load_dataset, Audio

->>> dataset = load_dataset("superb", "ks")

+>>> dataset = load_dataset("PolyAI/minds14", name="en-US", split="train")

```

Access the first element of the `audio` column to take a look at the input. Calling the `audio` column will automatically load and resample the audio file:

```py

->>> dataset["train"][0]["audio"]

-{'array': array([ 0. , 0. , 0. , ..., -0.00592041,

- -0.00405884, -0.00253296], dtype=float32),

- 'path': '/root/.cache/huggingface/datasets/downloads/extracted/05734a36d88019a09725c20cc024e1c4e7982e37d7d55c0c1ca1742ea1cdd47f/_background_noise_/doing_the_dishes.wav',

- 'sampling_rate': 16000}

+>>> dataset[0]["audio"]

+{'array': array([ 0. , 0.00024414, -0.00024414, ..., -0.00024414,

+ 0. , 0. ], dtype=float32),

+ 'path': '/root/.cache/huggingface/datasets/downloads/extracted/f14948e0e84be638dd7943ac36518a4cf3324e8b7aa331c5ab11541518e9368c/en-US~JOINT_ACCOUNT/602ba55abb1e6d0fbce92065.wav',

+ 'sampling_rate': 8000}

```

This returns three items:

@@ -227,34 +227,34 @@ This returns three items:

For this tutorial, you will use the [Wav2Vec2](https://huggingface.co/facebook/wav2vec2-base) model. As you can see from the model card, the Wav2Vec2 model is pretrained on 16kHz sampled speech audio. It is important your audio data's sampling rate matches the sampling rate of the dataset used to pretrain the model. If your data's sampling rate isn't the same, then you need to resample your audio data.

-For example, load the [LJ Speech](https://huggingface.co/datasets/lj_speech) dataset which has a sampling rate of 22050kHz. In order to use the Wav2Vec2 model with this dataset, downsample the sampling rate to 16kHz:

+For example, the [MInDS-14](https://huggingface.co/datasets/PolyAI/minds14) dataset has a sampling rate of 8000kHz. In order to use the Wav2Vec2 model with this dataset, upsample the sampling rate to 16kHz:

```py

->>> lj_speech = load_dataset("lj_speech", split="train")

->>> lj_speech[0]["audio"]

-{'array': array([-7.3242188e-04, -7.6293945e-04, -6.4086914e-04, ...,

- 7.3242188e-04, 2.1362305e-04, 6.1035156e-05], dtype=float32),

- 'path': '/root/.cache/huggingface/datasets/downloads/extracted/917ece08c95cf0c4115e45294e3cd0dee724a1165b7fc11798369308a465bd26/LJSpeech-1.1/wavs/LJ001-0001.wav',

- 'sampling_rate': 22050}

+>>> dataset = load_dataset("PolyAI/minds14", name="en-US", split="train")

+>>> dataset[0]["audio"]

+{'array': array([ 0. , 0.00024414, -0.00024414, ..., -0.00024414,

+ 0. , 0. ], dtype=float32),

+ 'path': '/root/.cache/huggingface/datasets/downloads/extracted/f14948e0e84be638dd7943ac36518a4cf3324e8b7aa331c5ab11541518e9368c/en-US~JOINT_ACCOUNT/602ba55abb1e6d0fbce92065.wav',

+ 'sampling_rate': 8000}

```

-1. Use 🤗 Datasets' [`cast_column`](https://huggingface.co/docs/datasets/package_reference/main_classes.html#datasets.Dataset.cast_column) method to downsample the sampling rate to 16kHz:

+1. Use 🤗 Datasets' [`cast_column`](https://huggingface.co/docs/datasets/package_reference/main_classes.html#datasets.Dataset.cast_column) method to upsample the sampling rate to 16kHz:

```py

->>> lj_speech = lj_speech.cast_column("audio", Audio(sampling_rate=16_000))

+>>> dataset = dataset.cast_column("audio", Audio(sampling_rate=16_000))

```

2. Load the audio file:

```py

->>> lj_speech[0]["audio"]

-{'array': array([-0.00064146, -0.00074657, -0.00068768, ..., 0.00068341,

- 0.00014045, 0. ], dtype=float32),

- 'path': '/root/.cache/huggingface/datasets/downloads/extracted/917ece08c95cf0c4115e45294e3cd0dee724a1165b7fc11798369308a465bd26/LJSpeech-1.1/wavs/LJ001-0001.wav',

+>>> dataset[0]["audio"]

+{'array': array([ 2.3443763e-05, 2.1729663e-04, 2.2145823e-04, ...,

+ 3.8356509e-05, -7.3497440e-06, -2.1754686e-05], dtype=float32),

+ 'path': '/root/.cache/huggingface/datasets/downloads/extracted/f14948e0e84be638dd7943ac36518a4cf3324e8b7aa331c5ab11541518e9368c/en-US~JOINT_ACCOUNT/602ba55abb1e6d0fbce92065.wav',

'sampling_rate': 16000}

```

-As you can see, the `sampling_rate` was downsampled to 16kHz. Now that you know how resampling works, let's return to our previous example with the SUPERB dataset!

+As you can see, the `sampling_rate` is now 16kHz!

### Feature extractor

@@ -271,9 +271,10 @@ Load the feature extractor with [`AutoFeatureExtractor.from_pretrained`]:

Pass the audio `array` to the feature extractor. We also recommend adding the `sampling_rate` argument in the feature extractor in order to better debug any silent errors that may occur.

```py

->>> audio_input = [dataset["train"][0]["audio"]["array"]]

+>>> audio_input = [dataset[0]["audio"]["array"]]

>>> feature_extractor(audio_input, sampling_rate=16000)

-{'input_values': [array([ 0.00045439, 0.00045439, 0.00045439, ..., -0.1578519 , -0.10807519, -0.06727459], dtype=float32)]}

+{'input_values': [array([ 3.8106556e-04, 2.7506407e-03, 2.8015103e-03, ...,

+ 5.6335266e-04, 4.6588284e-06, -1.7142107e-04], dtype=float32)]}

```

### Pad and truncate

@@ -281,11 +282,11 @@ Pass the audio `array` to the feature extractor. We also recommend adding the `s

Just like the tokenizer, you can apply padding or truncation to handle variable sequences in a batch. Take a look at the sequence length of these two audio samples:

```py

->>> dataset["train"][0]["audio"]["array"].shape

-(1522930,)

+>>> dataset[0]["audio"]["array"].shape

+(173398,)

->>> dataset["train"][1]["audio"]["array"].shape

-(988891,)

+>>> dataset[1]["audio"]["array"].shape

+(106496,)

```

As you can see, the first sample has a longer sequence than the second sample. Let's create a function that will preprocess the dataset. Specify a maximum sample length, and the feature extractor will either pad or truncate the sequences to match it:

@@ -297,7 +298,7 @@ As you can see, the first sample has a longer sequence than the second sample. L

... audio_arrays,

... sampling_rate=16000,

... padding=True,

-... max_length=1000000,

+... max_length=100000,

... truncation=True,

... )

... return inputs

@@ -306,17 +307,17 @@ As you can see, the first sample has a longer sequence than the second sample. L

Apply the function to the the first few examples in the dataset:

```py

->>> processed_dataset = preprocess_function(dataset["train"][:5])

+>>> processed_dataset = preprocess_function(dataset[:5])

```

Now take another look at the processed sample lengths:

```py

>>> processed_dataset["input_values"][0].shape

-(1000000,)

+(100000,)

>>> processed_dataset["input_values"][1].shape

-(1000000,)

+(100000,)

```

The lengths of the first two samples now match the maximum length you specified.

diff --git a/docs/source/en/quicktour.mdx b/docs/source/en/quicktour.mdx

index 1fc4f8b865dc..057196a78117 100644

--- a/docs/source/en/quicktour.mdx

+++ b/docs/source/en/quicktour.mdx

@@ -115,23 +115,23 @@ Create a [`pipeline`] with the task you want to solve for and the model you want

>>> speech_recognizer = pipeline("automatic-speech-recognition", model="facebook/wav2vec2-base-960h")

```

-Next, load a dataset (see the 🤗 Datasets [Quick Start](https://huggingface.co/docs/datasets/quickstart.html) for more details) you'd like to iterate over. For example, let's load the [SUPERB](https://huggingface.co/datasets/superb) dataset:

+Next, load a dataset (see the 🤗 Datasets [Quick Start](https://huggingface.co/docs/datasets/quickstart.html) for more details) you'd like to iterate over. For example, let's load the [MInDS-14](https://huggingface.co/datasets/PolyAI/minds14) dataset:

```py

->>> import datasets

+>>> from datasets import load_dataset

->>> dataset = datasets.load_dataset("superb", name="asr", split="test") # doctest: +IGNORE_RESULT

+>>> dataset = load_dataset("PolyAI/minds14", name="en-US", split="train") # doctest: +IGNORE_RESULT

```

You can pass a whole dataset pipeline:

```py

->>> files = dataset["file"]

+>>> files = dataset["path"]

>>> speech_recognizer(files[:4])

-[{'text': 'HE HOPED THERE WOULD BE STEW FOR DINNER TURNIPS AND CARROTS AND BRUISED POTATOES AND FAT MUTTON PIECES TO BE LADLED OUT IN THICK PEPPERED FLOWER FAT AND SAUCE'},

- {'text': 'STUFFERED INTO YOU HIS BELLY COUNSELLED HIM'},

- {'text': 'AFTER EARLY NIGHTFALL THE YELLOW LAMPS WOULD LIGHT UP HERE AND THERE THE SQUALID QUARTER OF THE BROTHELS'},

- {'text': 'HO BERTIE ANY GOOD IN YOUR MIND'}]

+[{'text': 'I WOULD LIKE TO SET UP A JOINT ACCOUNT WITH MY PARTNER HOW DO I PROCEED WITH DOING THAT'},

+ {'text': "FONDERING HOW I'D SET UP A JOIN TO HELL T WITH MY WIFE AND WHERE THE AP MIGHT BE"},

+ {'text': "I I'D LIKE TOY SET UP A JOINT ACCOUNT WITH MY PARTNER I'M NOT SEEING THE OPTION TO DO IT ON THE APSO I CALLED IN TO GET SOME HELP CAN I JUST DO IT OVER THE PHONE WITH YOU AND GIVE YOU THE INFORMATION OR SHOULD I DO IT IN THE AP AN I'M MISSING SOMETHING UQUETTE HAD PREFERRED TO JUST DO IT OVER THE PHONE OF POSSIBLE THINGS"},

+ {'text': 'HOW DO I FURN A JOINA COUT'}]

```

For a larger dataset where the inputs are big (like in speech or vision), you will want to pass along a generator instead of a list that loads all the inputs in memory. See the [pipeline documentation](./main_classes/pipelines) for more information.

diff --git a/docs/source/en/serialization.mdx b/docs/source/en/serialization.mdx

index 65fb5fa5cc54..acdabb717024 100644

--- a/docs/source/en/serialization.mdx

+++ b/docs/source/en/serialization.mdx

@@ -67,6 +67,7 @@ Ready-made configurations include the following architectures:

- PLBart

- RoBERTa

- T5

+- TAPEX

- ViT

- XLM-RoBERTa

- XLM-RoBERTa-XL

diff --git a/docs/source/en/task_summary.mdx b/docs/source/en/task_summary.mdx

index 95c2d9c201a5..17be51960588 100644

--- a/docs/source/en/task_summary.mdx

+++ b/docs/source/en/task_summary.mdx

@@ -967,3 +967,156 @@ Here is an example of doing translation using a model and a tokenizer. The proce

We get the same translation as with the pipeline example.

+

+## Audio classification

+

+Audio classification assigns a class to an audio signal. The Keyword Spotting dataset from the [SUPERB](https://huggingface.co/datasets/superb) benchmark is an example dataset that can be used for audio classification fine-tuning. This dataset contains ten classes of keywords for classification. If you'd like to fine-tune a model for audio classification, take a look at the [run_audio_classification.py](https://github.com/huggingface/transformers/blob/main/examples/pytorch/audio-classification/run_audio_classification.py) script or this [how-to guide](./tasks/audio_classification).

+

+The following examples demonstrate how to use a [`pipeline`] and a model and tokenizer for audio classification inference:

+

+```py

+>>> from transformers import pipeline

+

+>>> audio_classifier = pipeline(

+... task="audio-classification", model="ehcalabres/wav2vec2-lg-xlsr-en-speech-emotion-recognition"

+... )

+>>> audio_classifier("jfk_moon_speech.wav")

+[{'label': 'calm', 'score': 0.13856211304664612},

+ {'label': 'disgust', 'score': 0.13148026168346405},

+ {'label': 'happy', 'score': 0.12635163962841034},

+ {'label': 'angry', 'score': 0.12439591437578201},

+ {'label': 'fearful', 'score': 0.12404385954141617}]

+```

+

+The general process for using a model and feature extractor for audio classification is:

+

+1. Instantiate a feature extractor and a model from the checkpoint name.

+2. Process the audio signal to be classified with a feature extractor.

+3. Pass the input through the model and take the `argmax` to retrieve the most likely class.

+4. Convert the class id to a class name with `id2label` to return an interpretable result.

+

+

+

+```py

+>>> from transformers import AutoFeatureExtractor, AutoModelForAudioClassification

+>>> from datasets import load_dataset

+>>> import torch

+

+>>> dataset = load_dataset("hf-internal-testing/librispeech_asr_demo", "clean", split="validation")

+>>> dataset = dataset.sort("id")

+>>> sampling_rate = dataset.features["audio"].sampling_rate

+

+>>> feature_extractor = AutoFeatureExtractor.from_pretrained("superb/wav2vec2-base-superb-ks")

+>>> model = AutoModelForAudioClassification.from_pretrained("superb/wav2vec2-base-superb-ks")

+

+>>> inputs = feature_extractor(dataset[0]["audio"]["array"], sampling_rate=sampling_rate, return_tensors="pt")

+

+>>> with torch.no_grad():

+... logits = model(**inputs).logits

+

+>>> predicted_class_ids = torch.argmax(logits, dim=-1).item()

+>>> predicted_label = model.config.id2label[predicted_class_ids]

+>>> predicted_label

+```

+

+

+

+## Automatic speech recognition

+

+Automatic speech recognition transcribes an audio signal to text. The [Common Voice](https://huggingface.co/datasets/common_voice) dataset is an example dataset that can be used for automatic speech recognition fine-tuning. It contains an audio file of a speaker and the corresponding sentence. If you'd like to fine-tune a model for automatic speech recognition, take a look at the [run_speech_recognition_ctc.py](https://github.com/huggingface/transformers/blob/main/examples/pytorch/speech-recognition/run_speech_recognition_ctc.py) or [run_speech_recognition_seq2seq.py](https://github.com/huggingface/transformers/blob/main/examples/pytorch/speech-recognition/run_speech_recognition_seq2seq.py) scripts or this [how-to guide](./tasks/asr).

+

+The following examples demonstrate how to use a [`pipeline`] and a model and tokenizer for automatic speech recognition inference:

+

+```py

+>>> from transformers import pipeline

+

+>>> speech_recognizer = pipeline(task="automatic-speech-recognition", model="facebook/wav2vec2-base-960h")

+>>> speech_recognizer("jfk_moon_speech.wav")

+{'text': "PRESENTETE MISTER VICE PRESIDENT GOVERNOR CONGRESSMEN THOMAS SAN O TE WILAN CONGRESSMAN MILLA MISTER WEBB MSTBELL SCIENIS DISTINGUISHED GUESS AT LADIES AND GENTLEMAN I APPRECIATE TO YOUR PRESIDENT HAVING MADE ME AN HONORARY VISITING PROFESSOR AND I WILL ASSURE YOU THAT MY FIRST LECTURE WILL BE A VERY BRIEF I AM DELIGHTED TO BE HERE AND I'M PARTICULARLY DELIGHTED TO BE HERE ON THIS OCCASION WE MEED AT A COLLEGE NOTED FOR KNOWLEGE IN A CITY NOTED FOR PROGRESS IN A STATE NOTED FOR STRAINTH AN WE STAND IN NEED OF ALL THREE"}

+```

+

+The general process for using a model and processor for automatic speech recognition is:

+

+1. Instantiate a processor (which regroups a feature extractor for input processing and a tokenizer for decoding) and a model from the checkpoint name.

+2. Process the audio signal and text with a processor.

+3. Pass the input through the model and take the `argmax` to retrieve the predicted text.

+4. Decode the text with a tokenizer to obtain the transcription.

+

+

+

+```py

+>>> from transformers import AutoProcessor, AutoModelForCTC

+>>> from datasets import load_dataset

+>>> import torch

+

+>>> dataset = load_dataset("hf-internal-testing/librispeech_asr_demo", "clean", split="validation")

+>>> dataset = dataset.sort("id")

+>>> sampling_rate = dataset.features["audio"].sampling_rate

+

+>>> processor = AutoProcessor.from_pretrained("facebook/wav2vec2-base-960h")

+>>> model = AutoModelForCTC.from_pretrained("facebook/wav2vec2-base-960h")

+

+>>> inputs = processor(dataset[0]["audio"]["array"], sampling_rate=sampling_rate, return_tensors="pt")

+>>> with torch.no_grad():

+... logits = model(**inputs).logits

+>>> predicted_ids = torch.argmax(logits, dim=-1)

+

+>>> transcription = processor.batch_decode(predicted_ids)

+>>> transcription[0]

+```

+

+

+

+## Image classification

+

+Like text and audio classification, image classification assigns a class to an image. The [CIFAR-100](https://huggingface.co/datasets/cifar100) dataset is an example dataset that can be used for image classification fine-tuning. It contains an image and the corresponding class. If you'd like to fine-tune a model for image classification, take a look at the [run_image_classification.py](https://github.com/huggingface/transformers/blob/main/examples/pytorch/image-classification/run_image_classification.py) script or this [how-to guide](./tasks/image_classification).

+

+The following examples demonstrate how to use a [`pipeline`] and a model and tokenizer for image classification inference:

+

+```py

+>>> from transformers import pipeline

+

+>>> vision_classifier = pipeline(task="image-classification")

+>>> vision_classifier(

+... images="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/pipeline-cat-chonk.jpeg"

+... )

+[{'label': 'lynx, catamount', 'score': 0.4403027892112732},

+ {'label': 'cougar, puma, catamount, mountain lion, painter, panther, Felis concolor',

+ 'score': 0.03433405980467796},

+ {'label': 'snow leopard, ounce, Panthera uncia',

+ 'score': 0.032148055732250214},

+ {'label': 'Egyptian cat', 'score': 0.02353910356760025},

+ {'label': 'tiger cat', 'score': 0.023034192621707916}]

+```

+

+The general process for using a model and feature extractor for image classification is:

+

+1. Instantiate a feature extractor and a model from the checkpoint name.

+2. Process the image to be classified with a feature extractor.

+3. Pass the input through the model and take the `argmax` to retrieve the predicted class.

+4. Convert the class id to a class name with `id2label` to return an interpretable result.

+

+

+

+```py

+>>> from transformers import AutoFeatureExtractor, AutoModelForImageClassification

+>>> import torch

+>>> from datasets import load_dataset

+

+>>> dataset = load_dataset("huggingface/cats-image")

+>>> image = dataset["test"]["image"][0]

+

+>>> feature_extractor = AutoFeatureExtractor.from_pretrained("google/vit-base-patch16-224")

+>>> model = AutoModelForImageClassification.from_pretrained("google/vit-base-patch16-224")

+

+>>> inputs = feature_extractor(image, return_tensors="pt")

+

+>>> with torch.no_grad():

+... logits = model(**inputs).logits

+

+>>> predicted_label = logits.argmax(-1).item()

+>>> print(model.config.id2label[predicted_label])

+Egyptian cat

+```

+

+

diff --git a/docs/source/en/tasks/asr.mdx b/docs/source/en/tasks/asr.mdx

index 6fe90e5cd7d4..dac5015bf815 100644

--- a/docs/source/en/tasks/asr.mdx

+++ b/docs/source/en/tasks/asr.mdx

@@ -16,7 +16,7 @@ specific language governing permissions and limitations under the License.

Automatic speech recognition (ASR) converts a speech signal to text. It is an example of a sequence-to-sequence task, going from a sequence of audio inputs to textual outputs. Voice assistants like Siri and Alexa utilize ASR models to assist users.

-This guide will show you how to fine-tune [Wav2Vec2](https://huggingface.co/facebook/wav2vec2-base) on the [TIMIT](https://huggingface.co/datasets/timit_asr) dataset to transcribe audio to text.

+This guide will show you how to fine-tune [Wav2Vec2](https://huggingface.co/facebook/wav2vec2-base) on the [MInDS-14](https://huggingface.co/datasets/PolyAI/minds14) dataset to transcribe audio to text.

@@ -24,50 +24,54 @@ See the automatic speech recognition [task page](https://huggingface.co/tasks/au

-## Load TIMIT dataset

+## Load MInDS-14 dataset

-Load the TIMIT dataset from the 🤗 Datasets library:

+Load the [MInDS-14](https://huggingface.co/datasets/PolyAI/minds14) from the 🤗 Datasets library:

```py

->>> from datasets import load_dataset

+>>> from datasets import load_dataset, Audio

->>> timit = load_dataset("timit_asr")

+>>> minds = load_dataset("PolyAI/minds14", name="en-US", split="train")

```

-Then take a look at an example:

+Split this dataset into a train and test set:

```py

->>> timit

+>>> minds = minds.train_test_split(test_size=0.2)

+```

+

+Then take a look at the dataset:

+

+```py

+>>> minds

DatasetDict({

train: Dataset({

- features: ['file', 'audio', 'text', 'phonetic_detail', 'word_detail', 'dialect_region', 'sentence_type', 'speaker_id', 'id'],

- num_rows: 4620

+ features: ['path', 'audio', 'transcription', 'english_transcription', 'intent_class', 'lang_id'],

+ num_rows: 450

})

test: Dataset({

- features: ['file', 'audio', 'text', 'phonetic_detail', 'word_detail', 'dialect_region', 'sentence_type', 'speaker_id', 'id'],

- num_rows: 1680

+ features: ['path', 'audio', 'transcription', 'english_transcription', 'intent_class', 'lang_id'],

+ num_rows: 113

})

})

```

-While the dataset contains a lot of helpful information, like `dialect_region` and `sentence_type`, you will focus on the `audio` and `text` fields in this guide. Remove the other columns:

+While the dataset contains a lot of helpful information, like `lang_id` and `intent_class`, you will focus on the `audio` and `transcription` columns in this guide. Remove the other columns:

```py

->>> timit = timit.remove_columns(

-... ["phonetic_detail", "word_detail", "dialect_region", "id", "sentence_type", "speaker_id"]

-... )

+>>> minds = minds.remove_columns(["english_transcription", "intent_class", "lang_id"])

```

Take a look at the example again:

```py

->>> timit["train"][0]

-{'audio': {'array': array([-2.1362305e-04, 6.1035156e-05, 3.0517578e-05, ...,

- -3.0517578e-05, -9.1552734e-05, -6.1035156e-05], dtype=float32),

- 'path': '/root/.cache/huggingface/datasets/downloads/extracted/404950a46da14eac65eb4e2a8317b1372fb3971d980d91d5d5b221275b1fd7e0/data/TRAIN/DR4/MMDM0/SI681.WAV',

- 'sampling_rate': 16000},

- 'file': '/root/.cache/huggingface/datasets/downloads/extracted/404950a46da14eac65eb4e2a8317b1372fb3971d980d91d5d5b221275b1fd7e0/data/TRAIN/DR4/MMDM0/SI681.WAV',

- 'text': 'Would such an act of refusal be useful?'}

+>>> minds["train"][0]

+{'audio': {'array': array([-0.00024414, 0. , 0. , ..., 0.00024414,

+ 0.00024414, 0.00024414], dtype=float32),

+ 'path': '/root/.cache/huggingface/datasets/downloads/extracted/f14948e0e84be638dd7943ac36518a4cf3324e8b7aa331c5ab11541518e9368c/en-US~APP_ERROR/602ba9e2963e11ccd901cd4f.wav',

+ 'sampling_rate': 8000},

+ 'path': '/root/.cache/huggingface/datasets/downloads/extracted/f14948e0e84be638dd7943ac36518a4cf3324e8b7aa331c5ab11541518e9368c/en-US~APP_ERROR/602ba9e2963e11ccd901cd4f.wav',

+ 'transcription': "hi I'm trying to use the banking app on my phone and currently my checking and savings account balance is not refreshing"}

```

The `audio` column contains a 1-dimensional `array` of the speech signal that must be called to load and resample the audio file.

@@ -82,6 +86,19 @@ Load the Wav2Vec2 processor to process the audio signal and transcribed text:

>>> processor = AutoProcessor.from_pretrained("facebook/wav2vec2-base")

```

+The [MInDS-14](https://huggingface.co/datasets/PolyAI/minds14) dataset has a sampling rate of 8000khz. You will need to resample the dataset to use the pretrained Wav2Vec2 model:

+

+```py

+>>> minds = minds.cast_column("audio", Audio(sampling_rate=16_000))

+>>> minds["train"][0]

+{'audio': {'array': array([-2.38064706e-04, -1.58618059e-04, -5.43987835e-06, ...,

+ 2.78103951e-04, 2.38446111e-04, 1.18740834e-04], dtype=float32),

+ 'path': '/root/.cache/huggingface/datasets/downloads/extracted/f14948e0e84be638dd7943ac36518a4cf3324e8b7aa331c5ab11541518e9368c/en-US~APP_ERROR/602ba9e2963e11ccd901cd4f.wav',

+ 'sampling_rate': 16000},

+ 'path': '/root/.cache/huggingface/datasets/downloads/extracted/f14948e0e84be638dd7943ac36518a4cf3324e8b7aa331c5ab11541518e9368c/en-US~APP_ERROR/602ba9e2963e11ccd901cd4f.wav',

+ 'transcription': "hi I'm trying to use the banking app on my phone and currently my checking and savings account balance is not refreshing"}

+```

+

The preprocessing function needs to:

1. Call the `audio` column to load and resample the audio file.

@@ -96,14 +113,14 @@ The preprocessing function needs to:

... batch["input_length"] = len(batch["input_values"])

... with processor.as_target_processor():

-... batch["labels"] = processor(batch["text"]).input_ids

+... batch["labels"] = processor(batch["transcription"]).input_ids

... return batch

```

Use 🤗 Datasets [`map`](https://huggingface.co/docs/datasets/package_reference/main_classes.html#datasets.Dataset.map) function to apply the preprocessing function over the entire dataset. You can speed up the map function by increasing the number of processes with `num_proc`. Remove the columns you don't need:

```py

->>> timit = timit.map(prepare_dataset, remove_columns=timit.column_names["train"], num_proc=4)

+>>> encoded_minds = minds.map(prepare_dataset, remove_columns=minds.column_names["train"], num_proc=4)

```

🤗 Transformers doesn't have a data collator for automatic speech recognition, so you will need to create one. You can adapt the [`DataCollatorWithPadding`] to create a batch of examples for automatic speech recognition. It will also dynamically pad your text and labels to the length of the longest element in its batch, so they are a uniform length. While it is possible to pad your text in the `tokenizer` function by setting `padding=True`, dynamic padding is more efficient.

@@ -165,7 +182,7 @@ Load Wav2Vec2 with [`AutoModelForCTC`]. For `ctc_loss_reduction`, it is often be

>>> from transformers import AutoModelForCTC, TrainingArguments, Trainer

>>> model = AutoModelForCTC.from_pretrained(

-... "facebook/wav2vec-base",

+... "facebook/wav2vec2-base",

... ctc_loss_reduction="mean",

... pad_token_id=processor.tokenizer.pad_token_id,

... )

@@ -200,8 +217,8 @@ At this point, only three steps remain:

>>> trainer = Trainer(

... model=model,

... args=training_args,

-... train_dataset=timit["train"],

-... eval_dataset=timit["test"],

+... train_dataset=encoded_minds["train"],

+... eval_dataset=encoded_minds["test"],

... tokenizer=processor.feature_extractor,

... data_collator=data_collator,

... )

diff --git a/docs/source/en/tasks/audio_classification.mdx b/docs/source/en/tasks/audio_classification.mdx

index e239461762fc..6dee7a19dd5d 100644

--- a/docs/source/en/tasks/audio_classification.mdx

+++ b/docs/source/en/tasks/audio_classification.mdx

@@ -16,7 +16,7 @@ specific language governing permissions and limitations under the License.

Audio classification assigns a label or class to audio data. It is similar to text classification, except an audio input is continuous and must be discretized, whereas text can be split into tokens. Some practical applications of audio classification include identifying intent, speakers, and even animal species by their sounds.

-This guide will show you how to fine-tune [Wav2Vec2](https://huggingface.co/facebook/wav2vec2-base) on the Keyword Spotting subset of the [SUPERB](https://huggingface.co/datasets/superb) benchmark to classify utterances.

+This guide will show you how to fine-tune [Wav2Vec2](https://huggingface.co/facebook/wav2vec2-base) on the [MInDS-14](https://huggingface.co/datasets/PolyAI/minds14) to classify intent.

@@ -24,27 +24,59 @@ See the audio classification [task page](https://huggingface.co/tasks/audio-clas

-## Load SUPERB dataset

+## Load MInDS-14 dataset

-Load the SUPERB dataset from the 🤗 Datasets library:

+Load the [MInDS-14](https://huggingface.co/datasets/PolyAI/minds14) from the 🤗 Datasets library:

```py

->>> from datasets import load_dataset

+>>> from datasets import load_dataset, Audio

->>> ks = load_dataset("superb", "ks")

+>>> minds = load_dataset("PolyAI/minds14", name="en-US", split="train")

```

-Then take a look at an example:

+Split this dataset into a train and test set:

```py

->>> ks["train"][0]

-{'audio': {'array': array([ 0. , 0. , 0. , ..., -0.00592041, -0.00405884, -0.00253296], dtype=float32), 'path': '/root/.cache/huggingface/datasets/downloads/extracted/05734a36d88019a09725c20cc024e1c4e7982e37d7d55c0c1ca1742ea1cdd47f/_background_noise_/doing_the_dishes.wav', 'sampling_rate': 16000}, 'file': '/root/.cache/huggingface/datasets/downloads/extracted/05734a36d88019a09725c20cc024e1c4e7982e37d7d55c0c1ca1742ea1cdd47f/_background_noise_/doing_the_dishes.wav', 'label': 10}

+>>> minds = minds.train_test_split(test_size=0.2)

```

-The `audio` column contains a 1-dimensional `array` of the speech signal that must be called to load and resample the audio file. The `label` column is an integer that represents the utterance class. Create a dictionary that maps a label name to an integer and vice versa. The mapping will help the model recover the label name from the label number:

+Then take a look at the dataset:

```py

->>> labels = ks["train"].features["label"].names

+>>> minds

+DatasetDict({

+ train: Dataset({

+ features: ['path', 'audio', 'transcription', 'english_transcription', 'intent_class', 'lang_id'],

+ num_rows: 450

+ })

+ test: Dataset({

+ features: ['path', 'audio', 'transcription', 'english_transcription', 'intent_class', 'lang_id'],

+ num_rows: 113

+ })

+})

+```

+

+While the dataset contains a lot of other useful information, like `lang_id` and `english_transcription`, you will focus on the `audio` and `intent_class` in this guide. Remove the other columns:

+

+```py

+>>> minds = minds.remove_columns(["path", "transcription", "english_transcription", "lang_id"])

+```

+

+Take a look at an example now:

+

+```py

+>>> minds["train"][0]

+{'audio': {'array': array([ 0. , 0. , 0. , ..., -0.00048828,

+ -0.00024414, -0.00024414], dtype=float32),

+ 'path': '/root/.cache/huggingface/datasets/downloads/extracted/f14948e0e84be638dd7943ac36518a4cf3324e8b7aa331c5ab11541518e9368c/en-US~APP_ERROR/602b9a5fbb1e6d0fbce91f52.wav',

+ 'sampling_rate': 8000},

+ 'intent_class': 2}

+```

+

+The `audio` column contains a 1-dimensional `array` of the speech signal that must be called to load and resample the audio file. The `intent_class` column is an integer that represents the class id of intent. Create a dictionary that maps a label name to an integer and vice versa. The mapping will help the model recover the label name from the label number:

+

+```py

+>>> labels = minds["train"].features["intent_class"].names

>>> label2id, id2label = dict(), dict()

>>> for i, label in enumerate(labels):

... label2id[label] = str(i)

@@ -54,11 +86,11 @@ The `audio` column contains a 1-dimensional `array` of the speech signal that mu

Now you can convert the label number to a label name for more information:

```py

->>> id2label[str(10)]

-'_silence_'

+>>> id2label[str(2)]

+'app_error'

```

-Each keyword - or label - corresponds to a number; `10` indicates `silence` in the example above.

+Each keyword - or label - corresponds to a number; `2` indicates `app_error` in the example above.

## Preprocess

@@ -70,6 +102,18 @@ Load the Wav2Vec2 feature extractor to process the audio signal:

>>> feature_extractor = AutoFeatureExtractor.from_pretrained("facebook/wav2vec2-base")

```

+The [MInDS-14](https://huggingface.co/datasets/PolyAI/minds14) dataset has a sampling rate of 8000khz. You will need to resample the dataset to use the pretrained Wav2Vec2 model:

+

+```py

+>>> minds = minds.cast_column("audio", Audio(sampling_rate=16_000))

+>>> minds["train"][0]

+{'audio': {'array': array([ 2.2098757e-05, 4.6582241e-05, -2.2803260e-05, ...,

+ -2.8419291e-04, -2.3305941e-04, -1.1425107e-04], dtype=float32),

+ 'path': '/root/.cache/huggingface/datasets/downloads/extracted/f14948e0e84be638dd7943ac36518a4cf3324e8b7aa331c5ab11541518e9368c/en-US~APP_ERROR/602b9a5fbb1e6d0fbce91f52.wav',

+ 'sampling_rate': 16000},

+ 'intent_class': 2}

+```

+

The preprocessing function needs to:

1. Call the `audio` column to load and if necessary resample the audio file.

@@ -85,10 +129,11 @@ The preprocessing function needs to:

... return inputs

```

-Use 🤗 Datasets [`map`](https://huggingface.co/docs/datasets/package_reference/main_classes.html#datasets.Dataset.map) function to apply the preprocessing function over the entire dataset. You can speed up the `map` function by setting `batched=True` to process multiple elements of the dataset at once. Remove the columns you don't need:

+Use 🤗 Datasets [`map`](https://huggingface.co/docs/datasets/package_reference/main_classes.html#datasets.Dataset.map) function to apply the preprocessing function over the entire dataset. You can speed up the `map` function by setting `batched=True` to process multiple elements of the dataset at once. Remove the columns you don't need, and rename `intent_class` to `label` because that is what the model expects:

```py

->>> encoded_ks = ks.map(preprocess_function, remove_columns=["audio", "file"], batched=True)

+>>> encoded_minds = minds.map(preprocess_function, remove_columns="audio", batched=True)

+>>> encoded_minds = encoded_minds.rename_column("intent_class", "label")

```

## Train

@@ -130,8 +175,8 @@ At this point, only three steps remain:

>>> trainer = Trainer(

... model=model,

... args=training_args,

-... train_dataset=encoded_ks["train"],

-... eval_dataset=encoded_ks["validation"],

+... train_dataset=encoded_minds["train"],

+... eval_dataset=encoded_minds["test"],

... tokenizer=feature_extractor,

... )

diff --git a/docs/source/es/quicktour.mdx b/docs/source/es/quicktour.mdx

index 8b400867099e..7b58c987b73b 100644

--- a/docs/source/es/quicktour.mdx

+++ b/docs/source/es/quicktour.mdx

@@ -115,23 +115,22 @@ Crea un [`pipeline`] con la tarea que deseas resolver y el modelo que quieres us

>>> speech_recognizer = pipeline("automatic-speech-recognition", model="facebook/wav2vec2-base-960h", device=0)

```

-A continuación, carga el dataset (ve 🤗 Datasets [Quick Start](https://huggingface.co/docs/datasets/quickstart.html) para más detalles) sobre el que quisieras iterar. Por ejemplo, vamos a cargar el dataset [SUPERB](https://huggingface.co/datasets/superb):

+A continuación, carga el dataset (ve 🤗 Datasets [Quick Start](https://huggingface.co/docs/datasets/quickstart.html) para más detalles) sobre el que quisieras iterar. Por ejemplo, vamos a cargar el dataset [MInDS-14](https://huggingface.co/datasets/PolyAI/minds14):

```py

>>> import datasets

->>> dataset = datasets.load_dataset("superb", name="asr", split="test") # doctest: +IGNORE_RESULT

+>>> dataset = datasets.load_dataset("PolyAI/minds14", name="en-US", split="train") # doctest: +IGNORE_RESULT

```

Puedes pasar un pipeline para un dataset:

```py

->>> files = dataset["file"]

+>>> files = dataset["path"]

>>> speech_recognizer(files[:4])

-[{'text': 'HE HOPED THERE WOULD BE STEW FOR DINNER TURNIPS AND CARROTS AND BRUISED POTATOES AND FAT MUTTON PIECES TO BE LADLED OUT IN THICK PEPPERED FLOWER FAT AND SAUCE'},

- {'text': 'STUFFERED INTO YOU HIS BELLY COUNSELLED HIM'},

- {'text': 'AFTER EARLY NIGHTFALL THE YELLOW LAMPS WOULD LIGHT UP HERE AND THERE THE SQUALID QUARTER OF THE BROTHELS'},

- {'text': 'HO BERTIE ANY GOOD IN YOUR MIND'}]

+[{'text': 'I WOULD LIKE TO SET UP A JOINT ACCOUNT WITH MY PARTNER HOW DO I PROCEED WITH DOING THAT'},

+ {'text': "FONDERING HOW I'D SET UP A JOIN TO HELL T WITH MY WIFE AND WHERE THE AP MIGHT BE"},

+ {'text': "I I'D LIKE TOY SET UP A JOINT ACCOUNT WITH MY PARTNER I'M NOT SEEING THE OPTION TO DO IT ON THE APSO I CALLED IN TO GET SOME HELP CAN I JUST DO IT OVER THE PHONE WITH YOU AND GIVE YOU THE INFORMATION OR SHOULD I DO IT IN THE AP AN I'M MISSING SOMETHING UQUETTE HAD PREFERRED TO JUST DO IT OVER THE PHONE OF POSSIBLE THINGS"},

```

Para un dataset más grande, donde los inputs son de mayor tamaño (como en habla/audio o visión), querrás pasar un generador en lugar de una lista que carga todos los inputs en memoria. Ve la [documentación del pipeline](./main_classes/pipelines) para más información.

diff --git a/examples/flax/question-answering/run_qa.py b/examples/flax/question-answering/run_qa.py

index 6ab150a762b0..ac4ec706bfcf 100644

--- a/examples/flax/question-answering/run_qa.py

+++ b/examples/flax/question-answering/run_qa.py

@@ -60,7 +60,7 @@

logger = logging.getLogger(__name__)

# Will error if the minimal version of Transformers is not installed. Remove at your own risks.

-check_min_version("4.18.0.dev0")

+check_min_version("4.19.0.dev0")

Array = Any

Dataset = datasets.arrow_dataset.Dataset

diff --git a/examples/flax/text-classification/run_flax_glue.py b/examples/flax/text-classification/run_flax_glue.py

index 06f9caba8943..3ff6134531db 100755