# SetFit - Efficient Few-shot Learning with Sentence Transformers

SetFit is an efficient and prompt-free framework for few-shot fine-tuning of [Sentence Transformers](https://sbert.net/). It achieves high accuracy with little labeled data - for instance, with only 8 labeled examples per class on the Customer Reviews sentiment dataset, SetFit is competitive with fine-tuning RoBERTa Large on the full training set of 3k examples 🤯!

-

Compared to other few-shot learning methods, SetFit has several unique features:

-* 🗣 **No prompts or verbalisers:** Current techniques for few-shot fine-tuning require handcrafted prompts or verbalisers to convert examples into a format that's suitable for the underlying language model. SetFit dispenses with prompts altogether by generating rich embeddings directly from text examples.

+* 🗣 **No prompts or verbalizers:** Current techniques for few-shot fine-tuning require handcrafted prompts or verbalizers to convert examples into a format suitable for the underlying language model. SetFit dispenses with prompts altogether by generating rich embeddings directly from text examples.

* 🏎 **Fast to train:** SetFit doesn't require large-scale models like T0 or GPT-3 to achieve high accuracy. As a result, it is typically an order of magnitude (or more) faster to train and run inference with.

* 🌎 **Multilingual support**: SetFit can be used with any [Sentence Transformer](https://huggingface.co/models?library=sentence-transformers&sort=downloads) on the Hub, which means you can classify text in multiple languages by simply fine-tuning a multilingual checkpoint.

+Check out the [SetFit Documentation](https://huggingface.co/docs/setfit) for more information!

+

## Installation

Download and install `setfit` by running:

```bash

-python -m pip install setfit

+pip install setfit

```

-If you want the bleeding-edge version, install from source by running:

+If you want the bleeding-edge version instead, install from source by running:

```bash

-python -m pip install git+https://github.com/huggingface/setfit.git

+pip install git+https://github.com/huggingface/setfit.git

```

## Usage

-The examples below provide a quick overview on the various features supported in `setfit`. For more examples, check out the [`notebooks`](https://github.com/huggingface/setfit/tree/main/notebooks) folder.

+The [quickstart](https://huggingface.co/docs/setfit/quickstart) is a good place to learn about training, saving, loading, and performing inference with SetFit models.

+

+For more examples, check out the [`notebooks`](https://github.com/huggingface/setfit/tree/main/notebooks) directory, the [tutorials](https://huggingface.co/docs/setfit/tutorials/overview), or the [how-to guides](https://huggingface.co/docs/setfit/how_to/overview).

### Training a SetFit model

`setfit` is integrated with the [Hugging Face Hub](https://huggingface.co/) and provides two main classes:

-* `SetFitModel`: a wrapper that combines a pretrained body from `sentence_transformers` and a classification head from either [`scikit-learn`](https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LogisticRegression.html) or [`SetFitHead`](https://github.com/huggingface/setfit/blob/main/src/setfit/modeling.py) (a differentiable head built upon `PyTorch` with similar APIs to `sentence_transformers`).

-* `SetFitTrainer`: a helper class that wraps the fine-tuning process of SetFit.

+* [`SetFitModel`](https://huggingface.co/docs/setfit/reference/main#setfit.SetFitModel): a wrapper that combines a pretrained body from `sentence_transformers` and a classification head from either [`scikit-learn`](https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LogisticRegression.html) or [`SetFitHead`](https://huggingface.co/docs/setfit/reference/main#setfit.SetFitHead) (a differentiable head built upon `PyTorch` with similar APIs to `sentence_transformers`).

+* [`Trainer`](https://huggingface.co/docs/setfit/reference/trainer#setfit.Trainer): a helper class that wraps the fine-tuning process of SetFit.

-Here is an end-to-end example using a classification head from `scikit-learn`:

+Here is a simple end-to-end training example using the default classification head from `scikit-learn`:

```python

from datasets import load_dataset

-from sentence_transformers.losses import CosineSimilarityLoss

-

-from setfit import SetFitModel, SetFitTrainer, sample_dataset

+from setfit import SetFitModel, Trainer, TrainingArguments, sample_dataset

# Load a dataset from the Hugging Face Hub

@@ -56,304 +57,50 @@ dataset = load_dataset("sst2")

# Simulate the few-shot regime by sampling 8 examples per class

train_dataset = sample_dataset(dataset["train"], label_column="label", num_samples=8)

-eval_dataset = dataset["validation"]

+eval_dataset = dataset["validation"].select(range(100))

+test_dataset = dataset["validation"].select(range(100, len(dataset["validation"])))

# Load a SetFit model from Hub

model = SetFitModel.from_pretrained("sentence-transformers/paraphrase-mpnet-base-v2")

-# Create trainer

-trainer = SetFitTrainer(

- model=model,

- train_dataset=train_dataset,

- eval_dataset=eval_dataset,

- loss_class=CosineSimilarityLoss,

- metric="accuracy",

+args = TrainingArguments(

batch_size=16,

- num_iterations=20, # The number of text pairs to generate for contrastive learning

- num_epochs=1, # The number of epochs to use for contrastive learning

- column_mapping={"sentence": "text", "label": "label"} # Map dataset columns to text/label expected by trainer

+ num_epochs=4,

+ evaluation_strategy="epoch",

+ save_strategy="epoch",

+ load_best_model_at_end=True,

)

-# Train and evaluate

-trainer.train()

-metrics = trainer.evaluate()

-

-# Push model to the Hub

-trainer.push_to_hub("my-awesome-setfit-model")

-

-# Download from Hub and run inference

-model = SetFitModel.from_pretrained("lewtun/my-awesome-setfit-model")

-# Run inference

-preds = model(["i loved the spiderman movie!", "pineapple on pizza is the worst 🤮"])

-```

-

-Here is an end-to-end example using `SetFitHead`:

-

-

-```python

-from datasets import load_dataset

-from sentence_transformers.losses import CosineSimilarityLoss

-

-from setfit import SetFitModel, SetFitTrainer, sample_dataset

-

-

-# Load a dataset from the Hugging Face Hub

-dataset = load_dataset("sst2")

-

-# Simulate the few-shot regime by sampling 8 examples per class

-train_dataset = sample_dataset(dataset["train"], label_column="label", num_samples=8)

-eval_dataset = dataset["validation"]

-

-# Load a SetFit model from Hub

-model = SetFitModel.from_pretrained(

- "sentence-transformers/paraphrase-mpnet-base-v2",

- use_differentiable_head=True,

- head_params={"out_features": num_classes},

-)

-

-# Create trainer

-trainer = SetFitTrainer(

+trainer = Trainer(

model=model,

+ args=args,

train_dataset=train_dataset,

eval_dataset=eval_dataset,

- loss_class=CosineSimilarityLoss,

metric="accuracy",

- batch_size=16,

- num_iterations=20, # The number of text pairs to generate for contrastive learning

- num_epochs=1, # The number of epochs to use for contrastive learning

- column_mapping={"sentence": "text", "label": "label"} # Map dataset columns to text/label expected by trainer

+ column_mapping={"sentence": "text", "label": "label"} # Map dataset columns to text/label expected by trainer

)

# Train and evaluate

-trainer.freeze() # Freeze the head

-trainer.train() # Train only the body

-

-# Unfreeze the head and freeze the body -> head-only training

-trainer.unfreeze(keep_body_frozen=True)

-# or

-# Unfreeze the head and unfreeze the body -> end-to-end training

-trainer.unfreeze(keep_body_frozen=False)

-

-trainer.train(

- num_epochs=25, # The number of epochs to train the head or the whole model (body and head)

- batch_size=16,

- body_learning_rate=1e-5, # The body's learning rate

- learning_rate=1e-2, # The head's learning rate

- l2_weight=0.0, # Weight decay on **both** the body and head. If `None`, will use 0.01.

-)

-metrics = trainer.evaluate()

+trainer.train()

+metrics = trainer.evaluate(test_dataset)

+print(metrics)

+# {'accuracy': 0.8691709844559585}

# Push model to the Hub

-trainer.push_to_hub("my-awesome-setfit-model")

+trainer.push_to_hub("tomaarsen/setfit-paraphrase-mpnet-base-v2-sst2")

-# Download from Hub and run inference

-model = SetFitModel.from_pretrained("lewtun/my-awesome-setfit-model")

+# Download from Hub

+model = SetFitModel.from_pretrained("tomaarsen/setfit-paraphrase-mpnet-base-v2-sst2")

# Run inference

-preds = model(["i loved the spiderman movie!", "pineapple on pizza is the worst 🤮"])

-```

-

-Based on our experiments, `SetFitHead` can achieve similar performance as using a `scikit-learn` head. We use `AdamW` as the optimizer and scale down learning rates by 0.5 every 5 epochs. For more details about the experiments, please check out [here](https://github.com/huggingface/setfit/pull/112#issuecomment-1295773537). We recommend using a large learning rate (e.g. `1e-2`) for `SetFitHead` and a small learning rate (e.g. `1e-5`) for the body in your first attempt.

-

-### Training on multilabel datasets

-

-To train SetFit models on multilabel datasets, specify the `multi_target_strategy` argument when loading the pretrained model:

-

-#### Example using a classification head from `scikit-learn`:

-

-```python

-from setfit import SetFitModel

-

-model = SetFitModel.from_pretrained(

- model_id,

- multi_target_strategy="one-vs-rest",

-)

-```

-

-This will initialise a multilabel classification head from `sklearn` - the following options are available for `multi_target_strategy`:

-

-* `one-vs-rest`: uses a `OneVsRestClassifier` head.

-* `multi-output`: uses a `MultiOutputClassifier` head.

-* `classifier-chain`: uses a `ClassifierChain` head.

-

-From here, you can instantiate a `SetFitTrainer` using the same example above, and train it as usual.

-

-#### Example using the differentiable `SetFitHead`:

-

-```python

-from setfit import SetFitModel

-

-model = SetFitModel.from_pretrained(

- model_id,

- multi_target_strategy="one-vs-rest"

- use_differentiable_head=True,

- head_params={"out_features": num_classes},

-)

-```

-**Note:** If you use the differentiable `SetFitHead` classifier head, it will automatically use `BCEWithLogitsLoss` for training. The prediction involves a `sigmoid` after which probabilities are rounded to 1 or 0. Furthermore, the `"one-vs-rest"` and `"multi-output"` multi-target strategies are equivalent for the differentiable `SetFitHead`.

-

-### Zero-shot text classification

-

-SetFit can also be applied to scenarios where no labels are available. To do so, create a synthetic dataset of training examples:

-

-```python

-from datasets import Dataset

-from setfit import get_templated_dataset

-

-candidate_labels = ["negative", "positive"]

-train_dataset = get_templated_dataset(candidate_labels=candidate_labels, sample_size=8)

-```

-

-This will create examples of the form `"This sentence is {}"`, where the `{}` is filled in with one of the candidate labels. From here you can train a SetFit model as usual:

-

-```python

-from setfit import SetFitModel, SetFitTrainer

-

-model = SetFitModel.from_pretrained("sentence-transformers/paraphrase-mpnet-base-v2")

-trainer = SetFitTrainer(

- model=model,

- train_dataset=train_dataset

-)

-trainer.train()

-```

-

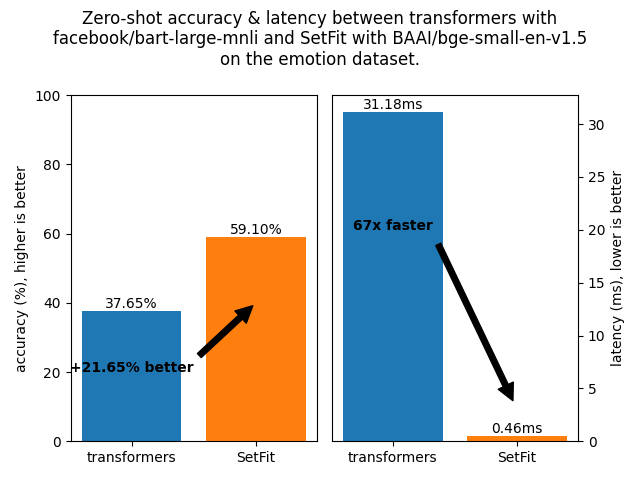

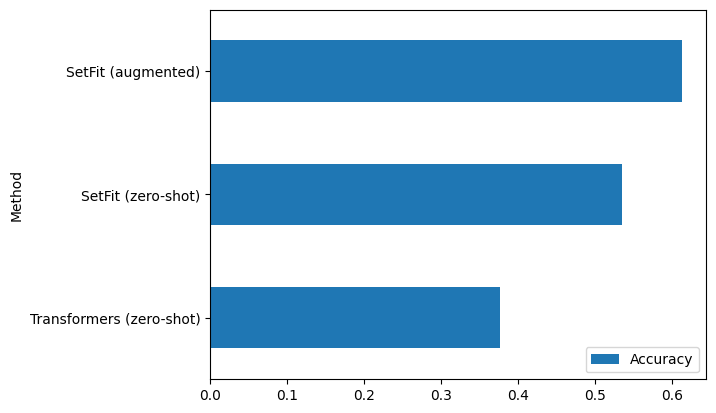

-We find this approach typically outperforms the [zero-shot pipeline](https://huggingface.co/docs/transformers/v4.24.0/en/main_classes/pipelines#transformers.ZeroShotClassificationPipeline) in 🤗 Transformers (based on MNLI with Bart), while being 5x faster to generate predictions with.

-

-

-### Running hyperparameter search

-

-`SetFitTrainer` provides a `hyperparameter_search()` method that you can use to find good hyperparameters for your data. To use this feature, first install the `optuna` backend:

-

-```bash

-python -m pip install setfit[optuna]

-```

-

-To use this method, you need to define two functions:

-

-* `model_init()`: A function that instantiates the model to be used. If provided, each call to `train()` will start from a new instance of the model as given by this function.

-* `hp_space()`: A function that defines the hyperparameter search space.

-

-Here is an example of a `model_init()` function that we'll use to scan over the hyperparameters associated with the classification head in `SetFitModel`:

-

-```python

-from setfit import SetFitModel

-

-def model_init(params):

- params = params or {}

- max_iter = params.get("max_iter", 100)

- solver = params.get("solver", "liblinear")

- params = {

- "head_params": {

- "max_iter": max_iter,

- "solver": solver,

- }

- }

- return SetFitModel.from_pretrained("sentence-transformers/paraphrase-albert-small-v2", **params)

-```

-

-Similarly, to scan over hyperparameters associated with the SetFit training process, we can define a `hp_space()` function as follows:

-

-```python

-def hp_space(trial): # Training parameters

- return {

- "learning_rate": trial.suggest_float("learning_rate", 1e-6, 1e-4, log=True),

- "num_epochs": trial.suggest_int("num_epochs", 1, 5),

- "batch_size": trial.suggest_categorical("batch_size", [4, 8, 16, 32, 64]),

- "seed": trial.suggest_int("seed", 1, 40),

- "num_iterations": trial.suggest_categorical("num_iterations", [5, 10, 20]),

- "max_iter": trial.suggest_int("max_iter", 50, 300),

- "solver": trial.suggest_categorical("solver", ["newton-cg", "lbfgs", "liblinear"]),

- }

-```

-

-**Note:** In practice, we found `num_iterations` to be the most important hyperparameter for the contrastive learning process.

-

-The next step is to instantiate a `SetFitTrainer` and call `hyperparameter_search()`:

-

-```python

-from datasets import Dataset

-from setfit import SetFitTrainer

-

-dataset = Dataset.from_dict(

- {"text_new": ["a", "b", "c"], "label_new": [0, 1, 2], "extra_column": ["d", "e", "f"]}

- )

-

-trainer = SetFitTrainer(

- train_dataset=dataset,

- eval_dataset=dataset,

- model_init=model_init,

- column_mapping={"text_new": "text", "label_new": "label"},

-)

-best_run = trainer.hyperparameter_search(direction="maximize", hp_space=hp_space, n_trials=20)

-```

-

-Finally, you can apply the hyperparameters you found to the trainer, and lock in the optimal model, before training for

-a final time.

-

-```python

-trainer.apply_hyperparameters(best_run.hyperparameters, final_model=True)

-trainer.train()

-```

-

-## Compressing a SetFit model with knowledge distillation

-

-If you have access to unlabeled data, you can use knowledge distillation to compress a trained SetFit model into a smaller version. The result is a model that can run inference much faster, with little to no drop in accuracy. Here's an end-to-end example (see our paper for more details):

-

-```python

-from datasets import load_dataset

-from sentence_transformers.losses import CosineSimilarityLoss

-

-from setfit import SetFitModel, SetFitTrainer, DistillationSetFitTrainer, sample_dataset

-

-# Load a dataset from the Hugging Face Hub

-dataset = load_dataset("ag_news")

-

-# Create a sample few-shot dataset to train the teacher model

-train_dataset_teacher = sample_dataset(dataset["train"], label_column="label", num_samples=16)

-# Create a dataset of unlabeled examples to train the student

-train_dataset_student = dataset["train"].shuffle(seed=0).select(range(500))

-# Dataset for evaluation

-eval_dataset = dataset["test"]

-

-# Load teacher model

-teacher_model = SetFitModel.from_pretrained(

- "sentence-transformers/paraphrase-mpnet-base-v2"

-)

-

-# Create trainer for teacher model

-teacher_trainer = SetFitTrainer(

- model=teacher_model,

- train_dataset=train_dataset_teacher,

- eval_dataset=eval_dataset,

- loss_class=CosineSimilarityLoss,

-)

-

-# Train teacher model

-teacher_trainer.train()

-

-# Load small student model

-student_model = SetFitModel.from_pretrained("paraphrase-MiniLM-L3-v2")

-

-# Create trainer for knowledge distillation

-student_trainer = DistillationSetFitTrainer(

- teacher_model=teacher_model,

- train_dataset=train_dataset_student,

- student_model=student_model,

- eval_dataset=eval_dataset,

- loss_class=CosineSimilarityLoss,

- metric="accuracy",

- batch_size=16,

- num_iterations=20,

- num_epochs=1,

-)

-

-# Train student with knowledge distillation

-student_trainer.train()

+preds = model.predict(["i loved the spiderman movie!", "pineapple on pizza is the worst 🤮"])

+print(preds)

+# tensor([1, 0], dtype=torch.int32)

```

## Reproducing the results from the paper

-We provide scripts to reproduce the results for SetFit and various baselines presented in Table 2 of our paper. Check out the setup and training instructions in the `scripts/` directory.

+We provide scripts to reproduce the results for SetFit and various baselines presented in Table 2 of our paper. Check out the setup and training instructions in the [`scripts/`](scripts/) directory.

## Developer installation

@@ -366,10 +113,10 @@ conda create -n setfit python=3.9 && conda activate setfit

Then install the base requirements with:

```bash

-python -m pip install -e '.[dev]'

+pip install -e '.[dev]'

```

-This will install `datasets` and packages like `black` and `isort` that we use to ensure consistent code formatting.

+This will install mandatory packages for SetFit like `datasets` as well as development packages like `black` and `isort` that we use to ensure consistent code formatting.

### Formatting your code

@@ -379,14 +126,13 @@ We use `black` and `isort` to ensure consistent code formatting. After following

make style && make quality

```

-

-

## Project structure

```

├── LICENSE

├── Makefile <- Makefile with commands like `make style` or `make tests`

├── README.md <- The top-level README for developers using this project.

+├── docs <- Documentation source

├── notebooks <- Jupyter notebooks.

├── final_results <- Model predictions from the paper

├── scripts <- Scripts for training and inference

@@ -398,12 +144,14 @@ make style && make quality

## Related work

+* [https://github.com/pmbaumgartner/setfit](https://github.com/pmbaumgartner/setfit) - A scikit-learn API version of SetFit.

* [jxpress/setfit-pytorch-lightning](https://github.com/jxpress/setfit-pytorch-lightning) - A PyTorch Lightning implementation of SetFit.

* [davidberenstein1957/spacy-setfit](https://github.com/davidberenstein1957/spacy-setfit) - An easy and intuitive approach to use SetFit in combination with spaCy.

## Citation

-```@misc{https://doi.org/10.48550/arxiv.2209.11055,

+```bibtex

+@misc{https://doi.org/10.48550/arxiv.2209.11055,

doi = {10.48550/ARXIV.2209.11055},

url = {https://arxiv.org/abs/2209.11055},

author = {Tunstall, Lewis and Reimers, Nils and Jo, Unso Eun Seo and Bates, Luke and Korat, Daniel and Wasserblat, Moshe and Pereg, Oren},

@@ -411,5 +159,6 @@ make style && make quality

title = {Efficient Few-Shot Learning Without Prompts},

publisher = {arXiv},

year = {2022},

- copyright = {Creative Commons Attribution 4.0 International}}

+ copyright = {Creative Commons Attribution 4.0 International}

+}

```

diff --git a/docs/README.md b/docs/README.md

index befedf88..0178011d 100644

--- a/docs/README.md

+++ b/docs/README.md

@@ -5,7 +5,7 @@ Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

- http://www.apache.org/licenses/LICENSE-2.0

+ https://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

@@ -78,7 +78,7 @@ The `preview` command only works with existing doc files. When you add a complet

Accepted files are Markdown (.md or .mdx).

Create a file with its extension and put it in the source directory. You can then link it to the toc-tree by putting

-the filename without the extension in the [`_toctree.yml`](https://github.com/huggingface/setfit/blob/main/docs/source/_toctree.yml) file.

+the filename without the extension in the [`_toctree.yml`](https://github.com/huggingface/setfit/blob/main/docs/source/en/_toctree.yml) file.

## Renaming section headers and moving sections

@@ -103,7 +103,7 @@ Sections that were moved:

Use the relative style to link to the new file so that the versioned docs continue to work.

-For an example of a rich moved section set please see the very end of [the Trainer doc](https://github.com/huggingface/transformers/blob/main/docs/source/en/main_classes/trainer.mdx).

+For an example of a rich moved section set please see the very end of [the Trainer doc](https://github.com/huggingface/transformers/blob/main/docs/source/en/main_classes/trainer.md).

## Writing Documentation - Specification

@@ -123,34 +123,10 @@ Make sure to put your new file under the proper section. It's unlikely to go in

depending on the intended targets (beginners, more advanced users, or researchers) it should go in sections two, three, or

four.

-### Translating

-When translating, refer to the guide at [./TRANSLATING.md](https://github.com/huggingface/setfit/blob/main/docs/TRANSLATING.md).

+### Autodoc

-

-### Adding a new model

-

-When adding a new model:

-

-- Create a file `xxx.mdx` or under `./source/model_doc` (don't hesitate to copy an existing file as template).

-- Link that file in `./source/_toctree.yml`.

-- Write a short overview of the model:

- - Overview with paper & authors

- - Paper abstract

- - Tips and tricks and how to use it best

-- Add the classes that should be linked in the model. This generally includes the configuration, the tokenizer, and

- every model of that class (the base model, alongside models with additional heads), both in PyTorch and TensorFlow.

- The order is generally:

- - Configuration,

- - Tokenizer

- - PyTorch base model

- - PyTorch head models

- - TensorFlow base model

- - TensorFlow head models

- - Flax base model

- - Flax head models

-

-These classes should be added using our Markdown syntax. Usually as follows:

+The following are some examples of `[[autodoc]]` for documentation building.

```

## XXXConfig

diff --git a/docs/source/_config.py b/docs/source/_config.py

new file mode 100644

index 00000000..2f4f5c51

--- /dev/null

+++ b/docs/source/_config.py

@@ -0,0 +1,9 @@

+# docstyle-ignore

+INSTALL_CONTENT = """

+# SetFit installation

+! pip install setfit

+# To install from source instead of the last release, comment the command above and uncomment the following one.

+# ! pip install git+https://github.com/huggingface/setfit.git

+"""

+

+notebook_first_cells = [{"type": "code", "content": INSTALL_CONTENT}]

\ No newline at end of file

diff --git a/docs/source/en/_toctree.yml b/docs/source/en/_toctree.yml

index bea05d0b..24123729 100644

--- a/docs/source/en/_toctree.yml

+++ b/docs/source/en/_toctree.yml

@@ -6,21 +6,53 @@

- local: installation

title: Installation

title: Get started

+

- sections:

- - local: tutorials/placeholder

- title: Placeholder

+ - local: tutorials/overview

+ title: Overview

+ - local: tutorials/zero_shot

+ title: Zero-shot Text Classification

+ - local: tutorials/onnx

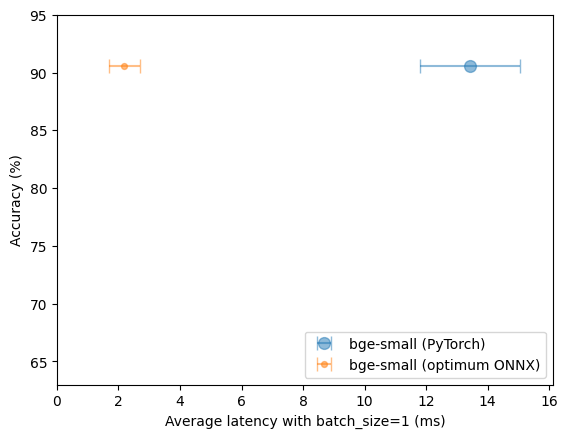

+ title: Efficiently run SetFit with ONNX

title: Tutorials

+

- sections:

- - local: how_to/placeholder

- title: Placeholder

+ - local: how_to/overview

+ title: Overview

+ - local: how_to/callbacks

+ title: Callbacks

+ - local: how_to/model_cards

+ title: Model Cards

+ - local: how_to/classification_heads

+ title: Classification Heads

+ - local: how_to/multilabel

+ title: Multilabel Text Classification

+ - local: how_to/zero_shot

+ title: Zero-shot Text Classification

+ - local: how_to/hyperparameter_optimization

+ title: Hyperparameter Optimization

+ - local: how_to/knowledge_distillation

+ title: Knowledge Distillation

+ - local: how_to/batch_sizes

+ title: Batch Sizes for Inference

+ - local: how_to/absa

+ title: Aspect Based Sentiment Analysis

+ - local: how_to/v1.0.0_migration_guide

+ title: v1.0.0 Migration Guide

title: How-to Guides

+

- sections:

- - local: conceptual_guides/placeholder

- title: Placeholder

+ - local: conceptual_guides/setfit

+ title: SetFit

+ - local: conceptual_guides/sampling_strategies

+ title: Sampling Strategies

title: Conceptual Guides

+

- sections:

- - local: api/main

+ - local: reference/main

title: Main classes

- - local: api/trainer

+ - local: reference/trainer

title: Trainer classes

- title: API

\ No newline at end of file

+ - local: reference/utility

+ title: Utility

+ title: Reference

\ No newline at end of file

diff --git a/docs/source/en/api/main.mdx b/docs/source/en/api/main.mdx

deleted file mode 100644

index ac2b77e4..00000000

--- a/docs/source/en/api/main.mdx

+++ /dev/null

@@ -1,8 +0,0 @@

-

-# SetFitModel

-

-[[autodoc]] SetFitModel

-

-# SetFitHead

-

-[[autodoc]] SetFitHead

diff --git a/docs/source/en/api/trainer.mdx b/docs/source/en/api/trainer.mdx

deleted file mode 100644

index a51df833..00000000

--- a/docs/source/en/api/trainer.mdx

+++ /dev/null

@@ -1,8 +0,0 @@

-

-# SetFitTrainer

-

-[[autodoc]] SetFitTrainer

-

-# DistillationSetFitTrainer

-

-[[autodoc]] DistillationSetFitTrainer

\ No newline at end of file

diff --git a/docs/source/en/conceptual_guides/placeholder.mdx b/docs/source/en/conceptual_guides/placeholder.mdx

deleted file mode 100644

index b79fc271..00000000

--- a/docs/source/en/conceptual_guides/placeholder.mdx

+++ /dev/null

@@ -1,3 +0,0 @@

-

-# Conceptual Guides

-Work in Progress!

\ No newline at end of file

diff --git a/docs/source/en/conceptual_guides/sampling_strategies.mdx b/docs/source/en/conceptual_guides/sampling_strategies.mdx

new file mode 100644

index 00000000..e076138f

--- /dev/null

+++ b/docs/source/en/conceptual_guides/sampling_strategies.mdx

@@ -0,0 +1,87 @@

+

+# SetFit Sampling Strategies

+

+SetFit supports various contrastive pair sampling strategies in [`TrainingArguments`]. In this conceptual guide, we will learn about the following four sampling strategies:

+

+1. `"oversampling"` (the default)

+2. `"undersampling"`

+3. `"unique"`

+4. `"num_iterations"`

+

+Consider first reading the [SetFit conceptual guide](../setfit) for a background on contrastive learning and positive & negative pairs.

+

+## Running example

+

+Throughout this conceptual guide, we will use to the following example scenario:

+

+* 3 classes: "happy", "content", and "sad".

+* 20 total samples: 8 "happy", 4 "content", and 8 "sad" samples.

+

+Considering that a sentence pair of `(X, Y)` and `(Y, X)` result in the same embedding distance/loss, we only want to consider one of those two cases. Furthermore, we don't want pairs where both sentences are the same, e.g. no `(X, X)`.

+

+The resulting positive and negative pairs can be visualized in a table like below. The `+` and `-` represent positive and negative pairs, respectively. Furthermore, `h-n` represents the n-th "happy" sentence, `c-n` the n-th "content" sentence, and `s-n` the n-th "sad" sentence. Note that the area below the diagonal is not used as `(X, Y)` and `(Y, X)` result in the same embedding distances, and that the diagonal is not used as we are not interested in pairs where both sentences are identical.

+

+| |h-1|h-2|h-3|h-4|h-5|h-6|h-7|h-8|c-1|c-2|c-3|c-4|s-1|s-2|s-3|s-4|s-5|s-6|s-7|s-8|

+|-------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

+|**h-1**| | + | + | + | + | + | + | + | - | - | - | - | - | - | - | - | - | - | - | - |

+|**h-2**| | | + | + | + | + | + | + | - | - | - | - | - | - | - | - | - | - | - | - |

+|**h-3**| | | | + | + | + | + | + | - | - | - | - | - | - | - | - | - | - | - | - |

+|**h-4**| | | | | + | + | + | + | - | - | - | - | - | - | - | - | - | - | - | - |

+|**h-5**| | | | | | + | + | + | - | - | - | - | - | - | - | - | - | - | - | - |

+|**h-6**| | | | | | | + | + | - | - | - | - | - | - | - | - | - | - | - | - |

+|**h-7**| | | | | | | | + | - | - | - | - | - | - | - | - | - | - | - | - |

+|**h-8**| | | | | | | | | - | - | - | - | - | - | - | - | - | - | - | - |

+|**c-1**| | | | | | | | | | + | + | + | - | - | - | - | - | - | - | - |

+|**c-2**| | | | | | | | | | | + | + | - | - | - | - | - | - | - | - |

+|**c-3**| | | | | | | | | | | | + | - | - | - | - | - | - | - | - |

+|**c-4**| | | | | | | | | | | | | - | - | - | - | - | - | - | - |

+|**s-1**| | | | | | | | | | | | | | + | + | + | + | + | + | + |

+|**s-2**| | | | | | | | | | | | | | | + | + | + | + | + | + |

+|**s-3**| | | | | | | | | | | | | | | | + | + | + | + | + |

+|**s-4**| | | | | | | | | | | | | | | | | + | + | + | + |

+|**s-5**| | | | | | | | | | | | | | | | | | + | + | + |

+|**s-6**| | | | | | | | | | | | | | | | | | | + | + |

+|**s-7**| | | | | | | | | | | | | | | | | | | | + |

+|**s-8**| | | | | | | | | | | | | | | | | | | | |

+

+As shown in the prior table, we have 28 positive pairs for "happy", 6 positive pairs for "content", and another 28 positive pairs for "sad". In total, this is 62 positive pairs. Also, we have 32 negative pairs between "happy" and "content", 64 negative pairs between "happy" and "sad", and 32 negative pairs between "content" and "sad". In total, this is 128 negative pairs.

+

+## Oversampling

+

+By default, SetFit applies the oversampling strategy for its contrastive pairs. This strategy samples an equal amount of positive and negative training pairs, oversampling the minority pair type to match that of the majority pair type. As the number of negative pairs is generally larger than the number of positive pairs, this usually involves oversampling the positive pairs.

+

+In our running example, this would involve oversampling the 62 positive pairs up to 128, resulting in one epoch of 128 + 128 = 256 pairs. In summary:

+

+* ✅ An equal amount of positive and negative pairs are sampled.

+* ✅ Every possible pair is used.

+* ❌ There is some data duplication.

+

+## Undersampling

+

+Like oversampling, this strategy samples an equal amount of positive and negative training pairs. However, it undersamples the majority pair type to match that of the minority pair type. This usually involves undersampling the negative pairs to match the positive pairs.

+

+In our running example, this would involve undersampling the 128 negative pairs down to 62, resulting in one epoch of 62 + 62 = 124 pairs. In summary:

+

+* ✅ An equal amount of positive and negative pairs are sampled.

+* ❌ **Not** every possible pair is used.

+* ✅ There is **no** data duplication.

+

+## Unique

+

+Thirdly, the unique strategy does not sample an equal amount of positive and negative training pairs. Instead, it simply samples all possible pairs exactly once. No form of oversampling or undersampling is used here.

+

+In our running example, this would involve sampling all negative and positive pairs, resulting in one epoch of 62 + 128 = 190 pairs. In summary:

+

+* ❌ **Not** an equal amount of positive and negative pairs are sampled.

+* ✅ Every possible pair is used.

+* ✅ There is **no** data duplication.

+

+## `num_iterations`

+

+Lastly, SetFit can still be used with a deprecated sampling strategy involving the `num_iterations` training argument. Unlike the other sampling strategies, this strategy does not involve the number of possible pairs. Instead, it samples `num_iterations` positive pairs and `num_iterations` negative pairs for each training sample.

+

+In our running example, if we assume `num_iterations=20`, then we would sample 20 positive pairs and 20 negative pairs per training sample. Because there's 20 samples, this involves (20 + 20) * 20 = 800 pairs. Because there are only 190 unique pairs, this certainly involves some data duplication. However, it does not guarantee that every possible pair is used. In summary:

+

+* ✅ **Not** an equal amount of positive and negative pairs are sampled.

+* ❌ Not necessarily every possible pair is used.

+* ❌ There is some data duplication.

\ No newline at end of file

diff --git a/docs/source/en/conceptual_guides/setfit.mdx b/docs/source/en/conceptual_guides/setfit.mdx

new file mode 100644

index 00000000..b4f158f7

--- /dev/null

+++ b/docs/source/en/conceptual_guides/setfit.mdx

@@ -0,0 +1,28 @@

+

+# Sentence Transformers Finetuning (SetFit)

+

+SetFit is a model framework to efficiently train text classification models with surprisingly little training data. For example, with only 8 labeled examples per class on the Customer Reviews (CR) sentiment dataset, SetFit is competitive with fine-tuning RoBERTa Large on the full training set of 3k examples. Furthermore, SetFit is fast to train and run inference with, and can easily support multilingual tasks.

+

+Every SetFit model consists of two parts: a **sentence transformer** embedding model (the body) and a **classifier** (the head). These two parts are trained in two separate phases: the **embedding finetuning phase** and the **classifier training phase**. This conceptual guide will elaborate on the intuition between these phases, and why SetFit works so well.

+

+## Embedding finetuning phase

+

+The first phase has one primary goal: finetune a sentence transformer embedding model to produce useful embeddings for *our* classification task. The [Hugging Face Hub](https://huggingface.co/models?library=sentence-transformers) already has thousands of sentence transformer available, many of which have been trained to very accurately group the embeddings of texts with similar semantic meaning.

+

+However, models that are good at Semantic Textual Similarity (STS) are not necessarily immediately good at *our* classification task. For example, according to an embedding model, the sentence of 1) `"He biked to work."` will be much more similar to 2) `"He drove his car to work."` than to 3) `"Peter decided to take the bicycle to the beach party!"`. But if our classification task involves classifying texts into transportation modes, then we want our embedding model to place sentences 1 and 3 closely together, and 2 further away.

+

+To do so, we can finetune the chosen sentence transformer embedding model. The goal here is to nudge the model to use its pretrained knowledge in a different way that better aligns with our classification task, rather than making the completely forget what it has learned.

+

+For finetuning, SetFit uses **contrastive learning**. This training approach involves creating **positive and negative pairs** of sentences. A sentence pair will be positive if both of the sentences are of the same class, and negative otherwise. For example, in the case of binary "positive"-"negative" sentiment analysis, `("The movie was awesome", "I loved it")` is a positive pair, and `("The movie was awesome", "It was quite disappointing")` is a negative pair.

+

+During training, the embedding model receives these pairs, and will convert the sentences to embeddings. If the pair is positive, then it will pull on the model weights such that the text embeddings will be more similar, and vice versa for a negative pair. Through this approach, sentences with the same label will be embedded more similarly, and sentences with different labels less similarly.

+

+Conveniently, this contrastive learning works with pairs rather than individual samples, and we can create plenty of unique pairs from just a few samples. For example, given 8 positive sentences and 8 negative sentences, we can create 28 positive pairs and 64 negative pairs for 92 unique training pairs. This grows exponentially to the number of sentences and classes, and that is why SetFit can train with just a few examples and still correctly finetune the sentence transformer embedding model. However, we should still be wary of overfitting.

+

+## Classifier training phase

+

+Once the sentence transformer embedding model has been finetuned for our task at hand, we can start training the classifier. This phase has one primary goal: create a good mapping from the sentence transformer embeddings to the classes.

+

+Unlike with the first phase, training the classifier is done from scratch and using the labeled samples directly, rather than using pairs. By default, the classifier is a simple **logistic regression** classifier from scikit-learn. First, all training sentences are fed through the now-finetuned sentence transformer embedding model, and then the sentence embeddings and labels are used to fit the logistic regression classifier. The result is a strong and efficient classifier.

+

+Using these two parts, SetFit models are efficient, performant and easy to train, even on CPU-only devices.

\ No newline at end of file

diff --git a/docs/source/en/how_to/absa.mdx b/docs/source/en/how_to/absa.mdx

new file mode 100644

index 00000000..68992827

--- /dev/null

+++ b/docs/source/en/how_to/absa.mdx

@@ -0,0 +1,230 @@

+

+# SetFit for Aspect Based Sentiment Analysis

+

+SetFitABSA is an efficient framework for few-shot Aspect Based Sentiment Analysis, achieving competitive performance with little training data. It consists of three phases:

+

+1. Using spaCy to find potential aspect candidates.

+2. Using a SetFit model for filtering these aspect candidates.

+3. Using a SetFit model for classifying the filtered aspect candidates.

+

+This guide will show you how to train, predict, save and load these models.

+

+## Getting Started

+

+First of all, SetFitABSA also requires spaCy to be installed, so we must install it:

+

+```

+!pip install "setfit[absa]"

+# or

+# !pip install spacy

+```

+

+Then, we must download the spaCy model that we intend on using. By default, SetFitABSA uses `en_core_web_lg`, but `en_core_web_sm` and `en_core_web_md` are also good options.

+

+```

+!spacy download en_core_web_lg

+!spacy download en_core_web_sm

+```

+

+## Training SetFitABSA

+

+First of all, we must instantiate a new [`AbsaModel`] via [`AbsaModel.from_pretrained`]. This can be done by providing configuration for each of the three phases for SetFitABSA:

+

+1. Provide the name or path of a Sentence Transformer model to be used for the **aspect filtering** SetFit model as the first argument.

+2. (Optional) Provide the name or path of a Sentence Transformer model to be used for the **polarity classification** SetFit model as the second argument. If not provided, the same Sentence Transformer model as the aspect filtering model is also used for the polarity classification model.

+3. (Optional) Provide the spaCy model to use via the `spacy_model` keyword argument.

+

+For example:

+

+```py

+from setfit import AbsaModel

+

+model = AbsaModel.from_pretrained(

+ "sentence-transformers/all-MiniLM-L6-v2",

+ "sentence-transformers/all-mpnet-base-v2",

+ spacy_model="en_core_web_sm",

+)

+```

+

+Or a minimal example:

+

+```py

+from setfit import AbsaModel

+

+model = AbsaModel.from_pretrained("BAAI/bge-small-en-v1.5")

+```

+

+Then we have to prepare a training/testing set. These datasets must have `"text"`, `"span"`, `"label"`, and `"ordinal"` columns:

+

+* `"text"`: The full sentence or text containing the aspects. For example: `"But the staff was so horrible to us."`.

+* `"span"`: An aspect from the full sentence. Can be multiple words. For example: `"staff"`.

+* `"label"`: The (polarity) label corresponding to the aspect span. For example: `"negative"`.

+* `"ordinal"`: If the aspect span occurs multiple times in the text, then this ordinal represents the index of those occurrences. Often this is just 0. For example: `0`.

+

+Two datasets that already match this format are these datasets of reviews from the SemEval-2014 Task 4:

+

+* [tomaarsen/setfit-absa-semeval-restaurants](https://huggingface.co/datasets/tomaarsen/setfit-absa-semeval-restaurants)

+* [tomaarsen/setfit-absa-semeval-laptops](https://huggingface.co/datasets/tomaarsen/setfit-absa-semeval-laptops)

+

+```py

+# The training/eval dataset must have `text`, `span`, `label`, and `ordinal` columns

+dataset = load_dataset("tomaarsen/setfit-absa-semeval-restaurants", split="train")

+train_dataset = dataset.select(range(128))

+eval_dataset = dataset.select(range(128, 256))

+```

+

+We can commence training like with normal SetFit, but now using [`AbsaTrainer`] instead.

+

+

+

+If you wish, you can specify separate training arguments for the aspect model as the polarity model by using both the `args` and `polarity_args` keyword arguments.

+

+

+

+```py

+args = TrainingArguments(

+ output_dir="models",

+ num_epochs=5,

+ use_amp=True,

+ batch_size=128,

+ evaluation_strategy="steps",

+ eval_steps=50,

+ save_steps=50,

+ load_best_model_at_end=True,

+)

+

+trainer = AbsaTrainer(

+ model,

+ args=args,

+ train_dataset=train_dataset,

+ eval_dataset=eval_dataset,

+ callbacks=[EarlyStoppingCallback(early_stopping_patience=5)],

+)

+trainer.train()

+```

+```

+***** Running training *****

+ Num examples = 249

+ Num epochs = 5

+ Total optimization steps = 1245

+ Total train batch size = 128

+{'aspect_embedding_loss': 0.2542, 'learning_rate': 1.6e-07, 'epoch': 0.0}

+{'aspect_embedding_loss': 0.2437, 'learning_rate': 8.000000000000001e-06, 'epoch': 0.2}

+{'eval_aspect_embedding_loss': 0.2511, 'learning_rate': 8.000000000000001e-06, 'epoch': 0.2}

+{'aspect_embedding_loss': 0.2209, 'learning_rate': 1.6000000000000003e-05, 'epoch': 0.4}

+{'eval_aspect_embedding_loss': 0.2385, 'learning_rate': 1.6000000000000003e-05, 'epoch': 0.4}

+{'aspect_embedding_loss': 0.0165, 'learning_rate': 1.955357142857143e-05, 'epoch': 0.6}

+{'eval_aspect_embedding_loss': 0.2776, 'learning_rate': 1.955357142857143e-05, 'epoch': 0.6}

+{'aspect_embedding_loss': 0.0158, 'learning_rate': 1.8660714285714287e-05, 'epoch': 0.8}

+{'eval_aspect_embedding_loss': 0.2848, 'learning_rate': 1.8660714285714287e-05, 'epoch': 0.8}

+{'aspect_embedding_loss': 0.0015, 'learning_rate': 1.7767857142857143e-05, 'epoch': 1.0}

+{'eval_aspect_embedding_loss': 0.3133, 'learning_rate': 1.7767857142857143e-05, 'epoch': 1.0}

+{'aspect_embedding_loss': 0.0012, 'learning_rate': 1.6875e-05, 'epoch': 1.2}

+{'eval_aspect_embedding_loss': 0.2966, 'learning_rate': 1.6875e-05, 'epoch': 1.2}

+{'aspect_embedding_loss': 0.0009, 'learning_rate': 1.598214285714286e-05, 'epoch': 1.41}

+{'eval_aspect_embedding_loss': 0.2996, 'learning_rate': 1.598214285714286e-05, 'epoch': 1.41}

+ 28%|██████████████████████████████████▎ | 350/1245 [03:40<09:24, 1.59it/s]

+Loading best SentenceTransformer model from step 100.

+{'train_runtime': 226.7429, 'train_samples_per_second': 702.822, 'train_steps_per_second': 5.491, 'epoch': 1.41}

+***** Running training *****

+ Num examples = 39

+ Num epochs = 5

+ Total optimization steps = 195

+ Total train batch size = 128

+{'polarity_embedding_loss': 0.2267, 'learning_rate': 1.0000000000000002e-06, 'epoch': 0.03}

+{'polarity_embedding_loss': 0.1038, 'learning_rate': 1.6571428571428574e-05, 'epoch': 1.28}

+{'eval_polarity_embedding_loss': 0.1946, 'learning_rate': 1.6571428571428574e-05, 'epoch': 1.28}

+{'polarity_embedding_loss': 0.0116, 'learning_rate': 1.0857142857142858e-05, 'epoch': 2.56}

+{'eval_polarity_embedding_loss': 0.2364, 'learning_rate': 1.0857142857142858e-05, 'epoch': 2.56}

+{'polarity_embedding_loss': 0.0059, 'learning_rate': 5.142857142857142e-06, 'epoch': 3.85}

+{'eval_polarity_embedding_loss': 0.2401, 'learning_rate': 5.142857142857142e-06, 'epoch': 3.85}

+100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 195/195 [00:54<00:00, 3.58it/s]

+Loading best SentenceTransformer model from step 50.

+{'train_runtime': 54.4104, 'train_samples_per_second': 458.736, 'train_steps_per_second': 3.584, 'epoch': 5.0}

+```

+

+Evaluation is also like normal, although you now get results from the aspect and polarity models separately:

+

+```py

+metrics = trainer.evaluate(eval_dataset)

+print(metrics)

+```

+```

+***** Running evaluation *****

+{'aspect': {'accuracy': 0.7130649876321116}, 'polarity': {'accuracy': 0.7102310231023102}}

+```

+

+

+

+Note that the aspect accuracy refers to the accuracy of classifying aspect candidate spans from the spaCy model as a true aspect or not, and the polarity accuracy refers to the accuracy of classifying only the filtered aspect candidate spans to the correct class.

+

+

+

+## Saving a SetFitABSA model

+

+Once trained, we can use familiar [`AbsaModel.save_pretrained`] and [`AbsaTrainer.push_to_hub`]/[`AbsaModel.push_to_hub`] methods to save the model. However, unlike normally, saving an [`AbsaModel`] involves saving two separate models: the **aspect** SetFit model and the **polarity** SetFit model. Consequently, we can provide two directories or `repo_id`'s:

+

+```py

+model.save_pretrained(

+ "models/setfit-absa-model-aspect",

+ "models/setfit-absa-model-polarity",

+)

+# or

+model.push_to_hub(

+ "tomaarsen/setfit-absa-bge-small-en-v1.5-restaurants-aspect",

+ "tomaarsen/setfit-absa-bge-small-en-v1.5-restaurants-polarity",

+)

+```

+However, you can also provide just one directory or `repo_id`, and `-aspect` and `-polarity` will be automatically added. So, the following code is equivalent to the previous snippet:

+

+```py

+model.save_pretrained("models/setfit-absa-model")

+# or

+model.push_to_hub("tomaarsen/setfit-absa-bge-small-en-v1.5-restaurants")

+```

+

+## Loading a SetFitABSA model

+

+Loading a trained [`AbsaModel`] involves calling [`AbsaModel.from_pretrained`] with details for each of the three phases for SetFitABSA:

+

+1. Provide the name or path of a trained SetFit ABSA model to be used for the **aspect filtering** model as the first argument.

+2. Provide the name or path of a trained SetFit ABSA model to be used for the **polarity classification** model as the second argument.

+3. (Optional) Provide the spaCy model to use via the `spacy_model` keyword argument. It is recommended to match this with the model used during training. The default is `"en_core_web_lg"`.

+

+For example:

+

+```py

+from setfit import AbsaModel

+

+model = AbsaModel.from_pretrained(

+ "tomaarsen/setfit-absa-bge-small-en-v1.5-restaurants-aspect",

+ "tomaarsen/setfit-absa-bge-small-en-v1.5-restaurants-polarity",

+ spacy_model="en_core_web_lg",

+)

+```

+

+We've now successfully loaded the SetFitABSA model from:

+* [tomaarsen/setfit-absa-bge-small-en-v1.5-restaurants-aspect](https://huggingface.co/tomaarsen/setfit-absa-bge-small-en-v1.5-restaurants-aspect)

+* [tomaarsen/setfit-absa-bge-small-en-v1.5-restaurants-polarity](https://huggingface.co/tomaarsen/setfit-absa-bge-small-en-v1.5-restaurants-polarity)

+

+## Inference with a SetFitABSA model

+

+To perform inference with a trained [`AbsaModel`], we can use [`AbsaModel.predict`]:

+

+```py

+preds = model.predict([

+ "Best pizza outside of Italy and really tasty.",

+ "The food variations are great and the prices are absolutely fair.",

+ "Unfortunately, you have to expect some waiting time and get a note with a waiting number if it should be very full."

+])

+print(preds)

+# [

+# [{'span': 'pizza', 'polarity': 'positive'}],

+# [{'span': 'food variations', 'polarity': 'positive'}, {'span': 'prices', 'polarity': 'positive'}],

+# [{'span': 'waiting number', 'polarity': 'negative'}]

+# ]

+```

+

+## Challenge

+

+If you're up for it, then I challenge you to train and upload a SetFitABSA model for [laptop reviews](https://huggingface.co/datasets/tomaarsen/setfit-absa-semeval-laptops) based on this documentation.

diff --git a/docs/source/en/how_to/batch_sizes.mdx b/docs/source/en/how_to/batch_sizes.mdx

new file mode 100644

index 00000000..5f4d71c0

--- /dev/null

+++ b/docs/source/en/how_to/batch_sizes.mdx

@@ -0,0 +1,21 @@

+

+# Batch sizes for Inference

+In this how-to guide we will explore the effects of increasing the batch sizes in [`SetFitModel.predict`].

+

+## What are they?

+When processing on GPUs, often times not all data fits on the GPU its VRAM at once. As a result, the data gets split up into **batches** of some often pre-determined batch size. This is done both during training and during inference. In both scenarios, increasing the batch size often has notable consequences to processing efficiency and VRAM memory usage, as transferring data to and from the GPU can be relatively slow.

+

+For inference, it is often recommended to set the batch size high to get notably quicker processing speeds.

+

+## In SetFit

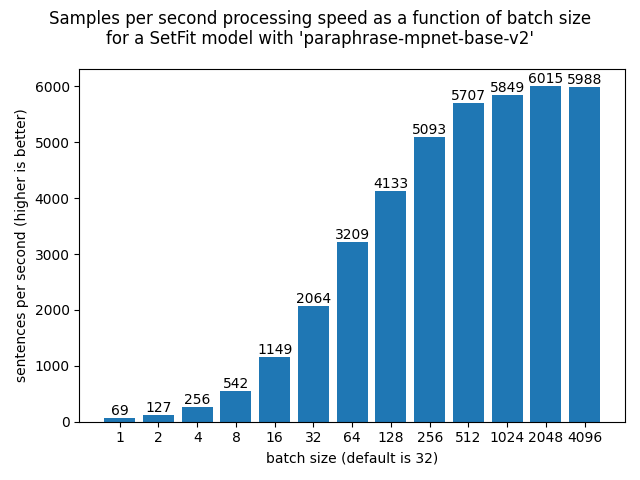

+The batch size for inference in SetFit is set to 32, but it can be affected by passing a `batch_size` argument to [`SetFitModel.predict`]. For example, on a RTX 3090 with a SetFit model based on the [paraphrase-mpnet-base-v2](https://huggingface.co/sentence-transformers/paraphrase-mpnet-base-v2) Sentence Transformer, the following throughputs are reached:

+

+

+

+

+

+Each sentence consists of 11 words in this experiment.

+

+

+

+The default batch size of 32 does not result in the highest possible throughput on this hardware. Consider experimenting with the batch size to reach your highest possible throughput.

\ No newline at end of file

diff --git a/docs/source/en/how_to/callbacks.mdx b/docs/source/en/how_to/callbacks.mdx

new file mode 100644

index 00000000..6ff557f5

--- /dev/null

+++ b/docs/source/en/how_to/callbacks.mdx

@@ -0,0 +1,104 @@

+

+# Callbacks

+SetFit models can be influenced by callbacks, for example for logging or early stopping.

+

+This guide will show you what they are and how they can be used.

+

+## Callbacks in SetFit

+

+Callbacks are objects that customize the behaviour of the training loop in the SetFit [`Trainer`] that can inspect the training loop state (for progress reporting, logging, inspecting embeddings during training) and take decisions (e.g. early stopping).

+

+In particular, the [`Trainer`] uses a [`TrainerControl`](https://huggingface.co/docs/transformers/main_classes/callback#transformers.TrainerControl) that can be influenced by callbacks to stop training, save models, evaluate, or log, and a [`TrainerState`](https://huggingface.co/docs/transformers/main_classes/callback#transformers.TrainerState) which tracks some training loop metrics during training, such as the number of training steps so far.

+

+SetFit relies on the Callbacks implemented in `transformers`, as described in the `transformers` documentation [here](https://huggingface.co/docs/transformers/main_classes/callback).

+

+## Default Callbacks

+

+SetFit uses the `TrainingArguments.report_to` argument to specify which of the built-in callbacks should be enabled. This argument defaults to `"all"`, meaning that all third-party callbacks from `transformers` that are also installed will be enabled. For example the [`TensorBoardCallback`](https://huggingface.co/docs/transformers/main_classes/callback#transformers.integrations.TensorBoardCallback) or the [`WandbCallback`](https://huggingface.co/docs/transformers/main_classes/callback#transformers.integrations.WandbCallback).

+

+Beyond that, the [`PrinterCallback`](https://huggingface.co/docs/transformers/main_classes/callback#transformers.PrinterCallback) or [`ProgressCallback`](https://huggingface.co/docs/transformers/main_classes/callback#transformers.ProgressCallback) is always enabled to show the training progress, and [`DefaultFlowCallback`](https://huggingface.co/docs/transformers/main_classes/callback#transformers.DefaultFlowCallback) is also always enabled to properly update the `TrainerControl`.

+

+## Using Callbacks

+

+As mentioned, you can use `TrainingArguments.report_to` to specify exactly which callbacks you would like to enable. For example:

+

+```py

+from setfit import TrainingArguments

+

+args = TrainingArguments(

+ ...,

+ report_to="wandb",

+ ...,

+)

+# or

+args = TrainingArguments(

+ ...,

+ report_to=["wandb", "tensorboard"],

+ ...,

+)

+```

+You can also use [`Trainer.add_callback`], [`Trainer.pop_callback`] and [`Trainer.remove_callback`] to influence the trainer callbacks, and you can specify callbacks via the [`Trainer`] init, e.g.:

+

+```py

+from setfit import Trainer

+

+...

+

+trainer = Trainer(

+ model,

+ args=args,

+ train_dataset=train_dataset,

+ eval_dataset=eval_dataset,

+ callbacks=[EarlyStoppingCallback(early_stopping_patience=5)],

+)

+trainer.train()

+```

+

+## Custom Callbacks

+

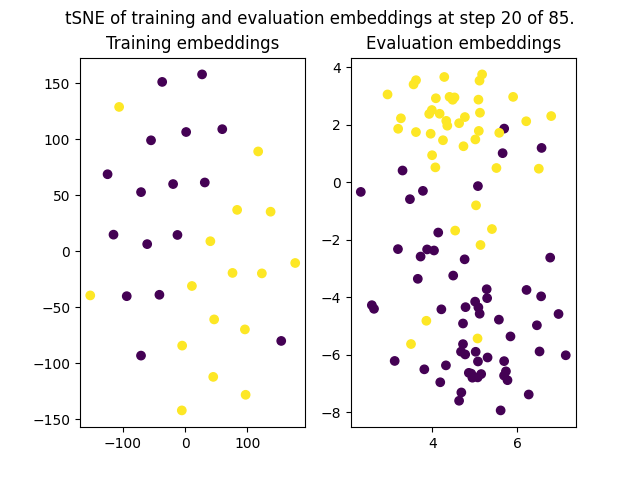

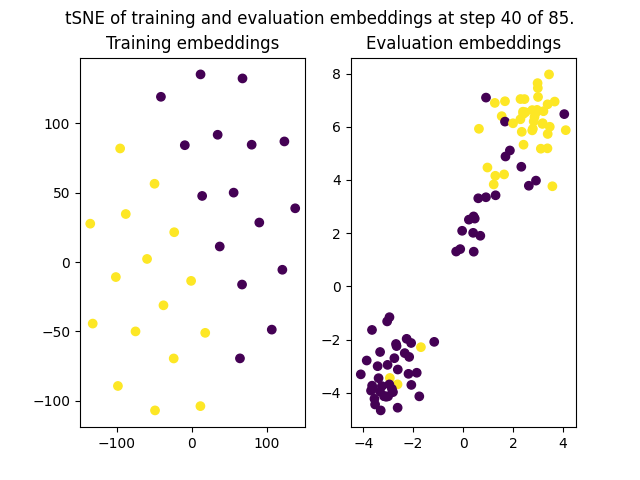

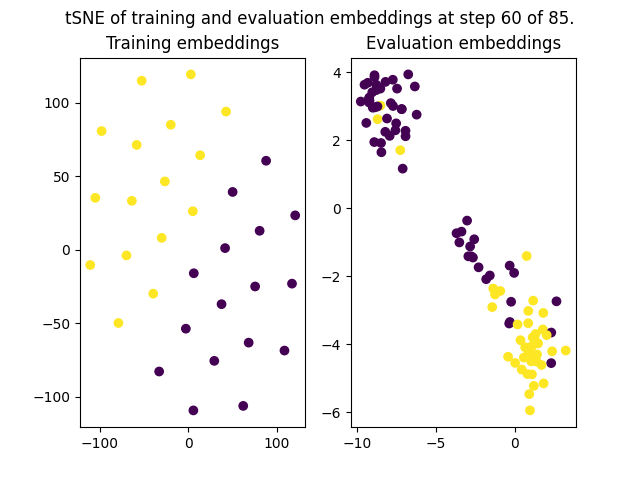

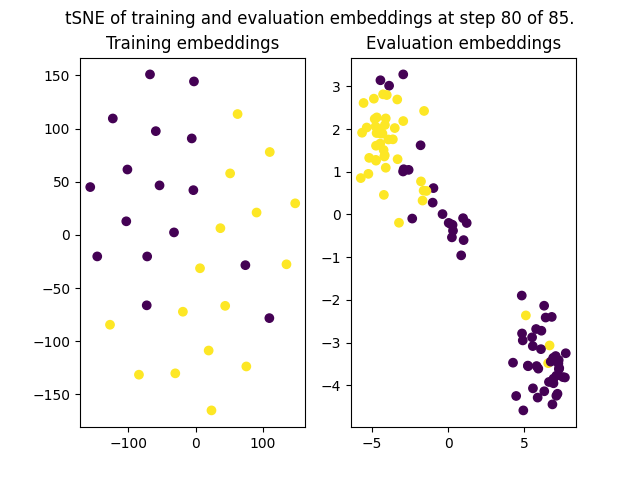

+SetFit supports custom callbacks in the same way that `transformers` does: by subclassing [`TrainerCallback`](https://huggingface.co/docs/transformers/main_classes/callback#transformers.TrainerCallback). This class implements a lot of `on_...` methods that can be overridden. For example, the following script shows a custom callback that saves plots of the tSNE of the training and evaluation embeddings during training.

+

+```py

+import matplotlib.pyplot as plt

+from sklearn.manifold import TSNE

+

+class EmbeddingPlotCallback(TrainerCallback):

+ """Simple embedding plotting callback that plots the tSNE of the training and evaluation datasets throughout training."""

+ def on_evaluate(self, args: TrainingArguments, state: TrainerState, control: TrainerControl, model: SetFitModel, **kwargs):

+ train_embeddings = model.encode(train_dataset["text"])

+ eval_embeddings = model.encode(eval_dataset["text"])

+

+ fig, (train_ax, eval_ax) = plt.subplots(ncols=2)

+

+ train_X = TSNE(n_components=2).fit_transform(train_embeddings)

+ train_ax.scatter(*train_X.T, c=train_dataset["label"], label=train_dataset["label"])

+ train_ax.set_title("Training embeddings")

+

+ eval_X = TSNE(n_components=2).fit_transform(eval_embeddings)

+ eval_ax.scatter(*eval_X.T, c=eval_dataset["label"], label=eval_dataset["label"])

+ eval_ax.set_title("Evaluation embeddings")

+

+ fig.suptitle(f"tSNE of training and evaluation embeddings at step {state.global_step} of {state.max_steps}.")

+ fig.savefig(f"logs/step_{state.global_step}.png")

+```

+

+with

+

+```py

+trainer = Trainer(

+ model=model,

+ args=args,

+ train_dataset=train_dataset,

+ eval_dataset=eval_dataset,

+ callbacks=[EmbeddingPlotCallback()]

+)

+trainer.train()

+```

+

+The `on_evaluate` from `EmbeddingPlotCallback` will be triggered on every single evaluation call. In the case of this example, it resulted in the following figures being plotted:

+

+| Step 20 | Step 40 |

+|-------------|-------------|

+|  |  |

+| **Step 60** | **Step 80** |

+|  |  |

\ No newline at end of file

diff --git a/docs/source/en/how_to/classification_heads.mdx b/docs/source/en/how_to/classification_heads.mdx

new file mode 100644

index 00000000..cb1ef413

--- /dev/null

+++ b/docs/source/en/how_to/classification_heads.mdx

@@ -0,0 +1,208 @@

+

+# Classification heads

+

+[[open-in-colab]]

+

+Any 🤗 SetFit model consists of two parts: a [SentenceTransformer](https://sbert.net/) embedding body and a classification head.

+

+This guide will show you:

+* The built-in logistic regression classification head

+* The built-in differentiable classification head

+* The requirements for a custom classification head

+

+## Logistic Regression classification head

+

+When a new SetFit model is initialized, a [scikit-learn logistic regression](https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LogisticRegression.html) head is chosen by default. This has been shown to be highly effective when applied on top of a finetuned sentence transformer body, and it remains the recommended classification head. Initializing a new SetFit model with a Logistic Regression head is simple:

+

+```py

+>>> from setfit import SetFitModel

+

+>>> model = SetFitModel.from_pretrained("BAAI/bge-small-en-v1.5")

+>>> model.model_head

+LogisticRegression()

+```

+

+To initialize the Logistic Regression head (or any other head) with additional parameters, then you can use the `head_params` argument on [`SetFitModel.from_pretrained`]:

+

+```py

+>>> from setfit import SetFitModel

+

+>>> model = SetFitModel.from_pretrained("BAAI/bge-small-en-v1.5", head_params={"solver": "liblinear", "max_iter": 300})

+>>> model.model_head

+LogisticRegression(max_iter=300, solver='liblinear')

+```

+

+## Differentiable classification head

+

+SetFit also provides [`SetFitHead`] as an exclusively `torch` classification head. It uses a linear layer to map the embeddings to the class. It can be used by setting the `use_differentiable_head` argument on [`SetFitModel.from_pretrained`] to `True`:

+

+```py

+>>> from setfit import SetFitModel

+

+>>> model = SetFitModel.from_pretrained("BAAI/bge-small-en-v1.5", use_differentiable_head=True)

+>>> model.model_head

+SetFitHead({'in_features': 384, 'out_features': 2, 'temperature': 1.0, 'bias': True, 'device': 'cuda'})

+```

+

+By default, this will assume binary classification. To change that, also set the `out_features` via `head_params` to the number of classes that you are using.

+

+```py

+>>> from setfit import SetFitModel

+

+>>> model = SetFitModel.from_pretrained("BAAI/bge-small-en-v1.5", use_differentiable_head=True, head_params={"out_features": 5})

+>>> model.model_head

+SetFitHead({'in_features': 384, 'out_features': 5, 'temperature': 1.0, 'bias': True, 'device': 'cuda'})

+```

+

+

+

+Unlike the default Logistic Regression head, the differentiable classification head only supports integer labels in the following range: `[0, num_classes)`.

+

+

+

+### Training with a differentiable classification head

+

+Using the [`SetFitHead`] unlocks some new [`TrainingArguments`] that are not used with a sklearn-based head. Note that training with SetFit consists of two phases behind the scenes: **finetuning embeddings** and **training a classification head**. As a result, some of the training arguments can be tuples, where the two values are used for each of the two phases, respectively. For a lot of these cases, the second value is only used if the classification head is differentiable. For example:

+

+* **batch_size**: (`Union[int, Tuple[int, int]]`, defaults to `(16, 2)`) - The second value in the tuple determines the batch size when training the differentiable SetFitHead.

+* **num_epochs**: (`Union[int, Tuple[int, int]]`, defaults to `(1, 16)`) - The second value in the tuple determines the number of epochs when training the differentiable SetFitHead. In practice, the `num_epochs` is usually larger for training the classification head. There are two reasons for this:

+

+ 1. This training phase does not train with contrastive pairs, so unlike when finetuning the embedding model, you only get one training sample per labeled training text.

+ 2. This training phase involves training a classifier from scratch, not finetuning an already capable model. We need more training steps for this.

+* **end_to_end**: (`bool`, defaults to `False`) - If `True`, train the entire model end-to-end during the classifier training phase. Otherwise, freeze the Sentence Transformer body and only train the head.

+* **body_learning_rate**: (`Union[float, Tuple[float, float]]`, defaults to `(2e-5, 1e-5)`) - The second value in the tuple determines the learning rate of the Sentence Transformer body during the classifier training phase. This is only relevant if `end_to_end` is `True`, as otherwise the Sentence Transformer body is frozen when training the classifier.

+* **head_learning_rate** (`float`, defaults to `1e-2`) - This value determines the learning rate of the differentiable head during the classifier training phase. It is only used if the differentiable head is used.

+* **l2_weight** (`float`, *optional*) - Optional l2 weight for both the model body and head, passed to the `AdamW` optimizer in the classifier training phase only if a differentiable head is used.

+

+For example, a full training script using a differentiable classification head may look something like this:

+

+```py

+from setfit import SetFitModel, Trainer, TrainingArguments, sample_dataset

+from datasets import load_dataset

+

+# Initializing a new SetFit model

+model = SetFitModel.from_pretrained("BAAI/bge-small-en-v1.5", use_differentiable_head=True, head_params={"out_features": 2})

+

+# Preparing the dataset

+dataset = load_dataset("SetFit/sst2")

+train_dataset = sample_dataset(dataset["train"], label_column="label", num_samples=32)

+test_dataset = dataset["test"]

+

+# Preparing the training arguments

+args = TrainingArguments(

+ batch_size=(32, 16),

+ num_epochs=(3, 8),

+ end_to_end=True,

+ body_learning_rate=(2e-5, 5e-6),

+ head_learning_rate=2e-3,

+ l2_weight=0.01,

+)

+

+# Preparing the trainer

+trainer = Trainer(

+ model=model,

+ args=args,

+ train_dataset=train_dataset,

+)

+trainer.train()

+# ***** Running training *****

+# Num examples = 66

+# Num epochs = 3

+# Total optimization steps = 198

+# Total train batch size = 3

+# {'embedding_loss': 0.2204, 'learning_rate': 1.0000000000000002e-06, 'epoch': 0.02}

+# {'embedding_loss': 0.0058, 'learning_rate': 1.662921348314607e-05, 'epoch': 0.76}

+# {'embedding_loss': 0.0026, 'learning_rate': 1.101123595505618e-05, 'epoch': 1.52}

+# {'embedding_loss': 0.0022, 'learning_rate': 5.393258426966292e-06, 'epoch': 2.27}

+# {'train_runtime': 36.6756, 'train_samples_per_second': 172.758, 'train_steps_per_second': 5.399, 'epoch': 3.0}

+# 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 198/198 [00:30<00:00, 6.45it/s]

+# The `max_length` is `None`. Using the maximum acceptable length according to the current model body: 512.

+# Epoch: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 8/8 [00:07<00:00, 1.03it/s]

+

+# Evaluating

+metrics = trainer.evaluate(test_dataset)

+print(metrics)

+# => {'accuracy': 0.8632619439868204}

+

+# Performing inference

+preds = model.predict([

+ "It's a charming and often affecting journey.",

+ "It's slow -- very, very slow.",

+ "A sometimes tedious film.",

+])

+print(preds)

+# => tensor([1, 0, 0], device='cuda:0')

+```

+

+## Custom classification head

+Alongside the two built-in options, SetFit allows you to specify a custom classification head. There are two forms of supported heads: a custom **differentiable** head or a custom **non-differentiable** head. Both heads must implement the following two methods:

+

+### Custom differentiable head

+A custom differentiable head must follow these requirements:

+

+* Must subclass `nn.Module`.

+* A `predict` method: `(self, torch.Tensor with shape [num_inputs, embedding_size]) -> torch.Tensor with shape [num_inputs]` - This method classifies the embeddings. The output must integers in the range of `[0, num_classes)`.

+* A `predict_proba` method: `(self, torch.Tensor with shape [num_inputs, embedding_size]) -> torch.Tensor with shape [num_inputs, num_classes]` - This method classifies the embeddings into probabilities for each class. For each input, the tensor of size `num_classes` must sum to 1. Applying `torch.argmax(output, dim=-1)` should result in the output for `predict`.

+* A `get_loss_fn` method: `(self) -> nn.Module` - Returns an initialized loss function, e.g. `torch.nn.CrossEntropyLoss()`.

+* A `forward` method: `(self, Dict[str, torch.Tensor]) -> Dict[str, torch.Tensor]` - Given the output from the Sentence Transformer body, i.e. a dictionary of `'input_ids'`, `'token_type_ids'`, `'attention_mask'`, `'token_embeddings'` and `'sentence_embedding'` keys, return a dictionary with a `'logits'` key and a `torch.Tensor` value with shape `[batch_size, num_classes]`.

+

+### Custom non-differentiable head

+A custom non-differentiable head must follow these requirements:

+

+* A `predict` method: `(self, np.array with shape [num_inputs, embedding_size]) -> np.array with shape [num_inputs]` - This method classifies the embeddings. The output must integers in the range of `[0, num_classes)`.

+* A `predict_proba` method: `(self, np.array with shape [num_inputs, embedding_size]) -> np.array with shape [num_inputs, num_classes]` - This method classifies the embeddings into probabilities for each class. For each input, the array of size `num_classes` must sum to 1. Applying `np.argmax(output, dim=-1)` should result in the output for `predict`.

+* A `fit` method: `(self, np.array with shape [num_inputs, embedding_size], List[Any]) -> None` - This method must take a `numpy` array of embeddings and a list of corresponding labels. The labels need not be integers per se.

+

+Many classifiers from sklearn already fit these requirements, such as [`RandomForestClassifier`](https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestClassifier.html#sklearn.ensemble.RandomForestClassifier), [`MLPClassifier`](https://scikit-learn.org/stable/modules/generated/sklearn.neural_network.MLPClassifier.html#sklearn.neural_network.MLPClassifier), [`KNeighborsClassifier`](https://scikit-learn.org/stable/modules/generated/sklearn.neighbors.KNeighborsClassifier.html#sklearn.neighbors.KNeighborsClassifier), etc.

+

+When initializing a SetFit model using your custom (non-)differentiable classification head, it is recommended to use the regular `__init__` method:

+

+```py

+from setfit import SetFitModel

+from sklearn.svm import LinearSVC

+from sentence_transformers import SentenceTransformer

+

+# Initializing a new SetFit model

+model_body = SentenceTransformer("BAAI/bge-small-en-v1.5")

+model_head = LinearSVC()

+model = SetFitModel(model_body, model_head)

+```

+

+Then, training and inference can commence like normal, e.g.:

+```py

+from setfit import Trainer, TrainingArguments, sample_dataset

+from datasets import load_dataset

+

+# Preparing the dataset

+dataset = load_dataset("SetFit/sst2")

+train_dataset = sample_dataset(dataset["train"], label_column="label", num_samples=32)

+test_dataset = dataset["test"]

+

+# Preparing the training arguments

+args = TrainingArguments(

+ batch_size=32,

+ num_epochs=3,

+)

+

+# Preparing the trainer

+trainer = Trainer(

+ model=model,

+ args=args,

+ train_dataset=train_dataset,

+)

+trainer.train()

+

+# Evaluating

+metrics = trainer.evaluate(test_dataset)

+print(metrics)

+# => {'accuracy': 0.8638110928061504}

+

+# Performing inference

+preds = model.predict([

+ "It's a charming and often affecting journey.",

+ "It's slow -- very, very slow.",

+ "A sometimes tedious film.",

+])

+print(preds)

+# => tensor([1, 0, 0], dtype=torch.int32)

+```

diff --git a/docs/source/en/how_to/hyperparameter_optimization.mdx b/docs/source/en/how_to/hyperparameter_optimization.mdx

new file mode 100644

index 00000000..db687044

--- /dev/null

+++ b/docs/source/en/how_to/hyperparameter_optimization.mdx

@@ -0,0 +1,376 @@

+

+# Hyperparameter Optimization

+

+SetFit models are often very quick to train, making them very suitable for hyperparameter optimization (HPO) to select the best hyperparameters.

+

+This guide will show you how to apply HPO for SetFit.

+

+## Requirements

+

+To use HPO, first install the `optuna` backend:

+

+```bash

+pip install optuna

+```

+

+To use this method, you need to define two functions:

+

+* `model_init()`: A function that instantiates the model to be used. If provided, each call to `train()` will start from a new instance of the model as given by this function.

+* `hp_space()`: A function that defines the hyperparameter search space.

+

+Here is an example of a `model_init()` function that we'll use to scan over the hyperparameters associated with the classification head in `SetFitModel`:

+

+```python

+from setfit import SetFitModel

+from typing import Dict, Any

+

+def model_init(params: Dict[str, Any]) -> SetFitModel:

+ params = params or {}

+ max_iter = params.get("max_iter", 100)

+ solver = params.get("solver", "liblinear")

+ params = {

+ "head_params": {

+ "max_iter": max_iter,

+ "solver": solver,

+ }

+ }

+ return SetFitModel.from_pretrained("BAAI/bge-small-en-v1.5", **params)

+```

+

+Then, to scan over hyperparameters associated with the SetFit training process, we can define a `hp_space(trial)` function as follows:

+

+```python

+from optuna import Trial

+from typing import Dict, Union

+

+def hp_space(trial: Trial) -> Dict[str, Union[float, int, str]]:

+ return {

+ "body_learning_rate": trial.suggest_float("body_learning_rate", 1e-6, 1e-3, log=True),

+ "num_epochs": trial.suggest_int("num_epochs", 1, 3),

+ "batch_size": trial.suggest_categorical("batch_size", [16, 32, 64]),

+ "seed": trial.suggest_int("seed", 1, 40),

+ "max_iter": trial.suggest_int("max_iter", 50, 300),

+ "solver": trial.suggest_categorical("solver", ["newton-cg", "lbfgs", "liblinear"]),

+ }

+```

+

+

+

+In practice, we found `num_epochs`, `max_steps`, and `body_learning_rate` to be the most important hyperparameters for the contrastive learning process.

+

+

+

+The next step is to prepare a dataset.

+

+```py

+from datasets import load_dataset

+from setfit import Trainer, sample_dataset

+

+dataset = load_dataset("SetFit/emotion")

+train_dataset = sample_dataset(dataset["train"], label_column="label", num_samples=8)

+test_dataset = dataset["test"]

+```

+

+After which we can instantiate a [`Trainer`] and commence HPO via [`Trainer.hyperparameter_search`]. I've split up the logs from each trial into separate codeblocks for readability:

+

+```py

+trainer = Trainer(

+ train_dataset=train_dataset,

+ eval_dataset=test_dataset,

+ model_init=model_init,

+)

+best_run = trainer.hyperparameter_search(direction="maximize", hp_space=hp_space, n_trials=10)

+```

+```

+[I 2023-11-14 20:36:55,736] A new study created in memory with name: no-name-d9c6ec29-c5d8-48a2-8f09-299b1f3740f1

+Trial: {'body_learning_rate': 1.937397586885703e-06, 'num_epochs': 3, 'batch_size': 32, 'seed': 16, 'max_iter': 223, 'solver': 'newton-cg'}

+model_head.pkl not found on HuggingFace Hub, initialising classification head with random weights. You should TRAIN this model on a downstream task to use it for predictions and inference.

+***** Running training *****

+ Num examples = 60

+ Num epochs = 3

+ Total optimization steps = 180

+ Total train batch size = 32

+{'embedding_loss': 0.26, 'learning_rate': 1.0763319927142795e-07, 'epoch': 0.02}

+{'embedding_loss': 0.2069, 'learning_rate': 1.5547017672539594e-06, 'epoch': 0.83}

+{'embedding_loss': 0.2145, 'learning_rate': 9.567395490793595e-07, 'epoch': 1.67}

+{'embedding_loss': 0.2236, 'learning_rate': 3.587773309047598e-07, 'epoch': 2.5}

+{'train_runtime': 36.1299, 'train_samples_per_second': 159.425, 'train_steps_per_second': 4.982, 'epoch': 3.0}

+100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 180/180 [00:29<00:00, 6.02it/s]

+***** Running evaluation *****

+[I 2023-11-14 20:37:33,895] Trial 0 finished with value: 0.44 and parameters: {'body_learning_rate': 1.937397586885703e-06, 'num_epochs': 3, 'batch_size': 32, 'seed': 16, 'max_iter': 223, 'solver': 'newton-cg'}. Best is trial 0 with value: 0.44.

+```

+```

+Trial: {'body_learning_rate': 0.000946449838705604, 'num_epochs': 2, 'batch_size': 16, 'seed': 8, 'max_iter': 60, 'solver': 'liblinear'}

+model_head.pkl not found on HuggingFace Hub, initialising classification head with random weights. You should TRAIN this model on a downstream task to use it for predictions and inference.

+***** Running training *****

+ Num examples = 120

+ Num epochs = 2

+ Total optimization steps = 240

+ Total train batch size = 16

+{'embedding_loss': 0.2354, 'learning_rate': 3.943540994606683e-05, 'epoch': 0.01}

+{'embedding_loss': 0.2419, 'learning_rate': 0.0008325253210836332, 'epoch': 0.42}

+{'embedding_loss': 0.3601, 'learning_rate': 0.0006134397102721508, 'epoch': 0.83}

+{'embedding_loss': 0.2694, 'learning_rate': 0.00039435409946066835, 'epoch': 1.25}

+{'embedding_loss': 0.2496, 'learning_rate': 0.0001752684886491859, 'epoch': 1.67}

+{'train_runtime': 33.5015, 'train_samples_per_second': 114.622, 'train_steps_per_second': 7.164, 'epoch': 2.0}

+100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 240/240 [00:33<00:00, 7.16it/s]

+***** Running evaluation *****