
I trained a few-shot-gan model with the default parameters (up to 30 kimg). I then decided it needed more training, so I changed `resume-pkl` to my latest few-shot-gan snapshot, set `resume-kimg` to `30`, and set `total-kimg` to `60`.
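To make the relationship between these settings concrete, here is a minimal sketch of the arithmetic. It assumes the number in a snapshot filename is the kimg count at which it was saved, which matches my run (30 kimg, `network-snapshot-000030.pkl`). The helper names `kimg_from_snapshot` and `remaining_kimg` are hypothetical and are not part of few-shot-gan or StyleGAN2.

```python
import re
from pathlib import PurePosixPath


def kimg_from_snapshot(path: str) -> int:
    """Return the kimg count encoded in a snapshot filename.

    Assumption: the file is named network-snapshot-<kimg>.pkl and the
    zero-padded number is the kimg at which the snapshot was written.
    """
    name = PurePosixPath(path).name
    match = re.fullmatch(r"network-snapshot-(\d+)\.pkl", name)
    if match is None:
        raise ValueError(f"not a snapshot filename: {name!r}")
    return int(match.group(1))


def remaining_kimg(resume_pkl: str, total_kimg: int) -> int:
    """Return how many additional kimg a resumed run would train for."""
    resume_kimg = kimg_from_snapshot(resume_pkl)
    if total_kimg <= resume_kimg:
        raise ValueError("total-kimg must exceed resume-kimg to train further")
    return total_kimg - resume_kimg


snapshot = (
    "/content/drive/My Drive/FewShotGAN/Test/"
    "00003-stylegan2-Test-Test-config-ada-sv-flat-aug/"
    "network-snapshot-000030.pkl"
)
print(kimg_from_snapshot(snapshot))   # value to pass as resume-kimg
print(remaining_kimg(snapshot, 60))   # extra kimg with total-kimg = 60
```

With my settings, `resume-kimg` should be 30 and the run should train for another 30 kimg. This only checks that the numbers are consistent; it does not inspect the network weights.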

Here is the log line showing that it loaded my few-shot-gan model:

`Loading networks from "/content/drive/My Drive/FewShotGAN/Test/00003-stylegan2-Test-Test-config-ada-sv-flat-aug/network-snapshot-000030.pkl"`

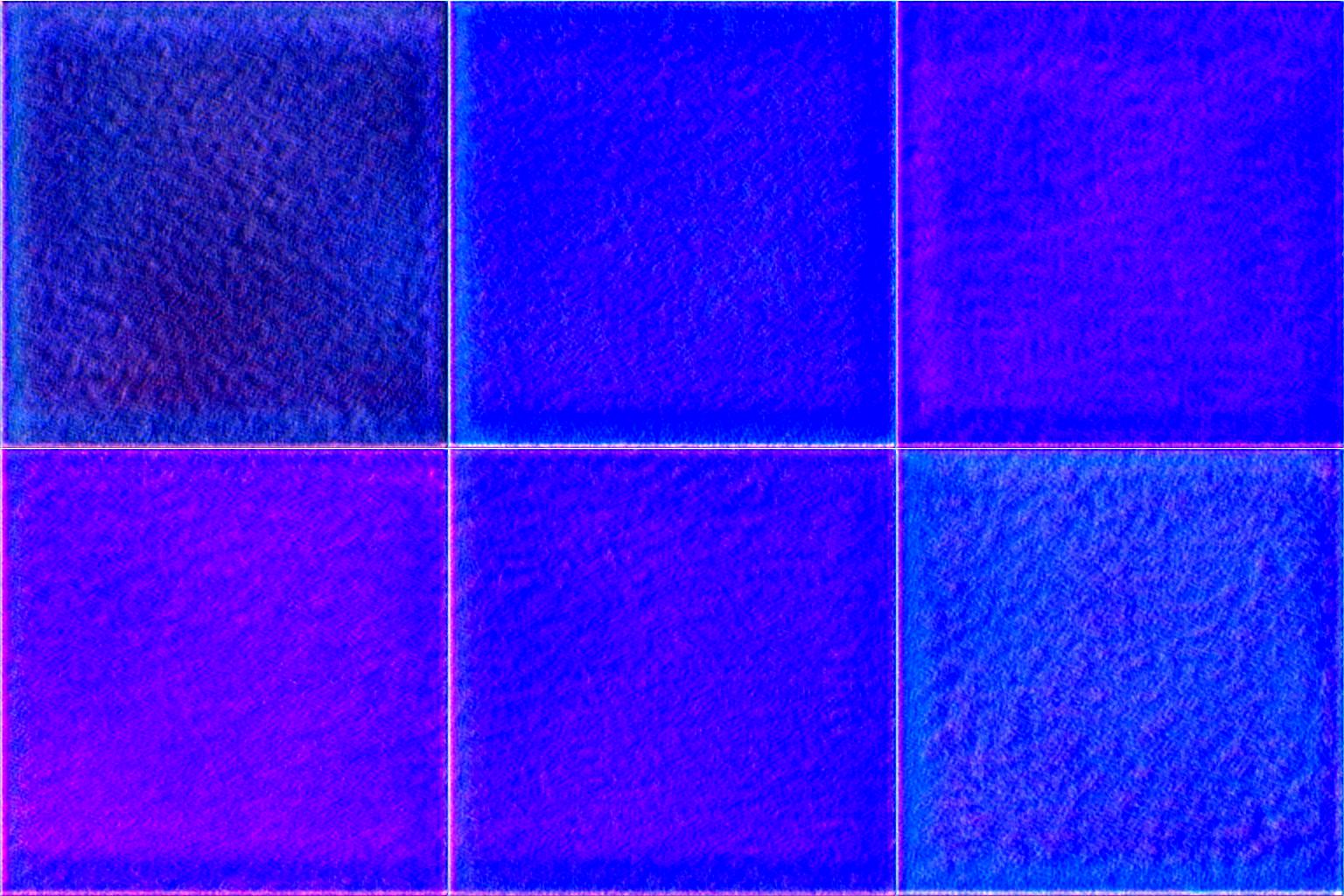

The problem is that the generated fake-init image and the images from subsequent ticks look as if training started completely from scratch. Why? This did not happen when I set `resume-pkl` to a vanilla StyleGAN2 model.

How can I resume training for more kimg from my own snapshot, without it starting from scratch (that is, without going back to an original StyleGAN2 model)?
