-

Notifications

You must be signed in to change notification settings - Fork 3.8k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Polynomial latency growth for database isolated ("Bridge") multi-tenant models #63206

Comments

|

Hello, I am Blathers. I am here to help you get the issue triaged. Hoot - a bug! Though bugs are the bane of my existence, rest assured the wretched thing will get the best of care here. I have CC'd a few people who may be able to assist you:

If we have not gotten back to your issue within a few business days, you can try the following:

🦉 Hoot! I am a Blathers, a bot for CockroachDB. My owner is otan. |

Nice data analysis. R^2 refers to the goodness-of-fit rather than the growth factor. Being close to 1 means that the trend line is a good fit to the data. As a googled reference says, "Essentially, an R-Squared value of 0.9 would indicate that 90% of the variance of the dependent variable being studied is explained by the variance of the independent variable." So R-squared can never be > 1 |

|

At its core this relates to the zone config infrastructure being and any change to anything in schema being an O(descriptors) operation on behalf of the writer and then triggering an update on each range which is O(ln(descriptors)). We have a string of scattered conversations about this on github but the fact of the matter is that this is reasonably understood and has long been deferred in favor of other work. We've tended to optimize to make things scale in the context of few tables with lots of data. Obviously that won't fly forever and I think this is part of a collective kick I deeply appreciate. The fact that the execution increases slightly super-linearly is also not so surprising. Likely the most relevant conversation on GitHub is #47150 (comment). I also recently advocated in this direction by posting #62128. At the end of the day, you're butting up against a very real limitation of the architecture by which the SQL layer propagates changes which may impact configuration to the KV layer. It's far from a fundamental limitation but it's one that will take some engineering to get out of. I cannot promise you when we're going to do something about it but we very much want to do something about it. |

|

I just want to say, I appreciate all the time and effort you put into this analysis and integration! It's inspiring and is the sort of engagement that motivates me at the start of the day. Sorry we've let you down thus far. I look forward to finding paths forward. This thread is making me wonder whether we should be looking for short-term, pragmatic gains here that have tradeoffs we've thus far been unhappy accepting in order to unblock use-cases which we'll fix more generally in the longer term. |

My bad, I thought it meant something else but it makes sense in this context. I've updated the title accordingly! At least we know that the exponential model fits very well now 😅

Thank you @ajwerner, always appreciate your responses! It sort of settled on me that this is looking like an architectual limitation at the moment as I tried out more and more set ups and repro cases. We went on a call today with Daniel and while there might be some ideas to hotfix / work around things by defining different range sizes it sounded like this would only treat the symptoms and stretch out the time a bit until we hit a wall. I understand that restructuring this layer needs a lot of hard work but between the lines I believe to understand that it is the direction you want to go in anyways as it will improve your economics as well.

Thank you - this is the vibe I've been getting from our interactions and why I really want to make this work with our system and (sometimes ;) ) enjoy spending some late nights. I think you're building something great here because so many things are so much ahead of the competition that it would be almost a crime not to use it. I think it would be tremendously helpful to find something to make it work somehow, even if it is in a controlled way (e.g. no surprise 10.000k new tenants in 24 hours but instead maybe max 10.000k larger tenants per cluster). As far as I understood you are working on a multi-tenant system for CockroachCloud Free so I was wondering if there could be some alignment as to which approach you're taking, so that we can prepare and align there also. Generally, I think the "bridge" model we chose has both advantages and disadvantages but at scale it appears that industry consensus ("Google") favors it over Pool models. Much of the architecture design we chose is from e.g. Google Zanzibar paper which heavily uses Cloud Spanner and - for example - advocates for namespace / table-prefix type of isolation. From a data privacy, ACL, and operations model it kind of makes sense to me. I'm wondering if you're on that avenue also for Cockroach Cloud Free or if you're choosing a different model (e.g. Pool / Row Level Isolation) and if so understand the reasons as to why. |

Indeed there is deep alignment between the multi-tenant design and this problem. We have chosen, in a sense, the same fundamental "bridge" architecture. Each tenant represents a portion of the keyspace under a prefix. The way we made that work, in a sense, is by cheating. In our current multi-tenant system, we make the KV layer blind to anything going on within a tenant. This means we give up entirely on the ability to configure the configuration (placement, size, constraints, gc ttl, etc). This is a big problem for our long term vision on lots of fronts. You should find these problems outlined in the above attached RFC PR. A different source of scalability problems will be the number of ranges. At some number of tenants (and thus ranges) we would have a problem because things like processing queues etc would take too long. However, the root of the problem we're seeing here isn't the number of ranges. Very little in cockroach requires O(ranges) work. Even if it did, nothing latency sensitive and O(ranges) should be synchronous. My sense is that the bottleneck in that world would likely be things like placement decisions and those sorts of subsystems. I think other systems have proven that that sort of work can scale to at least millions. The more pressing problem is doing O(N) work in response to any user action, be it synchronously or asynchronously. I'm not opposed to row-level security and, if I'm being honest, feels like a lower lift in terms of implementation than fixing this architectural problem, but it's not a fundamental solution. Say you did partition each each table by tenant and then had an independent configuration and RLS per tenant because you'd like to place each partition of each table in a different region. In that case we're back in the same pickle but using even less efficient abstractions (our subzones are just nested protos inside a proto, so even if we did optimize per-element changes, we'd now be propagating big changes to sets of subzones). My feeling is we should do both things.

Complete aside, I really like that paper. That being said, it's a pretty advanced use case. Propagating causailty tokens to objects in order to optimize authn latency for abstract policies is sweet but feels like a heavy lift for a lot of application developers. The concept of latency-optimized bounded staleness has come up a number of times in recent months. If you have feelings about what would be a good interface here, I'd love to hear about it. There was a bit of chatter publicly about this here: #45243 (comment) |

|

I like your summary:

From my understanding of zanzibar, this is not really a spanner feature, but rather this GPS time sync within Google's data centers. It allows them to use the current time and compare that to a timestamp stored alongside the object as well as the relation tuple. Spanner is just allowing follower reads, and the caches in the zanzibar nodes are also storing these timestamps. When evaluating a request, zanzibar can check if it got all the necessary data locally/regionally, otherwise it has to handle a request accordingly. I guess the only other alternative to guarantee such consistency is using some kind of logical timestamp, as we really only care about the "older than" relation than the actual time. The reason why Google is using actual timestamps is because they have them available anyways, but it is not strictly required to use them. Also, see my thoughts in this issue ory/keto#517 |

|

There are two things I was surprised to not see discussed here:

|

|

@ajwerner probably a PM or two need to see this (but I don't know which yet) |

|

@aeneasr @ajwerner We are also facing similar issues, In our multitenant system we have 200+ Databases , Issues

Is there any hotfix ,configuration changes that need to be done to make it better? |

|

This should now be fixed in 22.1 🎉 |

|

:O that's awesome! |

|

@aeneasr I'm curious if you tested this with the new version of cockroach? |

|

We abandoned this approach and unfortunately have no way to reproduce the findings easily from back then :/ |

|

We've tested it and can confirm that the latency no longer grows linearly (or superlinearly) with the number of objects. |

@aeneasr Can I ask what approach you decided to implement and use that had the required performance? We're facing a similar issue and we're in the design phase, so I would love to hear your input on this matter. |

Describe the problem

We are running a multi-tenant system which isolates tenants on a per-database level ("Bridge model"). The documentation recommends using schemas ("Limit the number of databases you create. If you need to create multiple tables with the same name in your cluster, do so in different user-defined schemas, in the same database.").

In #60724 and our Cockroach Cloud Ticket 7926 we were told to rely on database isolation instead. Unfortunately, here too we find CockroachDB struggling with this multi-tenant model.

CockroachDB does not work well with running stressful workloads and schema changes in parallel - which is documented and we have observed this in production as well. Effects of this issue are:

SELECT * FROM foo;(~500 rows) statements while tenant workloads (create database, tables, indices; no data) are running.To Reproduce

To reproduce this we clone one of our projects, you can find the migrations here, we create a single-node instance of CRDB with 4GB RAM and 2 vCPUs and then create 1000 tenants by creating 1000 DBs and execute a bunch of CREATE TABLE, DROP, ALTER, ADD INDEX, ... statements:

I was running this workload over night (8+ hours) and we are now at 526 databases, so the workload has not completed yet.

Expected behavior

Given that the databases are empty

we would not expect such a siginficant increase in latency.

Additional data / screenshots

You can parse the log file and output it as CSV using

which in our case shows polynomial (2nd degree) / exponential growth with R^2 of almost 1. I suspect we'll get R^2 > 1 at some point:

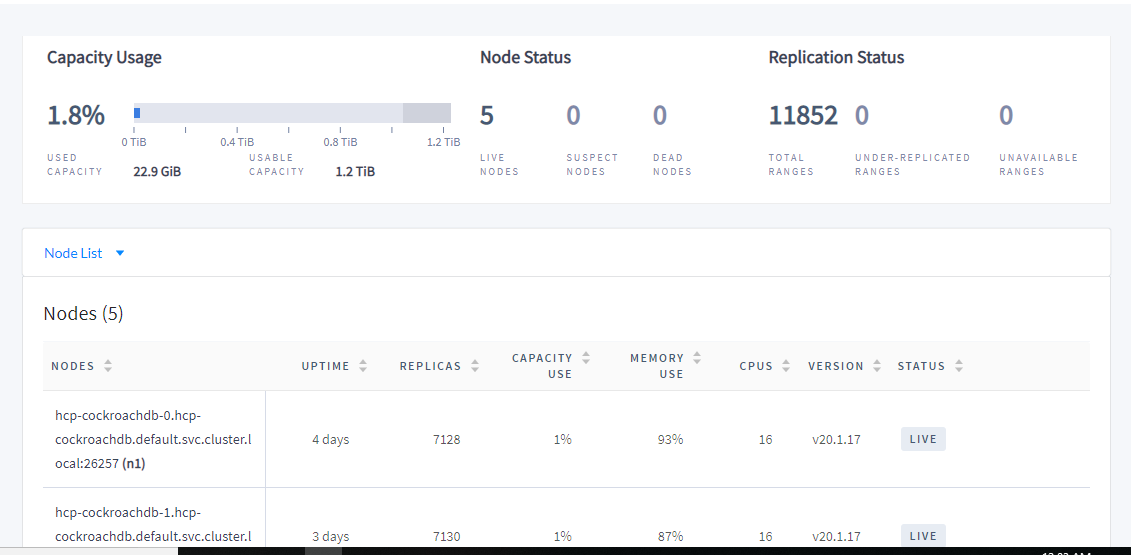

All other metrics point towards the same issue - I have therefore included screenshots from all metrics dashboards:

Overview

Hardware

Runtime

SQL

Storage

Replication

Distributed

Queues

Slow Requests

Changefeeds

Environment:

Additional context

We are heavily impacted by this issue and it has been a month-long effort to somehow get multi-tenancy to work in CRDB. The current effects are:

SELECT) with memory usage capping out at around 600 databases.This currently ruins our economic model where we would like to offer a "free tier" with strong usage limits that developers can use to try out the system, as a $1200 p/mo CockroachCloud cluster can only handle around 300-400 tenants without them having any data!

We were also planning on requesting a multi-region cluster set up in CockroachCloud which we expect to become more expensive. All of this points us to the result where CockroachDB is currently not able to support multi-tenant systems in an economical fashion.

While we are big fans of this technology and want to introduce it to our 30k+ deployments, especially with the new releases of https://github.com/ory/hydra, https://github.com/ory/keto, https://github.com/ory/kratos we are left with a rather frustrating experience of going back and forth - being told to try out different things and in the end hitting the roadblock very quickly.

I have also tried to create a "debug zip" but unfortunately it can not be generated due to query timeouts:

I then killed all workloads and re-ran debug.zip but it is taking painfully long for to collect the table details (one database a 23 tables takes about 30 seconds) so I aborted the process.

Comparison to PostgreSQL

To compare, with PostgreSQL

the container stays below 20% CPU usage

requires less than 1/10th of the time to execute the statements:

and does not show any significant (it looks like O(1)) change in execution time (the spikes correlate with me closing browser tabs and using Google Sheets in Safari...):

Jira issue: CRDB-6472

The text was updated successfully, but these errors were encountered: