A TensorFlow implementation of the paper "Conditional Image Synthesis With Auxiliary Classifier GANs" by Augustus Odena (Google Brain) et al.

I already implemented this kind of GAN structure last September. (See: Supervised InfoGAN tensorflow implementation)

There, I added a supervised loss (the auxiliary classifier in this paper) to the InfoGAN structure to improve the consistency of generated categories and the stability of training.

The result was promising: it helped the model generate consistent categories and converge faster than InfoGAN.
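To make the idea of adding a supervised (auxiliary classifier) loss to the usual GAN loss concrete, here is a minimal pure-Python sketch of the per-sample AC-GAN objectives. It is not taken from train.py; the function names are hypothetical, and the non-saturating form of the generator loss is an assumption chosen for illustration.

```python
import math


def sigmoid_bce(logit, target):
    """Binary cross-entropy on a raw logit; target is 1.0 (real) or 0.0 (fake)."""
    # Numerically stable form: max(x, 0) - x * t + log(1 + exp(-|x|))
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))


def softmax_ce(class_logits, label):
    """Cross-entropy between softmax(class_logits) and an integer class label."""
    m = max(class_logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in class_logits))
    return log_sum_exp - class_logits[label]


def acgan_losses(real_src, real_cls, real_label, fake_src, fake_cls, fake_label):
    """Losses (to minimise) for one real and one generated image.

    *_src are the discriminator's real/fake logits, *_cls its class logits.
    All names here are hypothetical and only illustrate the structure.
    """
    # Source term: tell real images from generated ones.
    source_loss = sigmoid_bce(real_src, 1.0) + sigmoid_bce(fake_src, 0.0)
    # Auxiliary classifier term: predict the class of both real and fake images.
    class_loss = softmax_ce(real_cls, real_label) + softmax_ce(fake_cls, fake_label)
    d_loss = source_loss + class_loss
    # Generator: fool the source head (non-saturating form, an assumption)
    # while keeping the generated image recognisable as its intended class.
    g_loss = sigmoid_bce(fake_src, 1.0) + softmax_ce(fake_cls, fake_label)
    return d_loss, g_loss
```

The class term is shared by both players, which is what pushes the generator toward category-consistent samples.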

The paper, which proposes the same architecture, was published by researchers at Google Brain last October.

They provide many results on diverse datasets, attempt to generate large images, and give a plausible explanation of why this architecture works.

I think this paper is awesome. However, the authors did not provide source code, so I am reintroducing my code here.

AC-GAN architecture (from the paper)

- tensorflow >= rc0.11

- sugartensor >= 0.0.1.7

Execute

python train.py

to train the network. You can find the resulting ckpt files and log files in the 'asset/train' directory. Launch tensorboard --logdir asset/train/log to monitor the training process.

Execute

python generate.py

to generate a sample image. The 'sample.png' file will be generated in the 'asset/train' directory.

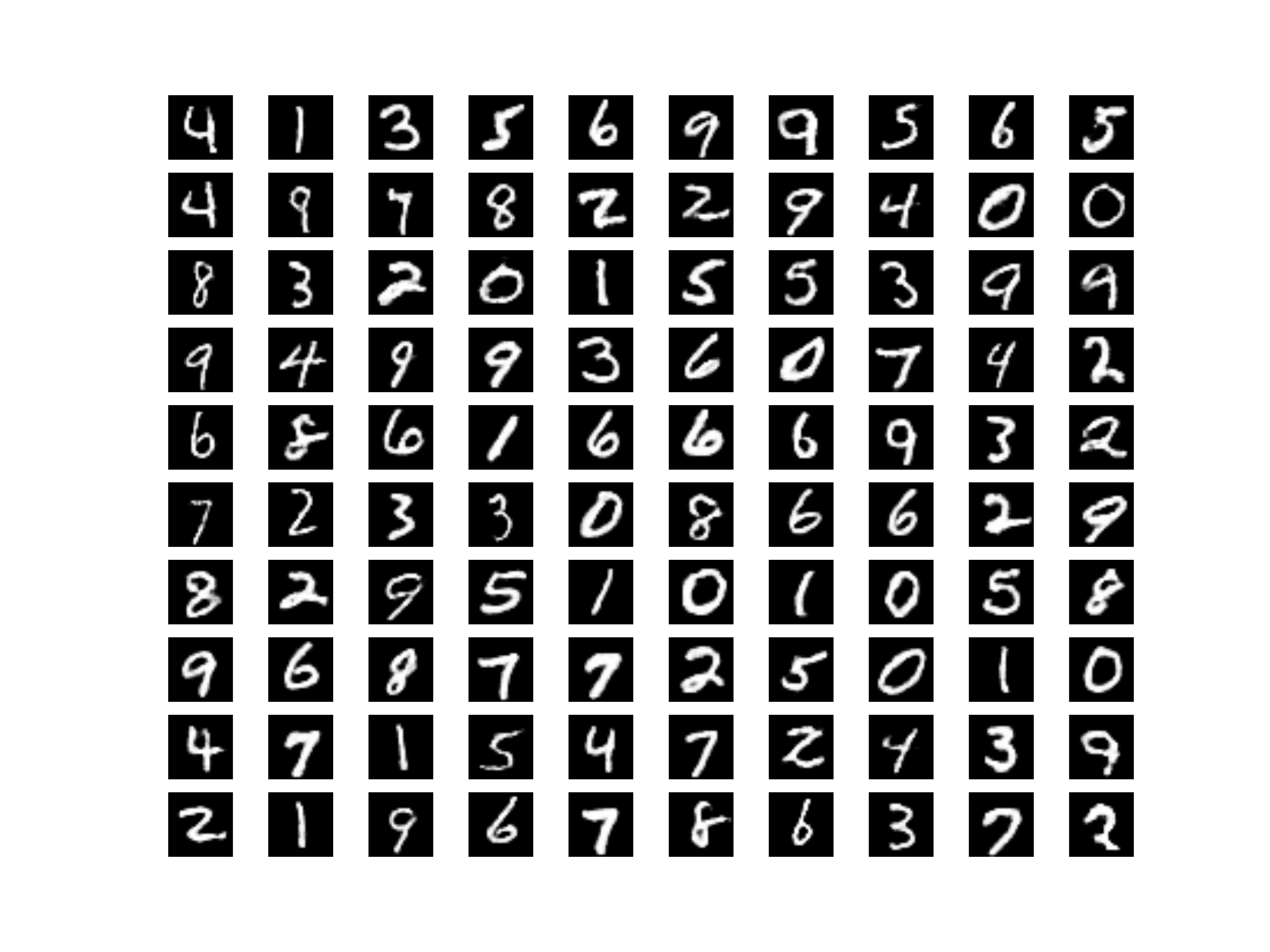

This image was generated by the AC-GAN network.

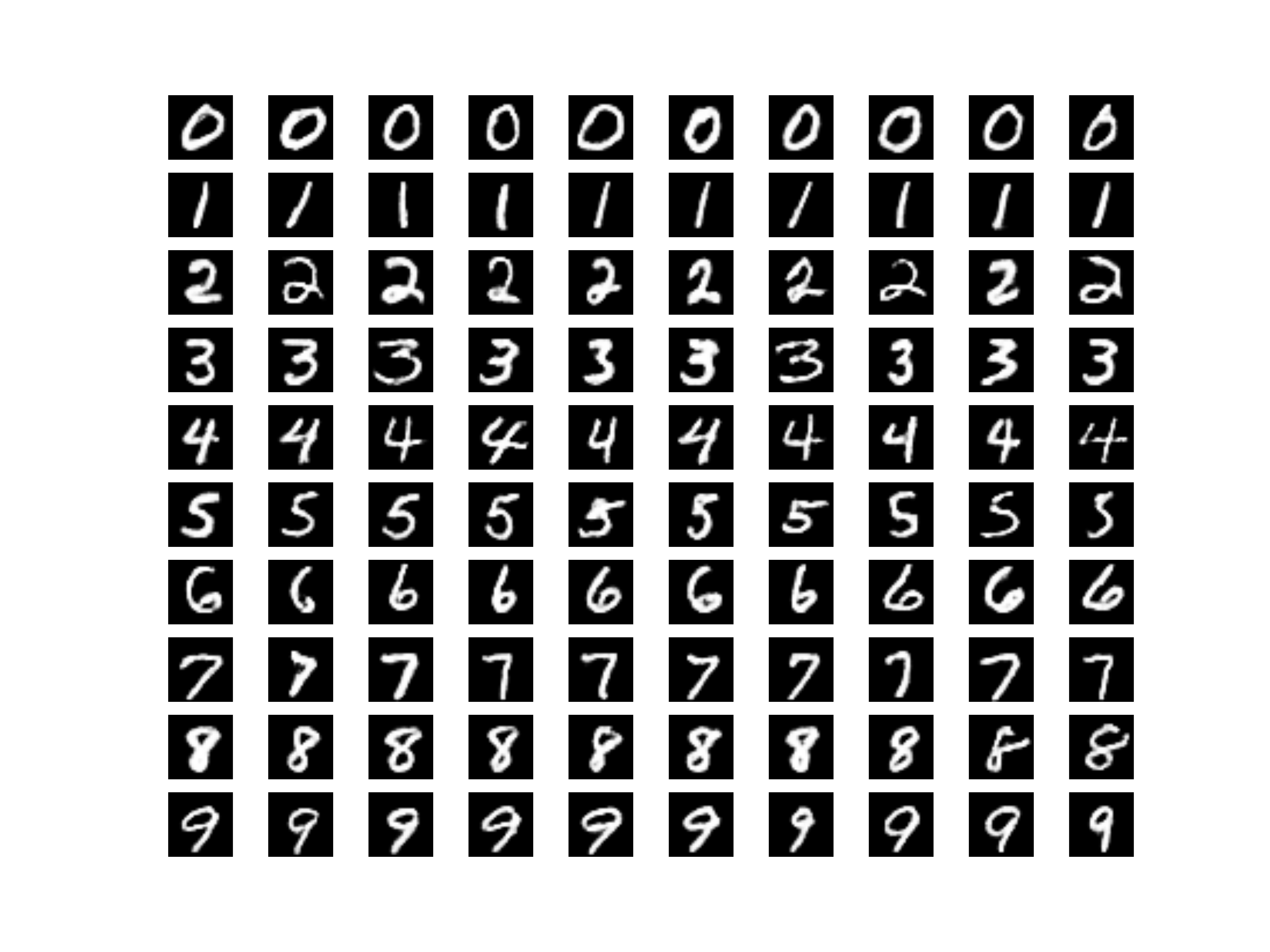

And this image was generated with the categorical auxiliary classifier.

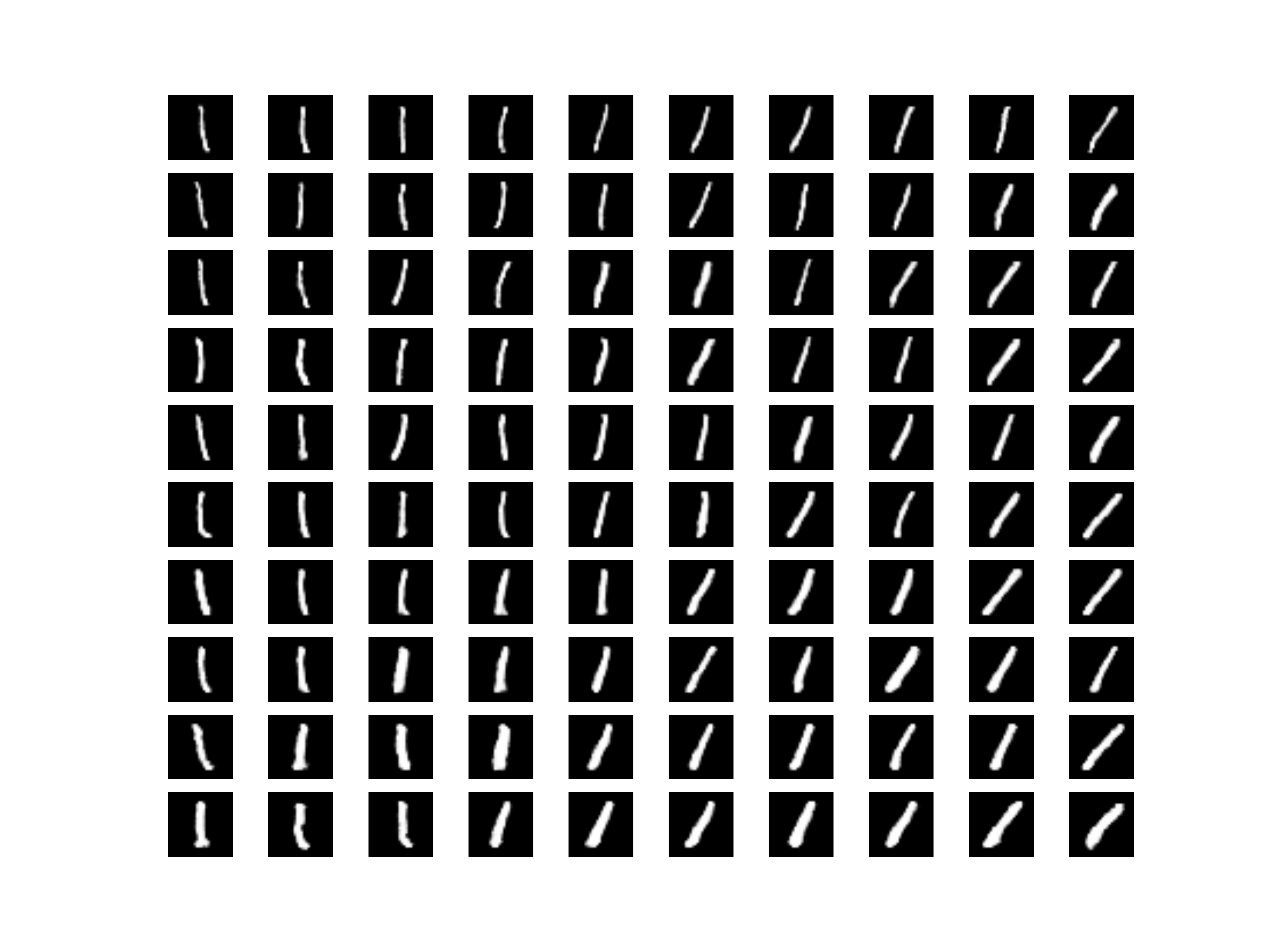

And this image was generated with the continuous auxiliary classifier. You can see the rotation change along the X axis and the thickness change along the Y axis.

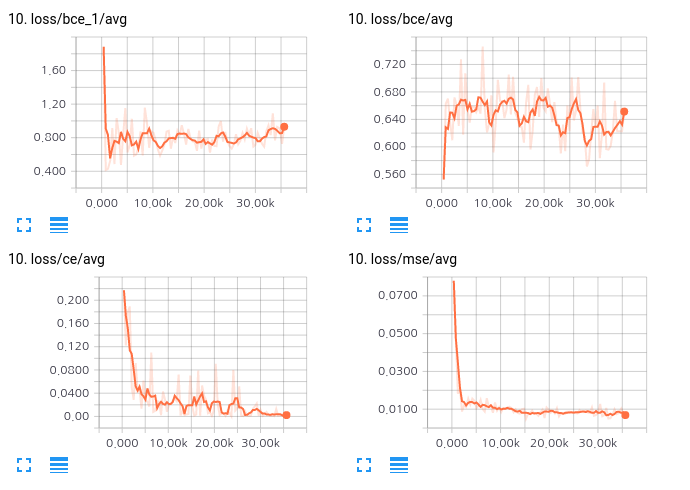
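A grid like the one described above, with one continuous code swept along the X axis and another along the Y axis, can be set up roughly as follows. This is only a sketch and not the code in generate.py: the function name, the grid size, and the [-1, 1] range are assumptions, and which code ends up controlling rotation or thickness is something the trained model determines.

```python
def continuous_code_grid(rows=10, cols=10, low=-1.0, high=1.0):
    """Return rows*cols (c_x, c_y) pairs in row-major order.

    c_x varies across columns (X axis), c_y varies across rows (Y axis).
    The range and grid size are hypothetical defaults.
    """
    def linspace(n):
        # Evenly spaced values from low to high, inclusive.
        if n == 1:
            return [(low + high) / 2.0]
        step = (high - low) / (n - 1)
        return [low + i * step for i in range(n)]

    xs = linspace(cols)  # e.g. the code that changes rotation
    ys = linspace(rows)  # e.g. the code that changes thickness
    return [(x, y) for y in ys for x in xs]
```

Each pair would then be concatenated with a fixed noise vector and category before being fed to the generator, so that only the two continuous codes vary across the grid.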

The following image is the loss chart from the training process. It looks more stable than my original GAN implementation.

- Original GAN tensorflow implementation

- EBGAN tensorflow implementation

- Supervised InfoGAN tensorflow implementation

- SRGAN tensorflow implementation

- Timeseries GAN tensorflow implementation

Namju Kim at Jamonglabs Co., Ltd.