Releases: av/harbor

v0.1.8

v0.1.8

This is a smaller release with bugfixes and QoL improvements.

- Fixing

harbor updatethat wasn't pointing to Harbor repo correctly - It's now possible to specify Ollama version with

harbor config set ollama.version <docker tag> harbor config updatecommand - will merge upstreamdefault.envto.env, applied onharbor updateautomaticallywebuihealthcheck relaxed, proper signal propagation in the custom entrypoint

Full Changelog: v0.1.7...v0.1.8

v0.1.7

aider integration

Aider lets you pair program with LLMs, to edit code in your local git repository. Start a new project or work with an existing git repo. 0Aider works best with GPT-4o & Claude 3.5 Sonnet and can connect to almost any LLM.

Starting

Harbor runs aider in a CLI mode.

# See available options

harbor aider --help

# Run aider, pre-configured with current LLM backend

harbor aiderWhen running aider, Harbor will mount current working directory as a container workspace. This means that running aider from a subfolder in a git repo will mask away the fact it's a git repo within the container.

aider is pre-configured to automatically work with the LLM backends supported by Harbor. You only need to point it to the correct model.

# Set a new model for aider as the model that

# vllm is currently using

harbor aider model $(harbor vllm model)

# Or set a specific model

harbor aider model "microsoft/Phi-3-mini-4k-instruct"v0.1.6

TextGrad

An autograd engine -- for textual gradients!

TextGrad is a powerful framework building automatic ``differentiation'' via text. TextGrad implements backpropagation through text feedback provided by LLMs, strongly building on the gradient metaphor.

Starting

# [Optional] pre-build if needed

harobr build tinygrad

# Start the service

harbor up textgrad

# Open JupyterLab server

harbor open textgradSee the sample notebook to get started

v0.1.5

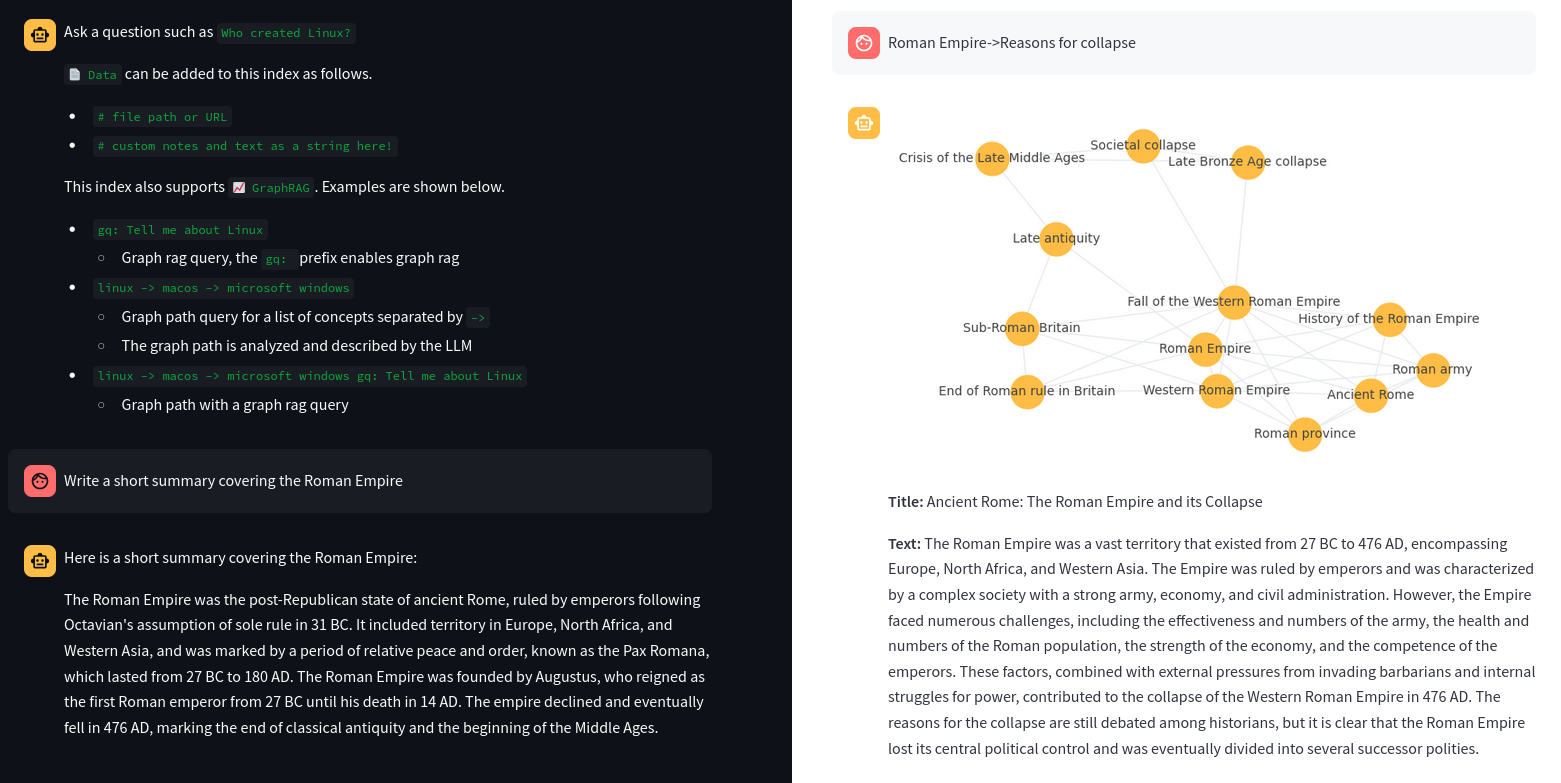

txtai RAG

Handle:

txtairag

URL: http://localhost:33991/

# "harbor txtai rag" is a namespace for the

# txtai RAG service. See available commands and options:

harbor txtai --help

harbor txtai rag --help

# Setting another model

harbor txtairag model llama3.1:8b-instruct-q8_0

# [Tip] See available models

harbor ollama ls

# Switching to different embeddings

# Arxiv - science papers ~6Gb

harbor txtai rag embeddings neuml/txtai-arxiv

# Wikipedia - general knowledge ~8Gb

harbor txtai rag embeddings neuml/txtai-wikipedia

# Switch to completely local embeddings

harbor txtai rag embeddings ""

# Remove embeddings folder in the txtai cache

rm -rf $(harbor txtai cache)/embeddings

# [Optional] Add something to the /data to seed

# the initial version of the new index

echo "Hello, world!" > $(harbor txtai cache)/data/hello.txttxtairagservice docs- Sample integration with

fabricservice

Full Changelog: v0.1.4...v0.1.5

v0.1.4

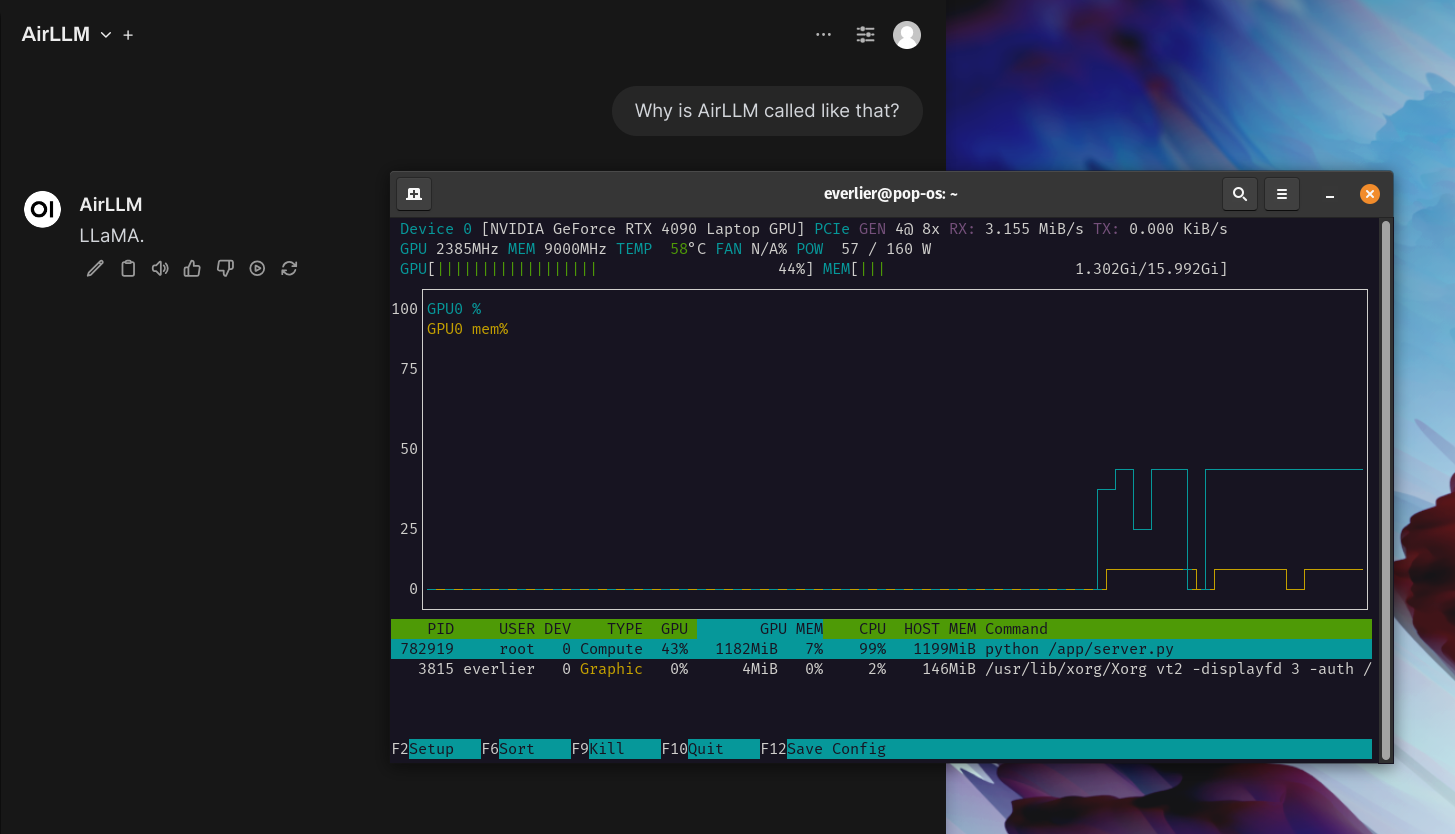

AirLLM

Handle:

airllm

URL: http://localhost:33981

Quickstart |

Configurations |

MacOS |

Example notebooks |

FAQ

AirLLM optimizes inference memory usage, allowing 70B large language models to run inference on a single 4GB GPU card without quantization, distillation and pruning. And you can run 405B Llama3.1 on 8GB vram now.

Note that above is true, but don't expect a performant inference. AirLLM loads LLM layers into memory in small groups. The main benefit is that it allows a "transformers"-like workflow for models that are much much larger than your VRAM.

Note

AirLLM requires a GPU with CUDA by default, can't be run on CPU.

Starting

# [Optional] Pre-build the image

# Needs PyTorch and CUDA, so will be quite large

harbor build airllm

# Start the service

# Will download selected models if not present yet

harbor up airllmFor funsies, Harbor implements an OpenAI-compatible server for AirLLM, so that you can connect it to the Open WebUI and... wait... wait... wait... and then get an amazing response from a previously unreachable model.

Full Changelog: v0.1.3...v0.1.4

v0.1.3

Parler

Adding parler as another voice backend, pre-configured for Open WebUI.

Starting

# [Optional] pre-pull the images

# (otherwise will be pulled on start, ~4Gb due to PyTorch)

harbor pull parler

# [Optional] pre-download the parler model

# (otherwise will be downloaded on start)

harbor hf download $(h parler model)

# Start with Parler

harbor up parler

# Open WebUI pre-configured with Parler as voice backend

harbor open

Configuring

# Configure model and the voice

# See the current model

# parler-tts/parler-tts-mini-v1 by default

# you can also use older parler models

harbor parler model

# Set new model, for example Parler large

harbor parler model parler-tts/parler-tts-large-v1

# See the current voice prompt

harbor parler voice

# Set the new voice prompt

harbor parler voice "Gary speeks in calm and reassuring tone."

# You'll need to restart after reconfiguring either

harbor restartv0.1.2

fabric

Handle:

fabric

URL: -

fabric is an open-source framework for augmenting humans using AI.

# Create a quiz about Harbor CLI - Neat!

cat $(h home)/harbor.sh | head -n 150 | h fabric --pattern create_quiz --stream

# > Subject: Bash Scripting and Container Management (Harbor CLI)

# * Learning objective: Understand and utilize basic container management functions in a bash script

# - Question 1: Which command is used to view the logs of running containers?

# Answer 1: The `logs` or `l` command is used to view the logs of running containers.

#

# - Question 2: What does the `exec` function in the Harbor CLI do?

# Answer 2: The `exec` function in the Harbor CLI allows

# you to execute a specific command inside a running service container.

#

# - Question 3: How can you run a one-off command in a service container using the Harbor CLI?

# Answer 3: You can use the `run` command followed by the name of

# the service and then the command you want to execute, like so: `./harbor.sh run <service> <command>`.

# Why not generate some tags for the Harbor CLI

cat $(h home)/harbor.sh | head -n 50 | h fabric -sp create_tags

# harbor_cli bash_script ollama nvidia gpu docker

# llamacpp tgi lite_llm openai vllm aphrodite tabbyapi

# mistralrs cloudflare parllama plandex open_interpreter fabric hugging_faceMisc

harbor updatenow respects release tags, run with--latestto stick to the bleeding edge- nicer install one-liner with version pinning

- Mac OS install and linking fixes

Full Changelog: v0.1.1...v0.1.2

v0.1.1

Changes

- Nicer one-liner for the install

cmdh- built-in

override.env - Better auto-config based on the running LLM backend (llamacpp added)

- configure OpenAI api key/url via CLI

harbor cmdh url/key - Fixed

"service" has no docker image..."error when running with multiple LLM backends

- built-in

hf- Using custom docker image for a more recent CLI version

- Integrated help between Harbor extensions and native CLI

llamacpp- Configuring model via a path to

.ggufin the cache - Configure cache location

harbor llamacpp cache ~/path/to/cache

- Configuring model via a path to

harbor cmd--hflag prints compose files in a nicer way for debugharbor find- looks up files in caches connected with Harbor (HF, vLLM, llama.cpp, ollama)harbor find *.gguf,harbor find Hermes

litellm- Now uses OpenAI-compatible API for

tgi, as native only works with non-chat completions

- Now uses OpenAI-compatible API for

Full Changelog: v0.1.0...v0.1.1

v0.1.0

Harbor is a toolkit that can help you running LLMs and related projects on your hardware. Harbor is a CLI that manages a set of pre-configured Docker Compose configurations.

Services

UIs

Open WebUI ⦁︎ LibreChat ⦁︎ Hollama ⦁︎ parllama, BionicGPT

Backends

Ollama ⦁︎ llama.cpp ⦁︎ vLLM ⦁︎ TabbyAPI ⦁︎ Aphrodite Engine ⦁︎ mistral.rs ⦁︎ openedai-speech, text-generation-inference ⦁︎ LMDeploy

Satellites

SearXNG ⦁︎ Dify ⦁︎ Plandex ⦁︎ LiteLLM ⦁︎ LangFuse ⦁︎ Open Interpreter ⦁︎ cloudflared ⦁︎ cmdh

CLI

# Start default services

harbor up

# Start more services that are configured to work together

harbor up searxng tts

# Run additional/alternative LLM Inference backends

# Open Webui is automatically connected to them.

harbor up llamacpp tgi litellm vllm tabbyapi aphrodite mistralrs

# Run different Frontends

harbor up librechat bionicgpt hollama

# Shortcut to HF Hub to find the models

harbor hf find gguf gemma-2

# Use HFDownloader and official HF CLI to download models

harbor hf dl -m google/gemma-2-2b-it -c 10 -s ./hf

harbor hf download google/gemma-2-2b-it

# Where possible, cache is shared between the services

harbor tgi model google/gemma-2-2b-it

harbor vllm model google/gemma-2-2b-it

harbor aphrodite model google/gemma-2-2b-it

harbor tabbyapi model google/gemma-2-2b-it-exl2

harbor mistralrs model google/gemma-2-2b-it

harbor opint model google/gemma-2-2b-it

# Convenience tools for docker setup

harbor shell vllm

harbor exec webui curl $(harbor url -i ollama)

# Tell your shell exactly what you think about it

harbor opint # Open Interpreter CLI in current folder

# Access service CLIs without installing them

harbor hf scan-cache

harbor ollama list

# Open services from the CLI

harbor open webui

harbor open llamacpp

# Print yourself a QR to quickly open the

# service on your phone

harbor qr

# Feeling adventurous? Expose your harbor

# to the internet

harbor tunnel

# Config management

harbor config list

harbor config set webui.host.port 8080

# Eject from Harbor into a standalone Docker Compose setup

# Will export related services and variables into a standalone file.

harbor eject searxng llamacpp > docker-compose.harbor.yml

# Gimmick/Fun Area

# Argument scrambling, below commands are all the same as above

# Harbor doesn't care if it's "vllm model" or "model vllm", it'll

# figure it out.

harbor vllm model # harbor model vllm

harbor config get webui.name # harbor get config webui_name

harbor tabbyapi shell # harbor shell tabbyapi

# 50% gimmick, 50% useful

# Ask harbor about itself, note no quotes

harbor how to ping ollama container from the webui?Documentation

- Harbor CLI Reference

Read more about Harbor CLI commands and options. - Harbor Services

Read about supported services and the ways to configure them. - Harbor Compose Setup

Read about the way Harbor uses Docker Compose to manage services. - Compatibility

Known compatibility issues between the services and models as well as possible workarounds.

v0.1.0 changes

- Argument scrambling support

- OpenAI proxy to for Dify to integrate with the rest of the services

mainis now consitered (somewhat) stable, will no long be updated with bleeding edge changes

Full Changelog: v0.0.21...v0.1.0

v0.0.21

Dify

Handle:

dify

URL: http://localhost:33961/

Dify is an open-source LLM app development platform. Dify's intuitive interface combines AI workflow, RAG pipeline, agent capabilities, model management, observability features and more, letting you quickly go from prototype to production.

Starting

# [Optional] Pull the dify images

# ahead of starting the service

harbor pull dify

# Start the service

harbor up dify

harbor open difyMisc

- Fixed running SearXNG without Webui

harbor howprompt tuningvllmversion can now be configured via CLI