From dc2596f2fc7b644a411a6ffc8f04724bf79cf86b Mon Sep 17 00:00:00 2001

From: Jennifer Huang <47805623+Jennifer88huang@users.noreply.github.com>

Date: Wed, 2 Dec 2020 11:08:54 +0800

Subject: [PATCH 1/4] [website] Update the format issue (#8773)

### Motivation

The Pulsar website could not be built because of a syntax error in `site2/website/data/connectors.js`.

The error was found in this CI run: https://github.com/apache/pulsar/runs/1483341061?check_suite_focus=true

### Modifications

Fix the syntax error.

---

site2/website/data/connectors.js | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/site2/website/data/connectors.js b/site2/website/data/connectors.js

index 9933d53a08ddef..4182f2c98648c5 100644

--- a/site2/website/data/connectors.js

+++ b/site2/website/data/connectors.js

@@ -136,7 +136,7 @@ module.exports = [

longName: 'NSQ source',

type: 'Source',

link: 'https://nsq.io/',

- }

+ },

{

name: 'rabbitmq',

longName: 'RabbitMQ source and sink',

From 456f265a4c291b36c02a831e8d939cf5e58be880 Mon Sep 17 00:00:00 2001

From: Jennifer Huang <47805623+Jennifer88huang@users.noreply.github.com>

Date: Wed, 2 Dec 2020 13:51:14 +0800

Subject: [PATCH 2/4] [docs] Update Websocket content (#8762)

* update

* Update site2/docs/client-libraries-websocket.md

Co-authored-by: HuanliMeng <48120384+Huanli-Meng@users.noreply.github.com>


---

site2/docs/client-libraries-websocket.md | 6 +++---

site2/docs/getting-started-clients.md | 2 +-

site2/website/sidebars.json | 1 +

3 files changed, 5 insertions(+), 4 deletions(-)

diff --git a/site2/docs/client-libraries-websocket.md b/site2/docs/client-libraries-websocket.md

index 7ada71b4be6025..fd3d9c8a28d22f 100644

--- a/site2/docs/client-libraries-websocket.md

+++ b/site2/docs/client-libraries-websocket.md

@@ -1,13 +1,13 @@

---

id: client-libraries-websocket

-title: Pulsar's WebSocket API

+title: Pulsar WebSocket API

sidebar_label: WebSocket

---

-Pulsar's [WebSocket](https://developer.mozilla.org/en-US/docs/Web/API/WebSockets_API) API is meant to provide a simple way to interact with Pulsar using languages that do not have an official [client library](getting-started-clients.md). Through WebSockets you can publish and consume messages and use all the features available in the [Java](client-libraries-java.md), [Go](client-libraries-go.md), [Python](client-libraries-python.md) and [C++](client-libraries-cpp.md) client libraries.

+Pulsar [WebSocket](https://developer.mozilla.org/en-US/docs/Web/API/WebSockets_API) API provides a simple way to interact with Pulsar using languages that do not have an official [client library](getting-started-clients.md). Through WebSocket, you can publish and consume messages and use features available on the [Client Features Matrix](https://github.com/apache/pulsar/wiki/Client-Features-Matrix) page.

-> You can use Pulsar's WebSocket API with any WebSocket client library. See examples for Python and Node.js [below](#client-examples).

+> You can use Pulsar WebSocket API with any WebSocket client library. See examples for Python and Node.js [below](#client-examples).

## Running the WebSocket service

diff --git a/site2/docs/getting-started-clients.md b/site2/docs/getting-started-clients.md

index d1c218f28df2fb..add817b890452c 100644

--- a/site2/docs/getting-started-clients.md

+++ b/site2/docs/getting-started-clients.md

@@ -1,7 +1,7 @@

---

id: client-libraries

title: Pulsar client libraries

-sidebar_label: Use Pulsar with client libraries

+sidebar_label: Overview

---

Pulsar supports the following client libraries:

diff --git a/site2/website/sidebars.json b/site2/website/sidebars.json

index d0968018dde8a1..31bf9f26487c0d 100644

--- a/site2/website/sidebars.json

+++ b/site2/website/sidebars.json

@@ -104,6 +104,7 @@

"performance-pulsar-perf"

],

"Client libraries": [

+ "client-libraries",

"client-libraries-java",

"client-libraries-go",

"client-libraries-python",

From 543bf920155bfc4e414e8c874d32f5616580db17 Mon Sep 17 00:00:00 2001

From: WangJialing <65590138+wangjialing218@users.noreply.github.com>

Date: Wed, 2 Dec 2020 16:12:51 +0800

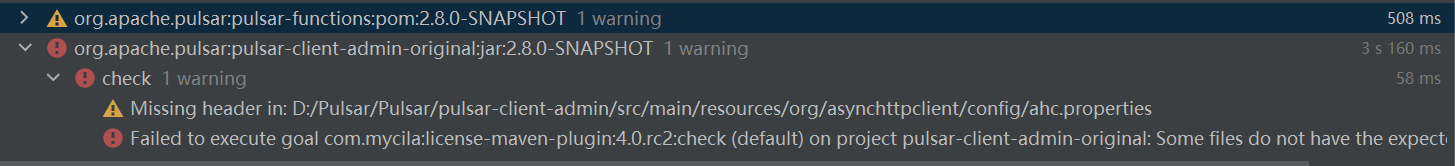

Subject: [PATCH 3/4] exclude ahc.properties for license check (#8745)

### Motivation

The license-maven-plugin check fails for ahc.properties, possibly because of the license-maven-plugin update in #8706.

### Modifications

Add ahc.properties to the exclude list of license-maven-plugin.

---

pom.xml | 1 +

1 file changed, 1 insertion(+)

diff --git a/pom.xml b/pom.xml

index c64fb717037aa6..e39a82719666fd 100644

--- a/pom.xml

+++ b/pom.xml

@@ -1170,6 +1170,7 @@ flexible messaging model and an intuitive client API.

**/src/main/java/org/apache/bookkeeper/mledger/util/AbstractCASReferenceCounted.java

**/ByteBufCodedInputStream.java

**/ByteBufCodedOutputStream.java

+ **/ahc.properties

bin/proto/*

conf/schema_example.conf

data/**

From ee4cddf35970f6230b8c0a3f3c247e10e85b1973 Mon Sep 17 00:00:00 2001

From: lipenghui

Date: Wed, 2 Dec 2020 16:21:19 +0800

Subject: [PATCH 4/4] Update site for 2.7.0 (#8768)

---

site2/website/releases.json | 1 +

.../version-2.7.0/admin-api-brokers.md | 158 +

.../version-2.7.0/admin-api-clusters.md | 222 ++

.../version-2.7.0/admin-api-functions.md | 579 ++++

.../version-2.7.0/admin-api-namespaces.md | 886 ++++++

.../admin-api-non-partitioned-topics.md | 8 +

.../admin-api-non-persistent-topics.md | 8 +

.../version-2.7.0/admin-api-overview.md | 91 +

.../admin-api-partitioned-topics.md | 8 +

.../version-2.7.0/admin-api-permissions.md | 121 +

.../admin-api-persistent-topics.md | 8 +

.../version-2.7.0/admin-api-tenants.md | 157 +

.../version-2.7.0/admin-api-topics.md | 1206 ++++++++

.../version-2.7.0/administration-proxy.md | 76 +

.../administration-pulsar-manager.md | 183 ++

.../version-2.7.0/administration-zk-bk.md | 348 +++

.../version-2.7.0/client-libraries-java.md | 882 ++++++

.../version-2.7.0/client-libraries-node.md | 431 +++

.../version-2.7.0/client-libraries-python.md | 291 ++

.../client-libraries-websocket.md | 448 +++

.../concepts-architecture-overview.md | 156 +

.../version-2.7.0/concepts-authentication.md | 9 +

.../version-2.7.0/concepts-messaging.md | 518 ++++

.../version-2.7.0/concepts-multi-tenancy.md | 55 +

.../version-2.7.0/concepts-transactions.md | 30 +

.../version-2.7.0/cookbooks-compaction.md | 127 +

.../version-2.7.0/cookbooks-deduplication.md | 124 +

.../version-2.7.0/cookbooks-non-persistent.md | 59 +

.../version-2.7.0/cookbooks-partitioned.md | 7 +

.../cookbooks-retention-expiry.md | 318 ++

.../version-2.7.0/cookbooks-tiered-storage.md | 301 ++

.../version-2.7.0/deploy-aws.md | 227 ++

.../version-2.7.0/deploy-bare-metal.md | 461 +++

.../version-2.7.0/deploy-docker.md | 52 +

.../version-2.7.0/deploy-monitoring.md | 95 +

.../developing-binary-protocol.md | 556 ++++

.../version-2.7.0/functions-develop.md | 1084 +++++++

.../version-2.7.0/functions-package.md | 431 +++

.../version-2.7.0/functions-runtime.md | 313 ++

.../version-2.7.0/functions-worker.md | 286 ++

.../version-2.7.0/getting-started-clients.md | 35 +

.../version-2.7.0/getting-started-helm.md | 358 +++

.../version-2.7.0/helm-deploy.md | 375 +++

.../version-2.7.0/helm-overview.md | 100 +

.../version-2.7.0/helm-upgrade.md | 34 +

.../versioned_docs/version-2.7.0/io-cli.md | 606 ++++

.../version-2.7.0/io-connectors.md | 232 ++

.../version-2.7.0/io-hdfs2-sink.md | 59 +

.../version-2.7.0/io-nsq-source.md | 21 +

.../version-2.7.0/io-quickstart.md | 816 ++++++

.../version-2.7.0/io-rabbitmq-source.md | 81 +

.../versioned_docs/version-2.7.0/io-use.md | 1505 ++++++++++

.../version-2.7.0/reference-cli-tools.md | 745 +++++

.../version-2.7.0/reference-configuration.md | 781 +++++

.../version-2.7.0/reference-metrics.md | 404 +++

.../version-2.7.0/reference-pulsar-admin.md | 2567 +++++++++++++++++

.../version-2.7.0/reference-terminology.md | 167 ++

.../version-2.7.0/schema-get-started.md | 95 +

.../version-2.7.0/security-authorization.md | 101 +

.../version-2.7.0/security-bouncy-castle.md | 139 +

.../version-2.7.0/security-extending.md | 196 ++

.../version-2.7.0/security-oauth2.md | 207 ++

.../sql-deployment-configurations.md | 159 +

.../version-2.7.0/tiered-storage-aws.md | 282 ++

.../version-2.7.0/tiered-storage-azure.md | 225 ++

.../tiered-storage-filesystem.md | 269 ++

.../version-2.7.0/tiered-storage-gcs.md | 274 ++

.../version-2.7.0/tiered-storage-overview.md | 50 +

.../version-2.7.0/transaction-api.md | 150 +

.../version-2.7.0/transaction-guarantee.md | 17 +

.../version-2.7.0-sidebars.json | 160 +

site2/website/versions.json | 1 +

72 files changed, 22532 insertions(+)

create mode 100644 site2/website/versioned_docs/version-2.7.0/admin-api-brokers.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/admin-api-clusters.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/admin-api-functions.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/admin-api-namespaces.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/admin-api-non-partitioned-topics.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/admin-api-non-persistent-topics.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/admin-api-overview.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/admin-api-partitioned-topics.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/admin-api-permissions.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/admin-api-persistent-topics.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/admin-api-tenants.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/admin-api-topics.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/administration-proxy.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/administration-pulsar-manager.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/administration-zk-bk.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/client-libraries-java.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/client-libraries-node.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/client-libraries-python.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/client-libraries-websocket.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/concepts-architecture-overview.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/concepts-authentication.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/concepts-messaging.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/concepts-multi-tenancy.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/concepts-transactions.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/cookbooks-compaction.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/cookbooks-deduplication.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/cookbooks-non-persistent.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/cookbooks-partitioned.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/cookbooks-retention-expiry.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/cookbooks-tiered-storage.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/deploy-aws.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/deploy-bare-metal.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/deploy-docker.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/deploy-monitoring.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/developing-binary-protocol.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/functions-develop.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/functions-package.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/functions-runtime.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/functions-worker.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/getting-started-clients.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/getting-started-helm.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/helm-deploy.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/helm-overview.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/helm-upgrade.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/io-cli.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/io-connectors.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/io-hdfs2-sink.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/io-nsq-source.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/io-quickstart.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/io-rabbitmq-source.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/io-use.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/reference-cli-tools.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/reference-configuration.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/reference-metrics.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/reference-pulsar-admin.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/reference-terminology.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/schema-get-started.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/security-authorization.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/security-bouncy-castle.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/security-extending.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/security-oauth2.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/sql-deployment-configurations.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/tiered-storage-aws.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/tiered-storage-azure.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/tiered-storage-filesystem.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/tiered-storage-gcs.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/tiered-storage-overview.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/transaction-api.md

create mode 100644 site2/website/versioned_docs/version-2.7.0/transaction-guarantee.md

create mode 100644 site2/website/versioned_sidebars/version-2.7.0-sidebars.json

diff --git a/site2/website/releases.json b/site2/website/releases.json

index 0f1d737ab34f25..b5c1523403cf7c 100644

--- a/site2/website/releases.json

+++ b/site2/website/releases.json

@@ -1,5 +1,6 @@

[

"2.6.2",

+ "2.7.0",

"2.6.1",

"2.6.0",

"2.5.2",

diff --git a/site2/website/versioned_docs/version-2.7.0/admin-api-brokers.md b/site2/website/versioned_docs/version-2.7.0/admin-api-brokers.md

new file mode 100644

index 00000000000000..2d19417bebf013

--- /dev/null

+++ b/site2/website/versioned_docs/version-2.7.0/admin-api-brokers.md

@@ -0,0 +1,158 @@

+---

+id: version-2.7.0-admin-api-brokers

+title: Managing Brokers

+sidebar_label: Brokers

+original_id: admin-api-brokers

+---

+

+Pulsar brokers consist of two components:

+

+1. An HTTP server exposing a {@inject: rest:REST:/} interface for administration and [topic](reference-terminology.md#topic) lookup.

+2. A dispatcher that handles all Pulsar [message](reference-terminology.md#message) transfers.

+

+[Brokers](reference-terminology.md#broker) can be managed via:

+

+* The [`brokers`](reference-pulsar-admin.md#brokers) command of the [`pulsar-admin`](reference-pulsar-admin.md) tool

+* The `/admin/v2/brokers` endpoint of the admin {@inject: rest:REST:/} API

+* The `brokers` method of the {@inject: javadoc:PulsarAdmin:/admin/org/apache/pulsar/client/admin/PulsarAdmin.html} object in the [Java API](client-libraries-java.md)

+

+In addition to being configurable when you start them up, brokers can also be [dynamically configured](#dynamic-broker-configuration).

+

+> See the [Configuration](reference-configuration.md#broker) page for a full listing of broker-specific configuration parameters.

+

+## Brokers resources

+

+### List active brokers

+

+Fetch all available active brokers that are serving traffic.

+

+

+

+

+```shell

+$ pulsar-admin brokers list use

+```

+

+```

+broker1.use.org.com:8080

+```

+

+

+

+{@inject: endpoint|GET|/admin/v2/brokers/:cluster|operation/getActiveBrokers}

+

+

+

+```java

+admin.brokers().getActiveBrokers(clusterName);

+```
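Calling the REST endpoint directly needs only a plain HTTP GET. The following sketch uses the JDK 11+ `java.net.http` client instead of `PulsarAdmin`; the `ActiveBrokersRequest` class is hypothetical, and it assumes a broker web service that does not require authentication.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Sketch: list active brokers via GET /admin/v2/brokers/{cluster}.
// Assumes an unauthenticated broker web service; adjust for TLS/auth as needed.
final class ActiveBrokersRequest {

    // Builds the GET request; no network I/O happens here.
    static HttpRequest build(String webServiceUrl, String cluster) {
        return HttpRequest.newBuilder()
                .uri(URI.create(webServiceUrl + "/admin/v2/brokers/" + cluster))
                .header("Accept", "application/json")
                .GET()
                .build();
    }

    // Sends the request and returns the raw JSON response body.
    static String send(HttpClient client, HttpRequest request)
            throws IOException, InterruptedException {
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IOException("Unexpected HTTP status " + response.statusCode());
        }
        return response.body();
    }
}
```

For example, `ActiveBrokersRequest.send(HttpClient.newHttpClient(), ActiveBrokersRequest.build("http://localhost:8080", "use"))` should return the same brokers that `pulsar-admin brokers list use` prints, as a JSON array (`http://localhost:8080` is only an example URL).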

+

+

+

+### List of namespaces owned by a given broker

+

+Fetch all namespaces that are owned and served by a given broker.

+

+

+

+

+```shell

+$ pulsar-admin brokers namespaces use \

+ --url broker1.use.org.com:8080

+```

+

+```json

+{

+ "my-property/use/my-ns/0x00000000_0xffffffff": {

+ "broker_assignment": "shared",

+ "is_controlled": false,

+ "is_active": true

+ }

+}

+```

+

+

+{@inject: endpoint|GET|/admin/v2/brokers/:cluster/:broker/ownedNamespaces|operation/getOwnedNamespaes}

+

+

+

+```java

+admin.brokers().getOwnedNamespaces(cluster, brokerUrl);

+```
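Each key in the sample output combines a namespace name with a bundle hash range; `0x00000000_0xffffffff` spans the whole range. The sketch below splits such a key, assuming the `namespace/0xLOWER_0xUPPER` layout seen in the sample output; the `BundleKey` class is hypothetical.

```java
// Sketch: split an owned-namespace key such as
// "my-property/use/my-ns/0x00000000_0xffffffff" into its namespace and hash range.
// The layout is taken from the sample output above and is an assumption.
final class BundleKey {
    final String namespace;
    final long lowerBound; // start of the bundle hash range
    final long upperBound; // end of the bundle hash range

    private BundleKey(String namespace, long lowerBound, long upperBound) {
        this.namespace = namespace;
        this.lowerBound = lowerBound;
        this.upperBound = upperBound;
    }

    static BundleKey parse(String key) {
        int slash = key.lastIndexOf('/');
        if (slash <= 0) {
            throw new IllegalArgumentException("No bundle range in: " + key);
        }
        String[] bounds = key.substring(slash + 1).split("_");
        if (bounds.length != 2) {
            throw new IllegalArgumentException("Malformed bundle range in: " + key);
        }
        return new BundleKey(key.substring(0, slash), hex(bounds[0]), hex(bounds[1]));
    }

    // Parses a "0x..." string as an unsigned 32-bit value held in a long.
    private static long hex(String s) {
        if (!s.startsWith("0x")) {
            throw new IllegalArgumentException("Expected 0x prefix: " + s);
        }
        return Long.parseLong(s.substring(2), 16);
    }
}
```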

+

+

+### Dynamic broker configuration

+

+One way to configure a Pulsar [broker](reference-terminology.md#broker) is to supply a [configuration](reference-configuration.md#broker) when the broker is [started up](reference-cli-tools.md#pulsar-broker).

+

+Because Pulsar stores dynamically updated broker configuration in ZooKeeper, the values of dynamic configuration parameters can also be updated *while the broker is running*. When you update a dynamic configuration value, ZooKeeper notifies the broker of the change, and the broker then overrides the existing value of that parameter.

+

+* The [`brokers`](reference-pulsar-admin.md#brokers) command for the [`pulsar-admin`](reference-pulsar-admin.md) tool has several subcommands for manipulating a broker's configuration dynamically, such as [updating config values](#update-dynamic-configuration).

+* In the Pulsar admin {@inject: rest:REST:/} API, dynamic configuration is managed through the `/admin/v2/brokers/configuration` endpoint.

+

+### Update dynamic configuration

+

+

+

+

+The [`update-dynamic-config`](reference-pulsar-admin.md#brokers-update-dynamic-config) subcommand updates existing configuration. It takes two arguments: the name of the parameter and the new value, passed with the `--config` and `--value` flags respectively. Here's an example for the [`brokerShutdownTimeoutMs`](reference-configuration.md#broker-brokerShutdownTimeoutMs) parameter:

+

+```shell

+$ pulsar-admin brokers update-dynamic-config --config brokerShutdownTimeoutMs --value 100

+```

+

+

+

+{@inject: endpoint|POST|/admin/v2/brokers/configuration/:configName/:configValue|operation/updateDynamicConfiguration}

+

+

+

+```java

+admin.brokers().updateDynamicConfiguration(configName, configValue);

+```
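The REST endpoint carries both the parameter name and the new value as URL path segments, so values containing characters that are not safe in a URL path need percent-encoding. A minimal sketch with the JDK 11+ `java.net.http` client; the `UpdateDynamicConfigRequest` class is hypothetical and authentication is omitted.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;

// Sketch: build POST /admin/v2/brokers/configuration/{configName}/{configValue}.
// Both path segments are percent-encoded before the URI is assembled.
final class UpdateDynamicConfigRequest {

    static HttpRequest build(String webServiceUrl, String configName, String configValue) {
        String path = "/admin/v2/brokers/configuration/"
                + encodeSegment(configName) + "/" + encodeSegment(configValue);
        return HttpRequest.newBuilder()
                .uri(URI.create(webServiceUrl + path))
                .POST(HttpRequest.BodyPublishers.noBody())
                .build();
    }

    // URLEncoder targets form encoding, so convert its "+" for spaces into "%20" for a path.
    // A literal "+" in the input is already encoded as "%2B" and is not affected.
    static String encodeSegment(String raw) {
        return URLEncoder.encode(raw, StandardCharsets.UTF_8).replace("+", "%20");
    }
}
```

With `build("http://localhost:8080", "brokerShutdownTimeoutMs", "100")`, the request targets the same parameter as the `pulsar-admin` example above (the base URL is an example value).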

+

+

+### List updated values

+

+Fetch a list of all configuration parameters that can be updated dynamically.

+

+

+

+```shell

+$ pulsar-admin brokers list-dynamic-config

+brokerShutdownTimeoutMs

+```

+

+

+

+{@inject: endpoint|GET|/admin/v2/brokers/configuration|operation/getDynamicConfigurationName}

+

+

+

+```java

+admin.brokers().getDynamicConfigurationNames();

+```

+

+

+### List all

+

+Fetch a list of all parameters that have been dynamically updated.

+

+

+

+

+```shell

+$ pulsar-admin brokers get-all-dynamic-config

+brokerShutdownTimeoutMs:100

+```

+

+

+

+{@inject: endpoint|GET|/admin/v2/brokers/configuration/values|operation/getAllDynamicConfigurations}

+

+

+

+```java

+admin.brokers().getAllDynamicConfigurations();

+```

+

diff --git a/site2/website/versioned_docs/version-2.7.0/admin-api-clusters.md b/site2/website/versioned_docs/version-2.7.0/admin-api-clusters.md

new file mode 100644

index 00000000000000..faa5cef5723506

--- /dev/null

+++ b/site2/website/versioned_docs/version-2.7.0/admin-api-clusters.md

@@ -0,0 +1,222 @@

+---

+id: version-2.7.0-admin-api-clusters

+title: Managing Clusters

+sidebar_label: Clusters

+original_id: admin-api-clusters

+---

+

+Pulsar clusters consist of one or more Pulsar [brokers](reference-terminology.md#broker), one or more [BookKeeper](reference-terminology.md#bookkeeper)

+servers (also known as [bookies](reference-terminology.md#bookie)), and a [ZooKeeper](https://zookeeper.apache.org) cluster that provides configuration and coordination management.

+

+Clusters can be managed via:

+

+* The [`clusters`](reference-pulsar-admin.md#clusters) command of the [`pulsar-admin`](reference-pulsar-admin.md) tool

+* The `/admin/v2/clusters` endpoint of the admin {@inject: rest:REST:/} API

+* The `clusters` method of the {@inject: javadoc:PulsarAdmin:/admin/org/apache/pulsar/client/admin/PulsarAdmin} object in the [Java API](client-libraries-java.md)

+

+## Clusters resources

+

+### Provision

+

+New clusters can be provisioned using the admin interface.

+

+> Please note that this operation requires superuser privileges.

+

+

+

+

+You can provision a new cluster using the [`create`](reference-pulsar-admin.md#clusters-create) subcommand. Here's an example:

+

+```shell

+$ pulsar-admin clusters create cluster-1 \

+ --url http://my-cluster.org.com:8080 \

+ --broker-url pulsar://my-cluster.org.com:6650

+```

+

+

+

+{@inject: endpoint|PUT|/admin/v2/clusters/:cluster|operation/createCluster}

+

+

+

+```java

+ClusterData clusterData = new ClusterData(

+ serviceUrl,

+ serviceUrlTls,

+ brokerServiceUrl,

+ brokerServiceUrlTls

+);

+admin.clusters().createCluster(clusterName, clusterData);

+```
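Over REST, the cluster data travels as a JSON body. The sketch below assumes the body uses the same field names that `clusters get` returns (`serviceUrl`, `brokerServiceUrl`) and builds the PUT request with the JDK 11+ client; the `CreateClusterRequest` class is hypothetical, and the hand-assembled JSON is for illustration only (a JSON library such as Jackson is a safer choice).

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Sketch: build PUT /admin/v2/clusters/{cluster} with a minimal cluster-data body.
// Values are not JSON-escaped, so keep them to plain URLs.
final class CreateClusterRequest {

    static String body(String serviceUrl, String brokerServiceUrl) {
        Map<String, String> fields = new LinkedHashMap<>(); // keeps insertion order
        fields.put("serviceUrl", serviceUrl);
        fields.put("brokerServiceUrl", brokerServiceUrl);
        StringJoiner json = new StringJoiner(",", "{", "}");
        fields.forEach((k, v) -> json.add("\"" + k + "\":\"" + v + "\""));
        return json.toString();
    }

    static HttpRequest build(String adminUrl, String cluster,
                             String serviceUrl, String brokerServiceUrl) {
        return HttpRequest.newBuilder()
                .uri(URI.create(adminUrl + "/admin/v2/clusters/" + cluster))
                .header("Content-Type", "application/json")
                .PUT(HttpRequest.BodyPublishers.ofString(body(serviceUrl, brokerServiceUrl)))
                .build();
    }
}
```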

+

+

+### Initialize cluster metadata

+

+When provisioning a new cluster, you need to initialize that cluster's [metadata](concepts-architecture-overview.md#metadata-store). When initializing cluster metadata, you need to specify all of the following:

+

+* The name of the cluster

+* The local ZooKeeper connection string for the cluster

+* The configuration store connection string for the entire instance

+* The web service URL for the cluster

+* A broker service URL enabling interaction with the [brokers](reference-terminology.md#broker) in the cluster

+

+You must initialize cluster metadata *before* starting up any [brokers](admin-api-brokers.md) that will belong to the cluster.

+

+> **No cluster metadata initialization through the REST API or the Java admin API**

+>

+> Unlike most other admin functions in Pulsar, cluster metadata initialization cannot be performed via the admin REST API

+> or the admin Java client, as metadata initialization involves communicating with ZooKeeper directly.

+> Instead, you can use the [`pulsar`](reference-cli-tools.md#pulsar) CLI tool, in particular

+> the [`initialize-cluster-metadata`](reference-cli-tools.md#pulsar-initialize-cluster-metadata) command.

+

+Here's an example cluster metadata initialization command:

+

+```shell

+bin/pulsar initialize-cluster-metadata \

+ --cluster us-west \

+ --zookeeper zk1.us-west.example.com:2181 \

+ --configuration-store zk1.us-west.example.com:2184 \

+ --web-service-url http://pulsar.us-west.example.com:8080/ \

+ --web-service-url-tls https://pulsar.us-west.example.com:8443/ \

+ --broker-service-url pulsar://pulsar.us-west.example.com:6650/ \

+ --broker-service-url-tls pulsar+ssl://pulsar.us-west.example.com:6651/

+```

+

+You'll need to use `--*-tls` flags only if you're using [TLS authentication](security-tls-authentication.md) in your instance.
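If you script the initialization, you can add the `--*-tls` flags only when TLS is in use. The sketch below assembles the argument list from the example command above; the `InitClusterMetadataCommand` class is hypothetical, a `null` TLS URL is assumed to mean "TLS not used", and the command is only built, not run.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch: assemble `bin/pulsar initialize-cluster-metadata` arguments, adding the
// --*-tls flags only when the corresponding TLS URL is supplied (non-null).
final class InitClusterMetadataCommand {

    static List<String> build(String cluster, String zookeeper, String configStore,
                              String webUrl, String webUrlTls,
                              String brokerUrl, String brokerUrlTls) {
        List<String> args = new ArrayList<>(List.of(
                "bin/pulsar", "initialize-cluster-metadata",
                "--cluster", cluster,
                "--zookeeper", zookeeper,
                "--configuration-store", configStore,
                "--web-service-url", webUrl,
                "--broker-service-url", brokerUrl));
        if (webUrlTls != null) {
            args.add("--web-service-url-tls");
            args.add(webUrlTls);
        }
        if (brokerUrlTls != null) {
            args.add("--broker-service-url-tls");
            args.add(brokerUrlTls);
        }
        return args;
    }
}
```

To run it, you could pass the list to `new ProcessBuilder(args).inheritIO().start()` from the Pulsar installation directory.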

+

+### Get configuration

+

+You can fetch the [configuration](reference-configuration.md) for an existing cluster at any time.

+

+

+

+

+Use the [`get`](reference-pulsar-admin.md#clusters-get) subcommand and specify the name of the cluster. Here's an example:

+

+```shell

+$ pulsar-admin clusters get cluster-1

+{

+ "serviceUrl": "http://my-cluster.org.com:8080/",

+ "serviceUrlTls": null,

+ "brokerServiceUrl": "pulsar://my-cluster.org.com:6650/",

+ "brokerServiceUrlTls": null,

+ "peerClusterNames": null

+}

+```

+

+

+

+{@inject: endpoint|GET|/admin/v2/clusters/:cluster|operation/getCluster}

+

+

+

+```java

+admin.clusters().getCluster(clusterName);

+```

+

+

+### Update

+

+You can update the configuration for an existing cluster at any time.

+

+

+

+

+Use the [`update`](reference-pulsar-admin.md#clusters-update) subcommand and specify new configuration values using flags.

+

+```shell

+$ pulsar-admin clusters update cluster-1 \

+ --url http://my-cluster.org.com:4081 \

+ --broker-url pulsar://my-cluster.org.com:3350

+```

+

+

+

+{@inject: endpoint|POST|/admin/v2/clusters/:cluster|operation/updateCluster}

+

+

+

+```java

+ClusterData clusterData = new ClusterData(

+ serviceUrl,

+ serviceUrlTls,

+ brokerServiceUrl,

+ brokerServiceUrlTls

+);

+admin.clusters().updateCluster(clusterName, clusterData);

+```

+

+

+### Delete

+

+Clusters can be deleted from a Pulsar [instance](reference-terminology.md#instance).

+

+

+

+

+Use the [`delete`](reference-pulsar-admin.md#clusters-delete) subcommand and specify the name of the cluster.

+

+```shell

+$ pulsar-admin clusters delete cluster-1

+```

+

+

+

+{@inject: endpoint|DELETE|/admin/v2/clusters/:cluster|operation/deleteCluster}

+

+

+

+```java

+admin.clusters().deleteCluster(clusterName);

+```

+

+

+### List

+

+You can fetch a list of all clusters in a Pulsar [instance](reference-terminology.md#instance).

+

+

+

+

+Use the [`list`](reference-pulsar-admin.md#clusters-list) subcommand.

+

+```shell

+$ pulsar-admin clusters list

+cluster-1

+cluster-2

+```

+

+

+

+{@inject: endpoint|GET|/admin/v2/clusters|operation/getClusters}

+

+

+

+```java

+admin.clusters().getClusters();

+```

+

+

+### Update peer-cluster data

+

+Peer clusters can be configured for a given cluster in a Pulsar [instance](reference-terminology.md#instance).

+

+

+

+

+Use the [`update-peer-clusters`](reference-pulsar-admin.md#clusters-update-peer-clusters) subcommand and specify the list of peer-cluster names.

+

+```shell

+$ pulsar-admin clusters update-peer-clusters cluster-1 --peer-clusters cluster-2

+```

+

+

+

+{@inject: endpoint|POST|/admin/v2/clusters/:cluster/peers|operation/setPeerClusterNames}

+

+

+

+```java

+admin.clusters().updatePeerClusterNames(clusterName, peerClusterList);

+```
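Over REST, the peer-cluster names are sent in the request body. The sketch below assumes the body is a JSON array of cluster names, mirroring the list passed to `updatePeerClusterNames`; the `PeerClustersRequest` class is hypothetical and uses the JDK 11+ client.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.util.List;
import java.util.stream.Collectors;

// Sketch: build POST /admin/v2/clusters/{cluster}/peers with a JSON array of names.
// Names are assumed to be plain cluster identifiers, so they are not JSON-escaped.
final class PeerClustersRequest {

    static String body(List<String> peerClusterNames) {
        return peerClusterNames.stream()
                .map(name -> "\"" + name + "\"")
                .collect(Collectors.joining(",", "[", "]"));
    }

    static HttpRequest build(String adminUrl, String cluster, List<String> peerClusterNames) {
        return HttpRequest.newBuilder()
                .uri(URI.create(adminUrl + "/admin/v2/clusters/" + cluster + "/peers"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body(peerClusterNames)))
                .build();
    }
}
```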

+

\ No newline at end of file

diff --git a/site2/website/versioned_docs/version-2.7.0/admin-api-functions.md b/site2/website/versioned_docs/version-2.7.0/admin-api-functions.md

new file mode 100644

index 00000000000000..fb919251b58325

--- /dev/null

+++ b/site2/website/versioned_docs/version-2.7.0/admin-api-functions.md

@@ -0,0 +1,579 @@

+---

+id: version-2.7.0-admin-api-functions

+title: Manage Functions

+sidebar_label: Functions

+original_id: admin-api-functions

+---

+

+**Pulsar Functions** are lightweight compute processes that

+

+* consume messages from one or more Pulsar topics

+* apply a user-supplied processing logic to each message

+* publish the results of the computation to another topic

+

+Functions can be managed via the following methods.

+

+Method | Description

+---|---

+**Admin CLI** | The [`functions`](reference-pulsar-admin.md#functions) command of the [`pulsar-admin`](reference-pulsar-admin.md) tool.

+**REST API** |The `/admin/v3/functions` endpoint of the admin {@inject: rest:REST:/} API.

+**Java Admin API**| The `functions` method of the {@inject: javadoc:PulsarAdmin:/admin/org/apache/pulsar/client/admin/PulsarAdmin} object in the [Java API](client-libraries-java.md).

+

+## Function resources

+

+You can perform the following operations on functions.

+

+### Create a function

+

+You can create a Pulsar function in cluster mode (deploy it on a Pulsar cluster) using Admin CLI, REST API or Java Admin API.

+

+

+

+

+Use the [`create`](reference-pulsar-admin.md#functions-create) subcommand.

+

+**Example**

+

+```shell

+$ pulsar-admin functions create \

+ --tenant public \

+ --namespace default \

+ --name (the name of Pulsar Functions) \

+ --inputs test-input-topic \

+ --output persistent://public/default/test-output-topic \

+ --classname org.apache.pulsar.functions.api.examples.ExclamationFunction \

+ --jar /examples/api-examples.jar

+```

+

+

+

+{@inject: endpoint|POST|/admin/v3/functions/{tenant}/{namespace}/{functionName}

+

+

+

+```java

+FunctionConfig functionConfig = new FunctionConfig();

+functionConfig.setTenant(tenant);

+functionConfig.setNamespace(namespace);

+functionConfig.setName(functionName);

+functionConfig.setRuntime(FunctionConfig.Runtime.JAVA);

+functionConfig.setParallelism(1);

+functionConfig.setClassName("org.apache.pulsar.functions.api.examples.ExclamationFunction");

+functionConfig.setProcessingGuarantees(FunctionConfig.ProcessingGuarantees.ATLEAST_ONCE);

+functionConfig.setTopicsPattern(sourceTopicPattern);

+functionConfig.setSubName(subscriptionName);

+functionConfig.setAutoAck(true);

+functionConfig.setOutput(sinkTopic);

+admin.functions().createFunction(functionConfig, fileName);

+```

+

+

+### Update a function

+

+You can update a Pulsar function that has been deployed to a Pulsar cluster using Admin CLI, REST API or Java Admin API.

+

+

+

+

+Use the [`update`](reference-pulsar-admin.md#functions-update) subcommand.

+

+**Example**

+

+```shell

+$ pulsar-admin functions update \

+ --tenant public \

+ --namespace default \

+ --name (the name of Pulsar Functions) \

+ --output persistent://public/default/update-output-topic \

+ # other options

+```

+

+

+

+{@inject: endpoint|PUT|/admin/v3/functions/{tenant}/{namespace}/{functionName}

+

+

+

+```java

+FunctionConfig functionConfig = new FunctionConfig();

+functionConfig.setTenant(tenant);

+functionConfig.setNamespace(namespace);

+functionConfig.setName(functionName);

+functionConfig.setRuntime(FunctionConfig.Runtime.JAVA);

+functionConfig.setParallelism(1);

+functionConfig.setClassName("org.apache.pulsar.functions.api.examples.ExclamationFunction");

+UpdateOptions updateOptions = new UpdateOptions();

+updateOptions.setUpdateAuthData(updateAuthData);

+admin.functions().updateFunction(functionConfig, userCodeFile, updateOptions);

+```

+

+

+### Start an instance of a function

+

+You can start a stopped function instance with `instance-id` using Admin CLI, REST API or Java Admin API.

+

+

+

+

+Use the [`start`](reference-pulsar-admin.md#functions-start) subcommand.

+

+```shell

+$ pulsar-admin functions start \

+ --tenant public \

+ --namespace default \

+ --name (the name of Pulsar Functions) \

+ --instance-id 1

+```

+

+

+

+{@inject: endpoint|POST|/admin/v3/functions/{tenant}/{namespace}/{functionName}/{instanceId}/start

+

+

+

+```java

+admin.functions().startFunction(tenant, namespace, functionName, Integer.parseInt(instanceId));

+```
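The start, stop, and restart endpoints in this section share one path layout: an optional instance ID segment followed by the action name. A sketch of a path builder covering both the single-instance and all-instances forms; the `FunctionActionPath` class and its `Action` enum are hypothetical.

```java
import java.util.Locale;
import java.util.OptionalInt;

// Sketch: build /admin/v3/functions/{tenant}/{namespace}/{functionName}[/{instanceId}]/{action}
// for the start, stop, and restart endpoints.
final class FunctionActionPath {

    enum Action { START, STOP, RESTART }

    static String build(String tenant, String namespace, String functionName,
                        OptionalInt instanceId, Action action) {
        StringBuilder path = new StringBuilder("/admin/v3/functions/")
                .append(tenant).append('/')
                .append(namespace).append('/')
                .append(functionName);
        // Omitting the instance ID targets every instance of the function.
        instanceId.ifPresent(id -> path.append('/').append(id));
        return path.append('/').append(action.name().toLowerCase(Locale.ROOT)).toString();
    }
}
```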

+

+

+### Start all instances of a function

+

+You can start all stopped function instances using Admin CLI, REST API or Java Admin API.

+

+

+

+

+Use the [`start`](reference-pulsar-admin.md#functions-start) subcommand.

+

+**Example**

+

+```shell

+$ pulsar-admin functions start \

+ --tenant public \

+ --namespace default \

+ --name (the name of Pulsar Functions) \

+```

+

+

+

+{@inject: endpoint|POST|/admin/v3/functions/{tenant}/{namespace}/{functionName}/start

+

+

+

+```java

+admin.functions().startFunction(tenant, namespace, functionName);

+```

+

+

+### Stop an instance of a function

+

+You can stop a function instance with `instance-id` using Admin CLI, REST API or Java Admin API.

+

+

+

+

+Use the [`stop`](reference-pulsar-admin.md#functions-stop) subcommand.

+

+**Example**

+

+```shell

+$ pulsar-admin functions stop \

+ --tenant public \

+ --namespace default \

+ --name (the name of Pulsar Functions) \

+ --instance-id 1

+```

+

+

+

+{@inject: endpoint|POST|/admin/v3/functions/{tenant}/{namespace}/{functionName}/{instanceId}/stop

+

+

+

+```java

+admin.functions().stopFunction(tenant, namespace, functionName, Integer.parseInt(instanceId));

+```

+

+

+### Stop all instances of a function

+

+You can stop all function instances using Admin CLI, REST API or Java Admin API.

+

+

+

+

+Use the [`stop`](reference-pulsar-admin.md#functions-stop) subcommand.

+

+**Example**

+

+```shell

+$ pulsar-admin functions stop \

+ --tenant public \

+ --namespace default \

+ --name (the name of Pulsar Functions)

+```

+

+

+

+{@inject: endpoint|POST|/admin/v3/functions/{tenant}/{namespace}/{functionName}/stop

+

+

+

+```java

+admin.functions().stopFunction(tenant, namespace, functionName);

+```

+

+

+### Restart an instance of a function

+

+You can restart a function instance with `instance-id` using Admin CLI, REST API or Java Admin API.

+

+

+

+

+Use the [`restart`](reference-pulsar-admin.md#functions-restart) subcommand.

+

+**Example**

+

+```shell

+$ pulsar-admin functions restart \

+ --tenant public \

+ --namespace default \

+ --name (the name of Pulsar Functions) \

+ --instance-id 1

+```

+

+

+

+{@inject: endpoint|POST|/admin/v3/functions/{tenant}/{namespace}/{functionName}/{instanceId}/restart

+

+

+

+```java

+admin.functions().restartFunction(tenant, namespace, functionName, Integer.parseInt(instanceId));

+```

+

+

+### Restart all instances of a function

+

+You can restart all function instances using Admin CLI, REST API or Java admin API.

+

+

+

+

+Use the [`restart`](reference-pulsar-admin.md#functions-restart) subcommand.

+

+**Example**

+

+```shell

+$ pulsar-admin functions restart \

+ --tenant public \

+ --namespace default \

+ --name (the name of Pulsar Functions)

+```

+

+

+

+{@inject: endpoint|POST|/admin/v3/functions/{tenant}/{namespace}/{functionName}/restart

+

+

+

+```java

+admin.functions().restartFunction(tenant, namespace, functionName);

+```

+

+

+### List all functions

+

+You can list all Pulsar functions running under a specific tenant and namespace using Admin CLI, REST API or Java Admin API.

+

+

+

+

+Use the [`list`](reference-pulsar-admin.md#functions-list) subcommand.

+

+**Example**

+

+```shell

+$ pulsar-admin functions list \

+ --tenant public \

+ --namespace default

+```

+

+

+

+{@inject: endpoint|GET|/admin/v3/functions/{tenant}/{namespace}

+

+

+

+```java

+admin.functions().getFunctions(tenant, namespace);

+```

+

+

+### Delete a function

+

+You can delete a Pulsar function that is running on a Pulsar cluster using Admin CLI, REST API or Java Admin API.

+

+

+

+

+Use the [`delete`](reference-pulsar-admin.md#functions-delete) subcommand.

+

+**Example**

+

+```shell

+$ pulsar-admin functions delete \

+ --tenant public \

+ --namespace default \

+ --name (the name of Pulsar Functions)

+```

+

+

+

+{@inject: endpoint|DELETE|/admin/v3/functions/{tenant}/{namespace}/{functionName}

+

+

+

+```java

+admin.functions().deleteFunction(tenant, namespace, functionName);

+```

+

+

+### Get info about a function

+

+You can get information about a Pulsar function currently running in cluster mode using Admin CLI, REST API or Java Admin API.

+

+

+

+

+Use the [`get`](reference-pulsar-admin.md#functions-get) subcommand.

+

+**Example**

+

+```shell

+$ pulsar-admin functions get \

+ --tenant public \

+ --namespace default \

+ --name (the name of Pulsar Functions)

+```

+

+

+

+{@inject: endpoint|GET|/admin/v3/functions/{tenant}/{namespace}/{functionName}

+

+

+

+```java

+admin.functions().getFunction(tenant, namespace, functionName);

+```

+

+

+### Get status of an instance of a function

+

+You can get the current status of a Pulsar function instance with `instance-id` using Admin CLI, REST API or Java Admin API.

+

+

+

+Use the [`status`](reference-pulsar-admin.md#functions-status) subcommand.

+

+**Example**

+

+```shell

+$ pulsar-admin functions status \

+ --tenant public \

+ --namespace default \

+ --name (the name of Pulsar Functions) \

+ --instance-id 1

+```

+

+

+

+{@inject: endpoint|GET|/admin/v3/functions/{tenant}/{namespace}/{functionName}/{instanceId}/status

+

+

+

+```java

+admin.functions().getFunctionStatus(tenant, namespace, functionName, Integer.parseInt(instanceId));

+```

+

+

+### Get status of all instances of a function

+

+You can get the current status of all instances of a Pulsar function using Admin CLI, REST API or Java Admin API.

+

+

+

+

+Use the [`status`](reference-pulsar-admin.md#functions-status) subcommand.

+

+**Example**

+

+```shell

+$ pulsar-admin functions status \

+ --tenant public \

+ --namespace default \

+ --name (the name of Pulsar Functions)

+```

+

+

+

+{@inject: endpoint|GET|/admin/v3/functions/{tenant}/{namespace}/{functionName}/status

+

+

+

+```java

+admin.functions().getFunctionStatus(tenant, namespace, functionName);

+```

+

+

+### Get stats of an instance of a function

+

+You can get the current stats of a Pulsar Function instance with `instance-id` using Admin CLI, REST API or Java admin API.

+

+

+

+Use the [`stats`](reference-pulsar-admin.md#functions-stats) subcommand.

+

+**Example**

+

+```shell

+$ pulsar-admin functions stats \

+ --tenant public \

+ --namespace default \

+ --name (the name of Pulsar Functions) \

+ --instance-id 1

+```

+

+

+

+{@inject: endpoint|GET|/admin/v3/functions/{tenant}/{namespace}/{functionName}/{instanceId}/stats

+

+

+

+```java

+admin.functions().getFunctionStats(tenant, namespace, functionName, Integer.parseInt(instanceId));

+```

+

+

+### Get stats of all instances of a function

+

+You can get the current stats of a Pulsar function using Admin CLI, REST API or Java admin API.

+

+

+

+

+Use the [`stats`](reference-pulsar-admin.md#functions-stats) subcommand.

+

+**Example**

+

+```shell

+$ pulsar-admin functions stats \

+ --tenant public \

+ --namespace default \

+ --name (the name of Pulsar Functions)

+```

+

+

+

+{@inject: endpoint|GET|/admin/v3/functions/{tenant}/{namespace}/{functionName}/stats

+

+

+

+```java

+admin.functions().getFunctionStats(tenant, namespace, functionName);

+```

+

+

+### Trigger a function

+

+You can trigger a specified Pulsar function with a supplied value using Admin CLI, REST API or Java admin API.

+

+

+

+

+Use the [`trigger`](reference-pulsar-admin.md#functions-trigger) subcommand.

+

+**Example**

+

+```shell

+$ pulsar-admin functions trigger \

+ --tenant public \

+ --namespace default \

+ --name (the name of Pulsar Functions) \

+ --topic (the name of input topic) \

+ --trigger-value "hello pulsar"

+ # or --trigger-file (the path of trigger file)

+```

+

+

+{@inject: endpoint|POST|/admin/v3/functions/{tenant}/{namespace}/{functionName}/trigger

+

+

+

+```java

+admin.functions().triggerFunction(tenant, namespace, functionName, topic, triggerValue, triggerFile);

+```

+

+

+### Put state associated with a function

+

+You can put the state associated with a Pulsar function using Admin CLI, REST API or Java admin API.

+

+

+

+

+Use the [`putstate`](reference-pulsar-admin.md#functions-putstate) subcommand.

+

+**Example**

+

+```shell

+$ pulsar-admin functions putstate \

+ --tenant public \

+ --namespace default \

+ --name (the name of Pulsar Functions) \

+ --state "{\"key\":\"pulsar\", \"stringValue\":\"hello pulsar\"}"

+```

+

+

+

+{@inject: endpoint|POST|/admin/v3/functions/{tenant}/{namespace}/{functionName}/state/{key}

+

+

+

+```java

+TypeReference<FunctionState> typeRef = new TypeReference<FunctionState>() {};

+FunctionState stateRepr = ObjectMapperFactory.getThreadLocal().readValue(state, typeRef);

+admin.functions().putFunctionState(tenant, namespace, functionName, stateRepr);

+```
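The `--state` value in the CLI example is a JSON object with `key` and `stringValue` fields, and hand-escaping it inside shell quotes is easy to get wrong. As a dependency-free sketch, the hypothetical `FunctionStateJson` helper below builds that JSON with minimal string escaping. In real code you would more likely serialize a `FunctionState` with a JSON library such as Jackson, as the Java snippet above does when reading it.

```java
// Hypothetical helper: builds a string-valued function state in the
// {"key": ..., "stringValue": ...} shape used by the --state example above.
final class FunctionStateJson {
    private FunctionStateJson() {}

    static String stringState(String key, String value) {
        return "{\"key\":" + quote(key) + ",\"stringValue\":" + quote(value) + "}";
    }

    // Minimal JSON string escaping: quotes, backslashes, and control characters.
    static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        // Other control characters use a four-digit hex escape.
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.append('"').toString();
    }
}
```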

+

+

+### Fetch state associated with a function

+

+You can fetch the current state associated with a Pulsar function using Admin CLI, REST API or Java admin API.

+

+

+

+

+Use the [`querystate`](reference-pulsar-admin.md#functions-querystate) subcommand.

+

+**Example**

+

+```shell

+$ pulsar-admin functions querystate \

+ --tenant public \

+ --namespace default \

+ --name (the name of Pulsar Functions) \

+ --key (the key of state)

+```

+

+

+

+{@inject: endpoint|GET|/admin/v3/functions/{tenant}/{namespace}/{functionName}/state/{key}

+

+

+

+```java

+admin.functions().getFunctionState(tenant, namespace, functionName, key);

+```

+

\ No newline at end of file

diff --git a/site2/website/versioned_docs/version-2.7.0/admin-api-namespaces.md b/site2/website/versioned_docs/version-2.7.0/admin-api-namespaces.md

new file mode 100644

index 00000000000000..b442adf5da1a6a

--- /dev/null

+++ b/site2/website/versioned_docs/version-2.7.0/admin-api-namespaces.md

@@ -0,0 +1,886 @@

+---

+id: version-2.7.0-admin-api-namespaces

+title: Managing Namespaces

+sidebar_label: Namespaces

+original_id: admin-api-namespaces

+---

+

+Pulsar [namespaces](reference-terminology.md#namespace) are logical groupings of [topics](reference-terminology.md#topic).

+

+Namespaces can be managed via:

+

+* The [`namespaces`](reference-pulsar-admin.md#namespaces) command of the [`pulsar-admin`](reference-pulsar-admin.md) tool

+* The `/admin/v2/namespaces` endpoint of the admin {@inject: rest:REST:/} API

+* The `namespaces` method of the {@inject: javadoc:PulsarAdmin:/admin/org/apache/pulsar/client/admin/PulsarAdmin} object in the [Java API](client-libraries-java.md)

+

+## Namespaces resources

+

+### Create

+

+You can create new namespaces under a given [tenant](reference-terminology.md#tenant).

+

+

+

+

+Use the [`create`](reference-pulsar-admin.md#namespaces-create) subcommand and specify the namespace by name:

+

+```shell

+$ pulsar-admin namespaces create test-tenant/test-namespace

+```

+

+

+

+{@inject: endpoint|PUT|/admin/v2/namespaces/:tenant/:namespace|operation/createNamespace}

+

+

+

+```java

+admin.namespaces().createNamespace(namespace);

+```
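In the Java examples, `namespace` is the full `tenant/namespace` name (for example, `test-tenant/test-namespace`), while the REST paths use the two parts as separate segments. The sketch below, built around a hypothetical `TenantNamespace` class, shows one way to split and check such a name before calling the API. It only enforces the two-part shape and does not reproduce Pulsar's own naming rules.

```java
// Hypothetical helper: splits a "tenant/namespace" name into its two parts.
final class TenantNamespace {
    final String tenant;
    final String namespace;

    private TenantNamespace(String tenant, String namespace) {
        this.tenant = tenant;
        this.namespace = namespace;
    }

    static TenantNamespace parse(String fullName) {
        // A limit of -1 keeps trailing empty strings, so "tenant/" is rejected too.
        String[] parts = fullName.split("/", -1);
        if (parts.length != 2 || parts[0].isEmpty() || parts[1].isEmpty()) {
            throw new IllegalArgumentException("Expected <tenant>/<namespace>, got: " + fullName);
        }
        return new TenantNamespace(parts[0], parts[1]);
    }

    // Path shared by the create, get-policies, and delete endpoints in this section.
    String restPath() {
        return "/admin/v2/namespaces/" + tenant + "/" + namespace;
    }
}
```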

+

+

+### Get policies

+

+You can fetch the current policies associated with a namespace at any time.

+

+

+

+

+Use the [`policies`](reference-pulsar-admin.md#namespaces-policies) subcommand and specify the namespace:

+

+```shell

+$ pulsar-admin namespaces policies test-tenant/test-namespace

+{

+ "auth_policies": {

+ "namespace_auth": {},

+ "destination_auth": {}

+ },

+ "replication_clusters": [],

+ "bundles_activated": true,

+ "bundles": {

+ "boundaries": [

+ "0x00000000",

+ "0xffffffff"

+ ],

+ "numBundles": 1

+ },

+ "backlog_quota_map": {},

+ "persistence": null,

+ "latency_stats_sample_rate": {},

+ "message_ttl_in_seconds": 0,

+ "retention_policies": null,

+ "deleted": false

+}

+```

+

+

+

+{@inject: endpoint|GET|/admin/v2/namespaces/:tenant/:namespace|operation/getPolicies}

+

+

+

+```java

+admin.namespaces().getPolicies(namespace);

+```
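The `bundles` entry in the policies output lists the bundle `boundaries`; each pair of consecutive boundaries delimits one bundle, which is why two boundaries give `numBundles: 1`. A small sketch, using a hypothetical `BundleBoundaries` class, that turns the boundaries into the `lower_upper` range strings passed to commands such as `unload --bundle` and `split-bundle --bundle`:

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: converts the "boundaries" array from the policies output
// into bundle range strings of the form <lower>_<upper>.
final class BundleBoundaries {
    private BundleBoundaries() {}

    static List<String> toBundleRanges(List<String> boundaries) {
        if (boundaries.size() < 2) {
            throw new IllegalArgumentException("Need at least two boundaries");
        }
        List<String> ranges = new ArrayList<>();
        // Each pair of consecutive boundaries delimits one bundle.
        for (int i = 0; i + 1 < boundaries.size(); i++) {
            ranges.add(boundaries.get(i) + "_" + boundaries.get(i + 1));
        }
        return ranges;
    }
}
```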

+

+

+### List namespaces within a tenant

+

+You can list all namespaces within a given Pulsar [tenant](reference-terminology.md#tenant).

+

+

+

+

+Use the [`list`](reference-pulsar-admin.md#namespaces-list) subcommand and specify the tenant:

+

+```shell

+$ pulsar-admin namespaces list test-tenant

+test-tenant/ns1

+test-tenant/ns2

+```

+

+

+

+{@inject: endpoint|GET|/admin/v2/namespaces/:tenant|operation/getTenantNamespaces}

+

+

+

+```java

+admin.namespaces().getNamespaces(tenant);

+```

+

+

+### Delete

+

+You can delete existing namespaces from a tenant.

+

+

+

+

+Use the [`delete`](reference-pulsar-admin.md#namespaces-delete) subcommand and specify the namespace:

+

+```shell

+$ pulsar-admin namespaces delete test-tenant/ns1

+```

+

+

+

+{@inject: endpoint|DELETE|/admin/v2/namespaces/:tenant/:namespace|operation/deleteNamespace}

+

+

+

+```java

+admin.namespaces().deleteNamespace(namespace);

+```

+

+

+#### Set replication cluster

+

+Set the replication clusters for a namespace so that Pulsar can internally replicate published messages from one colo (data center) to another.

+

+

+

+

+```

+$ pulsar-admin namespaces set-clusters test-tenant/ns1 \

+ --clusters cl1

+```

+

+

+

+```

+{@inject: endpoint|POST|/admin/v2/namespaces/:tenant/:namespace/replication|operation/setNamespaceReplicationClusters}

+```

+

+

+

+```java

+admin.namespaces().setNamespaceReplicationClusters(namespace, clusters);

+```

+

+

+#### Get replication cluster

+

+Get the list of replication clusters for a given namespace.

+

+

+

+

+```

+$ pulsar-admin namespaces get-clusters test-tenant/ns1

+```

+

+```

+cl2

+```

+

+

+

+```

+{@inject: endpoint|GET|/admin/v2/namespaces/{tenant}/{namespace}/replication|operation/getNamespaceReplicationClusters}

+```

+

+

+

+```java

+admin.namespaces().getNamespaceReplicationClusters(namespace);

+```

+

+

+#### Set backlog quota policies

+

+A backlog quota lets the broker restrict the bandwidth or storage of a namespace once it reaches a certain threshold. An admin can set the limit and the action the broker takes after the limit is reached:

+

+ 1. `producer_request_hold`: the broker holds produce requests and does not persist their payloads.

+

+ 2. `producer_exception`: the broker disconnects from the client and returns an exception.

+

+ 3. `consumer_backlog_eviction`: the broker starts discarding backlog messages.

+

+ The backlog quota restriction is applied through the `destination_storage` backlog quota type.

+

+

+

+

+```

+$ pulsar-admin namespaces set-backlog-quota --limit 10 --policy producer_request_hold test-tenant/ns1

+```

+

+```

+N/A

+```

+

+

+

+```

+{@inject: endpoint|POST|/admin/v2/namespaces/{tenant}/{namespace}/backlogQuota|operation/setBacklogQuota}

+```

+

+

+

+```java

+admin.namespaces().setBacklogQuota(namespace, new BacklogQuota(limit, policy));

+```

+

+

+#### Get backlog quota policies

+

+Get the configured backlog quota for a given namespace.

+

+

+

+

+```

+$ pulsar-admin namespaces get-backlog-quotas test-tenant/ns1

+```

+

+```json

+{

+ "destination_storage": {

+ "limit": 10,

+ "policy": "producer_request_hold"

+ }

+}

+```

+

+

+

+```

+{@inject: endpoint|GET|/admin/v2/namespaces/{tenant}/{namespace}/backlogQuotaMap|operation/getBacklogQuotaMap}

+```

+

+

+

+```java

+admin.namespaces().getBacklogQuotaMap(namespace);

+```

+

+

+#### Remove backlog quota policies

+

+Remove the backlog quota policies for a given namespace.

+

+

+

+

+```

+$ pulsar-admin namespaces remove-backlog-quota test-tenant/ns1

+```

+

+```

+N/A

+```

+

+

+

+```

+{@inject: endpoint|DELETE|/admin/v2/namespaces/{tenant}/{namespace}/backlogQuota|operation/removeBacklogQuota}

+```

+

+

+

+```java

+admin.namespaces().removeBacklogQuota(namespace, backlogQuotaType);

+```

+

+

+#### Set persistence policies

+

+Persistence policies configure the persistence level of all topic messages under a given namespace.

+

+ - `bookkeeper-ack-quorum`: number of acks (guaranteed copies) to wait for on each entry; default: 0

+

+ - `bookkeeper-ensemble`: number of bookies to use for a topic; default: 0

+

+ - `bookkeeper-write-quorum`: number of writes to make for each entry; default: 0

+

+ - `ml-mark-delete-max-rate`: throttling rate of mark-delete operations (0 means no throttle); default: 0.0

+

+

+

+

+```

+$ pulsar-admin namespaces set-persistence --bookkeeper-ack-quorum 2 --bookkeeper-ensemble 3 --bookkeeper-write-quorum 2 --ml-mark-delete-max-rate 0 test-tenant/ns1

+```

+

+```

+N/A

+```

+

+

+

+```

+{@inject: endpoint|POST|/admin/v2/namespaces/{tenant}/{namespace}/persistence|operation/setPersistence}

+```

+

+

+

+```java

+admin.namespaces().setPersistence(namespace, new PersistencePolicies(bookkeeperEnsemble, bookkeeperWriteQuorum, bookkeeperAckQuorum, managedLedgerMaxMarkDeleteRate));

+```
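BookKeeper expects the ensemble size to be at least the write quorum, and the write quorum to be at least the ack quorum (ensemble >= write quorum >= ack quorum). A hypothetical pre-flight check like the one below can catch an invalid combination before calling `setPersistence`. It assumes explicit positive quorum values and does not model how the broker treats the default of 0.

```java
// Hypothetical pre-flight check for set-persistence arguments (not a Pulsar API).
final class PersistenceCheck {
    private PersistenceCheck() {}

    static void validate(int ensemble, int writeQuorum, int ackQuorum, double markDeleteRate) {
        if (ackQuorum < 1) {
            throw new IllegalArgumentException("ack quorum must be at least 1");
        }
        if (writeQuorum < ackQuorum) {
            throw new IllegalArgumentException("write quorum must be >= ack quorum");
        }
        if (ensemble < writeQuorum) {
            throw new IllegalArgumentException("ensemble must be >= write quorum");
        }
        if (markDeleteRate < 0) {
            // 0 means "no throttle" per the option description above.
            throw new IllegalArgumentException("mark-delete rate must not be negative");
        }
    }
}
```

With the values from the CLI example (ensemble 3, write quorum 2, ack quorum 2), the check passes.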

+

+

+#### Get persistence policies

+

+Get the configured persistence policies of a given namespace.

+

+

+

+

+```

+$ pulsar-admin namespaces get-persistence test-tenant/ns1

+```

+

+```json

+{

+ "bookkeeperEnsemble": 3,

+ "bookkeeperWriteQuorum": 2,

+ "bookkeeperAckQuorum": 2,

+ "managedLedgerMaxMarkDeleteRate": 0

+}

+```

+

+

+

+```

+{@inject: endpoint|GET|/admin/v2/namespaces/{tenant}/{namespace}/persistence|operation/getPersistence}

+```

+

+

+

+```java

+admin.namespaces().getPersistence(namespace);

+```

+

+

+#### Unload namespace bundle

+

+A namespace bundle is a virtual group of topics that belong to the same namespace. If a broker is overloaded by the number of bundles it serves, use this command to unload a bundle from that broker so that another, less-loaded broker can serve it. Namespace bundle ranges span from 0x00000000 to 0xffffffff.

+

+

+

+

+```

+$ pulsar-admin namespaces unload --bundle 0x00000000_0xffffffff test-tenant/ns1

+```

+

+```

+N/A

+```

+

+

+

+```

+{@inject: endpoint|PUT|/admin/v2/namespaces/{tenant}/{namespace}/{bundle}/unload|operation/unloadNamespaceBundle}

+```

+

+

+

+```java

+admin.namespaces().unloadNamespaceBundle(namespace, bundle);

+```
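A bundle is addressed by its hash range, written as `lower_upper` in hexadecimal. The sketch below, built around a hypothetical `BundleRange` class, parses that string and checks whether a 32-bit topic hash falls inside it. It assumes each range includes its lower bound and excludes its upper bound, except that the last bundle also includes `0xffffffff`; the hash function Pulsar applies to topic names is not modeled.

```java
// Hypothetical model of a bundle range string such as "0x00000000_0xffffffff".
final class BundleRange {
    static final long MAX_HASH = 0xffffffffL;

    final long lower;
    final long upper;

    private BundleRange(long lower, long upper) {
        this.lower = lower;
        this.upper = upper;
    }

    static BundleRange parse(String range) {
        String[] parts = range.split("_");
        if (parts.length != 2 || !parts[0].startsWith("0x") || !parts[1].startsWith("0x")) {
            throw new IllegalArgumentException("Expected 0x<lower>_0x<upper>, got: " + range);
        }
        long lo = Long.parseLong(parts[0].substring(2), 16);
        long hi = Long.parseLong(parts[1].substring(2), 16);
        if (lo < 0 || lo >= hi || hi > MAX_HASH) {
            throw new IllegalArgumentException("Invalid bundle range: " + range);
        }
        return new BundleRange(lo, hi);
    }

    // Lower bound inclusive, upper bound exclusive, except that the last bundle keeps MAX_HASH.
    boolean contains(long hash) {
        return hash >= lower && (hash < upper || (upper == MAX_HASH && hash == MAX_HASH));
    }

    @Override
    public String toString() {
        return String.format("0x%08x_0x%08x", lower, upper);
    }
}
```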

+

+

+#### Set message-ttl

+

+Configure the time to live (TTL) of messages, in seconds.

+

+

+

+

+```

+$ pulsar-admin namespaces set-message-ttl --messageTTL 100 test-tenant/ns1

+```

+

+```

+N/A

+```

+

+

+

+```

+{@inject: endpoint|POST|/admin/v2/namespaces/{tenant}/{namespace}/messageTTL|operation/setNamespaceMessageTTL}

+```

+

+

+

+```java

+admin.namespaces().setNamespaceMessageTTL(namespace, messageTTL);

+```
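Conceptually, a message becomes eligible for expiry once its age exceeds the TTL. The sketch below, using a hypothetical `MessageTtl` class, expresses that rule with `java.time`. It assumes a TTL of 0 means "disabled" (the default policies output shows `message_ttl_in_seconds: 0`), and it ignores the fact that the broker applies expiry periodically rather than at the exact instant a message crosses the TTL.

```java
import java.time.Duration;
import java.time.Instant;

// Hypothetical, simplified TTL rule; not the broker's expiry implementation.
final class MessageTtl {
    private MessageTtl() {}

    static boolean isExpired(Instant publishTime, int ttlSeconds, Instant now) {
        if (ttlSeconds <= 0) {
            return false; // Assumption: 0 (or less) disables TTL.
        }
        Duration age = Duration.between(publishTime, now);
        return age.compareTo(Duration.ofSeconds(ttlSeconds)) > 0;
    }
}
```

With `--messageTTL 100`, a message published at time 0 is still live at 100 seconds and eligible for expiry at 101 seconds under this rule.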

+

+

+#### Get message-ttl

+

+Get the message TTL configured for a namespace.

+

+

+

+

+```

+$ pulsar-admin namespaces get-message-ttl test-tenant/ns1

+```

+

+```

+100

+```

+

+

+

+```

+{@inject: endpoint|GET|/admin/v2/namespaces/{tenant}/{namespace}/messageTTL|operation/getNamespaceMessageTTL}

+```

+

+

+

+```java

+admin.namespaces().getNamespaceMessageTTL(namespace);

+```

+

+

+#### Split bundle

+

+Each namespace bundle can contain multiple topics, and each bundle can be served by only one broker.

+If a single bundle creates excessive load on a broker, an admin can split the bundle with this command so that one or more of the new bundles can be unloaded, spreading the load across brokers.

+

+

+

+

+```

+$ pulsar-admin namespaces split-bundle --bundle 0x00000000_0xffffffff test-tenant/ns1

+```

+

+```

+N/A

+```

+

+

+

+```

+{@inject: endpoint|PUT|/admin/v2/namespaces/{tenant}/{namespace}/{bundle}/split|operation/splitNamespaceBundle}

+```

+

+

+

+```java

+admin.namespaces().splitNamespaceBundle(namespace, bundle);

+```
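Splitting a bundle replaces one hash range with smaller ones. Assuming the range is divided evenly at its midpoint (our understanding of the default split behavior, not something this page states), the hypothetical helper below computes the two resulting ranges; for `0x00000000_0xffffffff` they are `0x00000000_0x7fffffff` and `0x7fffffff_0xffffffff`.

```java
// Hypothetical sketch of an even (midpoint) split of a bundle hash range.
final class BundleSplit {
    private BundleSplit() {}

    static String[] splitInHalf(long lower, long upper) {
        if (lower < 0 || upper > 0xffffffffL || upper - lower < 2) {
            throw new IllegalArgumentException("Range too small or out of bounds to split");
        }
        // Integer midpoint; the midpoint becomes the shared boundary of both halves.
        long mid = lower + (upper - lower) / 2;
        return new String[] {format(lower, mid), format(mid, upper)};
    }

    private static String format(long lo, long hi) {
        return String.format("0x%08x_0x%08x", lo, hi);
    }
}
```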

+

+

+#### Clear backlog

+

+Clear the message backlog of all topics that belong to a specific namespace. You can also clear the backlog for a specific subscription.

+

+

+

+

+```

+$ pulsar-admin namespaces clear-backlog --sub my-subscription test-tenant/ns1

+```

+

+```

+N/A

+```

+

+

+

+```

+{@inject: endpoint|POST|/admin/v2/namespaces/{tenant}/{namespace}/clearBacklog|operation/clearNamespaceBacklogForSubscription}

+```

+

+

+

+```java

+admin.namespaces().clearNamespaceBacklogForSubscription(namespace, subscription);

+```

+

+

+#### Clear bundle backlog

+

+Clear the message backlog of all topics that belong to a specific namespace bundle. You can also clear the backlog for a specific subscription.

+

+

+

+

+```

+$ pulsar-admin namespaces clear-backlog --bundle 0x00000000_0xffffffff --sub my-subscription test-tenant/ns1

+```

+

+```

+N/A

+```

+

+

+

+```

+{@inject: endpoint|POST|/admin/v2/namespaces/{tenant}/{namespace}/{bundle}/clearBacklog|operation/clearNamespaceBundleBacklogForSubscription}

+```

+

+

+

+```java

+admin.namespaces().clearNamespaceBundleBacklogForSubscription(namespace, bundle, subscription);

+```

+

+

+#### Set retention

+

+Each namespace contains multiple topics, and you can cap the retention size (storage size) of each topic at a specific threshold or keep its data for a certain period. This command configures the retention size and time of topics in a given namespace.

+

+

+

+

+```

+$ pulsar-admin namespaces set-retention --size 10 --time 100 test-tenant/ns1

+```

+

+```

+N/A

+```

+

+

+

+```

+{@inject: endpoint|POST|/admin/v2/namespaces/{tenant}/{namespace}/retention|operation/setRetention}

+```

+

+

+

+```java

+admin.namespaces().setRetention(namespace, new RetentionPolicies(retentionTimeInMin, retentionSizeInMB));

+```

+

+

+#### Get retention

+

+Get the retention policies of a given namespace.

+

+

+

+

+```

+$ pulsar-admin namespaces get-retention test-tenant/ns1

+```

+

+```json

+{

+ "retentionTimeInMinutes": 10,

+ "retentionSizeInMB": 100

+}

+```

+

+

+

+```

+{@inject: endpoint|GET|/admin/v2/namespaces/{tenant}/{namespace}/retention|operation/getRetention}

+```

+

+

+

+```java

+admin.namespaces().getRetention(namespace);

+```

+

+

+#### Set dispatch throttling

+

+Set the message dispatch rate for all topics under a given namespace.

+You can restrict the dispatch rate by the number of messages per period (`msg-dispatch-rate`) or by the number of bytes per period (`byte-dispatch-rate`).

+The period is in seconds and is configured with `dispatch-rate-period`. The default value of `msg-dispatch-rate` and `byte-dispatch-rate` is -1, which

+disables throttling.

+

+> **Note**

+> - If neither `clusterDispatchRate` nor `topicDispatchRate` is configured, dispatch throttling is disabled.

+>

+> - If `topicDispatchRate` is not configured, `clusterDispatchRate` takes effect.

+>

+> - If `topicDispatchRate` is configured, `topicDispatchRate` takes effect.

+

+

+

+

+```

+$ pulsar-admin namespaces set-dispatch-rate test-tenant/ns1 \

+ --msg-dispatch-rate 1000 \

+ --byte-dispatch-rate 1048576 \

+ --dispatch-rate-period 1

+```

+

+

+

+```

+{@inject: endpoint|POST|/admin/v2/namespaces/{tenant}/{namespace}/dispatchRate|operation/setDispatchRate}

+```

+

+

+

+```java

+admin.namespaces().setDispatchRate(namespace, new DispatchRate(1000, 1048576, 1));

+```
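To make the three parameters concrete: with `--msg-dispatch-rate 1000 --byte-dispatch-rate 1048576 --dispatch-rate-period 1`, each topic may dispatch up to 1000 messages and 1 MiB per one-second period. The hypothetical `DispatchWindow` class below models that as a simple fixed-window counter. It illustrates the documented limits only and is not Pulsar's rate limiter; it also treats any non-positive limit as unlimited, whereas the text above only documents -1.

```java
// Hypothetical fixed-window model of per-period dispatch limits (not Pulsar's implementation).
final class DispatchWindow {
    private final long maxMsgs;      // <= 0 means unlimited in this sketch
    private final long maxBytes;     // <= 0 means unlimited in this sketch
    private final long periodMillis;
    private long windowStart;
    private long msgs;
    private long bytes;

    DispatchWindow(long maxMsgs, long maxBytes, int periodSeconds, long startMillis) {
        if (periodSeconds <= 0) {
            throw new IllegalArgumentException("period must be positive");
        }
        this.maxMsgs = maxMsgs;
        this.maxBytes = maxBytes;
        this.periodMillis = periodSeconds * 1000L;
        this.windowStart = startMillis;
    }

    // Returns true and records the message if it fits in the current period's budget.
    boolean tryDispatch(long sizeBytes, long nowMillis) {
        if (nowMillis - windowStart >= periodMillis) {
            // Advance to the period containing nowMillis and reset the counters.
            windowStart += ((nowMillis - windowStart) / periodMillis) * periodMillis;
            msgs = 0;
            bytes = 0;
        }
        if (maxMsgs > 0 && msgs + 1 > maxMsgs) {
            return false;
        }
        if (maxBytes > 0 && bytes + sizeBytes > maxBytes) {
            return false;
        }
        msgs++;
        bytes += sizeBytes;
        return true;
    }
}
```

A fixed window is the simplest model to reason about; it allows bursts at window edges, which a token bucket would smooth out.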

+

+

+#### Get configured message-rate

+

+Get the configured dispatch rate for the namespace (topics under this namespace can dispatch at most this many messages and bytes per period).

+

+

+

+

+```

+$ pulsar-admin namespaces get-dispatch-rate test-tenant/ns1

+```

+

+```json

+{

+ "dispatchThrottlingRatePerTopicInMsg" : 1000,

+ "dispatchThrottlingRatePerTopicInByte" : 1048576,

+ "ratePeriodInSecond" : 1

+}

+```

+

+

+

+```

+{@inject: endpoint|GET|/admin/v2/namespaces/{tenant}/{namespace}/dispatchRate|operation/getDispatchRate}

+```

+

+

+

+```java

+admin.namespaces().getDispatchRate(namespace);

+```

+

+

+#### Set dispatch throttling for subscription

+

+Set the message dispatch rate for all subscriptions of topics under a given namespace.

+You can restrict the dispatch rate by the number of messages per period (`msg-dispatch-rate`) or by the number of bytes per period (`byte-dispatch-rate`).

+The period is in seconds and is configured with `dispatch-rate-period`. The default value of `msg-dispatch-rate` and `byte-dispatch-rate` is -1, which

+disables throttling.

+

+

+

+

+```

+$ pulsar-admin namespaces set-subscription-dispatch-rate test-tenant/ns1 \

+ --msg-dispatch-rate 1000 \

+ --byte-dispatch-rate 1048576 \

+ --dispatch-rate-period 1

+```

+

+

+

+```

+{@inject: endpoint|POST|/admin/v2/namespaces/{tenant}/{namespace}/subscriptionDispatchRate|operation/setDispatchRate}

+```

+

+

+

+```java

+admin.namespaces().setSubscriptionDispatchRate(namespace, new DispatchRate(1000, 1048576, 1));

+```

+

+

+#### Get configured message-rate for subscription

+

+Get the configured subscription dispatch rate for the namespace (each subscription of a topic under this namespace can dispatch at most this many messages and bytes per period).

+

+

+

+

+```

+$ pulsar-admin namespaces get-subscription-dispatch-rate test-tenant/ns1

+```

+

+```json

+{

+ "dispatchThrottlingRatePerTopicInMsg" : 1000,

+ "dispatchThrottlingRatePerTopicInByte" : 1048576,

+ "ratePeriodInSecond" : 1

+}

+```

+

+

+

+```

+{@inject: endpoint|GET|/admin/v2/namespaces/{tenant}/{namespace}/subscriptionDispatchRate|operation/getDispatchRate}

+```

+

+

+

+```java

+admin.namespaces().getSubscriptionDispatchRate(namespace);

+```

+

+

+#### Set dispatch throttling for replicator

+

+Set the message dispatch rate for all replicators between replication clusters under a given namespace.

+You can restrict the dispatch rate by the number of messages per period (`msg-dispatch-rate`) or by the number of bytes per period (`byte-dispatch-rate`).

+The period is in seconds and is configured with `dispatch-rate-period`. The default value of `msg-dispatch-rate` and `byte-dispatch-rate` is -1, which

+disables throttling.

+

+

+

+

+```

+$ pulsar-admin namespaces set-replicator-dispatch-rate test-tenant/ns1 \

+ --msg-dispatch-rate 1000 \

+ --byte-dispatch-rate 1048576 \

+ --dispatch-rate-period 1

+```

+

+

+

+```

+{@inject: endpoint|POST|/admin/v2/namespaces/{tenant}/{namespace}/replicatorDispatchRate|operation/setDispatchRate}

+```

+

+

+

+```java

+admin.namespaces().setReplicatorDispatchRate(namespace, new DispatchRate(1000, 1048576, 1));

+```

+

+

+#### Get configured message-rate for replicator

+

+Get the configured replicator dispatch rate for the namespace (each replicator under this namespace can dispatch at most this many messages and bytes per period).

+

+

+

+

+```

+$ pulsar-admin namespaces get-replicator-dispatch-rate test-tenant/ns1

+```

+

+```json

+{

+ "dispatchThrottlingRatePerTopicInMsg" : 1000,

+ "dispatchThrottlingRatePerTopicInByte" : 1048576,

+ "ratePeriodInSecond" : 1

+}

+```

+

+

+

+```

+{@inject: endpoint|GET|/admin/v2/namespaces/{tenant}/{namespace}/replicatorDispatchRate|operation/getDispatchRate}

+```

+

+

+

+```java

+admin.namespaces().getReplicatorDispatchRate(namespace);

+```

+

+

+#### Get deduplication snapshot interval

+

+Get the configured `deduplicationSnapshotInterval` for a namespace. Each topic under the namespace takes a deduplication snapshot according to this interval.

+

+

+

+

+```

+$ pulsar-admin namespaces get-deduplication-snapshot-interval test-tenant/ns1

+```

+

+

+

+```

+{@inject: endpoint|GET|/admin/v2/namespaces/{tenant}/{namespace}/deduplicationSnapshotInterval}

+```

+

+

+

+```java

+admin.namespaces().getDeduplicationSnapshotInterval(namespace);

+```

+

+

+#### Set deduplication snapshot interval

+

+Set the `deduplicationSnapshotInterval` for a namespace. Each topic under the namespace takes a deduplication snapshot according to this interval.

+`brokerDeduplicationEnabled` must be set to `true` for this property to take effect.

+

+

+

+

+```

+$ pulsar-admin namespaces set-deduplication-snapshot-interval test-tenant/ns1 --interval 1000

+```

+

+

+

+```

+{@inject: endpoint|POST|/admin/v2/namespaces/{tenant}/{namespace}/deduplicationSnapshotInterval}

+```

+

+```json

+{

+ "interval": 1000

+}

+```

+

+

+

+```java

+admin.namespaces().setDeduplicationSnapshotInterval(namespace, 1000);

+```

+

+

+#### Remove deduplication snapshot interval

+

+Remove the configured `deduplicationSnapshotInterval` from a namespace.

+

+

+

+

+```

+$ pulsar-admin namespaces remove-deduplication-snapshot-interval test-tenant/ns1

+```

+

+

+

+```

+{@inject: endpoint|POST|/admin/v2/namespaces/{tenant}/{namespace}/deduplicationSnapshotInterval}

+```

+

+

+

+```java

+admin.namespaces().removeDeduplicationSnapshotInterval(namespace);

+```

+

+

+### Namespace isolation

+

+Coming soon.

+

+### Unloading from a broker

+

+You can unload a namespace, or a [namespace bundle](reference-terminology.md#namespace-bundle), from the Pulsar [broker](reference-terminology.md#broker) that is currently responsible for it.

+

+#### pulsar-admin

+

+Use the [`unload`](reference-pulsar-admin.md#unload) subcommand of the [`namespaces`](reference-pulsar-admin.md#namespaces) command.

+

+

+

+

+```shell

+$ pulsar-admin namespaces unload my-tenant/my-ns

+```

+

+

+

+```

+{@inject: endpoint|PUT|/admin/v2/namespaces/{tenant}/{namespace}/unload|operation/unloadNamespace}

+```

+

+

+

+```java

+admin.namespaces().unload(namespace);

+```

+

\ No newline at end of file

diff --git a/site2/website/versioned_docs/version-2.7.0/admin-api-non-partitioned-topics.md b/site2/website/versioned_docs/version-2.7.0/admin-api-non-partitioned-topics.md

new file mode 100644

index 00000000000000..c20551e6369801

--- /dev/null

+++ b/site2/website/versioned_docs/version-2.7.0/admin-api-non-partitioned-topics.md

@@ -0,0 +1,8 @@

+---

+id: version-2.7.0-admin-api-non-partitioned-topics

+title: Managing non-partitioned topics

+sidebar_label: Non-partitioned topics

+original_id: admin-api-non-partitioned-topics

+---

+

+For details of the content, refer to [manage topics](admin-api-topics.md).

\ No newline at end of file

diff --git a/site2/website/versioned_docs/version-2.7.0/admin-api-non-persistent-topics.md b/site2/website/versioned_docs/version-2.7.0/admin-api-non-persistent-topics.md

new file mode 100644

index 00000000000000..601d55bab533d8

--- /dev/null

+++ b/site2/website/versioned_docs/version-2.7.0/admin-api-non-persistent-topics.md

@@ -0,0 +1,8 @@

+---

+id: version-2.7.0-admin-api-non-persistent-topics

+title: Managing non-persistent topics

+sidebar_label: Non-Persistent topics

+original_id: admin-api-non-persistent-topics

+---

+

+For details of the content, refer to [manage topics](admin-api-topics.md).

\ No newline at end of file

diff --git a/site2/website/versioned_docs/version-2.7.0/admin-api-overview.md b/site2/website/versioned_docs/version-2.7.0/admin-api-overview.md

new file mode 100644

index 00000000000000..9930a84f8b6f2e

--- /dev/null

+++ b/site2/website/versioned_docs/version-2.7.0/admin-api-overview.md

@@ -0,0 +1,91 @@

+---

+id: version-2.7.0-admin-api-overview

+title: The Pulsar admin interface

+sidebar_label: Overview

+original_id: admin-api-overview

+---

+

+The Pulsar admin interface enables you to manage all of the important entities in a Pulsar [instance](reference-terminology.md#instance), such as [tenants](reference-terminology.md#tenant), [topics](reference-terminology.md#topic), and [namespaces](reference-terminology.md#namespace).

+

+You can currently interact with the admin interface via:

+

+- Making HTTP calls against the admin {@inject: rest:REST:/} API provided by Pulsar [brokers](reference-terminology.md#broker). Some RESTful APIs may redirect the request to the broker that owns the topic

+ with [`307 Temporary Redirect`](https://developer.mozilla.org/en-US/docs/Web/HTTP/Status/307), so HTTP callers should handle `307 Temporary Redirect`. If you use `curl`, specify `-L`

+ to follow redirects.

+- The `pulsar-admin` CLI tool, which is available in the `bin` folder of your [Pulsar installation](getting-started-standalone.md):

+

+```shell

+$ bin/pulsar-admin

+```

+

+Full documentation for this tool can be found in the [Pulsar command-line tools](reference-pulsar-admin.md) doc.

+

+- A Java client interface.

+

+> #### The REST API is the admin interface

+> Under the hood, both the `pulsar-admin` CLI tool and the Java client use the REST API. If you’d like to implement your own admin interface client, you should use the REST API as well. Full documentation is available in the {@inject: rest:REST:/} API reference.
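If you do write your own client, it needs to follow the `307 Temporary Redirect` responses that some admin endpoints return, much like `curl -L`. A minimal sketch with the JDK's `java.net.http` client follows; the class name, the 10-second connect timeout, and the choice of the get-policies endpoint are illustrative assumptions, and authentication is omitted.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.time.Duration;

// Hypothetical minimal admin REST client setup (no authentication).
final class AdminRestClient {
    private AdminRestClient() {}

    static HttpClient newClient() {
        return HttpClient.newBuilder()
                // NORMAL follows redirects (a 307 keeps the original request method),
                // except from HTTPS to HTTP.
                .followRedirects(HttpClient.Redirect.NORMAL)
                .connectTimeout(Duration.ofSeconds(10))
                .build();
    }

    // GET request for a namespace's policies, for example test-tenant/test-namespace.
    static HttpRequest getPolicies(String webServiceUrl, String tenant, String namespace) {
        return HttpRequest.newBuilder(
                        URI.create(webServiceUrl + "/admin/v2/namespaces/" + tenant + "/" + namespace))
                .header("Accept", "application/json")
                .GET()
                .build();
    }
}
```

Send the request with `newClient().send(request, HttpResponse.BodyHandlers.ofString())`.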

+

+This document shows examples from each of the three available interfaces.

+

+## Admin setup

+

+Each of Pulsar's three admin interfaces (the [`pulsar-admin`](reference-pulsar-admin.md) CLI tool, the [Java admin API](/api/admin), and the {@inject: rest:REST:/} API) requires some special setup if you have [authentication](security-overview.md#authentication-providers) enabled in your Pulsar [instance](reference-terminology.md#instance).

+

+

+### pulsar-admin

+

+If you have [authentication](security-overview.md#authentication-providers) enabled, you need to provide an auth configuration to use the [`pulsar-admin`](reference-pulsar-admin.md) tool. By default, the `pulsar-admin` tool reads its configuration from the [`conf/client.conf`](reference-configuration.md#client) file. The available parameters are as follows:

+

+|Name|Description|Default|

+|----|-----------|-------|

+|webServiceUrl|The web URL for the cluster.|http://localhost:8080/|

+|brokerServiceUrl|The Pulsar protocol URL for the cluster.|pulsar://localhost:6650/|

+|authPlugin|The authentication plugin.| |

+|authParams|The authentication parameters for the cluster, as a comma-separated string.| |

+|useTls|Whether to use TLS for connections to the cluster.|false|

+|tlsAllowInsecureConnection|Whether to accept an untrusted TLS certificate from the broker.|false|

+|tlsTrustCertsFilePath|Path for the trusted TLS certificate file.| |

+
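+`conf/client.conf` is a plain `key=value` file. To illustrate that format (this is not how `pulsar-admin` itself loads the file), the following sketch reads such a file with `java.util.Properties` and falls back to the defaults in the table above for unset keys. The class name is hypothetical, the certificate paths are placeholders, and `org.apache.pulsar.client.impl.auth.AuthenticationTls` is Pulsar's built-in TLS authentication plugin.

+

+```java

+import java.io.IOException;

+import java.io.Reader;

+import java.io.StringReader;

+import java.util.Properties;

+

+public class ClientConfSketch {

+

+    // Reads key=value lines and applies the defaults from the parameter table

+    // for any key that the file does not set.

+    static Properties load(Reader reader) throws IOException {

+        Properties defaults = new Properties();

+        defaults.setProperty("webServiceUrl", "http://localhost:8080/");

+        defaults.setProperty("brokerServiceUrl", "pulsar://localhost:6650/");

+        defaults.setProperty("useTls", "false");

+        defaults.setProperty("tlsAllowInsecureConnection", "false");

+        Properties conf = new Properties(defaults);

+        conf.load(reader);

+        return conf;

+    }

+

+    public static void main(String[] args) throws IOException {

+        // Example client.conf content; the paths are placeholders

+        String sample = String.join("\n",

+                "webServiceUrl=https://localhost:8443/",

+                "authPlugin=org.apache.pulsar.client.impl.auth.AuthenticationTls",

+                "authParams=tlsCertFile:/path/to/client-cert.pem,tlsKeyFile:/path/to/client-key.pem",

+                "useTls=true",

+                "tlsTrustCertsFilePath=/path/to/ca.cert.pem");

+        Properties conf = load(new StringReader(sample));

+        System.out.println(conf.getProperty("webServiceUrl"));

+        // Not set in the sample, so getProperty returns the table default

+        System.out.println(conf.getProperty("brokerServiceUrl"));

+    }

+}

+```

+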

+### REST API

+

+For the documentation of the REST API exposed by Pulsar [brokers](reference-terminology.md#broker), see this reference {@inject: rest:document:/}.

+
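+When authentication is enabled, REST calls must carry credentials too. The following minimal sketch (hypothetical class and method names) builds a request for `GET /admin/v2/namespaces/{tenant}`, which lists a tenant's namespaces, and, assuming token authentication, sends the token in an `Authorization: Bearer` header. Other authentication providers expect different credentials. Send the request with an `HttpClient` that follows `307 Temporary Redirect` responses.

+

+```java

+import java.net.URI;

+import java.net.http.HttpRequest;

+

+public class AuthenticatedAdminRequest {

+

+    // Builds GET {webServiceUrl}/admin/v2/namespaces/{tenant}.

+    // The tenant name is assumed to be URL-safe. When token is non-null, it is

+    // sent as "Authorization: Bearer <token>", as token authentication expects.

+    static HttpRequest listNamespaces(String webServiceUrl, String tenant, String token) {

+        String base = webServiceUrl.endsWith("/")

+                ? webServiceUrl.substring(0, webServiceUrl.length() - 1)

+                : webServiceUrl;

+        HttpRequest.Builder builder = HttpRequest.newBuilder()

+                .uri(URI.create(base + "/admin/v2/namespaces/" + tenant))

+                .GET();

+        if (token != null) {

+            builder.header("Authorization", "Bearer " + token);

+        }

+        return builder.build();

+    }

+

+    public static void main(String[] args) {

+        // "public" is the default tenant; "my-token" is a placeholder value

+        HttpRequest request = listNamespaces("http://localhost:8080/", "public", "my-token");

+        System.out.println(request.method() + " " + request.uri());

+    }

+}

+```

+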

+### Java

+

+To use the Java admin API, create a {@inject: javadoc:PulsarAdmin:/admin/org/apache/pulsar/client/admin/PulsarAdmin} object with a {@inject: javadoc:PulsarAdminBuilder:/admin/org/apache/pulsar/client/admin/PulsarAdminBuilder}, specifying the URL of a Pulsar [broker](reference-terminology.md#broker). Here's a minimal example using `localhost`:

+

+```java

+import org.apache.pulsar.client.admin.PulsarAdmin;

+

+String url = "http://localhost:8080";

+// Fully qualified class name of the auth plugin, if security is enabled

+String authPluginClassName = "com.org.MyAuthPluginClass";

+// Auth parameters, if the auth plugin requires them

+String authParams = "param1=value1";

+// To connect over TLS, use an https:// URL

+boolean tlsAllowInsecureConnection = false;

+String tlsTrustCertsFilePath = null;

+// authentication(...) and build() can throw PulsarClientException; handle or declare it

+PulsarAdmin admin = PulsarAdmin.builder()

+        .authentication(authPluginClassName, authParams)

+        .serviceHttpUrl(url)

+        .tlsTrustCertsFilePath(tlsTrustCertsFilePath)

+        .allowTlsInsecureConnection(tlsAllowInsecureConnection)

+        .build();

+```

+

+If you have multiple brokers, you can list them all in a single multi-host URL, in the same way as for the Pulsar service URL. For example:

+```java

+import org.apache.pulsar.client.admin.PulsarAdmin;

+

+// Multi-host URL: one scheme, then comma-separated host:port pairs

+String url = "http://localhost:8080,localhost:8081,localhost:8082";

+// Fully qualified class name of the auth plugin, if security is enabled

+String authPluginClassName = "com.org.MyAuthPluginClass";

+// Auth parameters, if the auth plugin requires them

+String authParams = "param1=value1";

+boolean tlsAllowInsecureConnection = false;

+String tlsTrustCertsFilePath = null;

+// authentication(...) and build() can throw PulsarClientException; handle or declare it

+PulsarAdmin admin = PulsarAdmin.builder()

+        .authentication(authPluginClassName, authParams)

+        .serviceHttpUrl(url)

+        .tlsTrustCertsFilePath(tlsTrustCertsFilePath)

+        .allowTlsInsecureConnection(tlsAllowInsecureConnection)

+        .build();

+```

+
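+In a multi-host URL, the scheme appears once and is followed by comma-separated `host:port` pairs. The hypothetical helper in this sketch expands such a URL into one URL per broker to make the format concrete. It only illustrates the format and is not Pulsar's own URL parsing.

+

+```java

+import java.util.ArrayList;

+import java.util.List;

+

+public class MultiHostUrlSketch {

+

+    // Splits "scheme://host1:port1,host2:port2,..." into one URL per host,

+    // reusing the single scheme prefix for every entry.

+    static List<String> expand(String multiHostUrl) {

+        int schemeEnd = multiHostUrl.indexOf("://");

+        if (schemeEnd < 0) {

+            throw new IllegalArgumentException("missing scheme: " + multiHostUrl);

+        }

+        String scheme = multiHostUrl.substring(0, schemeEnd + 3);

+        List<String> urls = new ArrayList<>();

+        for (String host : multiHostUrl.substring(schemeEnd + 3).split(",")) {

+            urls.add(scheme + host.trim());

+        }

+        return urls;

+    }

+

+    public static void main(String[] args) {

+        System.out.println(expand("http://localhost:8080,localhost:8081,localhost:8082"));

+    }

+}

+```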

diff --git a/site2/website/versioned_docs/version-2.7.0/admin-api-partitioned-topics.md b/site2/website/versioned_docs/version-2.7.0/admin-api-partitioned-topics.md

new file mode 100644

index 00000000000000..ae6c496a6f20e3

--- /dev/null