diff --git a/docs/faq/env_var.md b/docs/faq/env_var.md

index c1c23ba969d2..ffde628d83a3 100644

--- a/docs/faq/env_var.md

+++ b/docs/faq/env_var.md

@@ -280,6 +280,11 @@ When USE_PROFILER is enabled in Makefile or CMake, the following environments ca

- Values: Int ```(default=4)```

- This variable controls how many CuDNN dropout state resources to create for each GPU context for use in operator.

+* MXNET_SUBGRAPH_BACKEND

+ - Values: String ```(default="")```

+ - This variable controls the subgraph partitioning in MXNet.

+ - This variable is used to perform MKL-DNN FP32 operator fusion and quantization. Please refer to the [MKL-DNN operator list](../tutorials/mkldnn/operator_list.md) for how this variable is used and the list of fusion passes.

+

* MXNET_SAFE_ACCUMULATION

- Values: Values: 0(false) or 1(true) ```(default=0)```

- If this variable is set, the accumulation will enter the safe mode, meaning accumulation is done in a data type of higher precision than

diff --git a/docs/faq/perf.md b/docs/faq/perf.md

index e1318b843a03..62b40247081c 100644

--- a/docs/faq/perf.md

+++ b/docs/faq/perf.md

@@ -34,8 +34,13 @@ Performance is mainly affected by the following 4 factors:

## Intel CPU

-For using Intel Xeon CPUs for training and inference, we suggest enabling

-`USE_MKLDNN = 1` in `config.mk`.

+When using Intel Xeon CPUs for training and inference, the `mxnet-mkl` package is recommended. Adding `--pre` installs a nightly build from master. Without it you will install the latest patched release of MXNet:

+

+```

+$ pip install mxnet-mkl [--pre]

+```

+

+Or build MXNet from source code with `USE_MKLDNN=1`. For Linux users, `USE_MKLDNN=1` will be turned on by default.

We also find that setting the following environment variables can help:

diff --git a/docs/install/index.md b/docs/install/index.md

index 10db8d95b44a..ea93d40e0f8c 100644

--- a/docs/install/index.md

+++ b/docs/install/index.md

@@ -124,6 +124,12 @@ Indicate your preferred configuration. Then, follow the customized commands to i

$ pip install mxnet

```

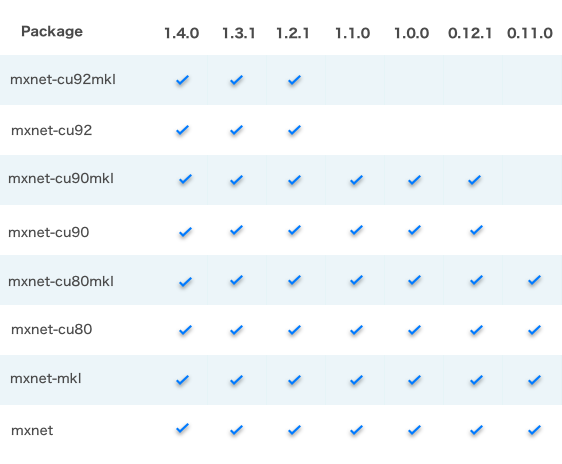

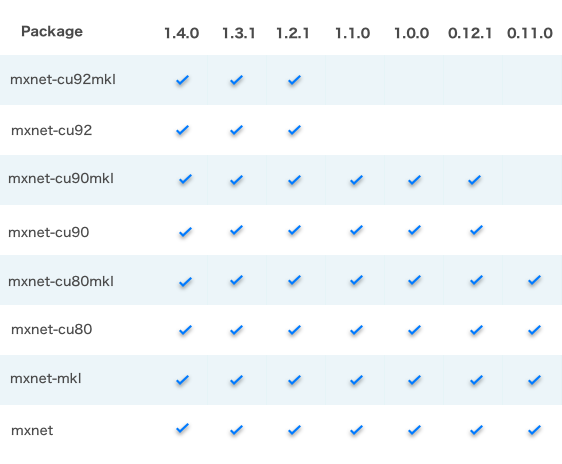

+MKL-DNN enabled pip packages are optimized for Intel hardware. You can find performance numbers in the MXNet tuning guide.

+

+```

+$ pip install mxnet-mkl==1.4.0

+```

+

@@ -131,6 +137,12 @@ $ pip install mxnet

$ pip install mxnet==1.3.1

```

+MKL-DNN enabled pip packages are optimized for Intel hardware. You can find performance numbers in the

MXNet tuning guide.

+

+```

+$ pip install mxnet-mkl==1.3.1

+```

+

@@ -138,6 +150,12 @@ $ pip install mxnet==1.3.1

$ pip install mxnet==1.2.1

```

+MKL-DNN enabled pip packages are optimized for Intel hardware. You can find performance numbers in the

MXNet tuning guide.

+

+```

+$ pip install mxnet-mkl==1.2.1

+```

+

@@ -185,9 +203,15 @@ $ pip install mxnet==0.11.0

$ pip install mxnet --pre

```

+MKL-DNN enabled pip packages are optimized for Intel hardware. You can find performance numbers in the

MXNet tuning guide.

+

+```

+$ pip install mxnet-mkl --pre

+```

+

-MXNet offers MKL pip packages that will be much faster when running on Intel hardware.

+

Check the chart below for other options, refer to PyPI for other MXNet pip packages, or validate your MXNet installation.

diff --git a/docs/tutorials/mkldnn/MKLDNN_README.md b/docs/tutorials/mkldnn/MKLDNN_README.md

index c5779670cd87..2a7cd40ac291 100644

--- a/docs/tutorials/mkldnn/MKLDNN_README.md

+++ b/docs/tutorials/mkldnn/MKLDNN_README.md

@@ -1,25 +1,27 @@

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

# Build/Install MXNet with MKL-DNN

A better training and inference performance is expected to be achieved on Intel-Architecture CPUs with MXNet built with [Intel MKL-DNN](https://github.com/intel/mkl-dnn) on multiple operating system, including Linux, Windows and MacOS.

In the following sections, you will find build instructions for MXNet with Intel MKL-DNN on Linux, MacOS and Windows.

+Please find MKL-DNN optimized operators and other features in the [MKL-DNN operator list](../mkldnn/operator_list.md).

+

The detailed performance data collected on Intel Xeon CPU with MXNet built with Intel MKL-DNN can be found [here](https://mxnet.incubator.apache.org/faq/perf.html#intel-cpu).

@@ -306,14 +308,14 @@ Graph optimization by subgraph feature are available in master branch. You can b

```

export MXNET_SUBGRAPH_BACKEND=MKLDNN

```

-

-When `MKLDNN` backend is enabled, advanced control options are avaliable:

-

-```

-export MXNET_DISABLE_MKLDNN_CONV_OPT=1 # disable MKLDNN convolution optimization pass

-export MXNET_DISABLE_MKLDNN_FC_OPT=1 # disable MKLDNN FullyConnected optimization pass

-```

-

+

+When `MKLDNN` backend is enabled, advanced control options are avaliable:

+

+```

+export MXNET_DISABLE_MKLDNN_CONV_OPT=1 # disable MKLDNN convolution optimization pass

+export MXNET_DISABLE_MKLDNN_FC_OPT=1 # disable MKLDNN FullyConnected optimization pass

+```

+

This limitations of this experimental feature are:

diff --git a/docs/tutorials/mkldnn/operator_list.md b/docs/tutorials/mkldnn/operator_list.md

new file mode 100644

index 000000000000..4958f8d9b602

--- /dev/null

+++ b/docs/tutorials/mkldnn/operator_list.md

@@ -0,0 +1,88 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+# MKL-DNN Operator list

+

+MXNet MKL-DNN backend provides optimized implementations for various operators covering a broad range of applications including image classification, object detection, natural language processing.

+

+To help users understanding MKL-DNN backend better, the following table summarizes the list of supported operators, data types and functionalities. A subset of operators support faster training and inference by using a lower precision version. Refer to the following table's `INT8 Inference` column to see which operators are supported.

+

+| Operator | Function | FP32 Training (backward) | FP32 Inference | INT8 Inference |

+| --- | --- | --- | --- | --- |

+| **Convolution** | 1D Convolution | Y | Y | N |

+| | 2D Convolution | Y | Y | Y |

+| | 3D Convolution | Y | Y | N |

+| **Deconvolution** | 2D Deconvolution | Y | Y | N |

+| | 3D Deconvolution | Y | Y | N |

+| **FullyConnected** | 1D-4D input, flatten=True | N | Y | Y |

+| | 1D-4D input, flatten=False | N | Y | Y |

+| **Pooling** | 2D max Pooling | Y | Y | Y |

+| | 2D avg pooling | Y | Y | Y |

+| **BatchNorm** | 2D BatchNorm | Y | Y | N |

+| **LRN** | 2D LRN | Y | Y | N |

+| **Activation** | ReLU | Y | Y | Y |

+| | Tanh | Y | Y | N |

+| | SoftReLU | Y | Y | N |

+| | Sigmoid | Y | Y | N |

+| **softmax** | 1D-4D input | Y | Y | N |

+| **Softmax_output** | 1D-4D input | N | Y | N |

+| **Transpose** | 1D-4D input | N | Y | N |

+| **elemwise_add** | 1D-4D input | Y | Y | Y |

+| **Concat** | 1D-4D input | Y | Y | Y |

+| **slice** | 1D-4D input | N | Y | N |

+| **Quantization** | 1D-4D input | N | N | Y |

+| **Dequantization** | 1D-4D input | N | N | Y |

+| **Requantization** | 1D-4D input | N | N | Y |

+

+Besides direct operator optimizations, we also provide graph fusion passes listed in the table below. Users can choose to enable or disable these fusion patterns through environmental variables.

+

+For example, you can enable all FP32 fusion passes in the following table by:

+

+```

+export MXNET_SUBGRAPH_BACKEND=MKLDNN

+```

+

+And disable `Convolution + Activation` fusion by:

+

+```

+export MXNET_DISABLE_MKLDNN_FUSE_CONV_RELU=1

+```

+

+When generating the corresponding INT8 symbol, users can enable INT8 operator fusion passes as following:

+

+```

+# get qsym after model quantization

+qsym = qsym.get_backend_symbol('MKLDNN_QUANTIZE')

+qsym.save(symbol_name) # fused INT8 operators will be save into the symbol JSON file

+```

+

+| Fusion pattern | Disable |

+| --- | --- |

+| Convolution + Activation | MXNET_DISABLE_MKLDNN_FUSE_CONV_RELU |

+| Convolution + elemwise_add | MXNET_DISABLE_MKLDNN_FUSE_CONV_SUM |

+| Convolution + BatchNorm | MXNET_DISABLE_MKLDNN_FUSE_CONV_BN |

+| Convolution + Activation + elemwise_add | |

+| Convolution + BatchNorm + Activation + elemwise_add | |

+| FullyConnected + Activation(ReLU) | MXNET_DISABLE_MKLDNN_FUSE_FC_RELU |

+| Convolution (INT8) + re-quantization | |

+| FullyConnected (INT8) + re-quantization | |

+| FullyConnected (INT8) + re-quantization + de-quantization | |

+

+

+To install MXNet MKL-DNN backend, please refer to [MKL-DNN backend readme](MKLDNN_README.md)

+

+For performance numbers, please refer to [performance on Intel CPU](../../faq/perf.md#intel-cpu)

diff --git a/tests/tutorials/test_sanity_tutorials.py b/tests/tutorials/test_sanity_tutorials.py

index 7865000c7608..f89c23484568 100644

--- a/tests/tutorials/test_sanity_tutorials.py

+++ b/tests/tutorials/test_sanity_tutorials.py

@@ -35,6 +35,7 @@

'gluon/index.md',

'mkldnn/index.md',

'mkldnn/MKLDNN_README.md',

+ 'mkldnn/operator_list.md',

'nlp/index.md',

'onnx/index.md',

'python/index.md',

diff --git a/docs/tutorials/mkldnn/MKLDNN_README.md b/docs/tutorials/mkldnn/MKLDNN_README.md

index c5779670cd87..2a7cd40ac291 100644

--- a/docs/tutorials/mkldnn/MKLDNN_README.md

+++ b/docs/tutorials/mkldnn/MKLDNN_README.md

@@ -1,25 +1,27 @@

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

# Build/Install MXNet with MKL-DNN

A better training and inference performance is expected to be achieved on Intel-Architecture CPUs with MXNet built with [Intel MKL-DNN](https://github.com/intel/mkl-dnn) on multiple operating system, including Linux, Windows and MacOS.

In the following sections, you will find build instructions for MXNet with Intel MKL-DNN on Linux, MacOS and Windows.

+Please find MKL-DNN optimized operators and other features in the [MKL-DNN operator list](../mkldnn/operator_list.md).

+

The detailed performance data collected on Intel Xeon CPU with MXNet built with Intel MKL-DNN can be found [here](https://mxnet.incubator.apache.org/faq/perf.html#intel-cpu).

@@ -306,14 +308,14 @@ Graph optimization by subgraph feature are available in master branch. You can b

```

export MXNET_SUBGRAPH_BACKEND=MKLDNN

```

-

-When `MKLDNN` backend is enabled, advanced control options are avaliable:

-

-```

-export MXNET_DISABLE_MKLDNN_CONV_OPT=1 # disable MKLDNN convolution optimization pass

-export MXNET_DISABLE_MKLDNN_FC_OPT=1 # disable MKLDNN FullyConnected optimization pass

-```

-

+

+When `MKLDNN` backend is enabled, advanced control options are avaliable:

+

+```

+export MXNET_DISABLE_MKLDNN_CONV_OPT=1 # disable MKLDNN convolution optimization pass

+export MXNET_DISABLE_MKLDNN_FC_OPT=1 # disable MKLDNN FullyConnected optimization pass

+```

+

This limitations of this experimental feature are:

diff --git a/docs/tutorials/mkldnn/operator_list.md b/docs/tutorials/mkldnn/operator_list.md

new file mode 100644

index 000000000000..4958f8d9b602

--- /dev/null

+++ b/docs/tutorials/mkldnn/operator_list.md

@@ -0,0 +1,88 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+# MKL-DNN Operator list

+

+MXNet MKL-DNN backend provides optimized implementations for various operators covering a broad range of applications including image classification, object detection, natural language processing.

+

+To help users understanding MKL-DNN backend better, the following table summarizes the list of supported operators, data types and functionalities. A subset of operators support faster training and inference by using a lower precision version. Refer to the following table's `INT8 Inference` column to see which operators are supported.

+

+| Operator | Function | FP32 Training (backward) | FP32 Inference | INT8 Inference |

+| --- | --- | --- | --- | --- |

+| **Convolution** | 1D Convolution | Y | Y | N |

+| | 2D Convolution | Y | Y | Y |

+| | 3D Convolution | Y | Y | N |

+| **Deconvolution** | 2D Deconvolution | Y | Y | N |

+| | 3D Deconvolution | Y | Y | N |

+| **FullyConnected** | 1D-4D input, flatten=True | N | Y | Y |

+| | 1D-4D input, flatten=False | N | Y | Y |

+| **Pooling** | 2D max Pooling | Y | Y | Y |

+| | 2D avg pooling | Y | Y | Y |

+| **BatchNorm** | 2D BatchNorm | Y | Y | N |

+| **LRN** | 2D LRN | Y | Y | N |

+| **Activation** | ReLU | Y | Y | Y |

+| | Tanh | Y | Y | N |

+| | SoftReLU | Y | Y | N |

+| | Sigmoid | Y | Y | N |

+| **softmax** | 1D-4D input | Y | Y | N |

+| **Softmax_output** | 1D-4D input | N | Y | N |

+| **Transpose** | 1D-4D input | N | Y | N |

+| **elemwise_add** | 1D-4D input | Y | Y | Y |

+| **Concat** | 1D-4D input | Y | Y | Y |

+| **slice** | 1D-4D input | N | Y | N |

+| **Quantization** | 1D-4D input | N | N | Y |

+| **Dequantization** | 1D-4D input | N | N | Y |

+| **Requantization** | 1D-4D input | N | N | Y |

+

+Besides direct operator optimizations, we also provide graph fusion passes listed in the table below. Users can choose to enable or disable these fusion patterns through environmental variables.

+

+For example, you can enable all FP32 fusion passes in the following table by:

+

+```

+export MXNET_SUBGRAPH_BACKEND=MKLDNN

+```

+

+And disable `Convolution + Activation` fusion by:

+

+```

+export MXNET_DISABLE_MKLDNN_FUSE_CONV_RELU=1

+```

+

+When generating the corresponding INT8 symbol, users can enable INT8 operator fusion passes as following:

+

+```

+# get qsym after model quantization

+qsym = qsym.get_backend_symbol('MKLDNN_QUANTIZE')

+qsym.save(symbol_name) # fused INT8 operators will be save into the symbol JSON file

+```

+

+| Fusion pattern | Disable |

+| --- | --- |

+| Convolution + Activation | MXNET_DISABLE_MKLDNN_FUSE_CONV_RELU |

+| Convolution + elemwise_add | MXNET_DISABLE_MKLDNN_FUSE_CONV_SUM |

+| Convolution + BatchNorm | MXNET_DISABLE_MKLDNN_FUSE_CONV_BN |

+| Convolution + Activation + elemwise_add | |

+| Convolution + BatchNorm + Activation + elemwise_add | |

+| FullyConnected + Activation(ReLU) | MXNET_DISABLE_MKLDNN_FUSE_FC_RELU |

+| Convolution (INT8) + re-quantization | |

+| FullyConnected (INT8) + re-quantization | |

+| FullyConnected (INT8) + re-quantization + de-quantization | |

+

+

+To install MXNet MKL-DNN backend, please refer to [MKL-DNN backend readme](MKLDNN_README.md)

+

+For performance numbers, please refer to [performance on Intel CPU](../../faq/perf.md#intel-cpu)

diff --git a/tests/tutorials/test_sanity_tutorials.py b/tests/tutorials/test_sanity_tutorials.py

index 7865000c7608..f89c23484568 100644

--- a/tests/tutorials/test_sanity_tutorials.py

+++ b/tests/tutorials/test_sanity_tutorials.py

@@ -35,6 +35,7 @@

'gluon/index.md',

'mkldnn/index.md',

'mkldnn/MKLDNN_README.md',

+ 'mkldnn/operator_list.md',

'nlp/index.md',

'onnx/index.md',

'python/index.md',

diff --git a/docs/tutorials/mkldnn/MKLDNN_README.md b/docs/tutorials/mkldnn/MKLDNN_README.md

index c5779670cd87..2a7cd40ac291 100644

--- a/docs/tutorials/mkldnn/MKLDNN_README.md

+++ b/docs/tutorials/mkldnn/MKLDNN_README.md

@@ -1,25 +1,27 @@

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

# Build/Install MXNet with MKL-DNN

A better training and inference performance is expected to be achieved on Intel-Architecture CPUs with MXNet built with [Intel MKL-DNN](https://github.com/intel/mkl-dnn) on multiple operating system, including Linux, Windows and MacOS.

In the following sections, you will find build instructions for MXNet with Intel MKL-DNN on Linux, MacOS and Windows.

+Please find MKL-DNN optimized operators and other features in the [MKL-DNN operator list](../mkldnn/operator_list.md).

+

The detailed performance data collected on Intel Xeon CPU with MXNet built with Intel MKL-DNN can be found [here](https://mxnet.incubator.apache.org/faq/perf.html#intel-cpu).

@@ -306,14 +308,14 @@ Graph optimization by subgraph feature are available in master branch. You can b

```

export MXNET_SUBGRAPH_BACKEND=MKLDNN

```

-

-When `MKLDNN` backend is enabled, advanced control options are avaliable:

-

-```

-export MXNET_DISABLE_MKLDNN_CONV_OPT=1 # disable MKLDNN convolution optimization pass

-export MXNET_DISABLE_MKLDNN_FC_OPT=1 # disable MKLDNN FullyConnected optimization pass

-```

-

+

+When `MKLDNN` backend is enabled, advanced control options are avaliable:

+

+```

+export MXNET_DISABLE_MKLDNN_CONV_OPT=1 # disable MKLDNN convolution optimization pass

+export MXNET_DISABLE_MKLDNN_FC_OPT=1 # disable MKLDNN FullyConnected optimization pass

+```

+

This limitations of this experimental feature are:

diff --git a/docs/tutorials/mkldnn/operator_list.md b/docs/tutorials/mkldnn/operator_list.md

new file mode 100644

index 000000000000..4958f8d9b602

--- /dev/null

+++ b/docs/tutorials/mkldnn/operator_list.md

@@ -0,0 +1,88 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+# MKL-DNN Operator list

+

+MXNet MKL-DNN backend provides optimized implementations for various operators covering a broad range of applications including image classification, object detection, natural language processing.

+

+To help users understanding MKL-DNN backend better, the following table summarizes the list of supported operators, data types and functionalities. A subset of operators support faster training and inference by using a lower precision version. Refer to the following table's `INT8 Inference` column to see which operators are supported.

+

+| Operator | Function | FP32 Training (backward) | FP32 Inference | INT8 Inference |

+| --- | --- | --- | --- | --- |

+| **Convolution** | 1D Convolution | Y | Y | N |

+| | 2D Convolution | Y | Y | Y |

+| | 3D Convolution | Y | Y | N |

+| **Deconvolution** | 2D Deconvolution | Y | Y | N |

+| | 3D Deconvolution | Y | Y | N |

+| **FullyConnected** | 1D-4D input, flatten=True | N | Y | Y |

+| | 1D-4D input, flatten=False | N | Y | Y |

+| **Pooling** | 2D max Pooling | Y | Y | Y |

+| | 2D avg pooling | Y | Y | Y |

+| **BatchNorm** | 2D BatchNorm | Y | Y | N |

+| **LRN** | 2D LRN | Y | Y | N |

+| **Activation** | ReLU | Y | Y | Y |

+| | Tanh | Y | Y | N |

+| | SoftReLU | Y | Y | N |

+| | Sigmoid | Y | Y | N |

+| **softmax** | 1D-4D input | Y | Y | N |

+| **Softmax_output** | 1D-4D input | N | Y | N |

+| **Transpose** | 1D-4D input | N | Y | N |

+| **elemwise_add** | 1D-4D input | Y | Y | Y |

+| **Concat** | 1D-4D input | Y | Y | Y |

+| **slice** | 1D-4D input | N | Y | N |

+| **Quantization** | 1D-4D input | N | N | Y |

+| **Dequantization** | 1D-4D input | N | N | Y |

+| **Requantization** | 1D-4D input | N | N | Y |

+

+Besides direct operator optimizations, we also provide graph fusion passes listed in the table below. Users can choose to enable or disable these fusion patterns through environmental variables.

+

+For example, you can enable all FP32 fusion passes in the following table by:

+

+```

+export MXNET_SUBGRAPH_BACKEND=MKLDNN

+```

+

+And disable `Convolution + Activation` fusion by:

+

+```

+export MXNET_DISABLE_MKLDNN_FUSE_CONV_RELU=1

+```

+

+When generating the corresponding INT8 symbol, users can enable INT8 operator fusion passes as following:

+

+```

+# get qsym after model quantization

+qsym = qsym.get_backend_symbol('MKLDNN_QUANTIZE')

+qsym.save(symbol_name) # fused INT8 operators will be save into the symbol JSON file

+```

+

+| Fusion pattern | Disable |

+| --- | --- |

+| Convolution + Activation | MXNET_DISABLE_MKLDNN_FUSE_CONV_RELU |

+| Convolution + elemwise_add | MXNET_DISABLE_MKLDNN_FUSE_CONV_SUM |

+| Convolution + BatchNorm | MXNET_DISABLE_MKLDNN_FUSE_CONV_BN |

+| Convolution + Activation + elemwise_add | |

+| Convolution + BatchNorm + Activation + elemwise_add | |

+| FullyConnected + Activation(ReLU) | MXNET_DISABLE_MKLDNN_FUSE_FC_RELU |

+| Convolution (INT8) + re-quantization | |

+| FullyConnected (INT8) + re-quantization | |

+| FullyConnected (INT8) + re-quantization + de-quantization | |

+

+

+To install MXNet MKL-DNN backend, please refer to [MKL-DNN backend readme](MKLDNN_README.md)

+

+For performance numbers, please refer to [performance on Intel CPU](../../faq/perf.md#intel-cpu)

diff --git a/tests/tutorials/test_sanity_tutorials.py b/tests/tutorials/test_sanity_tutorials.py

index 7865000c7608..f89c23484568 100644

--- a/tests/tutorials/test_sanity_tutorials.py

+++ b/tests/tutorials/test_sanity_tutorials.py

@@ -35,6 +35,7 @@

'gluon/index.md',

'mkldnn/index.md',

'mkldnn/MKLDNN_README.md',

+ 'mkldnn/operator_list.md',

'nlp/index.md',

'onnx/index.md',

'python/index.md',

diff --git a/docs/tutorials/mkldnn/MKLDNN_README.md b/docs/tutorials/mkldnn/MKLDNN_README.md

index c5779670cd87..2a7cd40ac291 100644

--- a/docs/tutorials/mkldnn/MKLDNN_README.md

+++ b/docs/tutorials/mkldnn/MKLDNN_README.md

@@ -1,25 +1,27 @@

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

# Build/Install MXNet with MKL-DNN

A better training and inference performance is expected to be achieved on Intel-Architecture CPUs with MXNet built with [Intel MKL-DNN](https://github.com/intel/mkl-dnn) on multiple operating system, including Linux, Windows and MacOS.

In the following sections, you will find build instructions for MXNet with Intel MKL-DNN on Linux, MacOS and Windows.

+Please find MKL-DNN optimized operators and other features in the [MKL-DNN operator list](../mkldnn/operator_list.md).

+

The detailed performance data collected on Intel Xeon CPU with MXNet built with Intel MKL-DNN can be found [here](https://mxnet.incubator.apache.org/faq/perf.html#intel-cpu).

@@ -306,14 +308,14 @@ Graph optimization by subgraph feature are available in master branch. You can b

```

export MXNET_SUBGRAPH_BACKEND=MKLDNN

```

-

-When `MKLDNN` backend is enabled, advanced control options are avaliable:

-

-```

-export MXNET_DISABLE_MKLDNN_CONV_OPT=1 # disable MKLDNN convolution optimization pass

-export MXNET_DISABLE_MKLDNN_FC_OPT=1 # disable MKLDNN FullyConnected optimization pass

-```

-

+

+When `MKLDNN` backend is enabled, advanced control options are avaliable:

+

+```

+export MXNET_DISABLE_MKLDNN_CONV_OPT=1 # disable MKLDNN convolution optimization pass

+export MXNET_DISABLE_MKLDNN_FC_OPT=1 # disable MKLDNN FullyConnected optimization pass

+```

+

This limitations of this experimental feature are:

diff --git a/docs/tutorials/mkldnn/operator_list.md b/docs/tutorials/mkldnn/operator_list.md

new file mode 100644

index 000000000000..4958f8d9b602

--- /dev/null

+++ b/docs/tutorials/mkldnn/operator_list.md

@@ -0,0 +1,88 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+# MKL-DNN Operator list

+

+MXNet MKL-DNN backend provides optimized implementations for various operators covering a broad range of applications including image classification, object detection, natural language processing.

+

+To help users understanding MKL-DNN backend better, the following table summarizes the list of supported operators, data types and functionalities. A subset of operators support faster training and inference by using a lower precision version. Refer to the following table's `INT8 Inference` column to see which operators are supported.

+

+| Operator | Function | FP32 Training (backward) | FP32 Inference | INT8 Inference |

+| --- | --- | --- | --- | --- |

+| **Convolution** | 1D Convolution | Y | Y | N |

+| | 2D Convolution | Y | Y | Y |

+| | 3D Convolution | Y | Y | N |

+| **Deconvolution** | 2D Deconvolution | Y | Y | N |

+| | 3D Deconvolution | Y | Y | N |

+| **FullyConnected** | 1D-4D input, flatten=True | N | Y | Y |

+| | 1D-4D input, flatten=False | N | Y | Y |

+| **Pooling** | 2D max Pooling | Y | Y | Y |

+| | 2D avg pooling | Y | Y | Y |

+| **BatchNorm** | 2D BatchNorm | Y | Y | N |

+| **LRN** | 2D LRN | Y | Y | N |

+| **Activation** | ReLU | Y | Y | Y |

+| | Tanh | Y | Y | N |

+| | SoftReLU | Y | Y | N |

+| | Sigmoid | Y | Y | N |

+| **softmax** | 1D-4D input | Y | Y | N |

+| **Softmax_output** | 1D-4D input | N | Y | N |

+| **Transpose** | 1D-4D input | N | Y | N |

+| **elemwise_add** | 1D-4D input | Y | Y | Y |

+| **Concat** | 1D-4D input | Y | Y | Y |

+| **slice** | 1D-4D input | N | Y | N |

+| **Quantization** | 1D-4D input | N | N | Y |

+| **Dequantization** | 1D-4D input | N | N | Y |

+| **Requantization** | 1D-4D input | N | N | Y |

+

+Besides direct operator optimizations, we also provide graph fusion passes listed in the table below. Users can choose to enable or disable these fusion patterns through environmental variables.

+

+For example, you can enable all FP32 fusion passes in the following table by:

+

+```

+export MXNET_SUBGRAPH_BACKEND=MKLDNN

+```

+

+And disable `Convolution + Activation` fusion by:

+

+```

+export MXNET_DISABLE_MKLDNN_FUSE_CONV_RELU=1

+```

+

+When generating the corresponding INT8 symbol, users can enable INT8 operator fusion passes as following:

+

+```

+# get qsym after model quantization

+qsym = qsym.get_backend_symbol('MKLDNN_QUANTIZE')

+qsym.save(symbol_name) # fused INT8 operators will be save into the symbol JSON file

+```

+

+| Fusion pattern | Disable |

+| --- | --- |

+| Convolution + Activation | MXNET_DISABLE_MKLDNN_FUSE_CONV_RELU |

+| Convolution + elemwise_add | MXNET_DISABLE_MKLDNN_FUSE_CONV_SUM |

+| Convolution + BatchNorm | MXNET_DISABLE_MKLDNN_FUSE_CONV_BN |

+| Convolution + Activation + elemwise_add | |

+| Convolution + BatchNorm + Activation + elemwise_add | |

+| FullyConnected + Activation(ReLU) | MXNET_DISABLE_MKLDNN_FUSE_FC_RELU |

+| Convolution (INT8) + re-quantization | |

+| FullyConnected (INT8) + re-quantization | |

+| FullyConnected (INT8) + re-quantization + de-quantization | |

+

+

+To install MXNet MKL-DNN backend, please refer to [MKL-DNN backend readme](MKLDNN_README.md)

+

+For performance numbers, please refer to [performance on Intel CPU](../../faq/perf.md#intel-cpu)

diff --git a/tests/tutorials/test_sanity_tutorials.py b/tests/tutorials/test_sanity_tutorials.py

index 7865000c7608..f89c23484568 100644

--- a/tests/tutorials/test_sanity_tutorials.py

+++ b/tests/tutorials/test_sanity_tutorials.py

@@ -35,6 +35,7 @@

'gluon/index.md',

'mkldnn/index.md',

'mkldnn/MKLDNN_README.md',

+ 'mkldnn/operator_list.md',

'nlp/index.md',

'onnx/index.md',

'python/index.md',