diff --git a/.circleci/config.yml b/.circleci/config.yml

index ae6742563f6c..46bdc16006a9 100644

--- a/.circleci/config.yml

+++ b/.circleci/config.yml

@@ -65,19 +65,20 @@ jobs:

run_tests_torch_and_tf:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

RUN_PT_TF_CROSS_TESTS: yes

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-torch_and_tf-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-torch_and_tf-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: sudo apt-get -y update && sudo apt-get install -y libsndfile1-dev espeak-ng git-lfs

- run: git lfs install

- run: pip install --upgrade pip

@@ -87,7 +88,7 @@ jobs:

- run: pip install https://github.com/kpu/kenlm/archive/master.zip

- run: pip install git+https://github.com/huggingface/accelerate

- save_cache:

- key: v0.4-{{ checksum "setup.py" }}

+ key: v0.5-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: python utils/tests_fetcher.py | tee test_preparation.txt

@@ -105,19 +106,20 @@ jobs:

run_tests_torch_and_tf_all:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

RUN_PT_TF_CROSS_TESTS: yes

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-torch_and_tf-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-torch_and_tf-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: sudo apt-get -y update && sudo apt-get install -y libsndfile1-dev espeak-ng git-lfs

- run: git lfs install

- run: pip install --upgrade pip

@@ -127,7 +129,7 @@ jobs:

- run: pip install https://github.com/kpu/kenlm/archive/master.zip

- run: pip install git+https://github.com/huggingface/accelerate

- save_cache:

- key: v0.4-{{ checksum "setup.py" }}

+ key: v0.5-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: |

@@ -140,19 +142,20 @@ jobs:

run_tests_torch_and_flax:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

RUN_PT_FLAX_CROSS_TESTS: yes

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-torch_and_flax-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-torch_and_flax-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: sudo apt-get -y update && sudo apt-get install -y libsndfile1-dev espeak-ng

- run: pip install --upgrade pip

- run: pip install .[sklearn,flax,torch,testing,sentencepiece,torch-speech,vision]

@@ -160,7 +163,7 @@ jobs:

- run: pip install https://github.com/kpu/kenlm/archive/master.zip

- run: pip install git+https://github.com/huggingface/accelerate

- save_cache:

- key: v0.4-{{ checksum "setup.py" }}

+ key: v0.5-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: python utils/tests_fetcher.py | tee test_preparation.txt

@@ -178,19 +181,20 @@ jobs:

run_tests_torch_and_flax_all:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

RUN_PT_FLAX_CROSS_TESTS: yes

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-torch_and_flax-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-torch_and_flax-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: sudo apt-get -y update && sudo apt-get install -y libsndfile1-dev espeak-ng

- run: pip install --upgrade pip

- run: pip install .[sklearn,flax,torch,testing,sentencepiece,torch-speech,vision]

@@ -198,7 +202,7 @@ jobs:

- run: pip install https://github.com/kpu/kenlm/archive/master.zip

- run: pip install git+https://github.com/huggingface/accelerate

- save_cache:

- key: v0.4-{{ checksum "setup.py" }}

+ key: v0.5-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: |

@@ -211,18 +215,19 @@ jobs:

run_tests_torch:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-torch-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-torch-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: sudo apt-get -y update && sudo apt-get install -y libsndfile1-dev espeak-ng time

- run: pip install --upgrade pip

- run: pip install .[sklearn,torch,testing,sentencepiece,torch-speech,vision,timm]

@@ -230,7 +235,7 @@ jobs:

- run: pip install https://github.com/kpu/kenlm/archive/master.zip

- run: pip install git+https://github.com/huggingface/accelerate

- save_cache:

- key: v0.4-torch-{{ checksum "setup.py" }}

+ key: v0.5-torch-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: python utils/tests_fetcher.py | tee test_preparation.txt

@@ -248,18 +253,19 @@ jobs:

run_tests_torch_all:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-torch-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-torch-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: sudo apt-get -y update && sudo apt-get install -y libsndfile1-dev espeak-ng

- run: pip install --upgrade pip

- run: pip install .[sklearn,torch,testing,sentencepiece,torch-speech,vision,timm]

@@ -267,7 +273,7 @@ jobs:

- run: pip install https://github.com/kpu/kenlm/archive/master.zip

- run: pip install git+https://github.com/huggingface/accelerate

- save_cache:

- key: v0.4-torch-{{ checksum "setup.py" }}

+ key: v0.5-torch-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: |

@@ -280,25 +286,26 @@ jobs:

run_tests_tf:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-tf-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-tf-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: sudo apt-get -y update && sudo apt-get install -y libsndfile1-dev espeak-ng

- run: pip install --upgrade pip

- run: pip install .[sklearn,tf-cpu,testing,sentencepiece,tf-speech,vision]

- run: pip install tensorflow_probability

- run: pip install https://github.com/kpu/kenlm/archive/master.zip

- save_cache:

- key: v0.4-tf-{{ checksum "setup.py" }}

+ key: v0.5-tf-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: python utils/tests_fetcher.py | tee test_preparation.txt

@@ -316,25 +323,26 @@ jobs:

run_tests_tf_all:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-tf-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-tf-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: sudo apt-get -y update && sudo apt-get install -y libsndfile1-dev espeak-ng

- run: pip install --upgrade pip

- run: pip install .[sklearn,tf-cpu,testing,sentencepiece,tf-speech,vision]

- run: pip install tensorflow_probability

- run: pip install https://github.com/kpu/kenlm/archive/master.zip

- save_cache:

- key: v0.4-tf-{{ checksum "setup.py" }}

+ key: v0.5-tf-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: |

@@ -347,24 +355,25 @@ jobs:

run_tests_flax:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-flax-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-flax-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: sudo apt-get -y update && sudo apt-get install -y libsndfile1-dev espeak-ng

- run: pip install --upgrade pip

- run: pip install .[flax,testing,sentencepiece,flax-speech,vision]

- run: pip install https://github.com/kpu/kenlm/archive/master.zip

- save_cache:

- key: v0.4-flax-{{ checksum "setup.py" }}

+ key: v0.5-flax-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: python utils/tests_fetcher.py | tee test_preparation.txt

@@ -382,24 +391,25 @@ jobs:

run_tests_flax_all:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-flax-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-flax-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: sudo apt-get -y update && sudo apt-get install -y libsndfile1-dev espeak-ng

- run: pip install --upgrade pip

- run: pip install .[flax,testing,sentencepiece,vision,flax-speech]

- run: pip install https://github.com/kpu/kenlm/archive/master.zip

- save_cache:

- key: v0.4-flax-{{ checksum "setup.py" }}

+ key: v0.5-flax-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: |

@@ -412,26 +422,27 @@ jobs:

run_tests_pipelines_torch:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

RUN_PIPELINE_TESTS: yes

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-torch-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-torch-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: sudo apt-get -y update && sudo apt-get install -y libsndfile1-dev espeak-ng

- run: pip install --upgrade pip

- run: pip install .[sklearn,torch,testing,sentencepiece,torch-speech,vision,timm]

- run: pip install torch-scatter -f https://pytorch-geometric.com/whl/torch-1.11.0+cpu.html

- run: pip install https://github.com/kpu/kenlm/archive/master.zip

- save_cache:

- key: v0.4-torch-{{ checksum "setup.py" }}

+ key: v0.5-torch-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: python utils/tests_fetcher.py | tee test_preparation.txt

@@ -449,26 +460,27 @@ jobs:

run_tests_pipelines_torch_all:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

RUN_PIPELINE_TESTS: yes

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-torch-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-torch-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: sudo apt-get -y update && sudo apt-get install -y libsndfile1-dev espeak-ng

- run: pip install --upgrade pip

- run: pip install .[sklearn,torch,testing,sentencepiece,torch-speech,vision,timm]

- run: pip install torch-scatter -f https://pytorch-geometric.com/whl/torch-1.11.0+cpu.html

- run: pip install https://github.com/kpu/kenlm/archive/master.zip

- save_cache:

- key: v0.4-torch-{{ checksum "setup.py" }}

+ key: v0.5-torch-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: |

@@ -481,24 +493,25 @@ jobs:

run_tests_pipelines_tf:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

RUN_PIPELINE_TESTS: yes

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-tf-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-tf-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: pip install --upgrade pip

- run: pip install .[sklearn,tf-cpu,testing,sentencepiece]

- run: pip install tensorflow_probability

- save_cache:

- key: v0.4-tf-{{ checksum "setup.py" }}

+ key: v0.5-tf-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: python utils/tests_fetcher.py | tee test_preparation.txt

@@ -516,24 +529,25 @@ jobs:

run_tests_pipelines_tf_all:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

RUN_PIPELINE_TESTS: yes

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-tf-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-tf-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: pip install --upgrade pip

- run: pip install .[sklearn,tf-cpu,testing,sentencepiece]

- run: pip install tensorflow_probability

- save_cache:

- key: v0.4-tf-{{ checksum "setup.py" }}

+ key: v0.5-tf-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: |

@@ -546,21 +560,22 @@ jobs:

run_tests_custom_tokenizers:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

RUN_CUSTOM_TOKENIZERS: yes

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

steps:

- checkout

- restore_cache:

keys:

- - v0.4-custom_tokenizers-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-custom_tokenizers-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: pip install --upgrade pip

- run: pip install .[ja,testing,sentencepiece,jieba,spacy,ftfy,rjieba]

- run: python -m unidic download

- save_cache:

- key: v0.4-custom_tokenizers-{{ checksum "setup.py" }}

+ key: v0.5-custom_tokenizers-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: |

@@ -579,24 +594,25 @@ jobs:

run_examples_torch:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-torch_examples-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-torch_examples-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: sudo apt-get -y update && sudo apt-get install -y libsndfile1-dev espeak-ng

- run: pip install --upgrade pip

- run: pip install .[sklearn,torch,sentencepiece,testing,torch-speech]

- run: pip install -r examples/pytorch/_tests_requirements.txt

- save_cache:

- key: v0.4-torch_examples-{{ checksum "setup.py" }}

+ key: v0.5-torch_examples-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: python utils/tests_fetcher.py --filters examples tests | tee test_preparation.txt

@@ -614,24 +630,25 @@ jobs:

run_examples_torch_all:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-torch_examples-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-torch_examples-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: sudo apt-get -y update && sudo apt-get install -y libsndfile1-dev espeak-ng

- run: pip install --upgrade pip

- run: pip install .[sklearn,torch,sentencepiece,testing,torch-speech]

- run: pip install -r examples/pytorch/_tests_requirements.txt

- save_cache:

- key: v0.4-torch_examples-{{ checksum "setup.py" }}

+ key: v0.5-torch_examples-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: |

@@ -644,23 +661,24 @@ jobs:

run_examples_flax:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-flax_examples-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-flax_examples-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: pip install --upgrade pip

- - run: sudo pip install .[flax,testing,sentencepiece]

+ - run: pip install .[flax,testing,sentencepiece]

- run: pip install -r examples/flax/_tests_requirements.txt

- save_cache:

- key: v0.4-flax_examples-{{ checksum "setup.py" }}

+ key: v0.5-flax_examples-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: python utils/tests_fetcher.py --filters examples tests | tee test_preparation.txt

@@ -678,23 +696,24 @@ jobs:

run_examples_flax_all:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-flax_examples-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-flax_examples-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: pip install --upgrade pip

- - run: sudo pip install .[flax,testing,sentencepiece]

+ - run: pip install .[flax,testing,sentencepiece]

- run: pip install -r examples/flax/_tests_requirements.txt

- save_cache:

- key: v0.4-flax_examples-{{ checksum "setup.py" }}

+ key: v0.5-flax_examples-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: |

@@ -707,27 +726,28 @@ jobs:

run_tests_hub:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

HUGGINGFACE_CO_STAGING: yes

RUN_GIT_LFS_TESTS: yes

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-hub-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

- - run: sudo apt-get install git-lfs

+ - v0.5-hub-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

+ - run: sudo apt-get -y update && sudo apt-get install git-lfs

- run: |

git config --global user.email "ci@dummy.com"

git config --global user.name "ci"

- run: pip install --upgrade pip

- run: pip install .[torch,sentencepiece,testing]

- save_cache:

- key: v0.4-hub-{{ checksum "setup.py" }}

+ key: v0.5-hub-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: python utils/tests_fetcher.py | tee test_preparation.txt

@@ -745,27 +765,28 @@ jobs:

run_tests_hub_all:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

HUGGINGFACE_CO_STAGING: yes

RUN_GIT_LFS_TESTS: yes

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-hub-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

- - run: sudo apt-get install git-lfs

+ - v0.5-hub-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

+ - run: sudo apt-get -y update && sudo apt-get install git-lfs

- run: |

git config --global user.email "ci@dummy.com"

git config --global user.name "ci"

- run: pip install --upgrade pip

- run: pip install .[torch,sentencepiece,testing]

- save_cache:

- key: v0.4-hub-{{ checksum "setup.py" }}

+ key: v0.5-hub-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: |

@@ -778,22 +799,23 @@ jobs:

run_tests_onnxruntime:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-torch-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-torch-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: pip install --upgrade pip

- run: pip install .[torch,testing,sentencepiece,onnxruntime,vision,rjieba]

- save_cache:

- key: v0.4-onnx-{{ checksum "setup.py" }}

+ key: v0.5-onnx-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: python utils/tests_fetcher.py | tee test_preparation.txt

@@ -811,22 +833,23 @@ jobs:

run_tests_onnxruntime_all:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-torch-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-torch-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: pip install --upgrade pip

- run: pip install .[torch,testing,sentencepiece,onnxruntime,vision]

- save_cache:

- key: v0.4-onnx-{{ checksum "setup.py" }}

+ key: v0.5-onnx-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: |

@@ -839,21 +862,22 @@ jobs:

check_code_quality:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

resource_class: large

environment:

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-code_quality-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-code_quality-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: pip install --upgrade pip

- run: pip install .[all,quality]

- save_cache:

- key: v0.4-code_quality-{{ checksum "setup.py" }}

+ key: v0.5-code_quality-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: black --check --preview examples tests src utils

@@ -862,25 +886,27 @@ jobs:

- run: python utils/sort_auto_mappings.py --check_only

- run: flake8 examples tests src utils

- run: doc-builder style src/transformers docs/source --max_len 119 --check_only --path_to_docs docs/source

+ - run: python utils/check_doc_toc.py

check_repository_consistency:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

resource_class: large

environment:

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-repository_consistency-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-repository_consistency-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: pip install --upgrade pip

- run: pip install .[all,quality]

- save_cache:

- key: v0.4-repository_consistency-{{ checksum "setup.py" }}

+ key: v0.5-repository_consistency-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: python utils/check_copies.py

@@ -895,18 +921,19 @@ jobs:

run_tests_layoutlmv2_and_v3:

working_directory: ~/transformers

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

TRANSFORMERS_IS_CI: yes

+ PYTEST_TIMEOUT: 120

resource_class: xlarge

parallelism: 1

steps:

- checkout

- restore_cache:

keys:

- - v0.4-torch-{{ checksum "setup.py" }}

- - v0.4-{{ checksum "setup.py" }}

+ - v0.5-torch-{{ checksum "setup.py" }}

+ - v0.5-{{ checksum "setup.py" }}

- run: sudo apt-get -y update && sudo apt-get install -y libsndfile1-dev

- run: pip install --upgrade pip

- run: pip install .[torch,testing,vision]

@@ -915,7 +942,7 @@ jobs:

- run: sudo apt install tesseract-ocr

- run: pip install pytesseract

- save_cache:

- key: v0.4-torch-{{ checksum "setup.py" }}

+ key: v0.5-torch-{{ checksum "setup.py" }}

paths:

- '~/.cache/pip'

- run: python utils/tests_fetcher.py | tee test_preparation.txt

@@ -933,7 +960,7 @@ jobs:

# TPU JOBS

run_examples_tpu:

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

environment:

OMP_NUM_THREADS: 1

TRANSFORMERS_IS_CI: yes

@@ -953,7 +980,7 @@ jobs:

cleanup-gke-jobs:

docker:

- - image: circleci/python:3.7

+ - image: cimg/python:3.7.12

steps:

- gcp-gke/install

- gcp-gke/update-kubeconfig-with-credentials:

diff --git a/.github/ISSUE_TEMPLATE/bug-report.yml b/.github/ISSUE_TEMPLATE/bug-report.yml

index 274de7af15cf..b1d52c8a3cd6 100644

--- a/.github/ISSUE_TEMPLATE/bug-report.yml

+++ b/.github/ISSUE_TEMPLATE/bug-report.yml

@@ -7,7 +7,6 @@ body:

attributes:

label: System Info

description: Please share your system info with us. You can run the command `transformers-cli env` and copy-paste its output below.

- render: shell

placeholder: transformers version, platform, python version, ...

validations:

required: true

@@ -118,4 +117,3 @@ body:

attributes:

label: Expected behavior

description: "A clear and concise description of what you would expect to happen."

- render: shell

diff --git a/.github/conda/meta.yaml b/.github/conda/meta.yaml

index f9caa469be29..6f6b680d1e6b 100644

--- a/.github/conda/meta.yaml

+++ b/.github/conda/meta.yaml

@@ -25,7 +25,7 @@ requirements:

- sacremoses

- regex !=2019.12.17

- protobuf

- - tokenizers >=0.10.1,<0.11.0

+ - tokenizers >=0.11.1,!=0.11.3,<0.13

- pyyaml >=5.1

run:

- python

@@ -40,7 +40,7 @@ requirements:

- sacremoses

- regex !=2019.12.17

- protobuf

- - tokenizers >=0.10.1,<0.11.0

+ - tokenizers >=0.11.1,!=0.11.3,<0.13

- pyyaml >=5.1

test:

diff --git a/.github/workflows/add-model-like.yml b/.github/workflows/add-model-like.yml

index b6e812661669..2d2ab5b2e15b 100644

--- a/.github/workflows/add-model-like.yml

+++ b/.github/workflows/add-model-like.yml

@@ -27,7 +27,7 @@ jobs:

id: cache

with:

path: ~/venv/

- key: v3-tests_model_like-${{ hashFiles('setup.py') }}

+ key: v4-tests_model_like-${{ hashFiles('setup.py') }}

- name: Create virtual environment on cache miss

if: steps.cache.outputs.cache-hit != 'true'

diff --git a/.github/workflows/build-docker-images.yml b/.github/workflows/build-docker-images.yml

index 295f668d4d7c..2d4dfc9f0448 100644

--- a/.github/workflows/build-docker-images.yml

+++ b/.github/workflows/build-docker-images.yml

@@ -5,6 +5,7 @@ on:

branches:

- docker-image*

repository_dispatch:

+ workflow_call:

schedule:

- cron: "0 1 * * *"

diff --git a/.github/workflows/build-past-ci-docker-images.yml b/.github/workflows/build-past-ci-docker-images.yml

new file mode 100644

index 000000000000..5c9d9366e4b2

--- /dev/null

+++ b/.github/workflows/build-past-ci-docker-images.yml

@@ -0,0 +1,108 @@

+name: Build docker images (Past CI)

+

+on:

+ push:

+ branches:

+ - past-ci-docker-image*

+

+concurrency:

+ group: docker-images-builds

+ cancel-in-progress: false

+

+jobs:

+ past-pytorch-docker:

+ name: "Past PyTorch Docker"

+ strategy:

+ fail-fast: false

+ matrix:

+ version: ["1.11", "1.10", "1.9", "1.8", "1.7", "1.6", "1.5", "1.4"]

+ runs-on: ubuntu-latest

+ steps:

+ -

+ name: Set up Docker Buildx

+ uses: docker/setup-buildx-action@v1

+ -

+ name: Check out code

+ uses: actions/checkout@v2

+ -

+ name: Login to DockerHub

+ uses: docker/login-action@v1

+ with:

+ username: ${{ secrets.DOCKERHUB_USERNAME }}

+ password: ${{ secrets.DOCKERHUB_PASSWORD }}

+ -

+ name: Build and push

+ uses: docker/build-push-action@v2

+ with:

+ context: ./docker/transformers-past-gpu

+ build-args: |

+ REF=main

+ FRAMEWORK=pytorch

+ VERSION=${{ matrix.version }}

+ push: true

+ tags: huggingface/transformers-pytorch-past-${{ matrix.version }}-gpu

+

+ past-tensorflow-docker:

+ name: "Past TensorFlow Docker"

+ strategy:

+ fail-fast: false

+ matrix:

+ version: ["2.8", "2.7", "2.6", "2.5"]

+ runs-on: ubuntu-latest

+ steps:

+ -

+ name: Set up Docker Buildx

+ uses: docker/setup-buildx-action@v1

+ -

+ name: Check out code

+ uses: actions/checkout@v2

+ -

+ name: Login to DockerHub

+ uses: docker/login-action@v1

+ with:

+ username: ${{ secrets.DOCKERHUB_USERNAME }}

+ password: ${{ secrets.DOCKERHUB_PASSWORD }}

+ -

+ name: Build and push

+ uses: docker/build-push-action@v2

+ with:

+ context: ./docker/transformers-past-gpu

+ build-args: |

+ REF=main

+ FRAMEWORK=tensorflow

+ VERSION=${{ matrix.version }}

+ push: true

+ tags: huggingface/transformers-tensorflow-past-${{ matrix.version }}-gpu

+

+ past-tensorflow-docker-2-4:

+ name: "Past TensorFlow Docker"

+ strategy:

+ fail-fast: false

+ matrix:

+ version: ["2.4"]

+ runs-on: ubuntu-latest

+ steps:

+ -

+ name: Set up Docker Buildx

+ uses: docker/setup-buildx-action@v1

+ -

+ name: Check out code

+ uses: actions/checkout@v2

+ -

+ name: Login to DockerHub

+ uses: docker/login-action@v1

+ with:

+ username: ${{ secrets.DOCKERHUB_USERNAME }}

+ password: ${{ secrets.DOCKERHUB_PASSWORD }}

+ -

+ name: Build and push

+ uses: docker/build-push-action@v2

+ with:

+ context: ./docker/transformers-past-gpu

+ build-args: |

+ REF=main

+ BASE_DOCKER_IMAGE=nvidia/cuda:11.0.3-cudnn8-devel-ubuntu20.04

+ FRAMEWORK=tensorflow

+ VERSION=${{ matrix.version }}

+ push: true

+ tags: huggingface/transformers-tensorflow-past-${{ matrix.version }}-gpu

\ No newline at end of file

diff --git a/.github/workflows/model-templates.yml b/.github/workflows/model-templates.yml

index 6ade77a2792b..ad57d331c231 100644

--- a/.github/workflows/model-templates.yml

+++ b/.github/workflows/model-templates.yml

@@ -21,7 +21,7 @@ jobs:

id: cache

with:

path: ~/venv/

- key: v3-tests_templates-${{ hashFiles('setup.py') }}

+ key: v4-tests_templates-${{ hashFiles('setup.py') }}

- name: Create virtual environment on cache miss

if: steps.cache.outputs.cache-hit != 'true'

diff --git a/.github/workflows/self-past-caller.yml b/.github/workflows/self-past-caller.yml

new file mode 100644

index 000000000000..2cc81dac8ca2

--- /dev/null

+++ b/.github/workflows/self-past-caller.yml

@@ -0,0 +1,136 @@

+name: Self-hosted runner (past-ci-caller)

+

+on:

+ push:

+ branches:

+ - run-past-ci*

+

+jobs:

+ run_past_ci_pytorch_1-11:

+ name: PyTorch 1.11

+ if: always()

+ uses: ./.github/workflows/self-past.yml

+ with:

+ framework: pytorch

+ version: "1.11"

+ secrets: inherit

+

+ run_past_ci_pytorch_1-10:

+ name: PyTorch 1.10

+ if: always()

+ needs: [run_past_ci_pytorch_1-11]

+ uses: ./.github/workflows/self-past.yml

+ with:

+ framework: pytorch

+ version: "1.10"

+ secrets: inherit

+

+ run_past_ci_pytorch_1-9:

+ name: PyTorch 1.9

+ if: always()

+ needs: [run_past_ci_pytorch_1-10]

+ uses: ./.github/workflows/self-past.yml

+ with:

+ framework: pytorch

+ version: "1.9"

+ secrets: inherit

+

+ run_past_ci_pytorch_1-8:

+ name: PyTorch 1.8

+ if: always()

+ needs: [run_past_ci_pytorch_1-9]

+ uses: ./.github/workflows/self-past.yml

+ with:

+ framework: pytorch

+ version: "1.8"

+ secrets: inherit

+

+ run_past_ci_pytorch_1-7:

+ name: PyTorch 1.7

+ if: always()

+ needs: [run_past_ci_pytorch_1-8]

+ uses: ./.github/workflows/self-past.yml

+ with:

+ framework: pytorch

+ version: "1.7"

+ secrets: inherit

+

+ run_past_ci_pytorch_1-6:

+ name: PyTorch 1.6

+ if: always()

+ needs: [run_past_ci_pytorch_1-7]

+ uses: ./.github/workflows/self-past.yml

+ with:

+ framework: pytorch

+ version: "1.6"

+ secrets: inherit

+

+ run_past_ci_pytorch_1-5:

+ name: PyTorch 1.5

+ if: always()

+ needs: [run_past_ci_pytorch_1-6]

+ uses: ./.github/workflows/self-past.yml

+ with:

+ framework: pytorch

+ version: "1.5"

+ secrets: inherit

+

+ run_past_ci_pytorch_1-4:

+ name: PyTorch 1.4

+ if: always()

+ needs: [run_past_ci_pytorch_1-5]

+ uses: ./.github/workflows/self-past.yml

+ with:

+ framework: pytorch

+ version: "1.4"

+ secrets: inherit

+

+ run_past_ci_tensorflow_2-8:

+ name: TensorFlow 2.8

+ if: always()

+ needs: [run_past_ci_pytorch_1-4]

+ uses: ./.github/workflows/self-past.yml

+ with:

+ framework: tensorflow

+ version: "2.8"

+ secrets: inherit

+

+ run_past_ci_tensorflow_2-7:

+ name: TensorFlow 2.7

+ if: always()

+ needs: [run_past_ci_tensorflow_2-8]

+ uses: ./.github/workflows/self-past.yml

+ with:

+ framework: tensorflow

+ version: "2.7"

+ secrets: inherit

+

+ run_past_ci_tensorflow_2-6:

+ name: TensorFlow 2.6

+ if: always()

+ needs: [run_past_ci_tensorflow_2-7]

+ uses: ./.github/workflows/self-past.yml

+ with:

+ framework: tensorflow

+ version: "2.6"

+ secrets: inherit

+

+ run_past_ci_tensorflow_2-5:

+ name: TensorFlow 2.5

+ if: always()

+ needs: [run_past_ci_tensorflow_2-6]

+ uses: ./.github/workflows/self-past.yml

+ with:

+ framework: tensorflow

+ version: "2.5"

+ secrets: inherit

+

+ run_past_ci_tensorflow_2-4:

+ name: TensorFlow 2.4

+ if: always()

+ needs: [run_past_ci_tensorflow_2-5]

+ uses: ./.github/workflows/self-past.yml

+ with:

+ framework: tensorflow

+ version: "2.4"

+ secrets: inherit

\ No newline at end of file

diff --git a/.github/workflows/self-past.yml b/.github/workflows/self-past.yml

new file mode 100644

index 000000000000..b3871dc92fa4

--- /dev/null

+++ b/.github/workflows/self-past.yml

@@ -0,0 +1,192 @@

+name: Self-hosted runner (past)

+

+# Note that each job's dependencies go into a corresponding docker file.

+#

+# For example for `run_all_tests_torch_cuda_extensions_gpu` the docker image is

+# `huggingface/transformers-pytorch-deepspeed-latest-gpu`, which can be found at

+# `docker/transformers-pytorch-deepspeed-latest-gpu/Dockerfile`

+

+on:

+ workflow_call:

+ inputs:

+ framework:

+ required: true

+ type: string

+ version:

+ required: true

+ type: string

+

+env:

+ HF_HOME: /mnt/cache

+ TRANSFORMERS_IS_CI: yes

+ OMP_NUM_THREADS: 8

+ MKL_NUM_THREADS: 8

+ RUN_SLOW: yes

+ SIGOPT_API_TOKEN: ${{ secrets.SIGOPT_API_TOKEN }}

+ TF_FORCE_GPU_ALLOW_GROWTH: true

+ RUN_PT_TF_CROSS_TESTS: 1

+

+jobs:

+ setup:

+ name: Setup

+ runs-on: ubuntu-latest

+ outputs:

+ matrix: ${{ steps.set-matrix.outputs.matrix }}

+ steps:

+ - name: Checkout transformers

+ uses: actions/checkout@v2

+ with:

+ fetch-depth: 2

+

+ - name: Cleanup

+ run: |

+ rm -rf tests/__pycache__

+ rm -rf tests/models/__pycache__

+ rm -rf reports

+

+ - id: set-matrix

+ name: Identify models to test

+ run: |

+ cd tests

+ echo "::set-output name=matrix::$(python3 -c 'import os; tests = os.getcwd(); model_tests = os.listdir(os.path.join(tests, "models")); d1 = sorted(list(filter(os.path.isdir, os.listdir(tests)))); d2 = sorted(list(filter(os.path.isdir, [f"models/{x}" for x in model_tests]))); d1.remove("models"); d = d2 + d1; print(d)')"

+

+ run_tests_single_gpu:

+ name: Model tests

+ strategy:

+ fail-fast: false

+ matrix:

+ folders: ${{ fromJson(needs.setup.outputs.matrix) }}

+ machine_type: [single-gpu]

+ runs-on: ${{ format('{0}-{1}', matrix.machine_type, 'docker-past-ci') }}

+ container:

+ image: huggingface/transformers-${{ inputs.framework }}-past-${{ inputs.version }}-gpu

+ options: --gpus 0 --shm-size "16gb" --ipc host -v /mnt/cache/.cache/huggingface:/mnt/cache/

+ needs: setup

+ steps:

+ - name: Update clone

+ working-directory: /transformers

+ run: git fetch && git checkout ${{ github.sha }}

+

+ - name: Echo folder ${{ matrix.folders }}

+ shell: bash

+ # For folders like `models/bert`, set an env. var. (`matrix_folders`) to `models_bert`, which will be used to

+ # set the artifact folder names (because the character `/` is not allowed).

+ run: |

+ echo "${{ matrix.folders }}"

+ matrix_folders=${{ matrix.folders }}

+ matrix_folders=${matrix_folders/'models/'/'models_'}

+ echo "$matrix_folders"

+ echo "matrix_folders=$matrix_folders" >> $GITHUB_ENV

+

+ - name: NVIDIA-SMI

+ run: |

+ nvidia-smi

+

+ - name: Environment

+ working-directory: /transformers

+ run: |

+ python3 utils/print_env.py

+

+ - name: Run all tests on GPU

+ working-directory: /transformers

+ run: python3 -m pytest -v --make-reports=${{ matrix.machine_type }}_tests_gpu_${{ matrix.folders }} tests/${{ matrix.folders }}

+

+ - name: Failure short reports

+ if: ${{ failure() }}

+ continue-on-error: true

+ run: cat /transformers/reports/${{ matrix.machine_type }}_tests_gpu_${{ matrix.folders }}/failures_short.txt

+

+ - name: Test suite reports artifacts

+ if: ${{ always() }}

+ uses: actions/upload-artifact@v2

+ with:

+ name: ${{ matrix.machine_type }}_run_all_tests_gpu_${{ env.matrix_folders }}_test_reports

+ path: /transformers/reports/${{ matrix.machine_type }}_tests_gpu_${{ matrix.folders }}

+

+ run_tests_multi_gpu:

+ name: Model tests

+ strategy:

+ fail-fast: false

+ matrix:

+ folders: ${{ fromJson(needs.setup.outputs.matrix) }}

+ machine_type: [multi-gpu]

+ runs-on: ${{ format('{0}-{1}', matrix.machine_type, 'docker-past-ci') }}

+ container:

+ image: huggingface/transformers-${{ inputs.framework }}-past-${{ inputs.version }}-gpu

+ options: --gpus all --shm-size "16gb" --ipc host -v /mnt/cache/.cache/huggingface:/mnt/cache/

+ needs: setup

+ steps:

+ - name: Update clone

+ working-directory: /transformers

+ run: git fetch && git checkout ${{ github.sha }}

+

+ - name: Echo folder ${{ matrix.folders }}

+ shell: bash

+ # For folders like `models/bert`, set an env. var. (`matrix_folders`) to `models_bert`, which will be used to

+ # set the artifact folder names (because the character `/` is not allowed).

+ run: |

+ echo "${{ matrix.folders }}"

+ matrix_folders=${{ matrix.folders }}

+ matrix_folders=${matrix_folders/'models/'/'models_'}

+ echo "$matrix_folders"

+ echo "matrix_folders=$matrix_folders" >> $GITHUB_ENV

+

+ - name: NVIDIA-SMI

+ run: |

+ nvidia-smi

+

+ - name: Environment

+ working-directory: /transformers

+ run: |

+ python3 utils/print_env.py

+

+ - name: Run all tests on GPU

+ working-directory: /transformers

+ run: python3 -m pytest -v --make-reports=${{ matrix.machine_type }}_tests_gpu_${{ matrix.folders }} tests/${{ matrix.folders }}

+

+ - name: Failure short reports

+ if: ${{ failure() }}

+ continue-on-error: true

+ run: cat /transformers/reports/${{ matrix.machine_type }}_tests_gpu_${{ matrix.folders }}/failures_short.txt

+

+ - name: Test suite reports artifacts

+ if: ${{ always() }}

+ uses: actions/upload-artifact@v2

+ with:

+ name: ${{ matrix.machine_type }}_run_all_tests_gpu_${{ env.matrix_folders }}_test_reports

+ path: /transformers/reports/${{ matrix.machine_type }}_tests_gpu_${{ matrix.folders }}

+

+ send_results:

+ name: Send results to webhook

+ runs-on: ubuntu-latest

+ if: always()

+ needs: [setup, run_tests_single_gpu, run_tests_multi_gpu]

+ steps:

+ - uses: actions/checkout@v2

+ - uses: actions/download-artifact@v2

+

+ # Create a directory to store test failure tables in the next step

+ - name: Create directory

+ run: mkdir test_failure_tables

+

+ - name: Send message to Slack

+ env:

+ CI_SLACK_BOT_TOKEN: ${{ secrets.CI_SLACK_BOT_TOKEN }}

+ CI_SLACK_CHANNEL_ID: ${{ secrets.CI_SLACK_CHANNEL_ID }}

+ CI_SLACK_CHANNEL_ID_DAILY: ${{ secrets.CI_SLACK_CHANNEL_ID_DAILY }}

+ CI_SLACK_CHANNEL_DUMMY_TESTS: ${{ secrets.CI_SLACK_CHANNEL_DUMMY_TESTS }}

+ CI_SLACK_REPORT_CHANNEL_ID: ${{ secrets.CI_SLACK_CHANNEL_ID_PAST_FUTURE }}

+ CI_EVENT: Past CI - ${{ inputs.framework }}-${{ inputs.version }}

+ # We pass `needs.setup.outputs.matrix` as the argument. A processing in `notification_service.py` to change

+ # `models/bert` to `models_bert` is required, as the artifact names use `_` instead of `/`.

+ run: |

+ pip install slack_sdk

+ python utils/notification_service.py "${{ needs.setup.outputs.matrix }}"

+

+ # Upload complete failure tables, as they might be big and only truncated versions could be sent to Slack.

+ - name: Failure table artifacts

+ if: ${{ always() }}

+ uses: actions/upload-artifact@v2

+ with:

+ name: test_failure_tables_${{ inputs.framework }}-${{ inputs.version }}

+ path: test_failure_tables

\ No newline at end of file

diff --git a/.github/workflows/self-push-caller.yml b/.github/workflows/self-push-caller.yml

index c6986ddf28a0..6dffef5da7fb 100644

--- a/.github/workflows/self-push-caller.yml

+++ b/.github/workflows/self-push-caller.yml

@@ -13,9 +13,40 @@ on:

- "utils/**"

jobs:

+ check-for-setup:

+ runs-on: ubuntu-latest

+ name: Check if setup was changed

+ outputs:

+ changed: ${{ steps.was_changed.outputs.changed }}

+ steps:

+ - uses: actions/checkout@v3

+ with:

+ fetch-depth: "2"

+

+ - name: Get changed files

+ id: changed-files

+ uses: tj-actions/changed-files@v22.2

+

+ - name: Was setup changed

+ id: was_changed

+ run: |

+ for file in ${{ steps.changed-files.outputs.all_changed_files }}; do

+ if [ `basename "${file}"` = "setup.py" ]; then

+ echo ::set-output name=changed::"1"

+ fi

+ done

+

+ build-docker-containers:

+ needs: check-for-setup

+ if: (github.event_name == 'push') && (needs.check-for-setup.outputs.changed == '1')

+ uses: ./.github/workflows/build-docker-images.yml

+ secrets: inherit

+

run_push_ci:

name: Trigger Push CI

runs-on: ubuntu-latest

+ if: ${{ always() }}

+ needs: build-docker-containers

steps:

- name: Trigger push CI via workflow_run

run: echo "Trigger push CI via workflow_run"

\ No newline at end of file

diff --git a/.github/workflows/self-push.yml b/.github/workflows/self-push.yml

index 5a53a844c47b..bb397bc85748 100644

--- a/.github/workflows/self-push.yml

+++ b/.github/workflows/self-push.yml

@@ -353,14 +353,14 @@ jobs:

- name: Failure short reports

if: ${{ failure() }}

continue-on-error: true

- run: cat reports/${{ matrix.machine_type }}_tests_torch_cuda_extensions_gpu/failures_short.txt

+ run: cat /workspace/transformers/reports/${{ matrix.machine_type }}_tests_torch_cuda_extensions_gpu/failures_short.txt

- name: Test suite reports artifacts

if: ${{ always() }}

uses: actions/upload-artifact@v2

with:

name: ${{ matrix.machine_type }}_run_tests_torch_cuda_extensions_gpu_test_reports

- path: reports/${{ matrix.machine_type }}_tests_torch_cuda_extensions_gpu

+ path: /workspace/transformers/reports/${{ matrix.machine_type }}_tests_torch_cuda_extensions_gpu

run_tests_torch_cuda_extensions_multi_gpu:

name: Torch CUDA extension tests

diff --git a/.github/workflows/self-scheduled.yml b/.github/workflows/self-scheduled.yml

index c1a4f3fd371d..323ca5eb54db 100644

--- a/.github/workflows/self-scheduled.yml

+++ b/.github/workflows/self-scheduled.yml

@@ -187,19 +187,19 @@ jobs:

working-directory: /transformers

run: |

pip install -r examples/pytorch/_tests_requirements.txt

- python3 -m pytest -v --make-reports=examples_gpu examples/pytorch

+ python3 -m pytest -v --make-reports=single-gpu_examples_gpu examples/pytorch

- name: Failure short reports

if: ${{ failure() }}

continue-on-error: true

- run: cat /transformers/reports/examples_gpu/failures_short.txt

+ run: cat /transformers/reports/single-gpu_examples_gpu/failures_short.txt

- name: Test suite reports artifacts

if: ${{ always() }}

uses: actions/upload-artifact@v2

with:

- name: run_examples_gpu

- path: /transformers/reports/examples_gpu

+ name: single-gpu_run_examples_gpu

+ path: /transformers/reports/single-gpu_examples_gpu

run_pipelines_torch_gpu:

name: PyTorch pipelines

diff --git a/.github/workflows/update_metdata.yml b/.github/workflows/update_metdata.yml

index dcd2ac502100..1fc71893aaf2 100644

--- a/.github/workflows/update_metdata.yml

+++ b/.github/workflows/update_metdata.yml

@@ -21,7 +21,7 @@ jobs:

id: cache

with:

path: ~/venv/

- key: v2-metadata-${{ hashFiles('setup.py') }}

+ key: v3-metadata-${{ hashFiles('setup.py') }}

- name: Create virtual environment on cache miss

if: steps.cache.outputs.cache-hit != 'true'

diff --git a/CONTRIBUTING.md b/CONTRIBUTING.md

index e74510948a9c..7dbc492f7ef7 100644

--- a/CONTRIBUTING.md

+++ b/CONTRIBUTING.md

@@ -128,7 +128,7 @@ You will need basic `git` proficiency to be able to contribute to

manual. Type `git --help` in a shell and enjoy. If you prefer books, [Pro

Git](https://git-scm.com/book/en/v2) is a very good reference.

-Follow these steps to start contributing:

+Follow these steps to start contributing ([supported Python versions](https://github.com/huggingface/transformers/blob/main/setup.py#L426)):

1. Fork the [repository](https://github.com/huggingface/transformers) by

clicking on the 'Fork' button on the repository's page. This creates a copy of the code

diff --git a/Makefile b/Makefile

index f0abc15de8e0..6c6200cfe728 100644

--- a/Makefile

+++ b/Makefile

@@ -51,6 +51,7 @@ quality:

python utils/sort_auto_mappings.py --check_only

flake8 $(check_dirs)

doc-builder style src/transformers docs/source --max_len 119 --check_only --path_to_docs docs/source

+ python utils/check_doc_toc.py

# Format source code automatically and check is there are any problems left that need manual fixing

@@ -58,6 +59,7 @@ extra_style_checks:

python utils/custom_init_isort.py

python utils/sort_auto_mappings.py

doc-builder style src/transformers docs/source --max_len 119 --path_to_docs docs/source

+ python utils/check_doc_toc.py --fix_and_overwrite

# this target runs checks on all files and potentially modifies some of them

diff --git a/README.md b/README.md

index b03cc35753c7..0cda209bdfc3 100644

--- a/README.md

+++ b/README.md

@@ -116,22 +116,46 @@ To immediately use a model on a given input (text, image, audio, ...), we provid

The second line of code downloads and caches the pretrained model used by the pipeline, while the third evaluates it on the given text. Here the answer is "positive" with a confidence of 99.97%.

-Many NLP tasks have a pre-trained `pipeline` ready to go. For example, we can easily extract question answers given context:

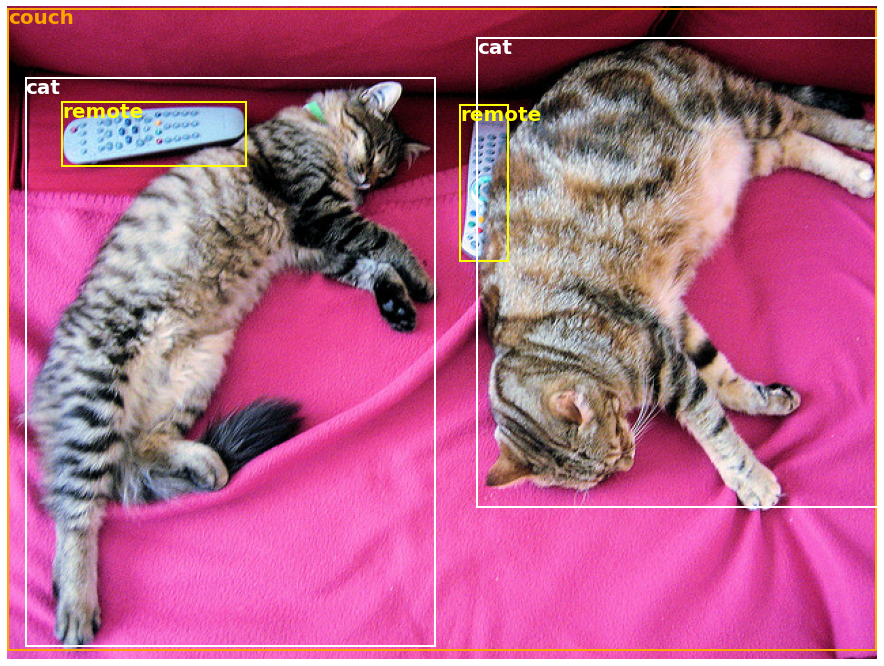

+Many tasks have a pre-trained `pipeline` ready to go, in NLP but also in computer vision and speech. For example, we can easily extract detected objects in an image:

``` python

+>>> import requests

+>>> from PIL import Image

>>> from transformers import pipeline

-# Allocate a pipeline for question-answering

->>> question_answerer = pipeline('question-answering')

->>> question_answerer({

-... 'question': 'What is the name of the repository ?',

-... 'context': 'Pipeline has been included in the huggingface/transformers repository'

-... })

-{'score': 0.30970096588134766, 'start': 34, 'end': 58, 'answer': 'huggingface/transformers'}

-

+# Download an image with cute cats

+>>> url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/coco_sample.png"

+>>> image_data = requests.get(url, stream=True).raw

+>>> image = Image.open(image_data)

+

+# Allocate a pipeline for object detection

+>>> object_detector = pipeline('object_detection')

+>>> object_detector(image)

+[{'score': 0.9982201457023621,

+ 'label': 'remote',

+ 'box': {'xmin': 40, 'ymin': 70, 'xmax': 175, 'ymax': 117}},

+ {'score': 0.9960021376609802,

+ 'label': 'remote',

+ 'box': {'xmin': 333, 'ymin': 72, 'xmax': 368, 'ymax': 187}},

+ {'score': 0.9954745173454285,

+ 'label': 'couch',

+ 'box': {'xmin': 0, 'ymin': 1, 'xmax': 639, 'ymax': 473}},

+ {'score': 0.9988006353378296,

+ 'label': 'cat',

+ 'box': {'xmin': 13, 'ymin': 52, 'xmax': 314, 'ymax': 470}},

+ {'score': 0.9986783862113953,

+ 'label': 'cat',

+ 'box': {'xmin': 345, 'ymin': 23, 'xmax': 640, 'ymax': 368}}]

```

-In addition to the answer, the pretrained model used here returned its confidence score, along with the start position and end position of the answer in the tokenized sentence. You can learn more about the tasks supported by the `pipeline` API in [this tutorial](https://huggingface.co/docs/transformers/task_summary).

+Here we get a list of objects detected in the image, with a box surrounding the object and a confidence score. Here is the original image on the right, with the predictions displayed on the left:

+

+

+

+

+You can learn more about the tasks supported by the `pipeline` API in [this tutorial](https://huggingface.co/docs/transformers/task_summary).

To download and use any of the pretrained models on your given task, all it takes is three lines of code. Here is the PyTorch version:

```python

@@ -143,6 +167,7 @@ To download and use any of the pretrained models on your given task, all it take

>>> inputs = tokenizer("Hello world!", return_tensors="pt")

>>> outputs = model(**inputs)

```

+

And here is the equivalent code for TensorFlow:

```python

>>> from transformers import AutoTokenizer, TFAutoModel

@@ -246,6 +271,7 @@ Current number of checkpoints: ** (from Inria/Facebook/Sorbonne) released with the paper [CamemBERT: a Tasty French Language Model](https://arxiv.org/abs/1911.03894) by Louis Martin*, Benjamin Muller*, Pedro Javier Ortiz Suárez*, Yoann Dupont, Laurent Romary, Éric Villemonte de la Clergerie, Djamé Seddah and Benoît Sagot.

1. **[CANINE](https://huggingface.co/docs/transformers/model_doc/canine)** (from Google Research) released with the paper [CANINE: Pre-training an Efficient Tokenization-Free Encoder for Language Representation](https://arxiv.org/abs/2103.06874) by Jonathan H. Clark, Dan Garrette, Iulia Turc, John Wieting.

1. **[CLIP](https://huggingface.co/docs/transformers/model_doc/clip)** (from OpenAI) released with the paper [Learning Transferable Visual Models From Natural Language Supervision](https://arxiv.org/abs/2103.00020) by Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, Ilya Sutskever.

+1. **[CodeGen](https://huggingface.co/docs/transformers/model_doc/codegen)** (from Salesforce) released with the paper [A Conversational Paradigm for Program Synthesis](https://arxiv.org/abs/2203.13474) by Erik Nijkamp, Bo Pang, Hiroaki Hayashi, Lifu Tu, Huan Wang, Yingbo Zhou, Silvio Savarese, Caiming Xiong.

1. **[ConvBERT](https://huggingface.co/docs/transformers/model_doc/convbert)** (from YituTech) released with the paper [ConvBERT: Improving BERT with Span-based Dynamic Convolution](https://arxiv.org/abs/2008.02496) by Zihang Jiang, Weihao Yu, Daquan Zhou, Yunpeng Chen, Jiashi Feng, Shuicheng Yan.

1. **[ConvNeXT](https://huggingface.co/docs/transformers/model_doc/convnext)** (from Facebook AI) released with the paper [A ConvNet for the 2020s](https://arxiv.org/abs/2201.03545) by Zhuang Liu, Hanzi Mao, Chao-Yuan Wu, Christoph Feichtenhofer, Trevor Darrell, Saining Xie.

1. **[CPM](https://huggingface.co/docs/transformers/model_doc/cpm)** (from Tsinghua University) released with the paper [CPM: A Large-scale Generative Chinese Pre-trained Language Model](https://arxiv.org/abs/2012.00413) by Zhengyan Zhang, Xu Han, Hao Zhou, Pei Ke, Yuxian Gu, Deming Ye, Yujia Qin, Yusheng Su, Haozhe Ji, Jian Guan, Fanchao Qi, Xiaozhi Wang, Yanan Zheng, Guoyang Zeng, Huanqi Cao, Shengqi Chen, Daixuan Li, Zhenbo Sun, Zhiyuan Liu, Minlie Huang, Wentao Han, Jie Tang, Juanzi Li, Xiaoyan Zhu, Maosong Sun.

@@ -274,6 +300,7 @@ Current number of checkpoints: ** (from EleutherAI) released with the paper [GPT-NeoX-20B: An Open-Source Autoregressive Language Model](https://arxiv.org/abs/2204.06745) by Sid Black, Stella Biderman, Eric Hallahan, Quentin Anthony, Leo Gao, Laurence Golding, Horace He, Connor Leahy, Kyle McDonell, Jason Phang, Michael Pieler, USVSN Sai Prashanth, Shivanshu Purohit, Laria Reynolds, Jonathan Tow, Ben Wang, Samuel Weinbach

1. **[GPT-2](https://huggingface.co/docs/transformers/model_doc/gpt2)** (from OpenAI) released with the paper [Language Models are Unsupervised Multitask Learners](https://blog.openai.com/better-language-models/) by Alec Radford*, Jeffrey Wu*, Rewon Child, David Luan, Dario Amodei** and Ilya Sutskever**.

1. **[GPT-J](https://huggingface.co/docs/transformers/model_doc/gptj)** (from EleutherAI) released in the repository [kingoflolz/mesh-transformer-jax](https://github.com/kingoflolz/mesh-transformer-jax/) by Ben Wang and Aran Komatsuzaki.

+1. **[GroupViT](https://huggingface.co/docs/transformers/model_doc/groupvit)** (from UCSD, NVIDIA) released with the paper [GroupViT: Semantic Segmentation Emerges from Text Supervision](https://arxiv.org/abs/2202.11094) by Jiarui Xu, Shalini De Mello, Sifei Liu, Wonmin Byeon, Thomas Breuel, Jan Kautz, Xiaolong Wang.

1. **[Hubert](https://huggingface.co/docs/transformers/model_doc/hubert)** (from Facebook) released with the paper [HuBERT: Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units](https://arxiv.org/abs/2106.07447) by Wei-Ning Hsu, Benjamin Bolte, Yao-Hung Hubert Tsai, Kushal Lakhotia, Ruslan Salakhutdinov, Abdelrahman Mohamed.

1. **[I-BERT](https://huggingface.co/docs/transformers/model_doc/ibert)** (from Berkeley) released with the paper [I-BERT: Integer-only BERT Quantization](https://arxiv.org/abs/2101.01321) by Sehoon Kim, Amir Gholami, Zhewei Yao, Michael W. Mahoney, Kurt Keutzer.

1. **[ImageGPT](https://huggingface.co/docs/transformers/model_doc/imagegpt)** (from OpenAI) released with the paper [Generative Pretraining from Pixels](https://openai.com/blog/image-gpt/) by Mark Chen, Alec Radford, Rewon Child, Jeffrey Wu, Heewoo Jun, David Luan, Ilya Sutskever.

@@ -297,10 +324,15 @@ Current number of checkpoints: ** (from NVIDIA) released with the paper [Megatron-LM: Training Multi-Billion Parameter Language Models Using Model Parallelism](https://arxiv.org/abs/1909.08053) by Mohammad Shoeybi, Mostofa Patwary, Raul Puri, Patrick LeGresley, Jared Casper and Bryan Catanzaro.

1. **[mLUKE](https://huggingface.co/docs/transformers/model_doc/mluke)** (from Studio Ousia) released with the paper [mLUKE: The Power of Entity Representations in Multilingual Pretrained Language Models](https://arxiv.org/abs/2110.08151) by Ryokan Ri, Ikuya Yamada, and Yoshimasa Tsuruoka.

1. **[MobileBERT](https://huggingface.co/docs/transformers/model_doc/mobilebert)** (from CMU/Google Brain) released with the paper [MobileBERT: a Compact Task-Agnostic BERT for Resource-Limited Devices](https://arxiv.org/abs/2004.02984) by Zhiqing Sun, Hongkun Yu, Xiaodan Song, Renjie Liu, Yiming Yang, and Denny Zhou.

+1. **[MobileViT](https://huggingface.co/docs/transformers/model_doc/mobilevit)** (from Apple) released with the paper [MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer](https://arxiv.org/abs/2110.02178) by Sachin Mehta and Mohammad Rastegari.

1. **[MPNet](https://huggingface.co/docs/transformers/model_doc/mpnet)** (from Microsoft Research) released with the paper [MPNet: Masked and Permuted Pre-training for Language Understanding](https://arxiv.org/abs/2004.09297) by Kaitao Song, Xu Tan, Tao Qin, Jianfeng Lu, Tie-Yan Liu.

1. **[MT5](https://huggingface.co/docs/transformers/model_doc/mt5)** (from Google AI) released with the paper [mT5: A massively multilingual pre-trained text-to-text transformer](https://arxiv.org/abs/2010.11934) by Linting Xue, Noah Constant, Adam Roberts, Mihir Kale, Rami Al-Rfou, Aditya Siddhant, Aditya Barua, Colin Raffel.

+1. **[MVP](https://huggingface.co/docs/transformers/model_doc/mvp)** (from RUC AI Box) released with the paper [MVP: Multi-task Supervised Pre-training for Natural Language Generation](https://arxiv.org/abs/2206.12131) by Tianyi Tang, Junyi Li, Wayne Xin Zhao and Ji-Rong Wen.

+1. **[Nezha](https://huggingface.co/docs/transformers/model_doc/nezha)** (from Huawei Noah’s Ark Lab) released with the paper [NEZHA: Neural Contextualized Representation for Chinese Language Understanding](https://arxiv.org/abs/1909.00204) by Junqiu Wei, Xiaozhe Ren, Xiaoguang Li, Wenyong Huang, Yi Liao, Yasheng Wang, Jiashu Lin, Xin Jiang, Xiao Chen and Qun Liu.

+1. **[NLLB](https://huggingface.co/docs/transformers/model_doc/nllb)** (from Meta) released with the paper [No Language Left Behind: Scaling Human-Centered Machine Translation](https://arxiv.org/abs/2207.04672) by the NLLB team.

1. **[Nyströmformer](https://huggingface.co/docs/transformers/model_doc/nystromformer)** (from the University of Wisconsin - Madison) released with the paper [Nyströmformer: A Nyström-Based Algorithm for Approximating Self-Attention](https://arxiv.org/abs/2102.03902) by Yunyang Xiong, Zhanpeng Zeng, Rudrasis Chakraborty, Mingxing Tan, Glenn Fung, Yin Li, Vikas Singh.

1. **[OPT](https://huggingface.co/docs/transformers/master/model_doc/opt)** (from Meta AI) released with the paper [OPT: Open Pre-trained Transformer Language Models](https://arxiv.org/abs/2205.01068) by Susan Zhang, Stephen Roller, Naman Goyal, Mikel Artetxe, Moya Chen, Shuohui Chen et al.

+1. **[OWL-ViT](https://huggingface.co/docs/transformers/model_doc/owlvit)** (from Google AI) released with the paper [Simple Open-Vocabulary Object Detection with Vision Transformers](https://arxiv.org/abs/2205.06230) by Matthias Minderer, Alexey Gritsenko, Austin Stone, Maxim Neumann, Dirk Weissenborn, Alexey Dosovitskiy, Aravindh Mahendran, Anurag Arnab, Mostafa Dehghani, Zhuoran Shen, Xiao Wang, Xiaohua Zhai, Thomas Kipf, and Neil Houlsby.

1. **[Pegasus](https://huggingface.co/docs/transformers/model_doc/pegasus)** (from Google) released with the paper [PEGASUS: Pre-training with Extracted Gap-sentences for Abstractive Summarization](https://arxiv.org/abs/1912.08777) by Jingqing Zhang, Yao Zhao, Mohammad Saleh and Peter J. Liu.

1. **[Perceiver IO](https://huggingface.co/docs/transformers/model_doc/perceiver)** (from Deepmind) released with the paper [Perceiver IO: A General Architecture for Structured Inputs & Outputs](https://arxiv.org/abs/2107.14795) by Andrew Jaegle, Sebastian Borgeaud, Jean-Baptiste Alayrac, Carl Doersch, Catalin Ionescu, David Ding, Skanda Koppula, Daniel Zoran, Andrew Brock, Evan Shelhamer, Olivier Hénaff, Matthew M. Botvinick, Andrew Zisserman, Oriol Vinyals, João Carreira.

1. **[PhoBERT](https://huggingface.co/docs/transformers/model_doc/phobert)** (from VinAI Research) released with the paper [PhoBERT: Pre-trained language models for Vietnamese](https://www.aclweb.org/anthology/2020.findings-emnlp.92/) by Dat Quoc Nguyen and Anh Tuan Nguyen.

@@ -324,6 +356,7 @@ Current number of checkpoints: ** (from Tel Aviv University), released together with the paper [Few-Shot Question Answering by Pretraining Span Selection](https://arxiv.org/abs/2101.00438) by Ori Ram, Yuval Kirstain, Jonathan Berant, Amir Globerson, Omer Levy.

1. **[SqueezeBERT](https://huggingface.co/docs/transformers/model_doc/squeezebert)** (from Berkeley) released with the paper [SqueezeBERT: What can computer vision teach NLP about efficient neural networks?](https://arxiv.org/abs/2006.11316) by Forrest N. Iandola, Albert E. Shaw, Ravi Krishna, and Kurt W. Keutzer.

1. **[Swin Transformer](https://huggingface.co/docs/transformers/model_doc/swin)** (from Microsoft) released with the paper [Swin Transformer: Hierarchical Vision Transformer using Shifted Windows](https://arxiv.org/abs/2103.14030) by Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, Baining Guo.

+1. **[Swin Transformer V2](https://huggingface.co/docs/transformers/main/model_doc/swinv2)** (from Microsoft) released with the paper [Swin Transformer V2: Scaling Up Capacity and Resolution](https://arxiv.org/abs/2111.09883) by Ze Liu, Han Hu, Yutong Lin, Zhuliang Yao, Zhenda Xie, Yixuan Wei, Jia Ning, Yue Cao, Zheng Zhang, Li Dong, Furu Wei, Baining Guo.

1. **[T5](https://huggingface.co/docs/transformers/model_doc/t5)** (from Google AI) released with the paper [Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer](https://arxiv.org/abs/1910.10683) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[T5v1.1](https://huggingface.co/docs/transformers/model_doc/t5v1.1)** (from Google AI) released in the repository [google-research/text-to-text-transfer-transformer](https://github.com/google-research/text-to-text-transfer-transformer/blob/main/released_checkpoints.md#t511) by Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu.

1. **[TAPAS](https://huggingface.co/docs/transformers/model_doc/tapas)** (from Google AI) released with the paper [TAPAS: Weakly Supervised Table Parsing via Pre-training](https://arxiv.org/abs/2004.02349) by Jonathan Herzig, Paweł Krzysztof Nowak, Thomas Müller, Francesco Piccinno and Julian Martin Eisenschlos.

@@ -331,10 +364,11 @@ Current number of checkpoints: ** (from the University of California at Berkeley) released with the paper [Offline Reinforcement Learning as One Big Sequence Modeling Problem](https://arxiv.org/abs/2106.02039) by Michael Janner, Qiyang Li, Sergey Levine

1. **[Transformer-XL](https://huggingface.co/docs/transformers/model_doc/transfo-xl)** (from Google/CMU) released with the paper [Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context](https://arxiv.org/abs/1901.02860) by Zihang Dai*, Zhilin Yang*, Yiming Yang, Jaime Carbonell, Quoc V. Le, Ruslan Salakhutdinov.

1. **[TrOCR](https://huggingface.co/docs/transformers/model_doc/trocr)** (from Microsoft), released together with the paper [TrOCR: Transformer-based Optical Character Recognition with Pre-trained Models](https://arxiv.org/abs/2109.10282) by Minghao Li, Tengchao Lv, Lei Cui, Yijuan Lu, Dinei Florencio, Cha Zhang, Zhoujun Li, Furu Wei.

-1. **[UL2](https://huggingface.co/docs/transformers/main/model_doc/ul2)** (from Google Research) released with the paper [Unifying Language Learning Paradigms](https://arxiv.org/abs/2205.05131v1) by Yi Tay, Mostafa Dehghani, Vinh Q. Tran, Xavier Garcia, Dara Bahri, Tal Schuster, Huaixiu Steven Zheng, Neil Houlsby, Donald Metzler

+1. **[UL2](https://huggingface.co/docs/transformers/model_doc/ul2)** (from Google Research) released with the paper [Unifying Language Learning Paradigms](https://arxiv.org/abs/2205.05131v1) by Yi Tay, Mostafa Dehghani, Vinh Q. Tran, Xavier Garcia, Dara Bahri, Tal Schuster, Huaixiu Steven Zheng, Neil Houlsby, Donald Metzler

1. **[UniSpeech](https://huggingface.co/docs/transformers/model_doc/unispeech)** (from Microsoft Research) released with the paper [UniSpeech: Unified Speech Representation Learning with Labeled and Unlabeled Data](https://arxiv.org/abs/2101.07597) by Chengyi Wang, Yu Wu, Yao Qian, Kenichi Kumatani, Shujie Liu, Furu Wei, Michael Zeng, Xuedong Huang.

1. **[UniSpeechSat](https://huggingface.co/docs/transformers/model_doc/unispeech-sat)** (from Microsoft Research) released with the paper [UNISPEECH-SAT: UNIVERSAL SPEECH REPRESENTATION LEARNING WITH SPEAKER AWARE PRE-TRAINING](https://arxiv.org/abs/2110.05752) by Sanyuan Chen, Yu Wu, Chengyi Wang, Zhengyang Chen, Zhuo Chen, Shujie Liu, Jian Wu, Yao Qian, Furu Wei, Jinyu Li, Xiangzhan Yu.

1. **[VAN](https://huggingface.co/docs/transformers/model_doc/van)** (from Tsinghua University and Nankai University) released with the paper [Visual Attention Network](https://arxiv.org/abs/2202.09741) by Meng-Hao Guo, Cheng-Ze Lu, Zheng-Ning Liu, Ming-Ming Cheng, Shi-Min Hu.

+1. **[VideoMAE](https://huggingface.co/docs/transformers/main/model_doc/videomae)** (from Multimedia Computing Group, Nanjing University) released with the paper [VideoMAE: Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training](https://arxiv.org/abs/2203.12602) by Zhan Tong, Yibing Song, Jue Wang, Limin Wang.

1. **[ViLT](https://huggingface.co/docs/transformers/model_doc/vilt)** (from NAVER AI Lab/Kakao Enterprise/Kakao Brain) released with the paper [ViLT: Vision-and-Language Transformer Without Convolution or Region Supervision](https://arxiv.org/abs/2102.03334) by Wonjae Kim, Bokyung Son, Ildoo Kim.

1. **[Vision Transformer (ViT)](https://huggingface.co/docs/transformers/model_doc/vit)** (from Google AI) released with the paper [An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale](https://arxiv.org/abs/2010.11929) by Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, Jakob Uszkoreit, Neil Houlsby.

1. **[VisualBERT](https://huggingface.co/docs/transformers/model_doc/visual_bert)** (from UCLA NLP) released with the paper [VisualBERT: A Simple and Performant Baseline for Vision and Language](https://arxiv.org/pdf/1908.03557) by Liunian Harold Li, Mark Yatskar, Da Yin, Cho-Jui Hsieh, Kai-Wei Chang.

diff --git a/README_ko.md b/README_ko.md

index f977b1ecc3da..c63fdca749da 100644

--- a/README_ko.md

+++ b/README_ko.md

@@ -227,6 +227,7 @@ Flax, PyTorch, TensorFlow 설치 페이지에서 이들을 conda로 설치하는

1. **[CamemBERT](https://huggingface.co/docs/transformers/model_doc/camembert)** (from Inria/Facebook/Sorbonne) released with the paper [CamemBERT: a Tasty French Language Model](https://arxiv.org/abs/1911.03894) by Louis Martin*, Benjamin Muller*, Pedro Javier Ortiz Suárez*, Yoann Dupont, Laurent Romary, Éric Villemonte de la Clergerie, Djamé Seddah and Benoît Sagot.

1. **[CANINE](https://huggingface.co/docs/transformers/model_doc/canine)** (from Google Research) released with the paper [CANINE: Pre-training an Efficient Tokenization-Free Encoder for Language Representation](https://arxiv.org/abs/2103.06874) by Jonathan H. Clark, Dan Garrette, Iulia Turc, John Wieting.

1. **[CLIP](https://huggingface.co/docs/transformers/model_doc/clip)** (from OpenAI) released with the paper [Learning Transferable Visual Models From Natural Language Supervision](https://arxiv.org/abs/2103.00020) by Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, Ilya Sutskever.

+1. **[CodeGen](https://huggingface.co/docs/transformers/model_doc/codegen)** (from Salesforce) released with the paper [A Conversational Paradigm for Program Synthesis](https://arxiv.org/abs/2203.13474) by Erik Nijkamp, Bo Pang, Hiroaki Hayashi, Lifu Tu, Huan Wang, Yingbo Zhou, Silvio Savarese, Caiming Xiong.

1. **[ConvBERT](https://huggingface.co/docs/transformers/model_doc/convbert)** (from YituTech) released with the paper [ConvBERT: Improving BERT with Span-based Dynamic Convolution](https://arxiv.org/abs/2008.02496) by Zihang Jiang, Weihao Yu, Daquan Zhou, Yunpeng Chen, Jiashi Feng, Shuicheng Yan.

1. **[ConvNeXT](https://huggingface.co/docs/transformers/model_doc/convnext)** (from Facebook AI) released with the paper [A ConvNet for the 2020s](https://arxiv.org/abs/2201.03545) by Zhuang Liu, Hanzi Mao, Chao-Yuan Wu, Christoph Feichtenhofer, Trevor Darrell, Saining Xie.

1. **[CPM](https://huggingface.co/docs/transformers/model_doc/cpm)** (from Tsinghua University) released with the paper [CPM: A Large-scale Generative Chinese Pre-trained Language Model](https://arxiv.org/abs/2012.00413) by Zhengyan Zhang, Xu Han, Hao Zhou, Pei Ke, Yuxian Gu, Deming Ye, Yujia Qin, Yusheng Su, Haozhe Ji, Jian Guan, Fanchao Qi, Xiaozhi Wang, Yanan Zheng, Guoyang Zeng, Huanqi Cao, Shengqi Chen, Daixuan Li, Zhenbo Sun, Zhiyuan Liu, Minlie Huang, Wentao Han, Jie Tang, Juanzi Li, Xiaoyan Zhu, Maosong Sun.

@@ -255,6 +256,7 @@ Flax, PyTorch, TensorFlow 설치 페이지에서 이들을 conda로 설치하는

1. **[GPT NeoX](https://huggingface.co/docs/transformers/model_doc/gpt_neox)** (from EleutherAI) released with the paper [GPT-NeoX-20B: An Open-Source Autoregressive Language Model](https://arxiv.org/abs/2204.06745) by Sid Black, Stella Biderman, Eric Hallahan, Quentin Anthony, Leo Gao, Laurence Golding, Horace He, Connor Leahy, Kyle McDonell, Jason Phang, Michael Pieler, USVSN Sai Prashanth, Shivanshu Purohit, Laria Reynolds, Jonathan Tow, Ben Wang, Samuel Weinbach

1. **[GPT-2](https://huggingface.co/docs/transformers/model_doc/gpt2)** (from OpenAI) released with the paper [Language Models are Unsupervised Multitask Learners](https://blog.openai.com/better-language-models/) by Alec Radford*, Jeffrey Wu*, Rewon Child, David Luan, Dario Amodei** and Ilya Sutskever**.

1. **[GPT-J](https://huggingface.co/docs/transformers/model_doc/gptj)** (from EleutherAI) released in the repository [kingoflolz/mesh-transformer-jax](https://github.com/kingoflolz/mesh-transformer-jax/) by Ben Wang and Aran Komatsuzaki.

+1. **[GroupViT](https://huggingface.co/docs/transformers/model_doc/groupvit)** (from UCSD, NVIDIA) released with the paper [GroupViT: Semantic Segmentation Emerges from Text Supervision](https://arxiv.org/abs/2202.11094) by Jiarui Xu, Shalini De Mello, Sifei Liu, Wonmin Byeon, Thomas Breuel, Jan Kautz, Xiaolong Wang.

1. **[Hubert](https://huggingface.co/docs/transformers/model_doc/hubert)** (from Facebook) released with the paper [HuBERT: Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units](https://arxiv.org/abs/2106.07447) by Wei-Ning Hsu, Benjamin Bolte, Yao-Hung Hubert Tsai, Kushal Lakhotia, Ruslan Salakhutdinov, Abdelrahman Mohamed.

1. **[I-BERT](https://huggingface.co/docs/transformers/model_doc/ibert)** (from Berkeley) released with the paper [I-BERT: Integer-only BERT Quantization](https://arxiv.org/abs/2101.01321) by Sehoon Kim, Amir Gholami, Zhewei Yao, Michael W. Mahoney, Kurt Keutzer.

1. **[ImageGPT](https://huggingface.co/docs/transformers/model_doc/imagegpt)** (from OpenAI) released with the paper [Generative Pretraining from Pixels](https://openai.com/blog/image-gpt/) by Mark Chen, Alec Radford, Rewon Child, Jeffrey Wu, Heewoo Jun, David Luan, Ilya Sutskever.

@@ -278,10 +280,15 @@ Flax, PyTorch, TensorFlow 설치 페이지에서 이들을 conda로 설치하는

1. **[Megatron-GPT2](https://huggingface.co/docs/transformers/model_doc/megatron_gpt2)** (from NVIDIA) released with the paper [Megatron-LM: Training Multi-Billion Parameter Language Models Using Model Parallelism](https://arxiv.org/abs/1909.08053) by Mohammad Shoeybi, Mostofa Patwary, Raul Puri, Patrick LeGresley, Jared Casper and Bryan Catanzaro.

1. **[mLUKE](https://huggingface.co/docs/transformers/model_doc/mluke)** (from Studio Ousia) released with the paper [mLUKE: The Power of Entity Representations in Multilingual Pretrained Language Models](https://arxiv.org/abs/2110.08151) by Ryokan Ri, Ikuya Yamada, and Yoshimasa Tsuruoka.

1. **[MobileBERT](https://huggingface.co/docs/transformers/model_doc/mobilebert)** (from CMU/Google Brain) released with the paper [MobileBERT: a Compact Task-Agnostic BERT for Resource-Limited Devices](https://arxiv.org/abs/2004.02984) by Zhiqing Sun, Hongkun Yu, Xiaodan Song, Renjie Liu, Yiming Yang, and Denny Zhou.

+1. **[MobileViT](https://huggingface.co/docs/transformers/model_doc/mobilevit)** (from Apple) released with the paper [MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer](https://arxiv.org/abs/2110.02178) by Sachin Mehta and Mohammad Rastegari.

1. **[MPNet](https://huggingface.co/docs/transformers/model_doc/mpnet)** (from Microsoft Research) released with the paper [MPNet: Masked and Permuted Pre-training for Language Understanding](https://arxiv.org/abs/2004.09297) by Kaitao Song, Xu Tan, Tao Qin, Jianfeng Lu, Tie-Yan Liu.