Real-time Simultaneous Multi-Object 3D Shape Reconstruction, 6DoF Pose Estimation and Dense Grasp Prediction


Shubham Agrawal, Nikhil Chavan-Dafle, Isaac Kasahara, Selim Engin, Jinwook Huh, Volkan Isler

Samsung AI Center, New York

Install the Python dependencies:

conda create --prefix ./pyvenv python=3.8

conda activate ./pyvenv

pip install -r requirements.txt

pip install git+https://github.com/facebookresearch/pytorch3d.git@stable
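To confirm that the install succeeded before running anything else, a small check like the one below can help. It is a minimal sketch, not part of the repository: the helper name check_dependencies is hypothetical, and the module list (torch, pytorch3d) is an assumption based on the install steps above. It uses importlib.util.find_spec, which locates a module without importing it.

```python
import importlib.util
import sys


def check_dependencies(modules):
    """Map each top-level module name to True if it can be found on sys.path.

    Hypothetical helper: find_spec locates the module without importing it,
    so a broken package can still be reported as found.
    """
    return {name: importlib.util.find_spec(name) is not None for name in modules}


if __name__ == "__main__":
    # Assumed module names, taken from the install commands above; extend as needed.
    required = ["torch", "pytorch3d"]
    for name, ok in check_dependencies(required).items():
        print(f"{name}: {'found' if ok else 'MISSING'}")
    print(f"python: {sys.version.split()[0]}")
```

Running it from inside the activated ./pyvenv environment should report both modules as found.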

Set up the environment by running the following commands from the project root:

export PYTHONPATH=${PYTHONPATH}:${PWD}

export WANDB_MODE="disabled"
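When launching from a notebook or another Python entry point instead of a shell, the same setup can be mirrored in-process. This is a sketch under the assumption that the scripts only need the project root importable and the WANDB_MODE variable set; the helper setup_environment is hypothetical. The variable must be set before wandb.init() runs for wandb to pick it up.

```python
import os
import sys


def setup_environment(project_root):
    """Mirror the shell setup from inside Python (hypothetical helper).

    - Puts project_root on sys.path, the in-process analogue of PYTHONPATH.
    - Sets WANDB_MODE=disabled unless the caller already set it.
    """
    root = os.path.abspath(project_root)
    if root not in sys.path:
        sys.path.insert(0, root)
    # setdefault keeps any value the user exported explicitly.
    os.environ.setdefault("WANDB_MODE", "disabled")
    return root
```

Using setdefault rather than plain assignment means an explicit WANDB_MODE from the shell still wins.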

Optionally, install gifsicle to enable HTML GIF visualizations.
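A quick way to check whether gifsicle is visible to Python is shown below. How the project invokes gifsicle internally is not documented here, so optimize_gif is a hypothetical helper that only illustrates a standard gifsicle call (-O3 applies its strongest lossless optimization, -o names the output file).

```python
import shutil
import subprocess


def gifsicle_available():
    """Return True if the gifsicle binary is on PATH."""
    return shutil.which("gifsicle") is not None


def optimize_gif(src, dst, level=3):
    """Losslessly shrink a GIF with gifsicle -O<level> (hypothetical helper).

    Returns False without doing anything if gifsicle is not installed.
    """
    if not gifsicle_available():
        return False
    subprocess.run(["gifsicle", f"-O{level}", src, "-o", dst], check=True)
    return True
```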

Download the pre-trained checkpoints and unzip them at the project root. Run the demo using the following script:

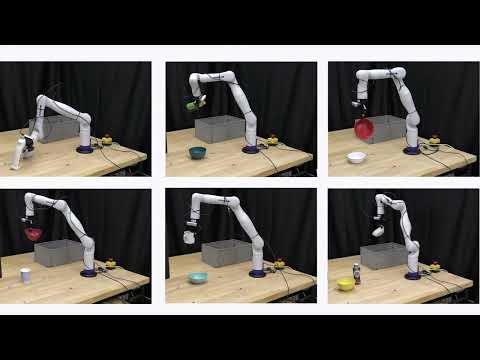

python scripts/demo.pyThis script loads the pretrained checkpoints and shows the results on a small-subset of the NOCS-Real-Test set. For every input, it first shows the predicted shapes and then it shows the predicted grasps, as shown below.
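The demo expects the checkpoints to be unzipped at the project root. If you prefer to do that step from Python, a minimal sketch with the standard zipfile module follows; the archive name checkpoints.zip in the usage line is a hypothetical placeholder for whatever file you downloaded.

```python
import zipfile
from pathlib import Path


def unzip_checkpoints(archive, project_root="."):
    """Extract a checkpoint archive into the project root (hypothetical helper).

    Returns the paths of the extracted entries.
    """
    root = Path(project_root).resolve()
    with zipfile.ZipFile(archive) as zf:
        names = zf.namelist()
        zf.extractall(root)
    return [root / name for name in names]
```

Usage: unzip_checkpoints("checkpoints.zip"), then run python scripts/demo.py from the same directory.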

To run on your own data (an RGB image, a depth image and the camera intrinsics camera_K), refer to the SceneGraspModel class.
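The exact input format of SceneGraspModel is not spelled out here, so the sketch below only illustrates what camera_K typically encodes. It assumes camera_K is a standard 3x3 pinhole intrinsics matrix and that depth is an HxW array in consistent metric units; make_intrinsics and backproject_depth are hypothetical helpers, not part of the repository. Back-projecting depth with K is a quick sanity check that your intrinsics and depth units are consistent before feeding them to the model.

```python
import numpy as np


def make_intrinsics(fx, fy, cx, cy):
    """Build a 3x3 pinhole intrinsics matrix (assumed layout of camera_K)."""
    return np.array([[fx, 0.0, cx],
                     [0.0, fy, cy],
                     [0.0, 0.0, 1.0]])


def backproject_depth(depth, K):
    """Lift an HxW depth map to an (N, 3) point cloud in the camera frame.

    Pixels with depth <= 0 are treated as invalid and dropped.
    """
    h, w = depth.shape
    v, u = np.indices((h, w))  # v: row (image y), u: column (image x)
    z = depth.astype(np.float64)
    x = (u - K[0, 2]) * z / K[0, 0]
    y = (v - K[1, 2]) * z / K[1, 1]
    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return points[points[:, 2] > 0]
```

If the resulting point cloud looks stretched or is off by a factor of 1000, the intrinsics or the depth units (millimeters versus meters) are the usual suspects.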

Training consists of two major steps:

- Data generation and training of the scale-shape-grasp-auto-encoder. See instructions here.

- Data generation and training of SceneGraspNet. See instructions here.

The SceneGraspNet code is adapted from the CenterSnap and object-deformnet implementations. We sincerely thank the authors for providing their implementations.

@inproceedings{agrawal2023realtime,
  title={Real-time Simultaneous Multi-Object 3D Shape Reconstruction, 6DoF Pose Estimation and Dense Grasp Prediction},
  author={Shubham Agrawal and Nikhil Chavan-Dafle and Isaac Kasahara and Selim Engin and Jinwook Huh and Volkan Isler},
  booktitle={{IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}},
  year={2023}
}