
Performance

Performance is also a top priority with Dynomite.

In our initial tests, we measured how long it took for a key/value pair to become available on a replica in another region. We wrote 1k key/value pairs to Dynomite in one region, then polled 20 random keys in the other region. The value for each key is simply the timestamp at which the write started; the client in the other region reads back those timestamps and computes the durations. We repeated the experiment several times and took the average, which gives a rough idea of the replication speed.
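The measurement loop can be sketched as follows. This is a minimal, self-contained simulation, not the harness we used: `FakeClock` and `FakeReplicatedStore` are hypothetical stand-ins for real time and for two Dynomite regions (a real client would issue a Redis `SET` against one region and a `GET` against the other), and the replication `delay` is an assumed parameter. Like the real test, each duration is measured at the client, so polling granularity can only make the result larger than the true replication time.

```python
import random
from statistics import mean
from typing import Dict, Optional, Tuple


class FakeClock:
    """Manually advanced clock so the sketch is deterministic (hypothetical)."""

    def __init__(self) -> None:
        self.t = 0.0

    def now(self) -> float:
        return self.t

    def sleep(self, seconds: float) -> None:
        self.t += seconds


class FakeReplicatedStore:
    """Hypothetical stand-in for two regions: a value written locally becomes
    readable in the remote region `delay` seconds later."""

    def __init__(self, clock: FakeClock, delay: float) -> None:
        self.clock = clock
        self.delay = delay
        self.data: Dict[str, Tuple[str, float]] = {}

    def set_local(self, key: str, value: str) -> None:
        self.data[key] = (value, self.clock.now())

    def get_remote(self, key: str) -> Optional[str]:
        entry = self.data.get(key)
        if entry is None or self.clock.now() - entry[1] < self.delay:
            return None  # not replicated to the remote region yet
        return entry[0]


def replication_latency(store, clock, n_keys=1000, n_samples=20,
                        poll_interval=0.001, rng=None) -> float:
    """Write n_keys timestamps in one region, poll n_samples random keys in
    the other, and return the mean write-to-read duration in seconds."""
    rng = rng or random.Random()
    keys = [f"ts:{i}" for i in range(n_keys)]
    for key in keys:
        # The value is the time at which the write started.
        store.set_local(key, repr(clock.now()))
    durations = []
    for key in rng.sample(keys, n_samples):
        value = store.get_remote(key)
        while value is None:  # keep polling until the replica has the key
            clock.sleep(poll_interval)
            value = store.get_remote(key)
        durations.append(clock.now() - float(value))
    return mean(durations)
```

For example, `replication_latency(FakeReplicatedStore(clock, delay=0.085), clock)` with a fresh `FakeClock()` returns a value close to the simulated 85 ms delay. In this sequential sketch, keys checked after the first one are already visible, so their durations reflect when the client got to them rather than when they replicated; that is one reason client-side numbers overstate the real latency.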

We expect this latency to remain roughly constant as we add code-path optimizations and enhancements to the replication strategy itself: optimizations should improve speed, while new features may add latency.

Result:

Over 5 iterations of this experiment, the average replication duration was around 85 ms. Note that these durations are measured at the client layer, so the actual replication latency should be smaller.

One of our top priorities at Netflix is to be able to scale a data store linearly with growing traffic demands. We use a symmetric deployment model in which Dynomite is deployed in every AWS zone and sized equally, i.e. with the same number of nodes in every zone. We want to be able to scale Dynomite in every zone simultaneously and linearly as traffic in that region grows.

We conducted a simple load test to demonstrate this capability of Dynomite. Details:

- The server instance type was r3.xlarge. These instances are well suited to Dynomite workloads, i.e. they offer a good balance between memory and network.

- The replication factor was set to 3. We did this by deploying Dynomite in 3 zones in us-east-1, with each zone having the same number of servers and hence the same number of tokens.

- The client fleet used m2.2xlarge instances, which are also typical for an application here at Netflix.

- The demo application used a simple key/value workload for reads and writes, i.e. the Redis GET and SET APIs.

- The payload size was 1 KB.
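Per the setup above, every zone holds the same number of servers and tokens, so each zone carries a complete copy of the token ring and 3 zones yield 3 replicas of every key. The sketch below is a simplified model of that placement, not Dynomite's actual code: the 32-bit ring, the MD5-based `key_hash`, the evenly spaced tokens, and the "first token at or above the hash" ownership rule are all assumptions, and `replicas` and the zone names are hypothetical.

```python
import bisect
import hashlib
from typing import Dict, List

RING_SIZE = 2**32  # assumption: 32-bit token space


def key_hash(key: str) -> int:
    """Stand-in hash (MD5 truncated to 32 bits); Dynomite's own hash may differ."""
    return int.from_bytes(hashlib.md5(key.encode()).digest()[:4], "big")


def even_tokens(nodes_per_zone: int) -> List[int]:
    """Evenly spaced tokens so every node in a zone owns an equal slice."""
    step = RING_SIZE // nodes_per_zone
    return [i * step for i in range(nodes_per_zone)]


def owner(tokens: List[int], h: int) -> int:
    """Index of the node owning hash h: the first token >= h, wrapping to 0."""
    i = bisect.bisect_left(tokens, h)
    return i % len(tokens)


def replicas(key: str, zones: List[str], nodes_per_zone: int) -> Dict[str, str]:
    """Every zone uses identical tokens, so a key lands on the same node
    index in each zone, giving one replica per zone."""
    tokens = even_tokens(nodes_per_zone)
    idx = owner(tokens, key_hash(key))
    return {zone: f"{zone}-node{idx}" for zone in zones}
```

With `replicas("user:42", ["zone-a", "zone-b", "zone-c"], 2)`, the key maps to one node in each of the three zones, which is the replication factor of 3 used in the test. Keeping the zones symmetric is what makes this work: if one zone had a different number of nodes, its token ranges would no longer line up with the others.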

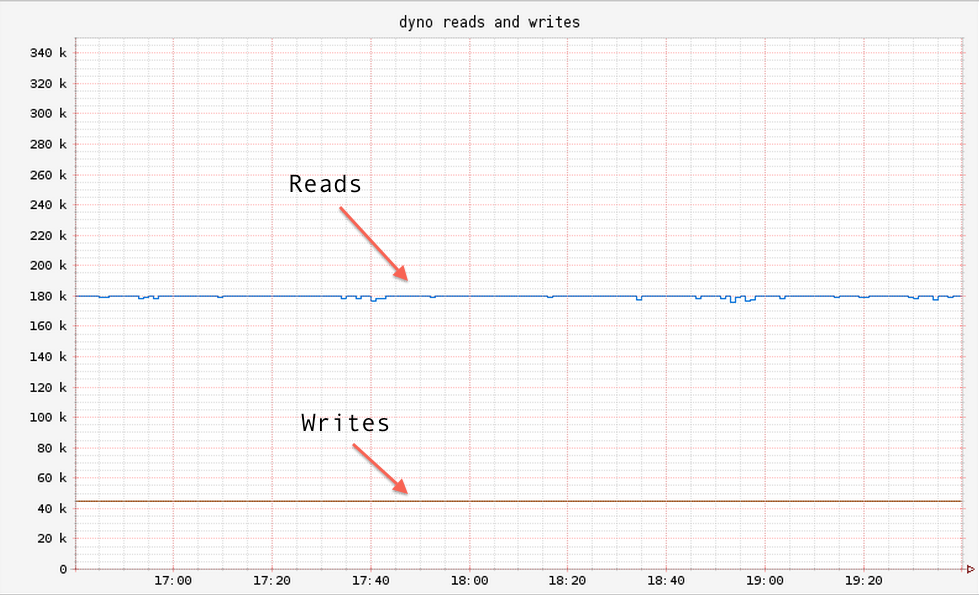

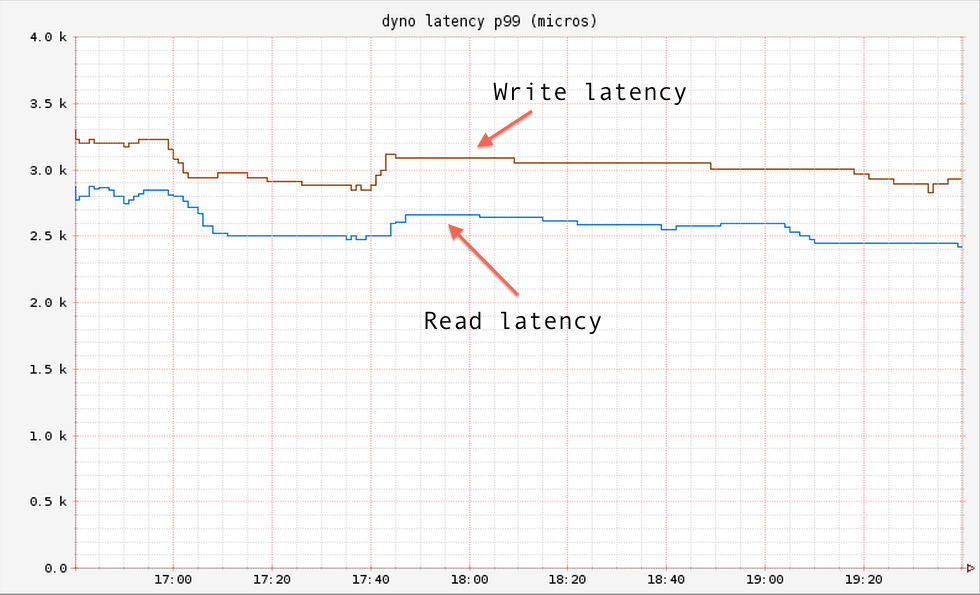

We set up a Dynomite cluster of 6 nodes (i.e. 2 per zone). We observed a throughput of about 80k requests per second across the client fleet, while keeping the 99th-percentile latency in the single-digit millisecond range (~4 ms).

| RPS | Latency (avg) | Latency (99th percentile) |
|---|---|---|
| [[/images/linear_scale/linear_scale_12_rps.png | align=left]] | bar |
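As a rough sizing aid, the 6-node result can be turned into a per-node figure and a projection for a symmetric deployment. This sketch assumes throughput grows exactly linearly with node count, which is the property the load test sets out to demonstrate, not something a single data point proves. It also ignores that, with a replication factor of 3, each write lands on a node in every zone. The function names are hypothetical.

```python
def per_node_rps(total_rps: float, zones: int, nodes_per_zone: int) -> float:
    """Client-observed throughput spread evenly over every node in the cluster."""
    return total_rps / (zones * nodes_per_zone)


def scaled_nodes_per_zone(measured_rps: int, nodes_per_zone: int, target_rps: int) -> int:
    """Nodes needed in EACH zone to serve target_rps, assuming throughput grows
    linearly with node count (the property under test, not a guarantee)."""
    # Integer ceiling of nodes_per_zone * target_rps / measured_rps.
    return -((-nodes_per_zone * target_rps) // measured_rps)


zones, nodes = 3, 2
print(per_node_rps(80_000, zones, nodes))                # roughly 13.3k requests/s per node
print(scaled_nodes_per_zone(80_000, nodes, 160_000))    # 4 nodes per zone, 12 in total
```

Because the deployment is symmetric, the projection is expressed per zone: doubling the target throughput doubles the node count in every zone at once.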