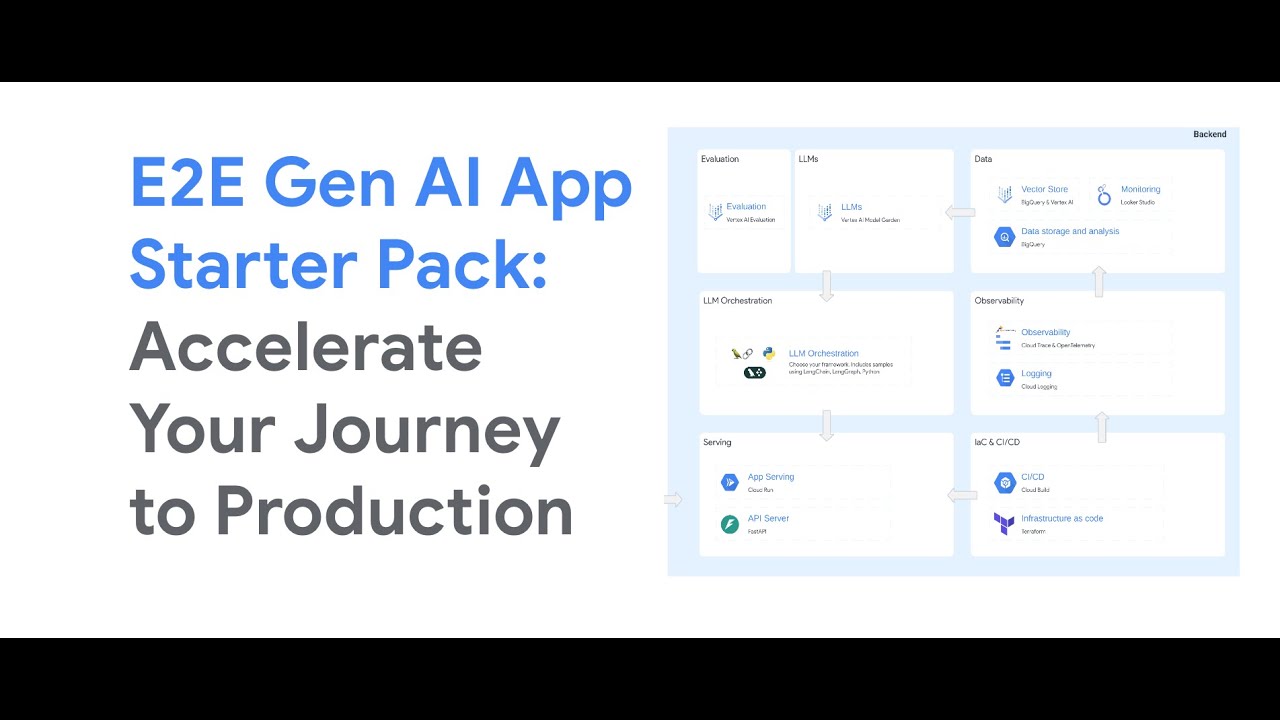

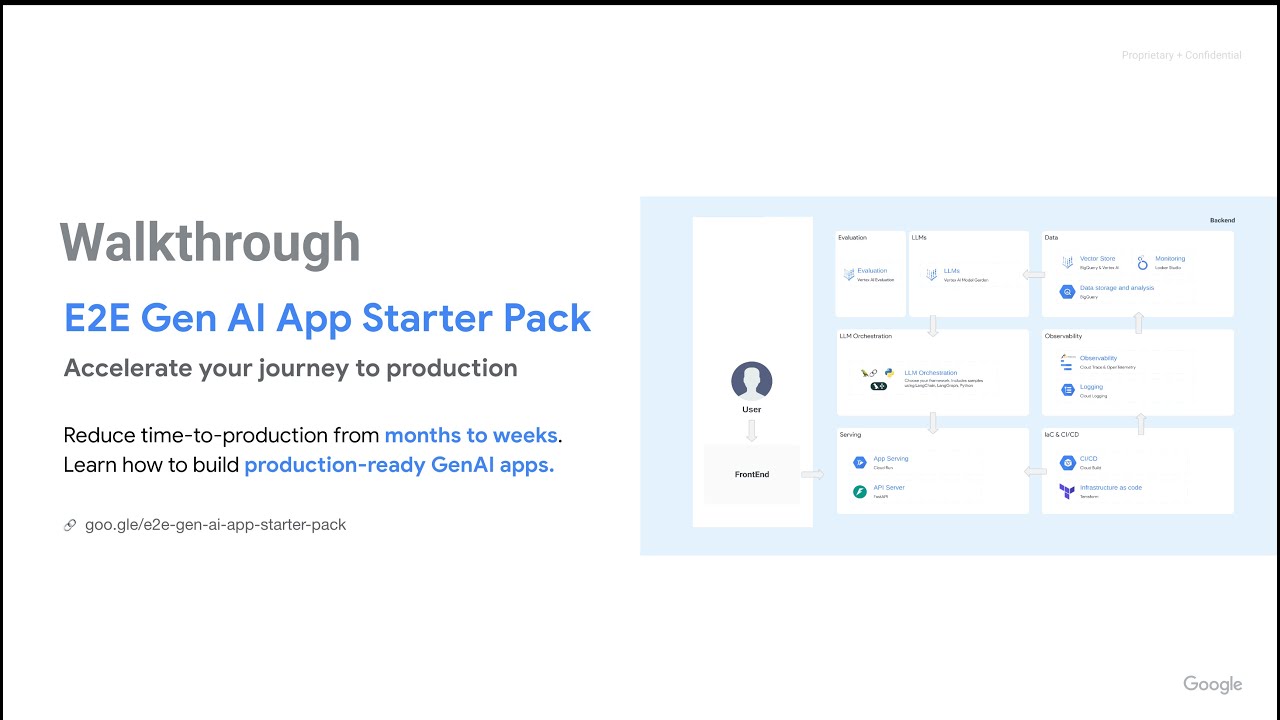

From Prototype to Production in Minutes.

| 📧 Contact | [email protected] |

| 1-Minute Video Overview |  |

| 20-Minute Video Walkthrough |  |

This repository provides a template starter pack for building a Generative AI application on Google Cloud.

We provide a comprehensive set of resources to guide you through the entire development process, from prototype to production.

This is a suggested approach, and you can adapt it to fit your specific needs and preferences. There are multiple ways to build Gen AI applications on Google Cloud, and this template serves as a starting point and example.

This starter pack covers all aspects of Generative AI app development, from prototyping and evaluation to deployment and monitoring.

A prod-ready FastAPI server

Ready-to-use AI patterns

| Description | Visualization |
|---|---|
| Start with a variety of common patterns: this repository offers examples including a basic conversational chain, a production-ready RAG (Retrieval-Augmented Generation) chain developed with Python, and a LangGraph agent implementation. Use them in the application by changing one line of code. See the Readme for more details. |  |

Integration with Vertex AI Evaluation and Experiments

| Description | Visualization |
|---|---|
| The repository showcases how to evaluate Generative AI applications using tools like Vertex AI rapid eval SDK and Vertex AI Experiments. |  |
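To make the evaluation idea concrete, here is a minimal sketch of an offline evaluation loop. It does not use the Vertex AI SDK: the `exact_match` metric, the `evaluate` helper, and the dataset keys `prompt` and `reference` are all hypothetical stand-ins chosen for illustration, and the chain is assumed to be a plain callable that maps a prompt string to a response string.

```python
def exact_match(prediction, reference):
    """Score 1.0 when the texts match, ignoring case and outer whitespace."""
    return 1.0 if prediction.strip().lower() == reference.strip().lower() else 0.0


def evaluate(chain, dataset, metric=exact_match):
    """Run `chain` on every example and collect per-row and mean scores.

    Assumption: each example is a dict with "prompt" and "reference" keys.
    """
    rows = []
    for example in dataset:
        prediction = chain(example["prompt"])
        rows.append(
            {
                "prompt": example["prompt"],
                "prediction": prediction,
                "score": metric(prediction, example["reference"]),
            }
        )
    mean_score = sum(row["score"] for row in rows) / len(rows) if rows else 0.0
    return {"mean_score": mean_score, "rows": rows}


def toy_chain(prompt):
    """Hypothetical chain used only to exercise the loop."""
    return "Paris" if "France" in prompt else "unknown"
```

A managed evaluation service would replace the metric and add experiment tracking, but the shape of the loop (dataset in, per-example scores and an aggregate out) stays the same.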

Unlock Insights with Google Cloud Native Tracing & Logging

Monitor Responses from the application

| Description | Visualization |
|---|---|
| Monitor your Generative AI application's performance. We provide a Looker Studio dashboard to monitor application conversation statistics and user feedback. |  |
| We can also drill down to individual conversations and view the messages exchanged. |  |
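As an illustration of the kind of per-conversation statistics such a dashboard might show, the sketch below aggregates user feedback by session. The record layout (`session_id` and `score` keys) and the `summarize_feedback` helper are assumptions made for this example, not the schema the starter pack logs.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical feedback records; the real log schema may differ.
SAMPLE_FEEDBACK = [
    {"session_id": "s1", "score": 1.0},
    {"session_id": "s1", "score": 0.0},
    {"session_id": "s2", "score": 1.0},
]


def summarize_feedback(records):
    """Group feedback scores by session and report count and mean per session."""
    scores_by_session = defaultdict(list)
    for record in records:
        scores_by_session[record["session_id"]].append(record["score"])
    return {
        session_id: {"count": len(scores), "mean_score": mean(scores)}
        for session_id, scores in scores_by_session.items()
    }
```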

CICD and Terraform

A comprehensive UI Playground

| Description | Visualization |
|---|---|
| Experiment with your Generative AI application in a feature-rich playground, including chat curation, user feedback collection, multimodal input, and more! |  |

- Python >=3.10,<3.13

- Google Cloud SDK installed and configured

- Poetry for dependency management

- A development environment (e.g. your local IDE or, when running remotely on Google Cloud, Cloud Shell or Cloud Workstations).
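The prerequisites above can be checked before installing anything. The following is a minimal sketch and not part of the starter pack: the helper names `python_version_supported` and `missing_tools` are hypothetical, and it assumes the Google Cloud SDK and Poetry are exposed on `PATH` as `gcloud` and `poetry`.

```python
import shutil
import sys

# Supported interpreter range from the prerequisites list: >=3.10,<3.13.
MIN_VERSION = (3, 10)
MAX_VERSION_EXCLUSIVE = (3, 13)

# Assumption: the Google Cloud SDK and Poetry are installed under these names.
REQUIRED_TOOLS = ("gcloud", "poetry")


def python_version_supported(version=None):
    """Return True if the (major, minor) version lies in the supported range."""
    major_minor = tuple((version or sys.version_info)[:2])
    return MIN_VERSION <= major_minor < MAX_VERSION_EXCLUSIVE


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the tools that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    if not python_version_supported():
        print("Unsupported Python version:", sys.version.split()[0])
    for tool in missing_tools():
        print("Not found on PATH:", tool)
```

The script only reports whether the executables are present; it does not verify that the Google Cloud SDK is configured or authenticated.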

gsutil cp gs://e2e-gen-ai-app-starter-pack/app-starter-pack.zip . && unzip app-starter-pack.zip && cd app-starter-pack

Use the downloaded folder as a starting point for your own Generative AI application.

Install required packages using Poetry:

poetry install --with streamlit,jupyter

Set your default Google Cloud project and region:

export PROJECT_ID="YOUR_PROJECT_ID"

gcloud config set project $PROJECT_ID

gcloud auth application-default login

gcloud auth application-default set-quota-project $PROJECT_ID

| Command | Description |
|---|---|
| make playground | Start the backend and frontend for local playground execution |
| make test | Run unit and integration tests |
| make load_test | Execute load tests (see tests/load_test/README.md for details) |
| poetry run jupyter | Launch Jupyter notebook |

For full command options and usage, refer to the Makefile.

- Prototype Your Chain: Build your Generative AI application using different methodologies and frameworks. Use Vertex AI Evaluation for assessing the performance of your application and its chain of steps. See notebooks/getting_started.ipynb for a tutorial to get started building and evaluating your chain.

- Integrate into the App: Import your chain into the app. Edit the app/chain.py file to add your chain.

- Playground Testing: Explore your chain's functionality using the Streamlit playground. Take advantage of the comprehensive playground features, such as chat history management, user feedback mechanisms, support for various input types, and additional capabilities. You can run the playground locally with the make playground command.

- Deploy with CI/CD: Configure and trigger the CI/CD pipelines. Edit tests if needed. See the deployment section below for more details.

- Monitor in Production: Track performance and gather insights using Cloud Logging, Tracing, and the Looker Studio dashboard. Use the gathered data to iterate on your Generative AI application.
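The "Integrate into the App" step can be pictured with a small sketch. It assumes, for illustration only, that the server depends on a single module-level name `chain`, so swapping patterns means rebinding that one name. The functions `echo_chain`, `shouting_chain`, and `handle_request` are hypothetical stand-ins, not the contents of app/chain.py.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
Chain = Callable[[List[Message]], str]


def echo_chain(messages: List[Message]) -> str:
    """Placeholder chain: repeats the latest message back to the user."""
    return "You said: " + messages[-1]["content"]


def shouting_chain(messages: List[Message]) -> str:
    """Alternative placeholder chain: upper-cases the latest message."""
    return messages[-1]["content"].upper()


# The single line to change when switching to a different chain.
chain: Chain = echo_chain


def handle_request(messages: List[Message]) -> Message:
    """Stand-in for server code, which only refers to the name `chain`."""
    return {"role": "assistant", "content": chain(messages)}
```

Keeping the server coupled to one name rather than to a specific implementation is what lets a prototype from a notebook be dropped into the app without touching the serving code.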

You can test deployment to a dev environment using the following command:

gcloud run deploy genai-app-sample --source . --project YOUR_DEV_PROJECT_ID

The repository includes a Terraform configuration for setting up the dev Google Cloud project. See deployment/README.md for instructions.

Quick Start:

- Enable required APIs in the CI/CD project.

  gcloud config set project YOUR_CI_CD_PROJECT_ID

  gcloud services enable serviceusage.googleapis.com cloudresourcemanager.googleapis.com cloudbuild.googleapis.com secretmanager.googleapis.com

- Create a Git repository (GitHub, GitLab, Bitbucket).

- Connect to Cloud Build following Cloud Build Repository Setup.

- Configure deployment/terraform/vars/env.tfvars with your project details.

- Deploy infrastructure:

  cd deployment/terraform

  terraform init

  terraform apply --var-file vars/env.tfvars

- Perform a commit and push to the repository to see the CI/CD pipelines in action!

For detailed deployment instructions, refer to deployment/README.md.

Contributions are welcome! See the Contributing Guide.

We value your input! Your feedback helps us improve this starter pack and make it more useful for the community.

If you encounter any issues or have specific suggestions, please first consider raising an issue on our GitHub repository.

For other types of feedback, or if you'd like to share a positive experience or success story using this starter pack, we'd love to hear from you! You can reach out to us at [email protected].

Thank you for your contributions!

This repository is for demonstrative purposes only and is not an officially supported Google product.