Export darknet weights to ONNX #7002

Comments

@linghu8812 Hi, with some changes: #6987 (comment)

@AlexeyAB Hi, running this command exports the yolov4x-mish.onnx model. I have tested yolov4x-mish.onnx with my TensorRT inference code. The result is shown below; the scores of the output objects are consistent with the darknet outputs:

@linghu8812 Can you please share how you created the graphs you mentioned above?

@linghu8812 The generated ONNX model can't be used in the MNN framework on mobile devices, because it contains an unsupported ONNX operation.

@xiaochus It may be caused by the make conv node step. However, I am not familiar with MNN; if I know which operations MNN supports, I can modify it.

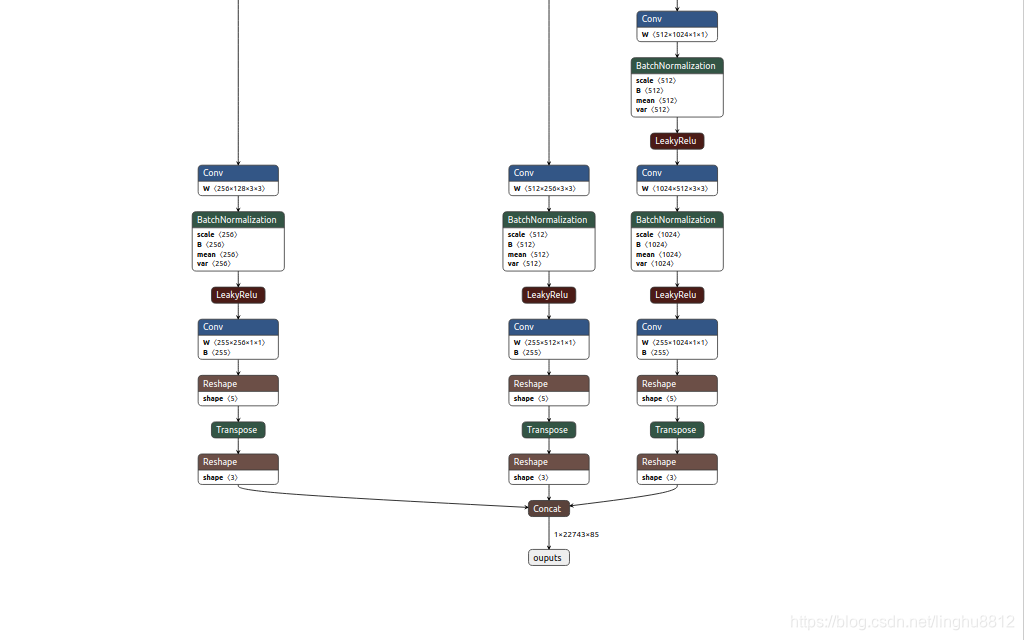

Hello everyone, here is code that converts darknet weights to an ONNX model. It currently supports converting the yolov4, yolov4-tiny, yolov3, yolov3-spp and yolov3-tiny darknet models. The exported model can then be converted to a TensorRT model with TensorRT's ONNX parser for inference acceleration. The repo also contains code for TensorRT inference.

Code file: https://github.com/linghu8812/tensorrt_inference/blob/master/project/Yolov4/export_onnx.py

After each yolo layer, transpose and concat layers are added to make post-processing of the model output easier. The structure of the transpose layer is shown in the following figure:
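For readers who want a feel for what a transpose followed by a concat does to the detection heads, here is a minimal pure-Python sketch. It is not taken from export_onnx.py: the channel layout (anchors × attributes per box, anchor-major), the (C, H, W) to (H, W, C) permutation, and the helper names `transpose_chw_to_hwc`, `flatten_head`, `concat_heads` and `make_head` are all assumptions made for illustration, and the actual script may use a different permutation or shape.

```python
def transpose_chw_to_hwc(tensor):
    """Reorder a (C, H, W) nested list into (H, W, C)."""
    channels, height, width = len(tensor), len(tensor[0]), len(tensor[0][0])
    return [
        [[tensor[c][y][x] for c in range(channels)] for x in range(width)]
        for y in range(height)
    ]


def flatten_head(tensor, attrs_per_box):
    """Turn one (C, H, W) head output into rows of attrs_per_box values.

    Assumption: C == anchors * attrs_per_box, with each anchor's
    attributes stored in consecutive channels.
    """
    if len(tensor) % attrs_per_box != 0:
        raise ValueError("channel count must be a multiple of attrs_per_box")
    rows = []
    for grid_row in transpose_chw_to_hwc(tensor):
        for cell in grid_row:
            # Split the channel vector of this grid cell into one row per anchor.
            for start in range(0, len(cell), attrs_per_box):
                rows.append(cell[start:start + attrs_per_box])
    return rows


def concat_heads(heads, attrs_per_box):
    """Concatenate the flattened rows of every head into one box list."""
    rows = []
    for head in heads:
        rows.extend(flatten_head(head, attrs_per_box))
    return rows


def make_head(channels, height, width):
    """Dummy head whose values record their own (c, y, x) position."""
    return [
        [[(c, y, x) for x in range(width)] for y in range(height)]
        for c in range(channels)
    ]


# Toy sizes (hypothetical): 2 anchors, 3 attributes per box.
ATTRS = 3
head_a = make_head(2 * ATTRS, 2, 2)  # 2x2 grid -> 8 boxes
head_b = make_head(2 * ATTRS, 1, 1)  # 1x1 grid -> 2 boxes
boxes = concat_heads([head_a, head_b], ATTRS)
print(len(boxes), len(boxes[0]))  # 10 3
```

With a layout like this, post-processing can loop over a single list of boxes instead of indexing each head's grid separately, which is presumably the convenience the added layers are meant to provide.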
